Tutorial: Ingest and query monitoring data in Azure Data Explorer

This tutorial will teach you how to ingest data from diagnostic and activity logs to an Azure Data Explorer cluster without writing code. With this simple ingestion method, you can quickly begin querying Azure Data Explorer for data analysis.

In this tutorial, you'll learn how to:

- Create tables and ingestion mapping in an Azure Data Explorer database.

- Format the ingested data by using an update policy.

- Create an event hub and connect it to Azure Data Explorer.

- Stream data to an event hub from Azure Monitor diagnostic metrics and logs and activity logs.

- Query the ingested data by using Azure Data Explorer.

Note

Create all resources in the same Azure location or region.

Prerequisites

- An Azure subscription. Create a free Azure account.

- An Azure Data Explorer cluster and database. Create a cluster and database. In this tutorial, the database name is TestDatabase.

Azure Monitor data provider: diagnostic metrics and logs and activity logs

View and understand the data provided by the Azure Monitor diagnostic metrics and logs and activity logs below. You'll create an ingestion pipeline based on these data schemas. Note that each event in a log has an array of records. This array of records will be split later in the tutorial.

Examples of diagnostic metrics and logs and activity logs

Azure diagnostic metrics and logs and activity logs are emitted by an Azure service and provide data about the operation of that service.

Diagnostic metrics example

Diagnostic metrics are aggregated with a time grain of 1 minute. Following is an example of an Azure Data Explorer metric-event schema on query duration:

{

"records": [

{

"count": 14,

"total": 0,

"minimum": 0,

"maximum": 0,

"average": 0,

"resourceId": "/SUBSCRIPTIONS/<subscriptionID>/RESOURCEGROUPS/<resource-group>/PROVIDERS/MICROSOFT.KUSTO/CLUSTERS/<cluster-name>",

"time": "2018-12-20T17:00:00.0000000Z",

"metricName": "QueryDuration",

"timeGrain": "PT1M"

},

{

"count": 12,

"total": 0,

"minimum": 0,

"maximum": 0,

"average": 0,

"resourceId": "/SUBSCRIPTIONS/<subscriptionID>/RESOURCEGROUPS/<resource-group>/PROVIDERS/MICROSOFT.KUSTO/CLUSTERS/<cluster-name>",

"time": "2018-12-21T17:00:00.0000000Z",

"metricName": "QueryDuration",

"timeGrain": "PT1M"

}

]

}

Set up an ingestion pipeline in Azure Data Explorer

Setting up an Azure Data Explorer pipeline involves several steps, such as table creation and data ingestion. You can also manipulate, map, and update the data.

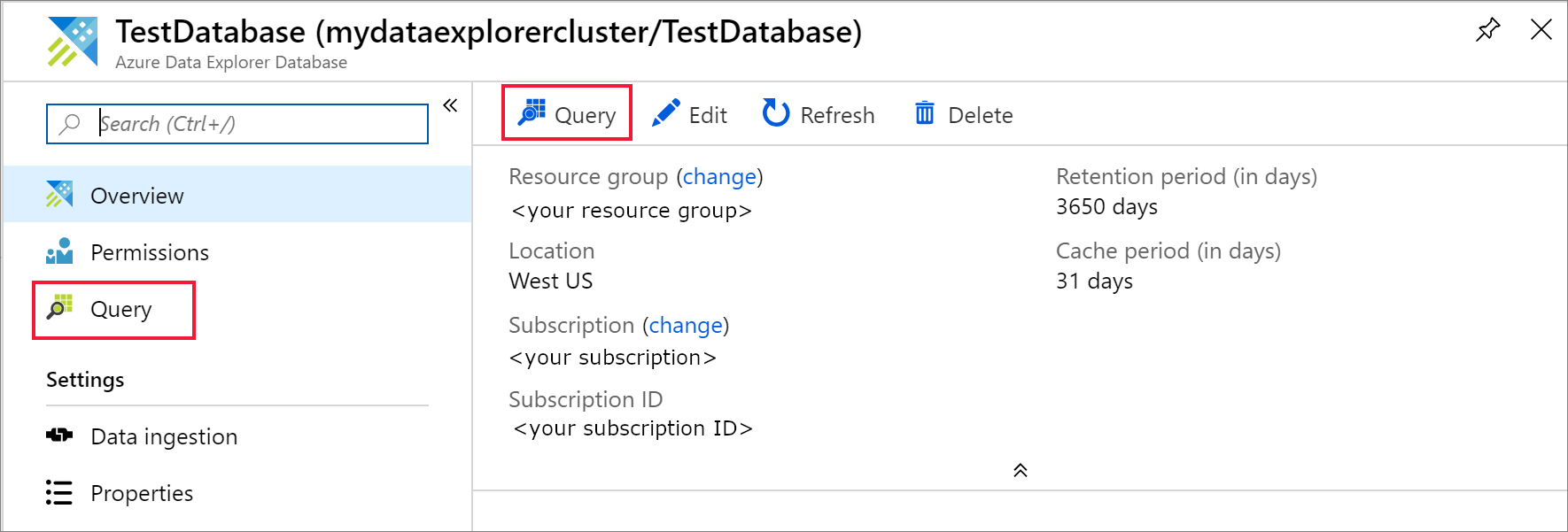

Connect to the Azure Data Explorer web UI

In your Azure Data Explorer TestDatabase database, select Query to open the Azure Data Explorer web UI.

Create the target tables

The structure of the Azure Monitor logs isn't tabular. You'll manipulate the data and expand each event to one or more records. The raw data will be ingested to an intermediate table named ActivityLogsRawRecords for activity logs and DiagnosticRawRecords for diagnostic metrics and logs. At that time, the data will be manipulated and expanded. Using an update policy, the expanded data will then be ingested into the ActivityLogs table for activity logs, DiagnosticMetrics for diagnostic metrics and DiagnosticLogs for diagnostic logs. This means that you'll need to create two separate tables for ingesting activity logs and three separate tables for ingesting diagnostic metrics and logs.

Use the Azure Data Explorer web UI to create the target tables in the Azure Data Explorer database.

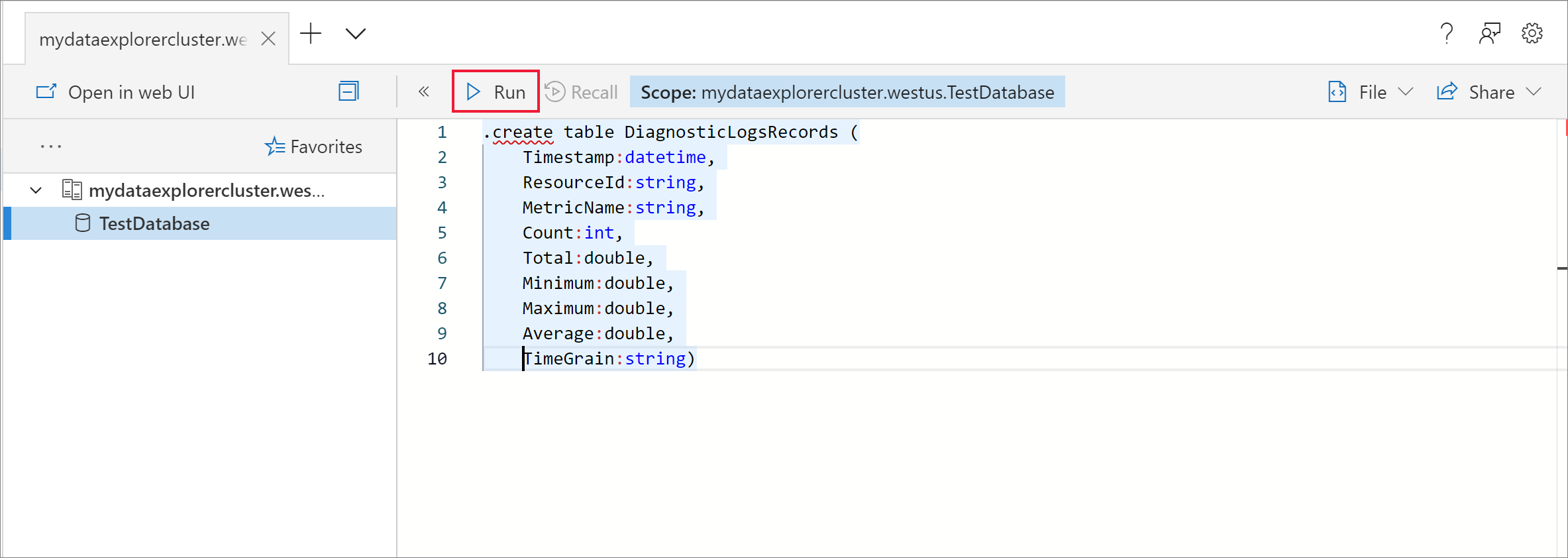

Create tables for the diagnostic metrics

In the TestDatabase database, create a table named DiagnosticMetrics to store the diagnostic metrics records. Use the following

.create tablemanagement command:.create table DiagnosticMetrics (Timestamp:datetime, ResourceId:string, MetricName:string, Count:int, Total:double, Minimum:double, Maximum:double, Average:double, TimeGrain:string)Select Run to create the table.

Create the intermediate data table named DiagnosticRawRecords in the TestDatabase database for data manipulation using the following query. Select Run to create the table.

.create table DiagnosticRawRecords (Records:dynamic)Set zero retention policy for the intermediate table:

.alter-merge table DiagnosticRawRecords policy retention softdelete = 0d

Create table mappings

Because the data format is json, data mapping is required. The json mapping maps each json path to a table column name. JSON paths that include special characters should be escaped as ['Property Name']. For more information, see JSONPath syntax.

Map diagnostic metrics and logs to the table

To map the diagnostic metric and log data to the table, use the following query:

.create table DiagnosticRawRecords ingestion json mapping 'DiagnosticRawRecordsMapping' '[{"column":"Records","Properties":{"path":"$.records"}}]'

Create the update policy for metric and log data

Create data update policy for diagnostics metrics

Create a function that expands the collection of diagnostic metric records so that each value in the collection receives a separate row. Use the

mv-expandoperator:.create function DiagnosticMetricsExpand() { DiagnosticRawRecords | mv-expand events = Records | where isnotempty(events.metricName) | project Timestamp = todatetime(events['time']), ResourceId = tostring(events.resourceId), MetricName = tostring(events.metricName), Count = toint(events['count']), Total = todouble(events.total), Minimum = todouble(events.minimum), Maximum = todouble(events.maximum), Average = todouble(events.average), TimeGrain = tostring(events.timeGrain) }Add the update policy to the target table. This policy will automatically run the query on any newly ingested data in the DiagnosticRawRecords intermediate data table and ingest its results into the DiagnosticMetrics table:

.alter table DiagnosticMetrics policy update @'[{"Source": "DiagnosticRawRecords", "Query": "DiagnosticMetricsExpand()", "IsEnabled": "True", "IsTransactional": true}]'

Create an Azure Event Hubs namespace

Azure diagnostic settings enable exporting metrics and logs to a storage account or to an event hub. In this tutorial, we'll route the metrics and logs via an event hub. You'll create an event hub namespace and an event hub for the diagnostic metrics and logs in the following steps. Azure Monitor will create the event hub insights-operational-logs for the activity logs.

Create an event hub by using an Azure Resource Manager template in the Azure portal. To follow the rest of the steps in this article, right-click the Deploy to Azure button, and then select Open in new window. The Deploy to Azure button takes you to the Azure portal.

Create an event hubs namespace and an event hub for the diagnostic logs. Learn how to create an event hubs namespace.

Fill out the form with the following information. For any settings not listed in the following table, use the default values.

Setting Suggested value Description Subscription Your subscription Select the Azure subscription that you want to use for your event hub. Resource group test-resource-group Create a new resource group. Location Select the region that best meets your needs. Create the event hub namespace in the same location as other resources. Namespace name AzureMonitoringData Choose a unique name that identifies your namespace. Event hub name DiagnosticData The event hub sits under the namespace, which provides a unique scoping container. Consumer group name adxpipeline Create a consumer group name. Consumer groups enable multiple consuming applications to each have a separate view of the event stream.

Connect Azure Monitor metrics and logs to your event hub

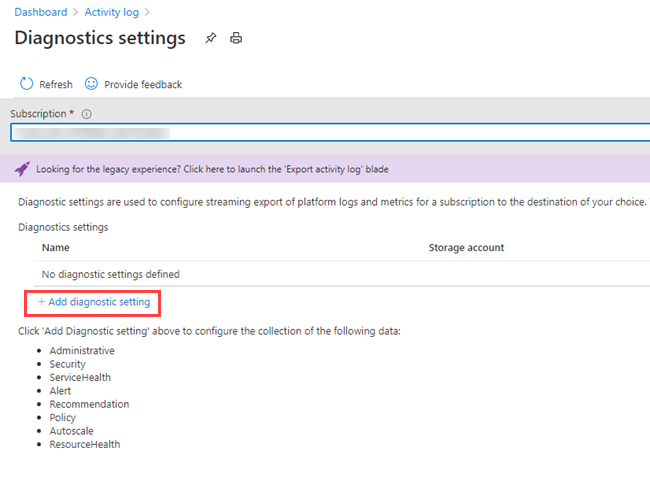

Now you need to connect your diagnostic metrics and logs and your activity logs to the event hub.

Connect diagnostic metrics and logs to your event hub

Select a resource from which to export metrics. Several resource types support exporting diagnostic data, including event hubs namespace, Azure Key Vault, Azure IoT Hub, and Azure Data Explorer clusters. In this tutorial, we'll use an Azure Data Explorer cluster as our resource, we'll review query performance metrics and ingestion results logs.

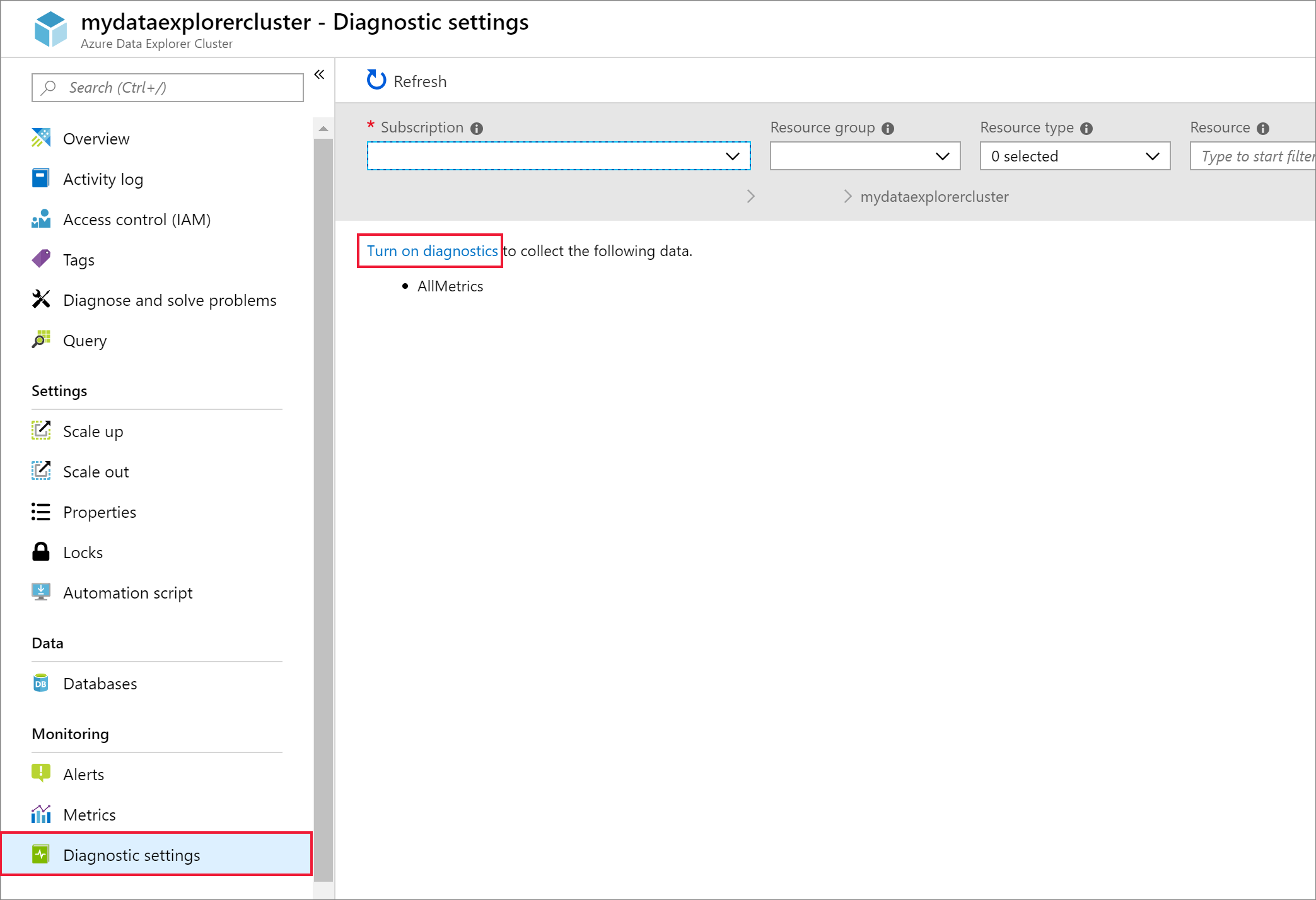

Select your Kusto cluster in the Azure portal.

Select Diagnostic settings, and then select the Turn on diagnostics link.

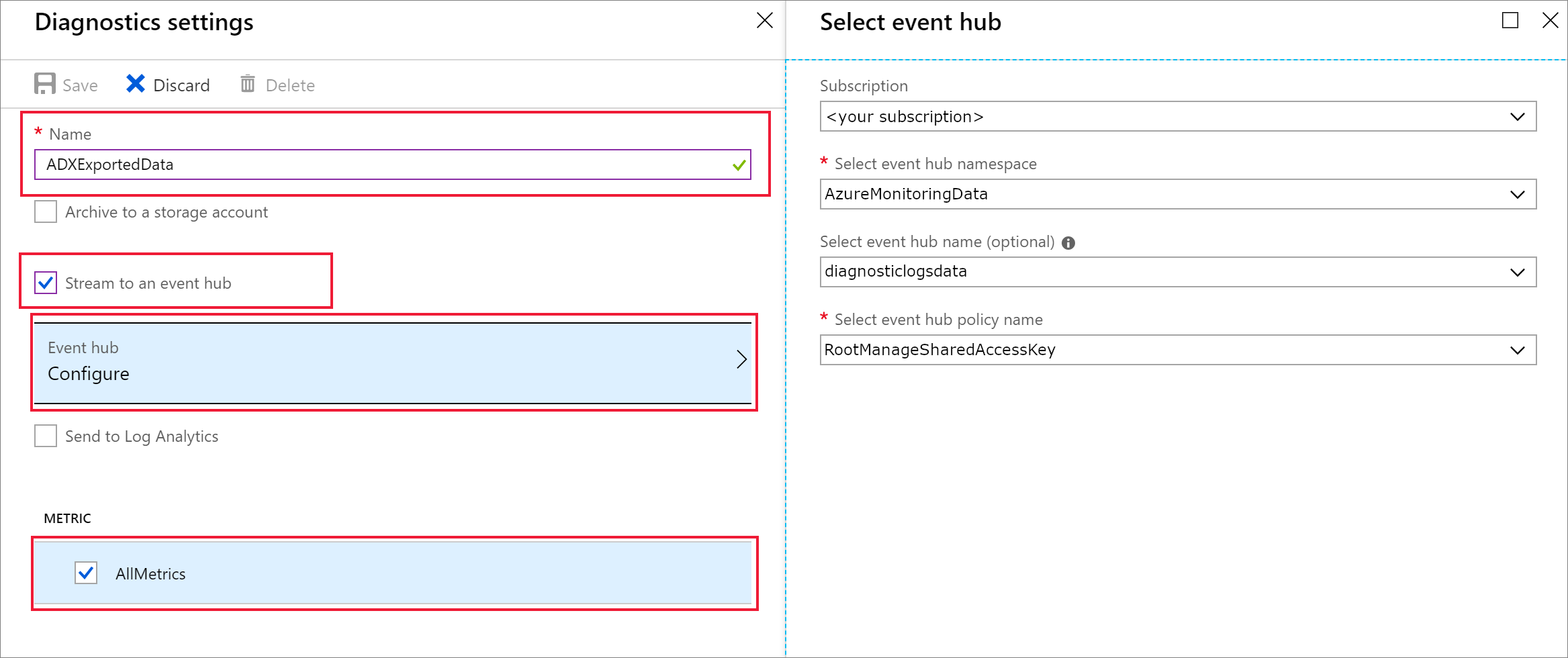

The Diagnostics settings pane opens. Take the following steps:

Give your diagnostics log data the name ADXExportedData.

Under LOG, select both SucceededIngestion and FailedIngestion check boxes.

Under METRIC, select the Query performance check box.

Select the Stream to an event hub check box.

Select Configure.

In the Select event hub pane, configure how to export data from diagnostic logs to the event hub you created:

- In the Select event hub namespace list, select AzureMonitoringData.

- In the Select event hub name list, select DiagnosticData.

- In the Select event hub policy name list, select RootManagerSharedAccessKey.

- Select OK.

Select Save.

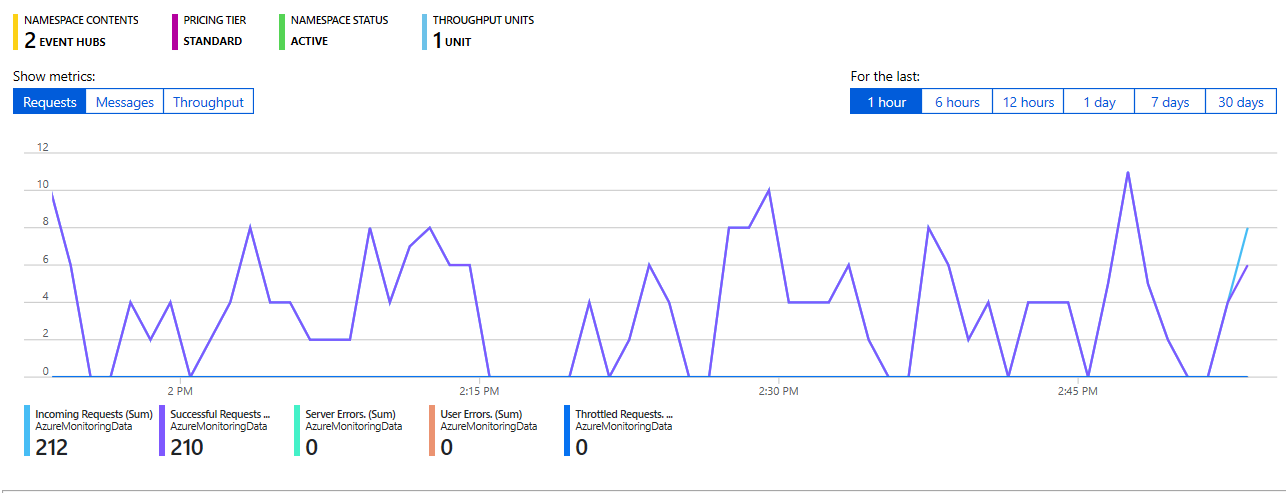

See data flowing to your event hubs

Wait a few minutes until the connection is defined, and the activity-log export to the event hub is finished. Go to your event hubs namespace to see the event hubs you created.

See data flowing to your event hub:

Connect an event hub to Azure Data Explorer

Now you need to create the data connections for your diagnostic metrics and logs and activity logs.

Create the data connection for diagnostic metrics and logs and activity logs

In your Azure Data Explorer cluster named kustodocs, select Databases in the left menu.

In the Databases window, select your TestDatabase database.

In the left menu, select Data ingestion.

In the Data ingestion window, select + Add Data Connection.

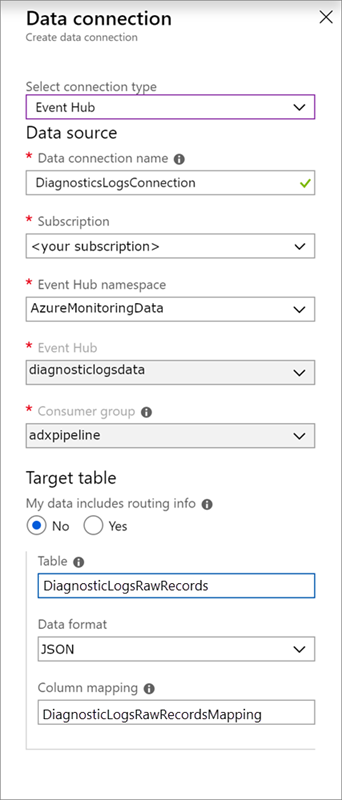

In the Data connection window, enter the following information:

Use the following settings in the Data Connection window:

Data source:

Setting Suggested value Field description Data connection name DiagnosticsLogsConnection The name of the connection you want to create in Azure Data Explorer. Event hub namespace AzureMonitoringData The name you chose earlier that identifies your namespace. Event hub DiagnosticData The event hub you created. Consumer group adxpipeline The consumer group defined in the event hub you created. Target table:

There are two options for routing: static and dynamic. For this tutorial, you'll use static routing (the default), where you specify the table name, the data format, and the mapping. Leave My data includes routing info unselected.

Setting Suggested value Field description Table DiagnosticRawRecords The table you created in the TestDatabase database. Data format JSON The format used in the table. Column mapping DiagnosticRawRecordsMapping The mapping you created in the TestDatabase database, which maps incoming JSON data to the column names and data types of the DiagnosticRawRecords table. Select Create.

Query the new tables

You now have a pipeline with data flowing. Ingestion via the cluster takes 5 minutes by default, so allow the data to flow for a few minutes before beginning to query.

Query the diagnostic metrics table

The following query analyzes query duration data from diagnostic metric records in Azure Data Explorer:

DiagnosticMetrics

| where Timestamp > ago(15m) and MetricName == 'QueryDuration'

| summarize avg(Average)

Query results:

| avg_Average |

|---|

| 00:06.156 |