Import DAGs by using Azure Blob Storage

Note

This feature is in public preview. Workflow Orchestration Manager is powered by Apache Airflow.

This article shows you, step by step, how to import directed acyclic graphs (DAGs) into Workflow Orchestration Manager by using Azure Blob Storage.

Prerequisites

- Azure subscription: If you don't have an Azure subscription, create a free Azure account before you begin.

- Azure Data Factory: Create or select an existing Data Factory instance in a region where the Workflow Orchestration Manager preview is supported.

- Azure Storage account: If you don't have a storage account, see Create an Azure Storage account for steps to create one. Ensure the storage account allows access only from selected networks.

Blob Storage behind virtual networks isn't supported during the preview. Azure Key Vault configuration in storageLinkedServices isn't supported to import DAGs.

Import DAGs

Copy the content of either the Sample Apache Airflow v2.x DAG or the Sample Apache Airflow v1.10 DAG, depending on the version of the Airflow environment that you set up. Paste the content into a new file called tutorial.py.
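If the linked samples aren't at hand, the following sketch shows roughly what a small Airflow 2.x DAG file can look like. It is an illustration, not the official sample: the task names, schedule, and the helper `write_tutorial` are assumptions made for this example. The script writes the DAG source to tutorial.py and checks only that it parses as Python, so it runs even on a machine where Airflow isn't installed.

```python
import ast
from pathlib import Path

# Illustrative Airflow 2.x DAG source (an assumption, not the official sample).
DAG_SOURCE = '''\
from datetime import datetime, timedelta

from airflow import DAG
from airflow.operators.bash import BashOperator

with DAG(
    dag_id="tutorial",
    start_date=datetime(2024, 1, 1),
    schedule_interval=timedelta(days=1),
    catchup=False,
) as dag:
    print_date = BashOperator(task_id="print_date", bash_command="date")
    sleep = BashOperator(task_id="sleep", bash_command="sleep 5")

    # Run print_date first, then sleep.
    print_date >> sleep
'''


def write_tutorial(dags_dir: Path) -> Path:
    """Write tutorial.py into dags_dir after confirming the source is valid Python."""
    ast.parse(DAG_SOURCE)  # raises SyntaxError if the source is malformed
    dags_dir.mkdir(parents=True, exist_ok=True)
    target = dags_dir / "tutorial.py"
    target.write_text(DAG_SOURCE, encoding="utf-8")
    return target


if __name__ == "__main__":
    print(write_tutorial(Path("dags")))
```

The syntax check doesn't prove that the DAG loads in Airflow; it only catches malformed Python before the file is uploaded.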

Upload the tutorial.py file to Blob Storage. For more information, see Upload a file into a blob.

Note

To import files into the Airflow environment, you need to select a directory path in the Blob Storage account that contains folders named dags and plugins. The plugins folder isn't mandatory. Alternatively, you can create a container named dags and upload all Airflow files to it.

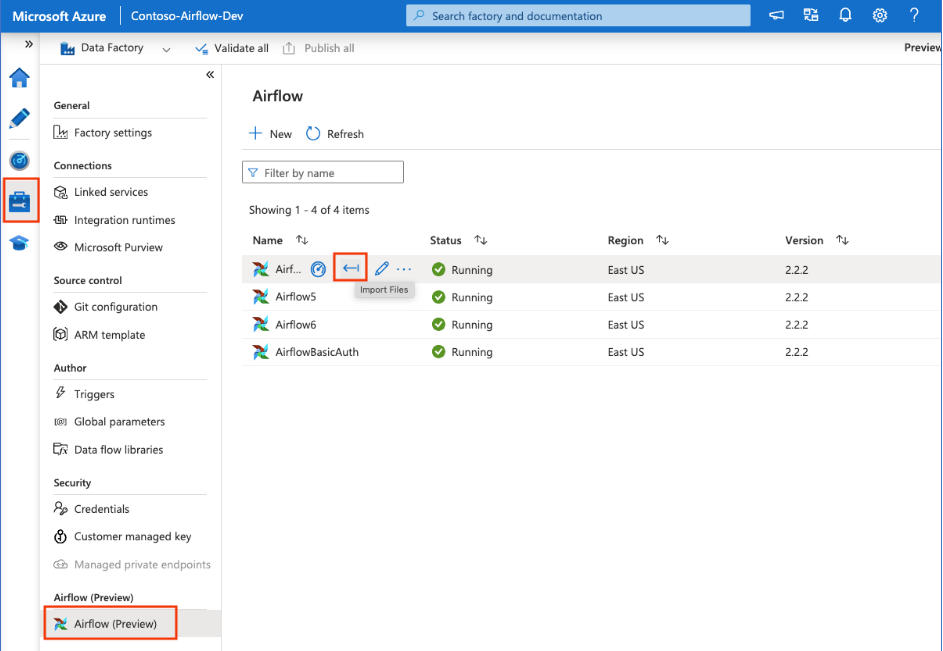
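The upload can also be scripted. The following is a minimal sketch, assuming the azure-storage-blob package is installed and that you have a connection string for the storage account. The function names `plan_uploads` and `upload`, the container name, and the optional `prefix` folder are hypothetical choices for this example. It keeps the dags and plugins folder names in the blob paths so that the uploaded layout matches what the import step expects.

```python
from pathlib import Path
from typing import Iterator, Tuple

IMPORT_FOLDERS = ("dags", "plugins")  # folder names the import step looks for


def plan_uploads(local_root: Path, prefix: str = "") -> Iterator[Tuple[Path, str]]:
    """Yield (local file, blob name) pairs for everything under dags/ and plugins/."""
    for folder in IMPORT_FOLDERS:
        base = local_root / folder
        if not base.is_dir():
            continue  # plugins is optional, so a missing folder is skipped
        for path in sorted(base.rglob("*")):
            if path.is_file():
                relative = path.relative_to(local_root).as_posix()
                yield path, f"{prefix.strip('/')}/{relative}".lstrip("/")


def upload(local_root: Path, connection_string: str, container: str, prefix: str = "") -> None:
    """Upload the planned files (requires the azure-storage-blob package)."""
    # Imported here so that plan_uploads works without the Azure SDK installed.
    from azure.storage.blob import BlobServiceClient

    service = BlobServiceClient.from_connection_string(connection_string)
    client = service.get_container_client(container)
    for path, blob_name in plan_uploads(local_root, prefix):
        with path.open("rb") as data:
            client.upload_blob(name=blob_name, data=data, overwrite=True)
```

For example, `upload(Path("."), connection_string, "mycontainer", prefix="airflow")` would place a local dags/tutorial.py at airflow/dags/tutorial.py in the hypothetical container mycontainer. Calling `plan_uploads` on its own is a way to preview the blob names before anything is sent.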

Under the Manage hub, select Apache Airflow. Then hover over the previously created Airflow environment and select Import files to import all DAGs and dependencies into the Airflow environment.

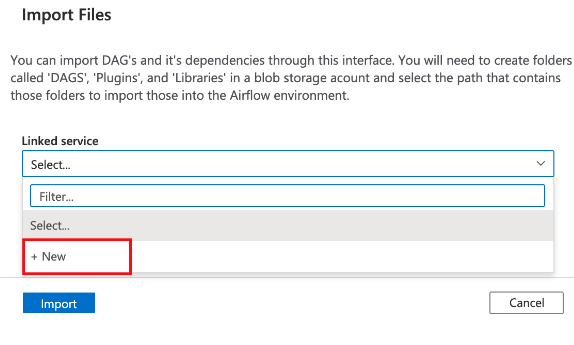

Create a new linked service to the accessible storage account mentioned in the "Prerequisites" section. You can also use an existing one if you already have your own DAGs.

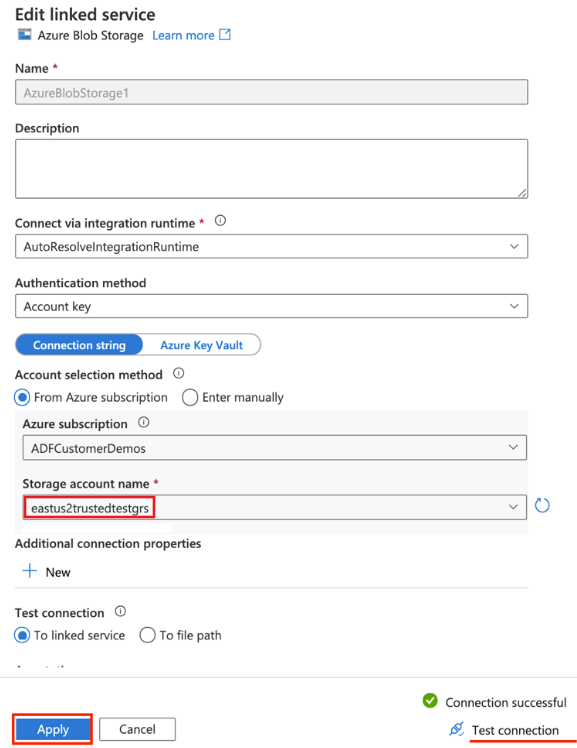

Use the storage account where you uploaded the DAG. (Check the "Prerequisites" section.) Test the connection and then select Create.

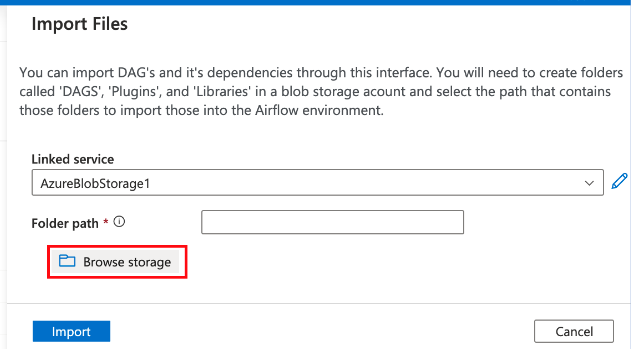

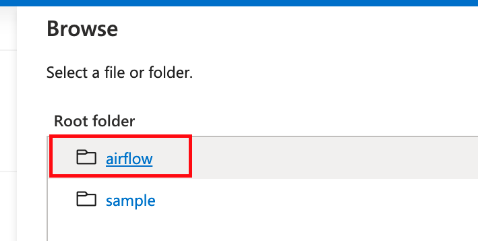

Browse and select airflow if you're using the sample SAS URL. You can also select the folder that contains the dags folder with DAG files.

Note

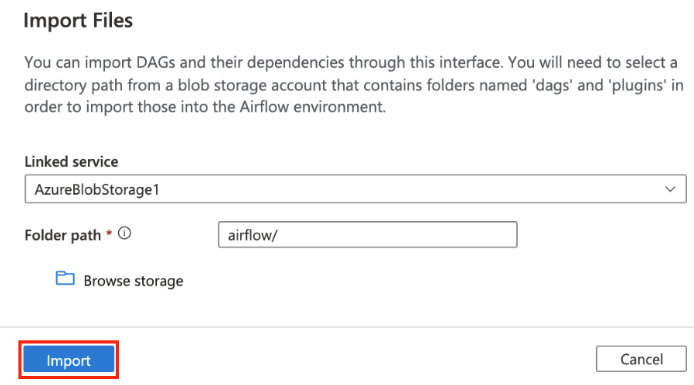

You can import DAGs and their dependencies through this interface. You need to select a directory path from a Blob Storage account that contains folders named dags and plugins to import those into the Airflow environment. Plugins aren't mandatory.
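Before you select a folder, it can help to confirm that it has the expected layout. The following sketch is a heuristic, not part of the product: the function `check_import_folder` is hypothetical, and it assumes you already have a list of blob names from the container (for example, from a storage listing). It reports a problem when the selected folder has no Python files under dags, and it treats plugins as optional. It doesn't cover the alternative layout in which the container itself is named dags.

```python
from typing import Iterable, List


def check_import_folder(blob_names: Iterable[str], selected_folder: str) -> List[str]:
    """Return a list of problems with the selected folder; empty means it looks importable."""
    prefix = selected_folder.strip("/")
    prefix = f"{prefix}/" if prefix else ""

    # Keep only blobs inside the selected folder, with paths relative to it.
    inside = [name[len(prefix):] for name in blob_names if name.startswith(prefix)]

    problems = []
    dag_files = [n for n in inside if n.startswith("dags/") and n.endswith(".py")]
    if not dag_files:
        problems.append("no .py files found under dags/")
    return problems
```

With blob names such as airflow/dags/tutorial.py, calling `check_import_folder(names, "airflow")` returns an empty list, while a folder without a dags subfolder returns one problem message.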

Select Import to import files.

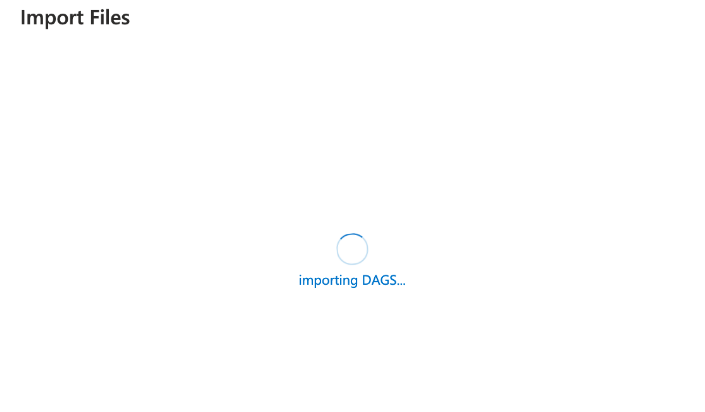

Importing DAGs could take a couple of minutes during the preview. You can use the notification center (bell icon in the Data Factory UI) to track import status updates.
