הערה

גישה לעמוד זה דורשת אישור. אתה יכול לנסות להיכנס או לשנות תיקיות.

גישה לעמוד זה דורשת אישור. אתה יכול לנסות לשנות מדריכים.

APPLIES TO:  Azure Data Factory

Azure Data Factory  Azure Synapse Analytics

Azure Synapse Analytics

Tip

Try out Data Factory in Microsoft Fabric, an all-in-one analytics solution for enterprises. Microsoft Fabric covers everything from data movement to data science, real-time analytics, business intelligence, and reporting. Learn how to start a new trial for free!

You use data transformation activities in a Data Factory or Synapse pipeline to transform and process raw data into predictions and insights. The Script activity is one of the transformation activities that pipelines support. This article builds on the transform data article, which presents a general overview of data transformation and the supported transformation activities.

Using the script activity, you can execute common operations with Data Manipulation Language (DML), and Data Definition Language (DDL). DML statements like INSERT, UPDATE, DELETE and SELECT let users insert, modify, delete, and retrieve data in the database. DDL statements like CREATE, ALTER and DROP allow a database manager to create, modify, and remove database objects such as tables, indexes, and users.

You can use the Script activity to invoke a SQL script in one of the following data stores in your enterprise or on an Azure virtual machine (VM):

- Azure Database for PostgreSQL (Version 2.0)

- Azure SQL Database

- Azure Synapse Analytics

- SQL Server Database. If you're using SQL Server, install Self-hosted integration runtime on the same machine that hosts the database or on a separate machine that has access to the database. Self-Hosted integration runtime is a component that connects data sources on-premises/on Azure VM with cloud services in a secure and managed way. See the Self-hosted integration runtime article for details.

- Oracle

- Snowflake

The script can contain either a single SQL statement or multiple SQL statements that run sequentially. You can use the Script task for the following purposes:

- Truncate a table in preparation for inserting data.

- Create, alter, and drop database objects such as tables and views.

- Re-create fact and dimension tables before loading data into them.

- Run stored procedures. If the SQL statement invokes a stored procedure that returns results from a temporary table, use the WITH RESULT SETS option to define metadata for the result set.

- Save the rowset returned from a query as activity output for downstream consumption.

Syntax details

Here's the JSON format for defining a Script activity:

{

"name": "<activity name>",

"type": "Script",

"linkedServiceName": {

"referenceName": "<name>",

"type": "LinkedServiceReference"

},

"typeProperties": {

"scripts" : [

{

"text": "<Script Block>",

"type": "<Query> or <NonQuery>",

"parameters":[

{

"name": "<name>",

"value": "<value>",

"type": "<type>",

"direction": "<Input> or <Output> or <InputOutput>",

"size": 256

},

...

]

},

...

],

...

]

},

"scriptBlockExecutionTimeout": "<time>",

"logSettings": {

"logDestination": "<ActivityOutput> or <ExternalStore>",

"logLocationSettings":{

"linkedServiceName":{

"referenceName": "<name>",

"type": "<LinkedServiceReference>"

},

"path": "<folder path>"

}

}

}

}

The following table describes these JSON properties:

| Property name | Description | Required |

|---|---|---|

| name | The name of the activity. | Yes |

| type | The type of the activity, set to "Script". | Yes |

| typeProperties | Specify properties to configure the Script Activity. | Yes |

| linkedServiceName | The target database the script runs on. It should be a reference to a linked service. | Yes |

| scripts | An array of objects to represent the script. | No |

| scripts.text | The plain text of a block of queries. | No |

| scripts.type | The type of the block of queries. It can be Query or NonQuery. Default: Query. | No |

| scripts.parameter | The array of parameters of the script. | No |

| scripts.parameter.name | The name of the parameter. | No |

| scripts.parameter.value | The value of the parameter. | No |

| scripts.parameter.type | The data type of the parameter. The type is logical type and follows type mapping of each connector. | No |

| scripts.parameter.direction | The direction of the parameter. It can be Input, Output, InputOutput. The value is ignored if the direction is Output. ReturnValue type isn't supported. Set the return value of SP to an output parameter to retrieve it. | No |

| scripts.parameter.size | The max size of the parameter. Only applies to Output/InputOutput direction parameter of type string/byte[]. | No |

| scriptBlockExecutionTimeout | The wait time for the script block execution operation to complete before it times out. | No |

| logSettings | The settings to store the output logs. If not specified, script log is disabled. | No |

| logSettings.logDestination | The destination of log output. It can be ActivityOutput or ExternalStore. Default: ActivityOutput. | No |

| logSettings.logLocationSettings | The settings of the target location if logDestination is ExternalStore. | No |

| logSettings.logLocationSettings.linkedServiceName | The linked service of the target location. Only blob storage is supported. | No |

| logSettings.logLocationSettings.path | The folder path under which to store logs. | No |

Activity output

Sample output:

{

"resultSetCount": 2,

"resultSets": [

{

"rowCount": 10,

"rows":[

{

"<columnName1>": "<value1>",

"<columnName2>": "<value2>",

...

}

]

},

...

],

"recordsAffected": 123,

"outputParameters":{

"<parameterName1>": "<value1>",

"<parameterName2>": "<value2>"

},

"outputLogs": "<logs>",

"outputLogsLocation": "<folder path>",

"outputTruncated": true,

...

}

| Property name | Description | Condition |

|---|---|---|

| resultSetCount | The count of result sets returned by the script. | Always |

| resultSets | The array that contains all the result sets. | Always |

| resultSets.rowCount | Total rows in the result set. | Always |

| resultSets.rows | The array of rows in the result set. | Always |

| recordsAffected | The row count of affected rows by the script. | If scriptType is NonQuery |

| outputParameters | The output parameters of the script. | If parameter type is Output or InputOutput. |

| outputLogs | The logs written by the script, for example, print statement. | If connector supports log statement and enableScriptLogs is true and logLocationSettings isn't provided. |

| outputLogsPath | The full path of the log file. | If enableScriptLogs is true and logLocationSettings is provided. |

| outputTruncated | Indicator of whether the output exceeds the limits and get truncated. | If output exceeds the limits. |

Note

- The output is collected every time a script block is executed. The final output is the merged result of all script block outputs. The output parameter with same name in different script block will get overwritten.

- Since the output has size / rows limitation, the output will be truncated in the following order: logs -> parameters -> rows. This applies to a single script block, which means the output rows of next script block won’t evict previous logs.

- Any error caused by log won’t fail the activity.

- For consuming activity output resultSets in down stream activity, refer to the Lookup activity result documentation.

- Use outputLogs when you're using 'PRINT' statements for logging purpose. If query returns resultSets, it will be available in the activity output and will be limited to 5000 rows/ 4MB size limit.

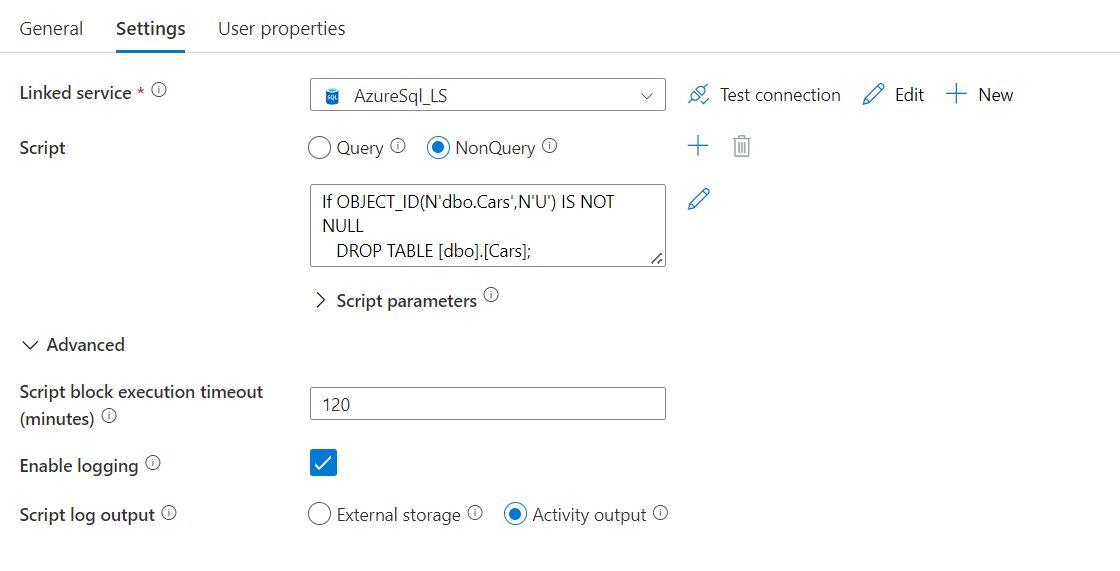

Configure the Script activity using UI

Inline script

Inline scripts integrate well with Pipeline CI/CD since the script is stored as part of the pipeline metadata.

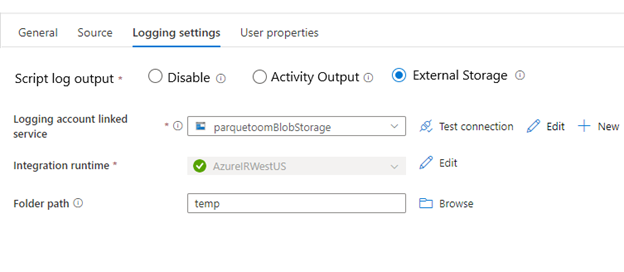

Logging

Logging options:

- Disable - No execution output is logged.

- Activity output - The script execution output is appended to the activity output. Downstream activities can then consume it. The output size is limited to 4 MB.

- External storage - Persists output to storage. Use this option if the output size is greater than 2 MB or you would like to explicitly persist the output on your storage account.

Note

Billing - The Script activity will be billed as Pipeline activities.

Related content

See the following articles that explain how to transform data in other ways: