How to access on-premises data sources in Data Factory for Microsoft Fabric

Data Factory for Microsoft Fabric is a powerful cloud-based data integration service that allows you to create, schedule, and manage workflows for various data sources. In scenarios where your data sources are located on-premises, Microsoft provides the On-Premises Data Gateway to securely bridge the gap between your on-premises environment and the cloud. This document guides you through the process of accessing on-premises data sources within Data Factory for Microsoft Fabric using the On-Premises Data Gateway.

Create an on-premises data gateway

An on-premises data gateway is a software application designed to be installed within a local network environment. You can install the gateway directly on your local machine. For detailed instructions on how to download and install the on-premises data gateway, refer to Install an on-premises data gateway.

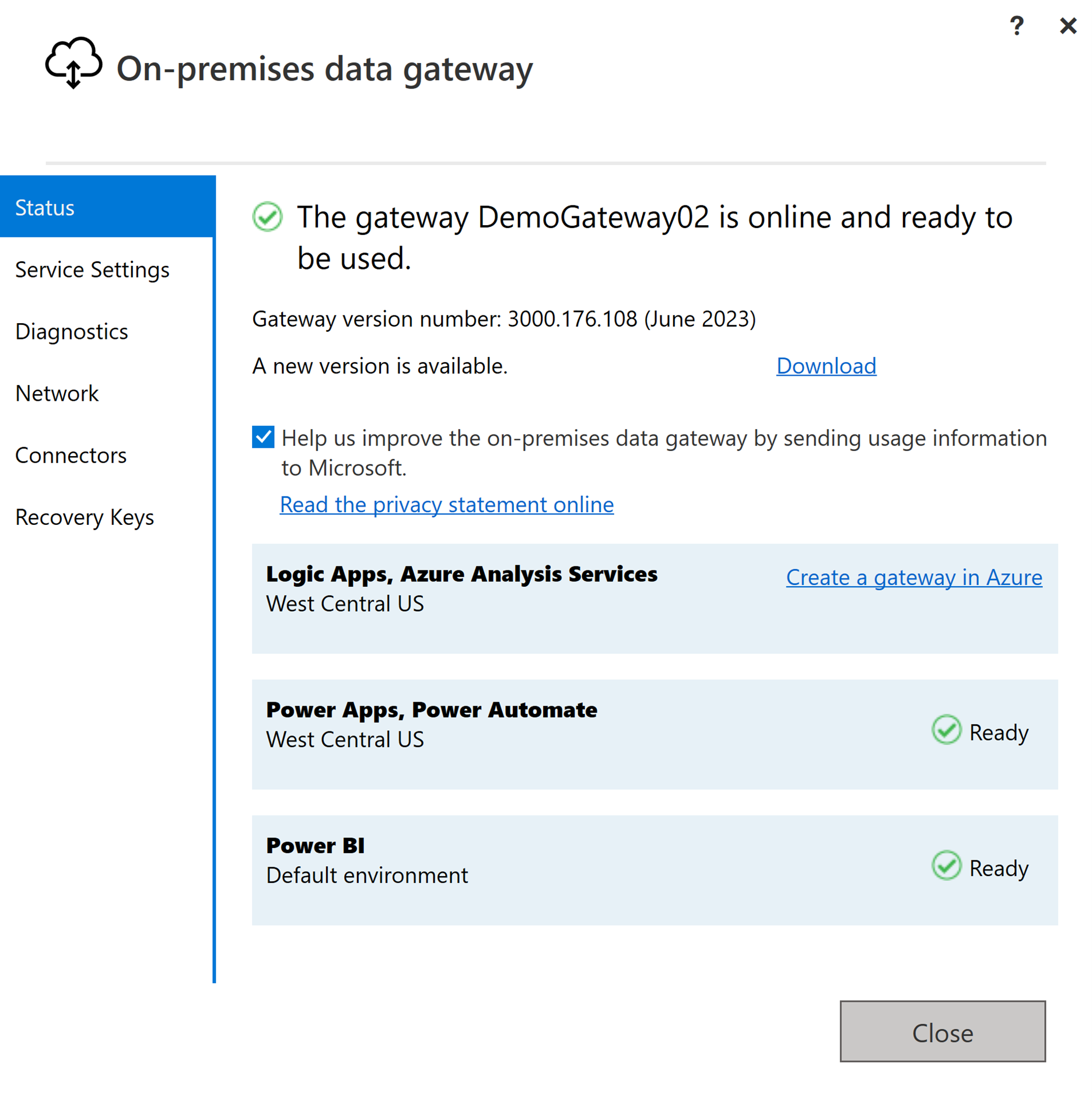

Sign in with your user account to access the on-premises data gateway. After you sign in, the gateway is ready to use.

Note

On-premises data gateway version 3000.214.2 or later is required to support Fabric pipelines.
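If you track gateway versions in a script or inventory, the version requirement can be checked numerically. The following is a minimal sketch, not part of the gateway tooling: the function names `parse_version` and `supports_fabric_pipelines` are hypothetical, and it assumes the installed version is available as a dotted string (for example, copied from the gateway app).

```python
# Minimum gateway version stated in the note above.
MIN_GATEWAY_VERSION = "3000.214.2"


def parse_version(version: str) -> tuple[int, ...]:
    """Split a dotted version string into integers so parts compare numerically."""
    return tuple(int(part) for part in version.strip().split("."))


def supports_fabric_pipelines(installed: str, minimum: str = MIN_GATEWAY_VERSION) -> bool:
    """Return True if the installed version is at least the minimum (hypothetical helper)."""
    return parse_version(installed) >= parse_version(minimum)


if __name__ == "__main__":
    # Example values are illustrative only.
    for candidate in ("3000.214.2", "3000.99.9", "3000.230.14"):
        print(candidate, supports_fabric_pipelines(candidate))
```

Comparing integer tuples rather than raw strings matters here: as plain text, "3000.99.9" would sort after "3000.214.2", even though 99 is lower than 214.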

Create a connection for your on-premises data source

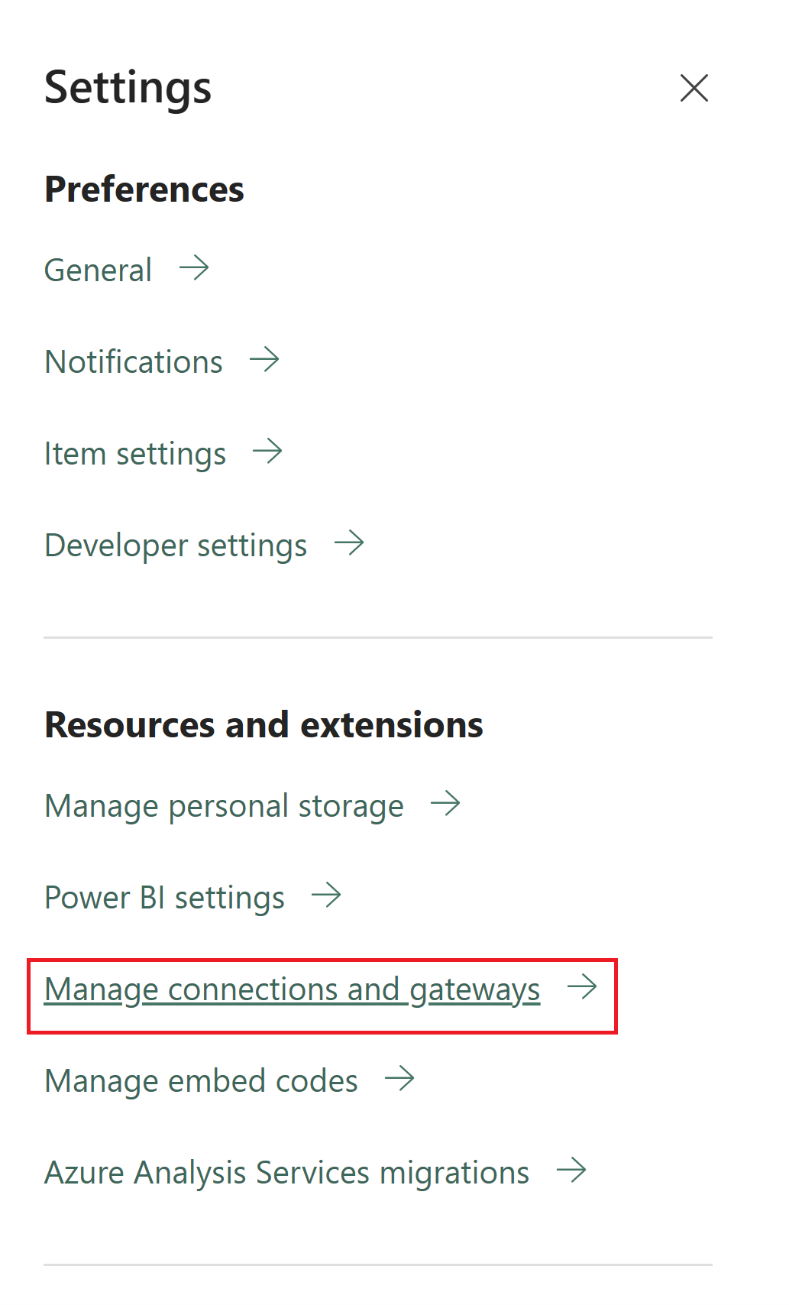

Navigate to the admin portal and select the settings button (an icon that looks like a gear) at the top right of the page. Then choose Manage connections and gateways from the dropdown menu that appears.

On the New connection dialog that appears, select On-premises and then provide your gateway cluster, along with the associated resource type and relevant information.

Available connection types supported for on-premises connections include:

- Entra ID

- Adobe Analytics

- Analysis Services

- Azure Blob Storage

- Azure Data Lake Storage Gen2

- Azure Table Storage

- Essbase

- File

- Folder

- Google Analytics

- IBM DB2

- MySQL

- OData

- ODBC

- OLE DB

- Oracle

- PostgreSQL

- Salesforce

- SAP Business Warehouse Message Server

- SAP Business Warehouse Server

- SAP HANA

- SharePoint

- SQL Server

- Sybase

- Teradata

- Web

For a comprehensive list of the connectors supported for on-premises data types, refer to Data pipeline connectors in Microsoft Fabric.

Connect your on-premises data source to a Dataflow Gen2 in Data Factory for Microsoft Fabric

Go to your workspace and create a Dataflow Gen2.

Add a new source to the dataflow and select the connection established in the previous step.

You can use the Dataflow Gen2 to perform any necessary data transformations based on your requirements.

Use the Add data destination button on the Home tab of the Power Query editor to add a destination for your data from the on-premises source.

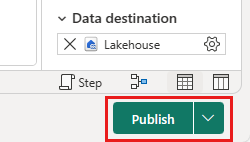

Publish the Dataflow Gen2.

Now you've created a Dataflow Gen2 to load data from an on-premises data source into a cloud destination.

Using on-premises data in a pipeline

Go to your workspace and create a data pipeline.

Note

You need to configure the firewall to allow outbound connections from the gateway to *.frontend.clouddatahub.net for Fabric pipeline capabilities.
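When reviewing firewall logs or an allow list, it can help to confirm which hostnames fall under that wildcard. The sketch below is one way to do that check offline; the helper name `is_covered_by_rule` and the sample hostnames are hypothetical, and your firewall product may interpret wildcards differently from Python's `fnmatch`.

```python
from fnmatch import fnmatchcase

# Wildcard domain from the note above.
REQUIRED_OUTBOUND_PATTERN = "*.frontend.clouddatahub.net"


def is_covered_by_rule(hostname: str, pattern: str = REQUIRED_OUTBOUND_PATTERN) -> bool:
    """Return True if hostname matches the wildcard pattern (hypothetical helper).

    Hostnames are case-insensitive, so both sides are lowercased. A trailing
    dot (fully qualified form) is dropped before matching. Note that '*' in
    fnmatch also matches dots, so multi-label prefixes are accepted.
    """
    return fnmatchcase(hostname.lower().rstrip("."), pattern.lower())


if __name__ == "__main__":
    # Sample hostnames are made up for illustration.
    for host in (
        "example.frontend.clouddatahub.net",
        "frontend.clouddatahub.net",
        "example.frontend.clouddatahub.net.attacker.test",
    ):
        print(host, is_covered_by_rule(host))
```

This only checks names against the pattern. It does not test network reachability, which still needs to be verified from the gateway machine itself.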

From the Home tab of the pipeline editor, select Copy data and then Use copy assistant. Add a new source to the activity in the assistant's Choose data source page, then select the connection established in the previous step.

Select a destination for your data from the on-premises data source.

Run the pipeline.

Now you've created and run a pipeline to load data from an on-premises data source into a cloud destination.

Note

Local access to the machine with the on-premises data gateway installed is not allowed in data pipelines.