Add Apache Kafka source to an eventstream (preview)

This article shows you how to add an Apache Kafka source to an eventstream.

Apache Kafka is an open-source, distributed platform for building scalable, real-time data systems. By integrating Apache Kafka as a source within your eventstream, you can bring real-time events from your Apache Kafka cluster into Fabric and process them before routing them to multiple destinations within Fabric.

Important

Enhanced capabilities of Fabric event streams are currently in preview.

Note

This source isn't supported when your workspace capacity is in one of the following regions: West US3, Switzerland West.

Prerequisites

- Access to a Fabric premium workspace with Contributor or higher permissions.

- A running Apache Kafka cluster.

- Your Apache Kafka cluster must be publicly accessible. It can't be behind a firewall or secured in a virtual network.
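Before you configure the source, it can help to confirm that the broker address accepts TCP connections from outside your own network. The following Python sketch is one way to do that, using only the standard library. The helper names `parse_bootstrap` and `is_reachable` are hypothetical and aren't part of Fabric or Kafka. A successful connection from your machine shows only that the port is open from where you run the script; it doesn't guarantee that Eventstream can reach the cluster.

```python
import socket


def parse_bootstrap(servers: str) -> list[tuple[str, int]]:
    """Split a 'host1:9092,host2:9092' string into (host, port) pairs.

    Hypothetical helper for illustration; IPv6 literals aren't handled.
    """
    pairs = []
    for entry in servers.split(","):
        entry = entry.strip()
        if not entry:
            continue
        host, sep, port = entry.rpartition(":")
        if not sep or not host:
            raise ValueError(f"expected host:port, got {entry!r}")
        pairs.append((host, int(port)))
    return pairs


def is_reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS failures.
        return False
```

For example, you might call `is_reachable(host, port)` for each pair returned by `parse_bootstrap("broker.example.com:9092")`, ideally from a machine outside your corporate network. The host name here is a placeholder.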

Note

The maximum number of sources and destinations for one eventstream is 11.

Add Apache Kafka as a source

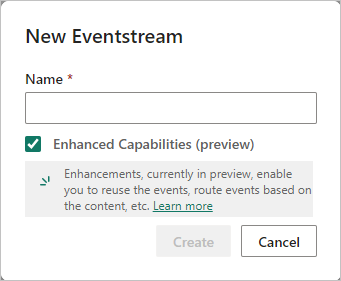

In Fabric Real-Time Intelligence, select Eventstream to create a new eventstream. Make sure the Enhanced Capabilities (preview) option is enabled.

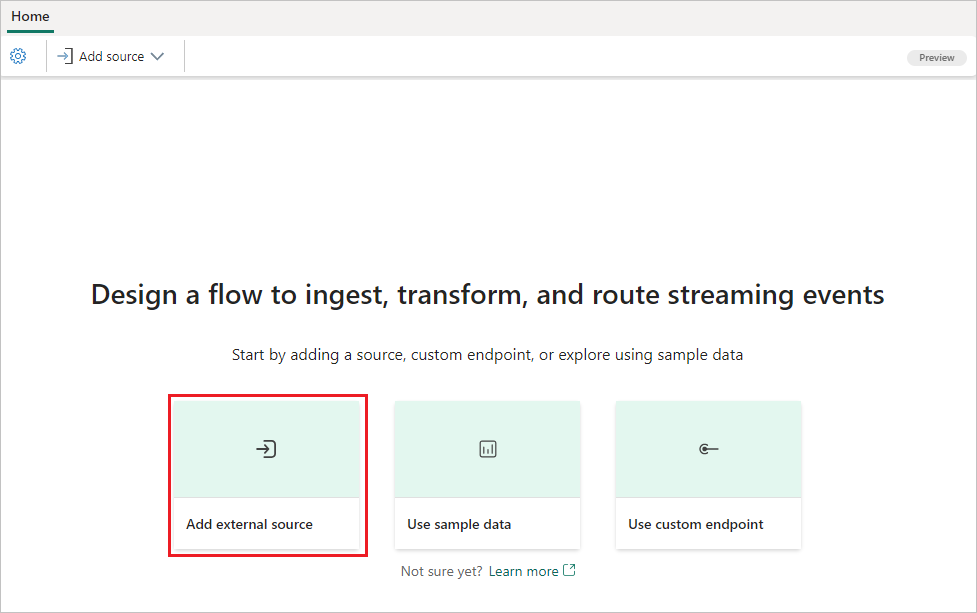

On the next screen, select Add external source.

Configure and connect to Apache Kafka

On the Select a data source page, select Apache Kafka.

On the Connect page, select New connection.

In the Connection settings section, for Bootstrap Server, enter your Apache Kafka server address.

In the Connection credentials section, if you have an existing connection to the Apache Kafka cluster, select it from the Connection drop-down list. Otherwise, follow these steps:

- For Connection name, enter a name for the connection.

- For Authentication kind, confirm that API Key is selected.

- For Key and Secret, enter the API key and the key secret.

Select Connect.
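If you already connect to the same cluster from a Kafka client, the connection fields above roughly correspond to standard client properties. The following Python sketch shows that correspondence under one assumption: that the API key and secret act as the SASL username and password, as they do for many hosted Kafka services. This article doesn't confirm how Eventstream uses the values internally, and the function name `connection_properties` is hypothetical.

```python
def connection_properties(bootstrap_server: str, api_key: str, api_secret: str) -> dict[str, str]:
    """Map the Connect page fields to common Kafka client property names.

    Assumption: Key and Secret are used as the SASL username and password.
    """
    server = bootstrap_server.strip()
    if not server:
        raise ValueError("Bootstrap Server must not be empty")
    return {
        "bootstrap.servers": server,   # Bootstrap Server field
        "sasl.username": api_key,      # Key field (assumed mapping)
        "sasl.password": api_secret,   # Secret field (assumed mapping)
    }
```

Comparing these values with a client configuration that already works against your cluster is a quick way to catch a mistyped address or credential before you select Connect.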

Back on the Connect page, follow these steps:

For Topic, enter the Kafka topic.

For Consumer group, enter the consumer group of your Apache Kafka cluster. This field gives the eventstream a dedicated consumer group for reading events.

For Reset auto offset, specify where to start reading when the consumer group has no committed offset.

For Security protocol, the default value is SASL_PLAINTEXT.

Note

The Apache Kafka source currently supports only unencrypted data transmission (SASL_PLAINTEXT and PLAINTEXT) between your Apache Kafka cluster and Eventstream. Support for encrypted data transmission via SSL will be available soon.

For SASL mechanism, the default value is typically PLAIN unless configured otherwise. You can instead select SCRAM-SHA-256 or SCRAM-SHA-512 to suit your security requirements.
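The settings in the preceding steps interact in ways that a small model can make concrete. The following Python sketch validates a protocol and mechanism combination against the values this article lists, and shows how a consumer typically picks its starting position. It assumes that Reset auto offset behaves like Kafka's `auto.offset.reset` setting with the values `earliest` and `latest`, which this article doesn't state explicitly. The function names are hypothetical.

```python
from typing import Optional

# Values taken from this article: only unencrypted protocols are supported.
SUPPORTED_PROTOCOLS = {"SASL_PLAINTEXT", "PLAINTEXT"}
SASL_MECHANISMS = {"PLAIN", "SCRAM-SHA-256", "SCRAM-SHA-512"}


def consumer_settings(
    group: str,
    offset_reset: str = "earliest",
    protocol: str = "SASL_PLAINTEXT",
    mechanism: str = "PLAIN",
) -> dict[str, str]:
    """Validate the Connect page choices and return Kafka-style properties."""
    if protocol not in SUPPORTED_PROTOCOLS:
        raise ValueError(f"unsupported security protocol: {protocol}")
    if offset_reset not in {"earliest", "latest"}:  # assumed option values
        raise ValueError(f"unsupported offset reset: {offset_reset}")
    settings = {
        "group.id": group,
        "auto.offset.reset": offset_reset,
        "security.protocol": protocol,
    }
    # A SASL mechanism applies only when the protocol uses SASL.
    if protocol.startswith("SASL_"):
        if mechanism not in SASL_MECHANISMS:
            raise ValueError(f"unsupported SASL mechanism: {mechanism}")
        settings["sasl.mechanism"] = mechanism
    return settings


def start_offset(committed: Optional[int], offset_reset: str, earliest: int, latest: int) -> int:
    """Pick where reading starts: the committed offset wins if one exists."""
    if committed is not None:
        return committed
    return earliest if offset_reset == "earliest" else latest
```

In this model, the reset policy matters only the first time a consumer group reads a partition, or after its committed offsets expire. Once the group has committed an offset, reading resumes from that offset regardless of the policy.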

Select Next. On the Review and create screen, review the summary, and then select Add.

You can see the Apache Kafka source added to your eventstream in Edit mode.

After you complete these steps, the Apache Kafka source is available for visualization in Live view.

Related content

Other connectors: