Top Questions from New Users of Azure SQL Database

Reviewed by: Kun Cheng, John Hoang, Denzil Ribeiro, Rajesh Setlem

Over the years, we have been engaged with many customers who were either already using Azure SQL Database or in the process of adopting it. Most of those engagements involved deep technical work in the areas of performance, resiliency, connectivity, and general database architecture. During these engagements, and in our interactions with the wider database community, a set of common questions about Azure SQL Database kept coming up.

This blog attempts to answer these questions, while also providing additional background and references for more information. Our goal is to help new and existing users of the service to implement their data tier on Azure SQL Database quickly and efficiently, while avoiding common mistakes.

We should add a caveat that Azure SQL Database is a fast-changing service, with improvements rolled out continuously. This blog is based on the state of the service as of October 2017. It was updated in July 2018 to reflect recent changes in the service. Some of the information in this blog could become obsolete or superseded in the future. In case of discrepancies between this blog and Azure SQL DB documentation, the latter should take precedence.

Use the links below to quickly get to a particular question.

Q1. How can I check if my SQL Server database is compatible with Azure SQL Database?

Q2. How should I choose the service tier and the performance level for my database(s)?

Q3. How do I monitor resource consumption and query performance?

Q4. Should I use Elastic Pools?

Q5. How should I configure firewall rules?

Q6. Can I create a database in a VNet?

Q7. Can I use Windows Authentication in Azure SQL Database?

Q8. How should I implement retry logic?

Q9. What is the best way to load large amounts of data in Azure SQL Database?

Q10. What if my application is chatty?

Q11. What kind of workload is appropriate for Azure SQL Database?

Q12. How do I scale out my database?

Q1. How can I check if my SQL Server database is compatible with Azure SQL Database?

While built from a common code base, SQL Server and Azure SQL Database have differences in supported features, behaviors, and best practices. The Azure SQL Database features documentation topic is a great starting point for research in this area.

Today, the Data Migration Assistant (DMA) tool is the simplest way to discover a wide variety of compatibility issues in databases that are candidates for migration to Azure SQL Database. The tool can access the source SQL Server database to discover potential migration blockers, unsupported features, and other compatibility issues, providing detailed findings and recommendations. DMA can also be used to migrate your database schema and data in an efficient way. Data is streamed from source to destination and is bulk loaded into the target database.

That said, for many existing applications built on SQL Server over the last years and decades, migrating to Azure SQL Database requires changes, needed to address the differences and gaps in features and behaviors. The new Managed Instance offering of Azure SQL Database, in private preview as of this writing, is intended to simplify this migration effort as much as possible. With Managed Instance, instead of provisioning one or more standalone and isolated databases, you provision a SQL Server instance in the cloud. That instance is very similar in features and behavior to SQL Server running on-premises. Conceptually, this is the same model that has been used to provision SQL Server over the last few decades, but with all the advantages of a PaaS service.

Initial information about Managed Instance is available in this blog, and more information will become available later in 2017 and 2018. For now, the rest of this blog will target the current Azure SQL Database service.

Q2. How should I choose the service tier and the performance level for my database(s)?

In today’s Azure SQL Database, there are four service tiers: Basic, Standard, Premium, and Premium RS. Each service tier has different capabilities and goals, described in What are Azure SQL Database service tiers. [July 2018 update] : The new vCore purchasing model for Azure SQL Database introduced the General Purpose and Business Critical service tiers, which are analogous to Standard and Premium service tiers. The Premium RS service tier has been deprecated.

Within each service tier, several performance levels are available. Each performance level comes with a certain allocation of resources, and can therefore sustain a certain level of performance for a given database workload. The performance level determines the cost of the database. [July 2018 update] : Resources available in each performance level are documented for the DTU purchasing model, and for vCore purchasing model.

Clearly, how to choose the most appropriate service tier and performance level is a top question for most customers, whether using Azure SQL Database for a new application, or migrating an existing application. At the same time, the answer is rarely straightforward. Even when you know the resource demands of the existing database in the on-premises environment exactly, multiple factors can change these demands during cloud migration, making the choice of the performance level more of an art than a science. For a more detailed discussion of this topic, refer to the Service Tier Considerations section of this blog.

Nevertheless, there is a silver lining in every cloud: switching from one performance level to another in Azure SQL Database is, from a user’s perspective, a very simple process. While the duration of the scaling operation from one performance level to another can be variable, ranging from minutes to hours, the database remains online at the original performance level for the duration of the scaling operation. There is a short (seconds) period of unavailability at the end of this operation, after which the database is at the new performance level.

The strategy of making an educated guess of the performance level, monitoring performance, and adjusting it up or down has worked quite well for many customers, reducing the importance of getting the performance level “just right” from the outset.

Here, we’ll mention a few suggestions that should help in selecting the service tier, keeping in mind that these are not firm rules, and that exceptions do exist:

1. The Basic tier should not be used for production workloads. Available resources are minimal, and even simple queries can run with greatly varying durations. Storage performance is highly variable. At the same time, this is a good choice for databases used for simple development or functional testing activities, or for sufficiently small databases that are not used for long periods of time, and can therefore be scaled down to the Basic tier to save costs.

2. The Standard tier is suitable for a broad variety of common database workloads that do not have high performance demands. While storage performance in the Standard tier is very predictable compared to Basic, storage latency stays in the 2-10 ms range. A recent improvement greatly increased the variety of workloads suitable for the Standard tier. Now, the compute resources available to a Standard database can range to the same upper limit as in the Premium tier. At the same time, additional storage up to 1 TB becomes available in Standard as well. This makes it possible to use the Standard tier for both the “fast and small” and “slow and large” databases, as long as the additional capabilities of the Premium tier are not required.

3. The Premium tier is used for workloads that are the most demanding in terms of performance and availability. Its most distinguishing features are local SSD storage with sub-millisecond latency, and the use of multiple online replicas for fast failover, typically within single seconds. Additionally, certain database engine features such as In-Memory OLTP and Columnstore Indexes are only available in Premium. [July 2018 update] : Columnstore indexes are now also supported in Standard databases (starting with S3), and in all General Purpose databases.

4. The Premium RS tier, currently in preview, provides the same performance and feature capabilities as Premium (within the P1-P6 subset of performance levels), but without the availability SLA that Premium and other tiers have. It is suitable for non-production workloads that still require high performance, e.g. for load testing, or for workloads where RPO measured in minutes (i.e. the possibility of losing the most recent data changes in case of a crash) is acceptable. Premium RS is significantly less expensive than Premium.

[July 2018 update] : The vCore purchasing model simplifies the choice of performance level by allowing the customers to select the actual number of logical CPUs (vCores) for their database, and use specific hardware generations with documented per-core ratios for memory and IOPS. The transaction log and tempdb limits are also documented for each performance level.

Q3. How do I monitor resource consumption and query performance?

Monitoring database performance in Azure SQL Database provides detailed instructions on how to monitor resource consumption of your database. Here we will mention just a few additional considerations.

The standard measure of resource consumption in Azure SQL Database is known as Database Transaction Unit (DTU). Each performance level has a certain maximum DTU value, corresponding to the maximum amount of resources (CPU, memory, data IO, log write) that the workload can consume. When workload demands exceed this maximum, queries continue to run, however they run slower due to limited resources. In extreme cases, queries can time out, causing exceptions in the application.

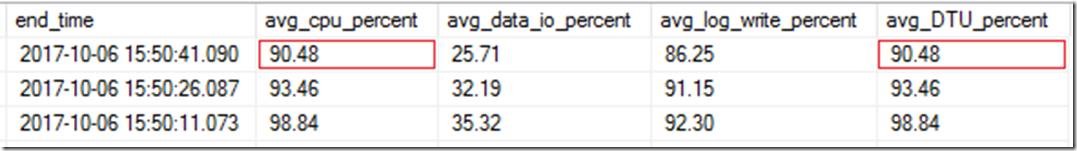

For monitoring purposes, each measure that contributes to the DTU value (CPU, data IO, log write) is expressed as a percentage of its maximum for the current performance level. DTU itself is defined simply as the highest of these three percentages at a given point in time. For example, in the following result set, obtained from the sys.dm_db_resource_stats DMV, the average DTU for the 15-second period ending on 2017-10-06 15:50:41.090 (top row) is 90.48%, because that is the highest value (avg_cpu_percent) in the preceding three columns:

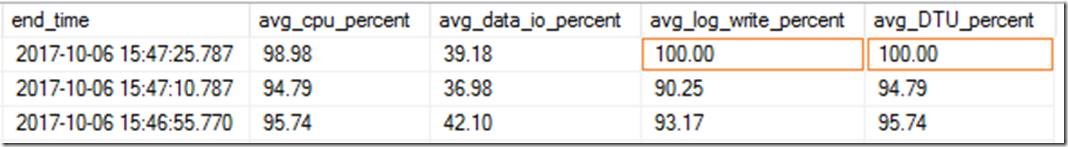

However, in the following example, DTU is at 100%, because the maximum value is avg_log_write_percent:

Here is a sample monitoring query to obtain these results:

```sql
SELECT end_time,
       avg_cpu_percent,
       avg_data_io_percent,
       avg_log_write_percent,
       -- DTU is the highest of the three component percentages in each row
       (
           SELECT MAX(v)
           FROM (
                    VALUES (avg_cpu_percent),
                           (avg_data_io_percent),
                           (avg_log_write_percent)
                ) AS dv(v)
       ) AS avg_DTU_percent
FROM sys.dm_db_resource_stats;
```
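For readers who collect these rows in application code, the same calculation can be sketched in Python. This is a minimal illustration, not part of any Azure SDK: the `ResourceSample` type and both helper functions are hypothetical names, and only the 90.48 CPU value comes from the example above; the other numbers are made up.

```python
from typing import NamedTuple


class ResourceSample(NamedTuple):
    """One row of sys.dm_db_resource_stats, reduced to the three DTU components."""
    avg_cpu_percent: float
    avg_data_io_percent: float
    avg_log_write_percent: float


def dtu_percent(sample: ResourceSample) -> float:
    """DTU is the highest of the three component percentages."""
    return max(sample)


def bottleneck(sample: ResourceSample) -> str:
    """Name of the component that currently determines the DTU value."""
    return max(sample._fields, key=lambda name: getattr(sample, name))


# The CPU value matches the first example in the text; the other values are made up.
cpu_bound = ResourceSample(90.48, 12.0, 35.5)
log_bound = ResourceSample(40.0, 5.0, 100.0)

for sample in (cpu_bound, log_bound):
    print(dtu_percent(sample), bottleneck(sample))
```

Reporting the bottleneck name alongside the DTU value makes it easier to tell a CPU-bound workload from one limited by log writes.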

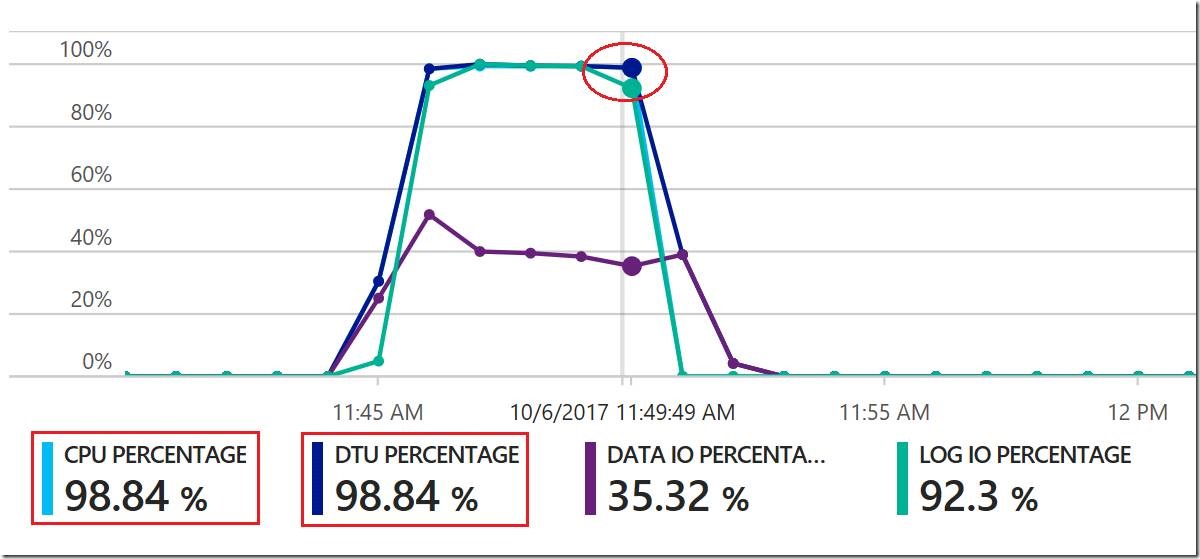

If you see that DTU consumption is close to 100%, it is often helpful to look at its three components to better understand the nature of the resource constraint the workload is facing. Here is an example from Azure portal showing that DTU is at 98.84%, because CPU is at 98.84%:

By default, the only metric shown on the database metrics graph in the portal is DTU consumption. In the above example, the CPU percentage, Data IO percentage, and Log IO percentage metrics have been added to the graph, making it clear that the workload is CPU bound, with log write a close secondary bottleneck.

If you find that the performance of your application is negatively affected because of a resource constraint, i.e. if you see that DTU is close to 100%, you can either scale up the database to a higher performance level, and possibly a higher service tier, to increase available resources, or tune the workload to perform efficiently within available resource bounds.

The latter is nothing more than the traditional query and index tuning that most DBAs are very familiar with. While many older tuning techniques are still applicable to Azure SQL Database, the availability of Query Store greatly simplifies the first part of this process, namely finding the top resource consuming queries. Query Store data can be accessed from SSMS (either from the UI, or by querying Query Store views directly). The Azure portal also provides a UI to examine and act on Query Store data under the Query Performance Insight blade.

Once you know the top resource consumer queries, you can examine their plans and tune them, e.g. via query rewrite or via indexing changes. If multiple plans for the same query exist, Query Store provides the ability to force the most efficient plan. Azure SQL Database can automate the process of forcing optimal plans on an ongoing basis, effectively resulting in a self-tuning database. For more details, see Automatic tuning. This is also available in the portal under the Automatic tuning blade.

For some performance troubleshooting scenarios, it may be necessary to trace individual query executions and other relevant events occurring in the database engine. Similarly to SQL Server, this can be achieved using Extended Events, with some notable differences:

1. Event sessions are always scoped to a database

2. The file target writes data to Azure Blob Storage, rather than a file

3. Not all events available in SQL Server are available in Azure SQL Database

The xel files written to a storage container can be copied locally and opened in the SSMS XEvents UI, or queried from T-SQL.

DBAs experienced in performance troubleshooting in SQL Server often use PerfMon to diagnose performance problems. While PerfMon is not available in Azure SQL Database, the sys.dm_os_performance_counters DMV provides all database engine performance counters (though the Windows counters are not available). This blog includes a script that uses this DMV to collect performance counter data in a table.

Q4. Should I use Elastic Pools?

Elastic Pool is a special type of performance level that exists in all service tiers. In an elastic pool, the resources (CPU, memory, IOPS/throughput, disk space) are shared among all databases in the pool. This can result in significant cost savings from buying fewer resources than would be needed for the same number of standalone databases.

Elastic pools are particularly attractive when the application uses a very large number of databases, e.g. for multi-tenant applications that use the tenant-per-database model. This is not the only use case for elastic pools though. In general, they should be considered whenever the number of deployed databases is greater than two. The important caveat here is that if spikes in the workloads of multiple databases happen at the same time, they could compete for the same resources, possibly resulting in lower throughput for all databases. In extreme cases, if cumulative workload demands exceed certain pool-level limits (e.g. the worker limit), all databases in a pool could become unavailable for new queries.
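The effect of spike timing on pool sizing can be illustrated with a small Python sketch. It treats resource demand as a single additive number per database, which is a simplification, and all tenant names and demand figures are hypothetical.

```python
def standalone_capacity(workloads):
    """Capacity needed when every database is sized for its own peak."""
    return sum(max(series) for series in workloads.values())


def pooled_capacity(workloads):
    """Capacity needed when databases share a pool: the peak of the combined load."""
    return max(sum(points) for points in zip(*workloads.values()))


# Hypothetical demand per database over four time intervals; spikes are staggered.
staggered = {
    "tenant_a": [80, 10, 10, 10],
    "tenant_b": [10, 80, 10, 10],
    "tenant_c": [10, 10, 80, 10],
}

# Same individual peaks, but all spikes land in the same interval.
coinciding = {
    "tenant_a": [80, 10, 10, 10],
    "tenant_b": [80, 10, 10, 10],
    "tenant_c": [80, 10, 10, 10],
}

print(standalone_capacity(staggered), pooled_capacity(staggered))
print(standalone_capacity(coinciding), pooled_capacity(coinciding))
```

With staggered spikes the pooled requirement is well below the sum of individual peaks; with coinciding spikes the advantage disappears, which is the caveat described above.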

For additional details about elastic pools, see Elastic pools help you manage and scale multiple Azure SQL databases.

Q5. How should I configure firewall rules?

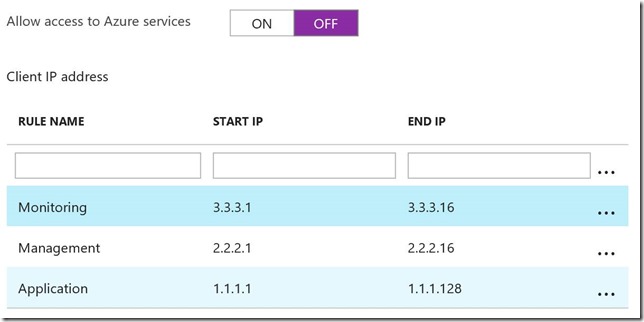

When an Azure SQL Database logical server is created initially, a special firewall rule that allows access to the entire Azure IP address space is enabled. In the portal, it looks like this:

This may be required for some applications and scenarios, e.g. if you are planning to use the Import/Export service to import bacpacs, or if the application tier is deployed in Azure and does not have a static IP address.

This firewall rule is very broad. For many applications, leaving it enabled permanently would violate the principle of least privilege. If the logical server will only be accessed from a small set of IP addresses, disable this rule, and instead create rules for each source IP address or range, using descriptive names. A simple example would look like this:

By default, the source IP address of connections originating from Azure VMs is dynamic, which makes it necessary to enable the special “Azure services” rule to allow access from your Azure VMs. However, by assigning a reserved IP address to a virtual machine or a cloud service, it is possible to create a much narrower set of rules for a small set of reserved IP addresses instead.

So far, we have been talking about server-level firewall rules. In addition to, or instead of, server-level rules, database-level rules can be used as well. For many scenarios, database-level rules have an advantage. Specifically, they make the database more portable. If the database is moved to a different server, or if a replica of the database is created, the firewall rules are preserved, and no additional configuration is required on the new server. On the other hand, if you have many databases on a logical server, then it may be easier to manage firewall rules at the server level, rather than in each database. For more details on firewall rules, see Azure SQL Database server-level and database-level firewall rules. One important point to note is that server-level and database-level rules are not “one on top of the other”. A connection attempt is allowed if either a server-level or a database-level rule allows it.
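The "either rule allows it" behavior can be modeled in a few lines of Python. This is only a sketch of the decision logic, not how the service is implemented; the function names are hypothetical, and the addresses are taken from ranges reserved for documentation.

```python
from ipaddress import ip_address


def in_any_range(client_ip, rules):
    """True if client_ip falls inside any (start_ip, end_ip) rule, inclusive."""
    addr = ip_address(client_ip)
    return any(ip_address(start) <= addr <= ip_address(end) for start, end in rules)


def connection_allowed(client_ip, server_rules, database_rules):
    """A connection is allowed if either set of rules allows it."""
    return in_any_range(client_ip, database_rules) or in_any_range(client_ip, server_rules)


# Hypothetical rules using documentation-reserved address ranges.
server_rules = [("203.0.113.10", "203.0.113.20")]
database_rules = [("198.51.100.7", "198.51.100.7")]

for ip in ("203.0.113.15", "198.51.100.7", "192.0.2.1"):
    print(ip, connection_allowed(ip, server_rules, database_rules))
```

Because the two rule sets are combined with a logical OR, removing a broad server-level rule does not block a client that is still covered by a database-level rule, and vice versa.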

Q6. Can I create a database in a VNet?

Until recently, the answer to this very frequent question was an unqualified no. Today, there are two relevant developments in this area.

VNet Service Endpoints is a new option for managing network security in Azure SQL Database, currently in preview in a subset of regions. [July 2018 update] : VNet Service Endpoints are now Generally Available. This feature lets a network administrator allow connections to the database server only from a particular subnet in an Azure VNet. This enables a commonly requested scenario in which no IP address-based firewall rules are created at all, and only connections from a private VNet (or several such VNets) are allowed. Importantly, the public IP endpoint for the database still exists; however, access to this endpoint is controlled by specifying VNet/subnet identifiers, rather than by specifying public IP addresses.

Managed Instance, once available, will allow the instance to be created within a VNet, and accessible only via a private IP address, resolving the security and compliance concerns that many customers have today.

Q7. Can I use Windows Authentication in Azure SQL Database?

The short answer is no. Therefore, if you are migrating an application dependent on Windows Authentication from SQL Server to Azure SQL Database, you may have to either switch to SQL Authentication (i.e. use a separate login and password for database access), or use Azure Active Directory Authentication (AAD Authentication).

The latter is conceptually similar to Windows Authentication in the sense that connections from directory principals are authenticated without the need to provide additional secrets, such as a password. Since Azure Active Directory can be federated with the on-premises Active Directory Domain Services, it can effectively authenticate the same Active Directory principals that could access the database prior to migration. However, the authentication flow for AAD Authentication is significantly different, so the analogy with Windows Authentication only goes so far.

Q8. How should I implement retry logic?

It is widely recognized that applications running in public cloud should be resilient to transient faults. This requires implementing retry logic in the application. For applications using Azure SQL Database, there are two kinds of operations that may have to be retried when the database is temporarily unavailable. One is opening a connection to the database, and the other is executing a command against the database (e.g. running a query or making a procedure call).

Retrying connection attempts is relatively straightforward, though there are some things worth mentioning.

One is that some client libraries, specifically the ones wrapping ODBC and SqlClient drivers, may take advantage of the Idle Connection Resiliency feature in SQL Server and Azure SQL Database. For ADO.NET, this is available starting with version 4.5.1. This feature can transparently reestablish some broken database connections without any additional code in the application. Does this mean that retry logic for opening connections is no longer needed? Not really – there are still cases when even with Idle Connection Resiliency, a broken connection cannot be recovered and needs to be opened by the application again, e.g. because connection state cannot be fully reestablished (consider a temp table that no longer exists on the server once the connection is broken).

Another note is related to ADO.NET connection pooling, which implements a behavior called “blocking period”. For details, see SQL Server Connection Pooling (ADO.NET). In summary, if an error occurs when a connection is being opened, connection attempts made in the next five seconds will fail fast without actually attempting to connect to the database. Furthermore, if connection attempts made after five seconds continue to fail, the blocking period will be progressively increased. As a practical outcome, this means that with blocking period enabled, connection failures should be initially retried after at least five seconds have elapsed, to avoid being further delayed by the blocking period.

Starting with ADO.NET 4.6.2, the blocking period behavior is configurable via a connection string attribute. By default in that version, blocking period is disabled for Azure SQL Database, but enabled for other SQL Server connections. If blocking period is disabled, it becomes feasible to retry connections more frequently. For Premium databases, most failovers by far complete within ten seconds. If a connection attempt fails with a timeout during a failover, and the timeout is set to a sufficiently large value (30 seconds is recommended), then by the time the application gets the timeout error, the failover has likely completed, and the connection can be retried right away.

Improvements in the way client libraries handle transient faults in Azure SQL Database have been made in recent releases. We recommend using the latest libraries, i.e. ADO.NET 4.6.2 or later, and JDBC 6.2.2 or later.

Azure SQL Database implements a mechanism that guards against Denial of Service (DoS) attacks. This mechanism may be triggered if the number of connection attempts per unit time exceeds a certain threshold. This is relevant in the context of implementing retry logic. If retries are too aggressive, i.e. if the application attempts to reestablish all connections at the same time or in a tight loop, then the DoS mechanism may be triggered. The symptom of triggering DoS protection is getting error 18456, "Login failed for user ...", with state code 113. If this happens, consider adding a randomized delay before attempting to reconnect, or implement an exponential back-off policy for retries.
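A connection retry loop that combines exponential back-off with a randomized delay might look like the following Python sketch. It is an illustration under stated assumptions rather than a recommended implementation: `TransientConnectionError`, `open_with_retry`, and `flaky_open` are hypothetical names, the real driver's transient errors would need to be mapped to the exception, and the five-second base delay simply mirrors the blocking period discussed earlier.

```python
import random
import time


class TransientConnectionError(Exception):
    """Stand-in for whatever transient error the real driver raises."""


def open_with_retry(open_connection, max_attempts=5, base_delay=5.0,
                    max_delay=60.0, sleep=time.sleep, rng=random.random):
    """Call open_connection(), retrying transient failures with back-off and jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return open_connection()
        except TransientConnectionError:
            if attempt == max_attempts:
                raise
            # Exponential back-off: base, 2x base, 4x base, ... capped at max_delay.
            backoff = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Randomized extra delay so that many clients do not reconnect at once.
            sleep(backoff + rng() * base_delay)


# Demo with a fake connection function that fails twice, then succeeds.
attempts = {"count": 0}


def flaky_open():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientConnectionError("simulated transient fault")
    return "connection"


delays = []  # record the requested delays instead of actually sleeping
result = open_with_retry(flaky_open, sleep=delays.append, rng=lambda: 0.5)
print(result, delays)
```

Passing `sleep` and `rng` as parameters keeps the policy easy to test; production code would leave them at their defaults.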

Unlike retrying connections, retrying database commands is much more complicated. Whether you should retry a particular command depends not only on the kind of error you get, but also on the business logic being implemented by the command, the state of the application (e.g. is there an open transaction), and the state of the data in the database (e.g. have other processes modified the data in an unexpected way). Due to these complexities, a general recommendation on command retry other than “it depends” would be fraught with peril. One approach to consider is to retry not individual database commands, but the higher level “application transactions”, such as creating a user account, or checking in a code changeset, or transferring points from one account to another.

Before implementing retry logic in your application, we strongly recommend reviewing Troubleshoot, diagnose, and prevent SQL connection errors and transient errors for SQL Database for detailed info.

Q9. What is the best way to load large amounts of data in Azure SQL Database?

In principle, the answer is the same as it is for the traditional SQL Server. Bulk load still provides the highest throughput. This includes methods such as BCP, BULK INSERT, OLEDB Destination in SSIS, the SqlBulkCopy class in ADO.NET, and SQLServerBulkCopy class in JDBC Driver.

One significant difference between SQL Server and Azure SQL Database is that the latter uses resource governance to limit the amount of resources available in each performance level. Transaction log write throughput is one of the governed resource types, and it is often 100% utilized during bulk load, limiting load throughput. If your large data load is an infrequent event, consider temporarily scaling up to a higher performance level that allows higher transaction log write throughput. To monitor resource consumption during data load and see if log write is the bottleneck, use the sys.dm_db_resource_stats DMV, as described in Monitoring database performance in Azure SQL Database.

Another difference is that in traditional SQL Server, an improvement in data load throughput can be achieved if minimal logging is used. However, that requires the database to use either Simple or Bulk-Logged recovery model. All Azure SQL DB databases use Full recovery model to support RPO and RTO goals; therefore, data load is always fully logged, potentially reducing load throughput.

Note that you may be able to reduce log usage by using clustered columnstore indexes and using batch sizes of at least 102,400 rows, to load directly into compressed row groups. For details, see Columnstore indexes - data loading guidance.
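To see how the batch size affects where rows land, here is a small Python sketch that splits a load into batches and labels each one. It treats 102,400 rows as the inclusive minimum for loading directly into compressed row groups, which is an assumption taken from the columnstore loading guidance; smaller batches are labeled as going to the delta store. The `plan_batches` helper is hypothetical.

```python
# Minimum batch size for loading directly into compressed row groups
# (assumed inclusive, per the columnstore data loading guidance).
COMPRESSED_ROWGROUP_MIN_ROWS = 102_400


def plan_batches(total_rows, batch_size):
    """Split a load into batches and note where each batch would land."""
    plan = []
    remaining = total_rows
    while remaining > 0:
        rows = min(batch_size, remaining)
        target = "compressed" if rows >= COMPRESSED_ROWGROUP_MIN_ROWS else "delta store"
        plan.append((rows, target))
        remaining -= rows
    return plan


for batch_size in (100_000, 125_000, 200_000):
    print(batch_size, plan_batches(250_000, batch_size))
```

Note that the final, partial batch of a load can fall below the threshold even when the configured batch size is large enough.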

One new method for loading data into Azure SQL Database is using BULK INSERT to load data from Azure Blob Storage. This supports both native BCP and CSV files as the source. If copying data from an on-premises database, an efficient way would be to export data in native BCP format, upload to a storage account in the same region as the target Azure SQL DB database using AzCopy, and then load into the target database using BULK INSERT.

Q10. What if my application is chatty?

A chatty application makes frequent small requests to the database, resulting in many network round trips. For example, the application could be inserting or updating data one row at a time, or using client-side cursors. In general, such patterns are much less efficient than using set-based statements that affect multiple rows, or sending multiple SQL statements to the server in a single batch, rather than in a separate batch per statement.

For Azure SQL Database, the usual advice for this type of application has been to refactor code to batch database requests as much as possible, as described in How to use batching to improve SQL Database application performance. That advice is still sound (and not just for Azure SQL Database), and should be followed when developing new applications.
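The difference between the chatty and the batched pattern can be sketched in Python. The built-in `sqlite3` module is used as a stand-in so the example runs anywhere; since it is an in-process database, there are no real network round trips, and the statement count merely stands in for them. The table and function names are hypothetical.

```python
import sqlite3


def chunked(rows, batch_size):
    """Yield consecutive slices of rows, each at most batch_size long."""
    for start in range(0, len(rows), batch_size):
        yield rows[start:start + batch_size]


def insert_row_by_row(conn, rows):
    """Chatty pattern: one statement (one round trip) per row."""
    statements = 0
    for row in rows:
        conn.execute("INSERT INTO reading (sensor_id, value) VALUES (?, ?)", row)
        statements += 1
    return statements


def insert_batched(conn, rows, batch_size=100):
    """Batched pattern: one multi-row INSERT per batch of rows."""
    statements = 0
    for batch in chunked(rows, batch_size):
        placeholders = ", ".join(["(?, ?)"] * len(batch))
        params = [value for row in batch for value in row]
        conn.execute(
            f"INSERT INTO reading (sensor_id, value) VALUES {placeholders}", params
        )
        statements += 1
    return statements


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE reading (sensor_id INTEGER, value REAL)")
rows = [(i % 10, i * 0.5) for i in range(250)]

chatty = insert_row_by_row(conn, rows)
batched = insert_batched(conn, rows)
total = conn.execute("SELECT COUNT(*) FROM reading").fetchone()[0]
print(chatty, batched, total)
```

Both functions insert the same rows; the batched version simply sends far fewer statements, which is where the latency savings come from when each statement costs a network round trip.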

At the same time, making an existing application less chatty may not be a small task. There are improvements in Azure SQL Database that allow chatty applications to perform better today by reducing network latency, compared to the early days of the service.

One improvement has been in place for some time, and is known as client redirection. This removes the regional gateway from the network path for established connections, thus significantly reducing network latency. Client redirection is described in Azure SQL Database Connectivity Architecture, with additional details available in Connect to Azure SQL Database V12 via Redirection. By default, client redirection is enabled only when the client IP address is a part of Azure IP address space. If the client is outside of Azure, the latency of the WAN link is likely large enough to make the improvement from using client redirection insignificant. Nevertheless, if warranted, client redirection can be enabled for all clients, as described in the previous two links.

Azure SQL Database also takes advantage of the Accelerated Networking (AN) improvement in Azure. While this is not yet available for all databases, the observed roundtrip network latency for databases on AN-enabled infrastructure that also use client redirection is below 1 ms. [July 2018 update] : If using the vCore purchasing model, customers can choose Gen5 as the hardware generation. All Gen5 capacity in Azure SQL Database is AN-enabled.

Q11. What kind of workload is appropriate for Azure SQL Database?

Most of the time, Azure SQL Database is positioned as most appropriate for transactional OLTP workloads, i.e. the workloads consisting primarily of many short transactions occurring at a high rate. While such workloads do indeed run well in Azure SQL Database, some types of analytical and data warehousing workloads can also use the service. In the Premium service tier, Azure SQL Database supports columnstore indexes, providing high compression ratios for large data volumes, and memory-optimized non-durable tables, which can be used for staging data without persisting it to disk. [July 2018 update] : Columnstore indexes are now also supported in Standard databases (starting with S3), and in all General Purpose databases. At higher performance levels, the level of query concurrency is similar to what can be achieved on a traditional SQL Server instance in a large VM, albeit without the ability to fine-tune resource consumption using Resource Governor. While SQL Server in an Azure VM always uses remote storage for database files, the Premium/Business Critical service tier in Azure SQL Database uses local SSD storage, which provides much higher IOPS/throughput, and makes Azure SQL Database attractive for small to medium scale analytical workloads.

There are several limiting factors that determine whether an analytical workload can run efficiently, or at all, in Azure SQL Database:

1. Database size limitations. Today, the largest available database size is 4 TB, currently ruling out the service as the platform for larger data warehouses. At the same time, if your data warehouse database exceeds this limit, but currently does not use columnstore indexes, then the compression benefit of columnstore may well put it comfortably within the limit even if the actual uncompressed data volume is much higher than 4 TB.

2. Analytical workloads often use tempdb heavily. However, the size of tempdb is limited in Azure SQL Database.

3. As mentioned in the earlier question about large data loads, the governance of transaction log writes, and mandatory full recovery model can make data loads slower.

If your analytical or data warehousing workload can work within these limits, then Azure SQL Database may be a good choice. Otherwise, you may consider SQL Server running in an Azure VM, or, for larger data volumes measured in multiple terabytes, Azure SQL Data Warehouse with its MPP architecture.

Q12. How do I scale out my database?

Scaling out in this context refers to using multiple similarly structured databases to serve the application workload. The goal is to use much higher (in theory, limitless) amounts of compute and storage resources than what is available to a single database even in the top performance tier, and thus achieve a much higher degree of performance and concurrency. There are two Azure SQL Database features to consider here: Geo-replication and Elastic Scale.

Geo-replication allows you to create multiple read-only replicas of the primary read-write database, maintained asynchronously with a five-second data latency SLA. Even though the name of the feature implies that these replicas are geographically dispersed, they can be created in any region, including the same region where the primary is located. In the Azure portal, up to four replicas can be created; however, more replicas can be created using PowerShell or ARM API, by specifying one of the four secondary replicas as the primary for a new set of replicas. Note that this configuration is intended to support scale-out for read-only workloads; not all failover scenarios are supported. This configuration has been tested for up to 16 replicas, with each of the four original secondaries being the primary for a set of up to three replicas.

Elastic Scale, or more specifically Elastic Database Client Library, allows you to shard your data over many databases. Thousands of databases are supported. Unlike Geo-replication, these databases are not read-only replicas; each database contains a distinct subset of data, and is both readable and writeable. The client library facilitates querying of these databases by using a shard map, which is stored in a separate database, describing the allocation of data across database shards.
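Conceptually, a shard map is a lookup from a sharding key to the database that holds that key. The following Python sketch shows one way such a lookup could work for contiguous key ranges. It is not the Elastic Database Client Library API; `RangeShardMap`, `database_for`, and the database names are hypothetical.

```python
from bisect import bisect_right


class RangeShardMap:
    """Maps a sharding key to a database name using sorted, contiguous key ranges."""

    def __init__(self, boundaries, databases):
        # boundaries[i] is the lowest key served by databases[i].
        if len(boundaries) != len(databases):
            raise ValueError("need exactly one database per boundary")
        if list(boundaries) != sorted(boundaries):
            raise ValueError("boundaries must be sorted in ascending order")
        self._boundaries = list(boundaries)
        self._databases = list(databases)

    def database_for(self, key):
        """Return the database whose range contains key."""
        index = bisect_right(self._boundaries, key) - 1
        if index < 0:
            raise KeyError(f"no shard covers key {key}")
        return self._databases[index]


# Hypothetical layout: tenant IDs 0-999, 1000-1999, and 2000 and above.
shard_map = RangeShardMap([0, 1000, 2000], ["tenants_0", "tenants_1", "tenants_2"])

for tenant_id in (0, 999, 1000, 5000):
    print(tenant_id, shard_map.database_for(tenant_id))
```

The application would use the returned name to choose which database to connect to, so each query touches only the shard that holds the relevant subset of data.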

Comments

- Anonymous

November 21, 2017

Re "Q8. How should I implement retry logic" there is no mention of using Enterprise Library Transient Fault Handling on the client side. Is this still valid, or does ADO.NET 4.6.2 encapsulate this functionality and make it redundant? Thanks

- Anonymous

November 22, 2017

Transient Fault Handling Block (TFHB) is a general framework for implementing retries against several Azure services, including Azure SQL Database. It was originally developed when transient faults in Azure SQL Database (v11) were much more common than in the current v12 version. The scope of retry logic implemented in ADO.NET is much narrower; all it does is transparently re-establish some types of broken connections. If your applications use TFHB taking advantage of its capabilities, i.e. multiple detection and retry strategies that are available, they do not have to change. For new applications, depending on their fault resiliency requirements, it may be simpler to rely on ADO.NET for retrying connection attempts, and implement the rest of retry logic at the application transaction level, particularly if you want to avoid taking a dependency on Enterprise Library, which is no longer developed by Microsoft.

- Anonymous

May 04, 2018

A really important question is how can I (preferably with Azure Powershell since it doesn't seem to be native at this point in time) create an alert that will email/text me when one of my customer databases has a Resource Health Event? Over the last three weeks we have had two of these with extended outage times. If I can create alerts (they are not native that I can see) then I can arise at 0-dark-thirty, remove the SECONDARY relationship on the impacted database, point my application to the former-secondary (now primary) database, and create a secondary on another elastic pool, then open a Premier support ticket and wait for the call...

- Anonymous

May 04, 2018

Today, one way to achieve this is to write an Azure Automation PowerShell runbook that will poll the Resource Health REST API, and send notifications accordingly. The cmdlet to use is Invoke-RestMethod. Here is one example of a PS script that calls REST API. That said, I agree that native notification capability for Resource Health events would be very useful. There is an existing feedback item for this, please vote.

- Anonymous

May 07, 2018

I have voted. Thank you.

- Anonymous

May 08, 2018

I am not looking forward to having to actively poll 300 customer databases across five elastic pools on a periodic basis.
