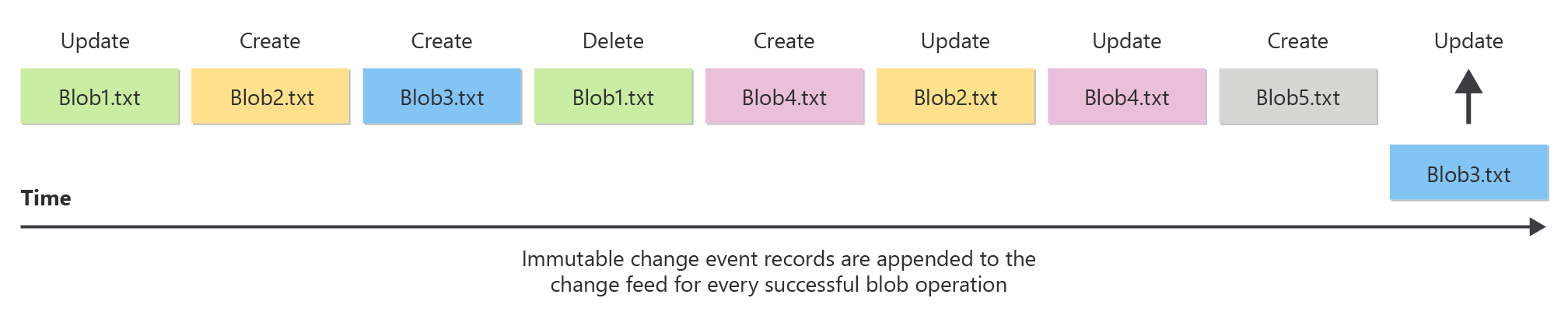

The purpose of the change feed is to provide transaction logs of all the changes that occur to the blobs and the blob metadata in your storage account. The change feed provides ordered, guaranteed, durable, immutable, read-only logs of these changes. Client applications can read these logs at any time, either in streaming or in batch mode. Each change generates exactly one transaction log entry, so you won't have to manage multiple log entries for the same change. The change feed enables you to build efficient and scalable solutions that process change events that occur in your Blob Storage account at a low cost.

To learn how to process records in the change feed, see Process change feed in Azure Blob Storage.

How the change feed works

Change feed records are stored as blobs in a special container in your storage account at standard blob pricing. You can control the retention period of these files based on your requirements (see the conditions of the current release). Change events are appended to the change feed as records in the Apache Avro format: a compact, fast, binary format that provides rich data structures with inline schema. This format is widely used in the Hadoop ecosystem, Stream Analytics, and Azure Data Factory.

You can process these logs asynchronously, incrementally, or in full. Any number of client applications can independently read the change feed, in parallel, and at their own pace. Analytics applications such as Apache Drill or Apache Spark can consume the logs directly as Avro files, which lets you process them at low cost, with high bandwidth, and without having to write a custom application.

The following diagram shows how records are added to the change feed:

Change feed support is well-suited for scenarios that process data based on objects that have changed. For example, applications can:

- Update a secondary index, or synchronize with a cache, a search engine, or any other content-management scenario.

- Extract business analytics insights and metrics, based on changes that occur to your objects, either in a streaming manner or in batches.

- Store, audit, and analyze changes to your objects, over any period of time, for security, compliance, or intelligence for enterprise data management.

- Build solutions to back up, mirror, or replicate object state in your account for disaster management or compliance.

- Build connected application pipelines that react to change events or schedule executions based on created or changed objects.

Change feed is a prerequisite feature for Object Replication and Point-in-time restore for block blobs.

Note

Change feed provides a durable, ordered log model of the changes that occur to a blob. Changes are written and made available in your change feed log within an order of a few minutes of the change. If your application has to react to events much quicker than this, consider using Blob Storage events instead. Blob Storage Events provides real-time one-time events which enable your Azure Functions or applications to quickly react to changes that occur to a blob.

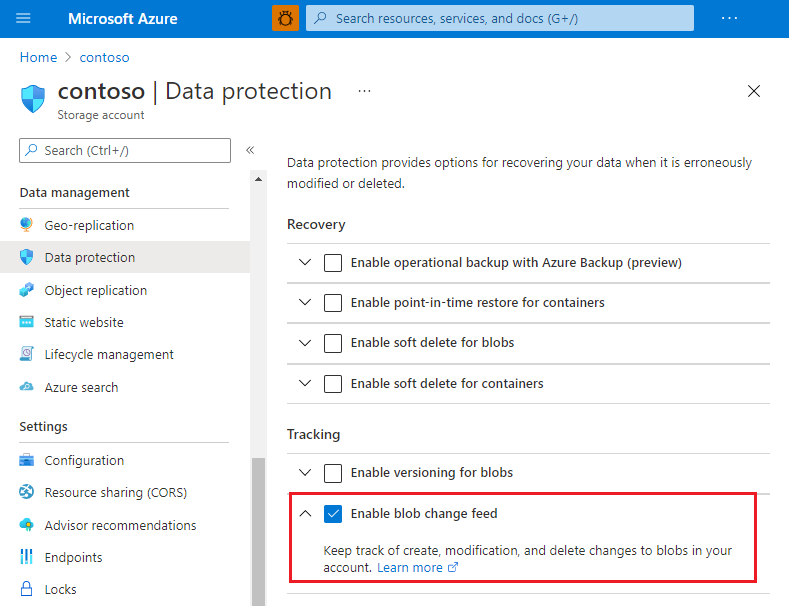

Enable and disable the change feed

You must enable the change feed on your storage account to begin capturing and recording changes. Disable the change feed to stop capturing changes. You can enable and disable the change feed by using the Azure portal, PowerShell, or Azure Resource Manager templates.

Here are a few things to keep in mind when you enable the change feed:

- There's only one change feed for the blob service in each storage account. Change feed records are stored in the $blobchangefeed container.

- Create, Update, and Delete changes are captured only at the blob service level.

- The change feed captures all of the changes for all of the available events that occur on the account. Client applications can filter out event types as required. (See the conditions of the current release.)

- Only standard general-purpose v2, premium block blob, and Blob storage accounts can enable the change feed. Accounts with a hierarchical namespace enabled are not currently supported. General-purpose v1 storage accounts are not supported but can be upgraded to general-purpose v2 with no downtime. For more information, see Upgrade to a GPv2 storage account.

- By default, the change feed retains logs indefinitely. You can configure the retention period in days to define how long logs are preserved before they are automatically deleted. You can set the retention period when you first enable the change feed, or change it later through the Data protection option under Data management in the Azure portal.

- To delete all existing change feed logs, set the retention period to 1 day, and then disable the change feed.

Enable the change feed on your storage account by using the Azure portal:

1. In the Azure portal, select your storage account.

2. Navigate to the Data protection option under Data management.

3. Under Tracking, select Enable blob change feed.

4. Choose the Save button to confirm your data protection settings.
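For template-driven deployments, the same setting can be expressed as an Azure Resource Manager resource. The following is a minimal sketch that builds such a resource fragment in Python and prints it as JSON. The changeFeed property names (enabled, retentionInDays) reflect the Microsoft.Storage blobServices template schema as understood here; the account name, the API version, and the seven-day retention are placeholder assumptions, so verify them against the current template reference before use.

```python
import json


def change_feed_resource(account_name, retention_days=None, api_version="2022-09-01"):
    """Build an ARM template resource fragment that enables the change feed.

    account_name, api_version, and retention_days are illustrative
    assumptions. Leaving retention_days unset omits the retention
    property, which corresponds to keeping logs indefinitely.
    """
    change_feed = {"enabled": True}
    if retention_days is not None:
        change_feed["retentionInDays"] = retention_days
    return {
        "type": "Microsoft.Storage/storageAccounts/blobServices",
        "apiVersion": api_version,
        "name": f"{account_name}/default",
        "properties": {"changeFeed": change_feed},
    }


if __name__ == "__main__":
    # "mystorageaccount" is a hypothetical account name.
    print(json.dumps(change_feed_resource("mystorageaccount", retention_days=7), indent=2))
```

Setting enabled to False in the same fragment would express the disable operation.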

Consume the change feed

The change feed produces several metadata and log files. These files are located in the $blobchangefeed container of the storage account. The $blobchangefeed container can be viewed either via the Azure portal or via Azure Storage Explorer.

Your client applications can consume the change feed by using the blob change feed processor library that is provided with the change feed processor SDK. To learn how to process records in the change feed, see Process change feed logs in Azure Blob Storage.

Change feed segments

The change feed is a log of changes that is organized into hourly segments but appended to and updated every few minutes. A segment is created only when blob change events occur in that hour. This enables your client application to consume changes that occur within specific ranges of time without having to search through the entire log. To learn more, see the Specifications.

An available hourly segment of the change feed is described in a manifest file that specifies the paths to the change feed files for that segment. The listing of the $blobchangefeed/idx/segments/ virtual directory shows these segments ordered by time. The path of the segment describes the start of the hourly time-range that the segment represents. You can use that list to filter out the segments of logs that are of interest to you.

| Name | Blob Type | Blob Tier | Length | Content Type |
|---|---|---|---|---|
| $blobchangefeed/idx/segments/1601/01/01/0000/meta.json | BlockBlob | | 584 | application/json |
| $blobchangefeed/idx/segments/2019/02/22/1810/meta.json | BlockBlob | | 584 | application/json |
| $blobchangefeed/idx/segments/2019/02/22/1910/meta.json | BlockBlob | | 584 | application/json |
| $blobchangefeed/idx/segments/2019/02/23/0110/meta.json | BlockBlob | | 584 | application/json |

Note

The $blobchangefeed/idx/segments/1601/01/01/0000/meta.json file is created automatically when you enable the change feed. It is an initialization file that is always empty, so you can safely ignore it.
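Because each segment path encodes the start of its hour, a client can select segments by time without opening any manifest. The following is a minimal sketch of that filtering, assuming the path layout shown in the listing and assuming the times in the path are UTC (the helper names are hypothetical, not part of any SDK):

```python
from datetime import datetime, timezone

SEGMENT_PREFIX = "$blobchangefeed/idx/segments/"
PLACEHOLDER_YEAR = 1601  # year used by the always-empty initialization segment


def segment_start(path):
    """Parse the start time of a segment from its manifest path."""
    # After the prefix, the path looks like: 2019/02/22/1810/meta.json
    year, month, day, hhmm = path[len(SEGMENT_PREFIX):].split("/")[:4]
    return datetime(int(year), int(month), int(day),
                    int(hhmm[:2]), int(hhmm[2:]), tzinfo=timezone.utc)


def segments_between(paths, start, end):
    """Return the segment paths whose start time falls in [start, end)."""
    selected = []
    for path in paths:
        begin = segment_start(path)
        if begin.year == PLACEHOLDER_YEAR:
            continue  # skip the initialization file
        if start <= begin < end:
            selected.append(path)
    return selected


if __name__ == "__main__":
    listing = [
        "$blobchangefeed/idx/segments/1601/01/01/0000/meta.json",
        "$blobchangefeed/idx/segments/2019/02/22/1810/meta.json",
        "$blobchangefeed/idx/segments/2019/02/22/1910/meta.json",
        "$blobchangefeed/idx/segments/2019/02/23/0110/meta.json",
    ]
    window_start = datetime(2019, 2, 22, 18, 0, tzinfo=timezone.utc)
    window_end = datetime(2019, 2, 23, 0, 0, tzinfo=timezone.utc)
    for path in segments_between(listing, window_start, window_end):
        print(path)
```

The half-open interval is a design choice of this sketch; adjacent windows then never select the same segment twice.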

The segment manifest file (meta.json) shows the path of the change feed files for that segment in the chunkFilePaths property. Here's an example of a segment manifest file.

    {
      "version": 0,
      "begin": "2019-02-22T18:10:00.000Z",
      "intervalSecs": 3600,
      "status": "Finalized",
      "config": {
        "version": 0,
        "configVersionEtag": "0x8d698f0fba563db",
        "numShards": 2,
        "recordsFormat": "avro",
        "formatSchemaVersion": 1,
        "shardDistFnVersion": 1
      },
      "chunkFilePaths": [
        "$blobchangefeed/log/00/2019/02/22/1810/",
        "$blobchangefeed/log/01/2019/02/22/1810/"
      ],
      "storageDiagnostics": {
        "version": 0,
        "lastModifiedTime": "2019-02-22T18:11:01.187Z",
        "data": {
          "aid": "55e507bf-8006-0000-00d9-ca346706b70c"
        }
      }
    }

Note

The $blobchangefeed container appears only after you've enabled the change feed feature on your account. You'll have to wait a few minutes after you enable the change feed before you can list the blobs in the container.
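Once a segment manifest (meta.json) has been downloaded, locating the change feed files is a matter of reading its chunkFilePaths property. The following is a minimal sketch that works on the manifest text only; the function name is hypothetical, the sample is a trimmed copy of the manifest example, and returning nothing for a segment that is not yet Finalized is a conservative choice of this sketch rather than a documented requirement.

```python
import json

# Trimmed copy of the segment manifest example, used as sample input.
SAMPLE_MANIFEST = """
{
  "version": 0,
  "begin": "2019-02-22T18:10:00.000Z",
  "intervalSecs": 3600,
  "status": "Finalized",
  "chunkFilePaths": [
    "$blobchangefeed/log/00/2019/02/22/1810/",
    "$blobchangefeed/log/01/2019/02/22/1810/"
  ]
}
"""


def chunk_paths_if_finalized(manifest_text):
    """Return the chunk file paths of a manifest, or [] if it is not finalized."""
    manifest = json.loads(manifest_text)
    if manifest.get("status") != "Finalized":
        return []
    return list(manifest.get("chunkFilePaths", []))


if __name__ == "__main__":
    for path in chunk_paths_if_finalized(SAMPLE_MANIFEST):
        print(path)
```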

Change event records

The change feed files contain a series of change event records. Each change event record corresponds to one change to an individual blob. The records are serialized and written to the file in the Apache Avro format, so any library that implements the Avro file format specification can read them. Several such libraries are available.

Change feed files are stored in the $blobchangefeed/log/ virtual directory as append blobs. The first change feed file under each path has 00000 in the file name (for example, 00000.avro). The name of each subsequent log file added to that path increments by 1 (for example, 00001.avro).
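The naming rule above can be sketched in a few lines. The helper names are hypothetical, and the sketch assumes that the chunk path ends with a slash, as it does in the manifest example:

```python
def log_file_name(index):
    """Return the log file name for a zero-based index, for example 00000.avro."""
    if index < 0:
        raise ValueError("index must be non-negative")
    return f"{index:05d}.avro"


def log_file_path(chunk_path, index):
    """Join a chunk path from the manifest with the file name for the index."""
    return f"{chunk_path}{log_file_name(index)}"


if __name__ == "__main__":
    chunk = "$blobchangefeed/log/00/2019/02/22/1810/"
    for i in range(3):
        print(log_file_path(chunk, i))
```

In practice, listing the blobs under the chunk path is the safer way to discover the files, since that does not depend on guessing how many exist.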

Event record schemas

For a description of each property, see Azure Event Grid event schema for Blob Storage. The BlobPropertiesUpdated and BlobSnapshotCreated events are currently exclusive to change feed and not yet supported for Blob Storage Events.

Note

The change feed files for a segment don't appear immediately after the segment is created. The delay falls within the normal publishing latency of the change feed, which is within a few minutes of the change.

Schema version 1

The following event types may be captured in the change feed records with schema version 1:

- BlobCreated

- BlobDeleted

- BlobPropertiesUpdated

- BlobSnapshotCreated

The following example shows a change event record in JSON format that uses event schema version 1:

    {
      "schemaVersion": 1,
      "topic": "/subscriptions/<subscription>/resourceGroups/<resource-group>/providers/Microsoft.Storage/storageAccounts/<storage-account>",
      "subject": "/blobServices/default/containers/<container>/blobs/<blob>",
      "eventType": "BlobCreated",
      "eventTime": "2022-02-17T12:59:41.4003102Z",
      "id": "322343e3-8020-0000-00fe-233467066726",
      "data": {
        "api": "PutBlob",
        "clientRequestId": "f0270546-168e-4398-8fa8-107a1ac214d2",
        "requestId": "322343e3-8020-0000-00fe-233467000000",
        "etag": "0x8D9F2155CBF7928",
        "contentType": "application/octet-stream",
        "contentLength": 128,
        "blobType": "BlockBlob",
        "url": "https://www.myurl.com",
        "sequencer": "00000000000000010000000000000002000000000000001d",
        "storageDiagnostics": {
          "bid": "9d725a00-8006-0000-00fe-233467000000",
          "seq": "(2,18446744073709551615,29,29)",
          "sid": "4cc94e71-f6be-75bf-e7b2-f9ac41458e5a"
        }
      }
    }
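A consumer usually needs the container and blob names, which are carried in the subject property. The following is a minimal sketch of extracting them from a record that has already been decoded into a dictionary. The function name and the sample values are hypothetical; the sketch relies on container names not containing a slash, so the first /blobs/ separator marks the boundary.

```python
SUBJECT_PREFIX = "/blobServices/default/containers/"


def parse_subject(subject):
    """Split a change event subject into (container, blob)."""
    if not subject.startswith(SUBJECT_PREFIX):
        raise ValueError(f"unexpected subject: {subject!r}")
    rest = subject[len(SUBJECT_PREFIX):]
    # Blob names may contain slashes, so split only at the first "/blobs/".
    container, separator, blob = rest.partition("/blobs/")
    if not separator or not container or not blob:
        raise ValueError(f"unexpected subject: {subject!r}")
    return container, blob


if __name__ == "__main__":
    record = {
        "schemaVersion": 1,
        "subject": "/blobServices/default/containers/photos/blobs/2022/cat.png",
        "eventType": "BlobCreated",
    }
    container, blob = parse_subject(record["subject"])
    print(record["eventType"], container, blob)
```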

Schema version 3

The following event types may be captured in the change feed records with schema version 3:

- BlobCreated

- BlobDeleted

- BlobPropertiesUpdated

- BlobSnapshotCreated

The following example shows a change event record in JSON format that uses event schema version 3:

    {
      "schemaVersion": 3,
      "topic": "/subscriptions/<subscription>/resourceGroups/<resource-group>/providers/Microsoft.Storage/storageAccounts/<storage-account>",
      "subject": "/blobServices/default/containers/<container>/blobs/<blob>",
      "eventType": "BlobCreated",
      "eventTime": "2022-02-17T13:05:19.6798242Z",
      "id": "eefe8fc8-8020-0000-00fe-23346706daaa",
      "data": {
        "api": "PutBlob",
        "clientRequestId": "00c0b6b7-bb67-4748-a3dc-86464863d267",
        "requestId": "eefe8fc8-8020-0000-00fe-233467000000",
        "etag": "0x8D9F216266170DC",
        "contentType": "application/octet-stream",
        "contentLength": 128,
        "blobType": "BlockBlob",
        "url": "https://www.myurl.com",
        "sequencer": "00000000000000010000000000000002000000000000001d",
        "previousInfo": {
          "SoftDeleteSnapshot": "2022-02-17T13:08:42.4825913Z",
          "WasBlobSoftDeleted": "true",
          "BlobVersion": "2024-02-17T16:11:52.0781797Z",
          "LastVersion": "2022-02-17T16:11:52.0781797Z",
          "PreviousTier": "Hot"
        },
        "snapshot": "2022-02-17T16:09:16.7261278Z",
        "blobPropertiesUpdated": {
          "ContentLanguage": {
            "current": "pl-Pl",
            "previous": "nl-NL"
          },
          "CacheControl": {
            "current": "max-age=100",
            "previous": "max-age=99"
          },
          "ContentEncoding": {
            "current": "gzip, identity",
            "previous": "gzip"
          },
          "ContentMD5": {
            "current": "Q2h1Y2sgSW51ZwDIAXR5IQ==",
            "previous": "Q2h1Y2sgSW="
          },
          "ContentDisposition": {
            "current": "attachment",
            "previous": ""
          },
          "ContentType": {
            "current": "application/json",
            "previous": "application/octet-stream"
          }
        },
        "storageDiagnostics": {
          "bid": "9d726370-8006-0000-00ff-233467000000",
          "seq": "(2,18446744073709551615,29,29)",
          "sid": "4cc94e71-f6be-75bf-e7b2-f9ac41458e5a"
        }
      }
    }
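The blobPropertiesUpdated object pairs each property's current value with its previous one, which makes it straightforward to report what actually changed. The following is a minimal sketch, assuming the record has already been decoded into a dictionary shaped like the example; the function name is hypothetical.

```python
def changed_properties(record):
    """Map each updated property name to a (previous, current) tuple.

    Entries whose previous and current values are equal are left out.
    """
    updates = record.get("data", {}).get("blobPropertiesUpdated", {})
    return {
        name: (pair.get("previous"), pair.get("current"))
        for name, pair in updates.items()
        if pair.get("previous") != pair.get("current")
    }


if __name__ == "__main__":
    record = {
        "schemaVersion": 3,
        "eventType": "BlobPropertiesUpdated",
        "data": {
            "blobPropertiesUpdated": {
                "CacheControl": {"current": "max-age=100", "previous": "max-age=99"},
                "ContentType": {
                    "current": "application/json",
                    "previous": "application/octet-stream",
                },
            }
        },
    }
    for name, (previous, current) in changed_properties(record).items():
        print(f"{name}: {previous!r} -> {current!r}")
```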

Schema version 4

The following event types may be captured in the change feed records with schema version 4:

- BlobCreated

- BlobDeleted

- BlobPropertiesUpdated

- BlobSnapshotCreated

- BlobTierChanged

- BlobAsyncOperationInitiated

- RestorePointMarkerCreated

The following example shows a change event record in JSON format that uses event schema version 4:

    {
      "schemaVersion": 4,
      "topic": "/subscriptions/<subscription>/resourceGroups/<resource-group>/providers/Microsoft.Storage/storageAccounts/<storage-account>",
      "subject": "/blobServices/default/containers/<container>/blobs/<blob>",
      "eventType": "BlobCreated",
      "eventTime": "2022-02-17T13:08:42.4835902Z",
      "id": "ca76bce1-8020-0000-00ff-23346706e769",
      "data": {
        "api": "PutBlob",
        "clientRequestId": "58fbfee9-6cf5-4096-9666-c42980beee65",
        "requestId": "ca76bce1-8020-0000-00ff-233467000000",
        "etag": "0x8D9F2169F42D701",
        "contentType": "application/octet-stream",
        "contentLength": 128,
        "blobType": "BlockBlob",
        "blobVersion": "2022-02-17T16:11:52.5901564Z",
        "containerVersion": "0000000000000001",
        "blobTier": "Archive",
        "url": "https://www.myurl.com",
        "sequencer": "00000000000000010000000000000002000000000000001d",
        "previousInfo": {
          "SoftDeleteSnapshot": "2022-02-17T13:08:42.4825913Z",
          "WasBlobSoftDeleted": "true",
          "BlobVersion": "2024-02-17T16:11:52.0781797Z",
          "LastVersion": "2022-02-17T16:11:52.0781797Z",
          "PreviousTier": "Hot"
        },
        "snapshot": "2022-02-17T16:09:16.7261278Z",
        "blobPropertiesUpdated": {
          "ContentLanguage": {
            "current": "pl-Pl",
            "previous": "nl-NL"
          },
          "CacheControl": {
            "current": "max-age=100",
            "previous": "max-age=99"
          },
          "ContentEncoding": {
            "current": "gzip, identity",
            "previous": "gzip"
          },
          "ContentMD5": {
            "current": "Q2h1Y2sgSW51ZwDIAXR5IQ==",
            "previous": "Q2h1Y2sgSW="
          },
          "ContentDisposition": {
            "current": "attachment",
            "previous": ""
          },
          "ContentType": {
            "current": "application/json",
            "previous": "application/octet-stream"
          }
        },
        "asyncOperationInfo": {
          "DestinationTier": "Hot",
          "WasAsyncOperation": "true",
          "CopyId": "copyId"
        },
        "storageDiagnostics": {
          "bid": "9d72687f-8006-0000-00ff-233467000000",
          "seq": "(2,18446744073709551615,29,29)",
          "sid": "4cc94e71-f6be-75bf-e7b2-f9ac41458e5a"
        }
      }
    }

Schema version 5

The following event types may be captured in the change feed records with schema version 5:

- BlobCreated

- BlobDeleted

- BlobPropertiesUpdated

- BlobSnapshotCreated

- BlobTierChanged

- BlobAsyncOperationInitiated

The following example shows a change event record in JSON format that uses event schema version 5:

    {
      "schemaVersion": 5,
      "topic": "/subscriptions/<subscription>/resourceGroups/<resource-group>/providers/Microsoft.Storage/storageAccounts/<storage-account>",
      "subject": "/blobServices/default/containers/<container>/blobs/<blob>",
      "eventType": "BlobCreated",
      "eventTime": "2022-02-17T13:12:11.5746587Z",
      "id": "62616073-8020-0000-00ff-233467060cc0",
      "data": {
        "api": "PutBlob",
        "clientRequestId": "b3f9b39a-ae5a-45ac-afad-95ac9e9f2791",
        "requestId": "62616073-8020-0000-00ff-233467000000",
        "etag": "0x8D9F2171BE32588",
        "contentType": "application/octet-stream",
        "contentLength": 128,
        "blobType": "BlockBlob",
        "blobVersion": "2022-02-17T16:11:52.5901564Z",
        "containerVersion": "0000000000000001",
        "blobTier": "Archive",
        "url": "https://www.myurl.com",
        "sequencer": "00000000000000010000000000000002000000000000001d",
        "previousInfo": {
          "SoftDeleteSnapshot": "2022-02-17T13:12:11.5726507Z",
          "WasBlobSoftDeleted": "true",
          "BlobVersion": "2024-02-17T16:11:52.0781797Z",
          "LastVersion": "2022-02-17T16:11:52.0781797Z",
          "PreviousTier": "Hot"
        },
        "snapshot": "2022-02-17T16:09:16.7261278Z",
        "blobPropertiesUpdated": {
          "ContentLanguage": {
            "current": "pl-Pl",
            "previous": "nl-NL"
          },
          "CacheControl": {
            "current": "max-age=100",
            "previous": "max-age=99"
          },
          "ContentEncoding": {
            "current": "gzip, identity",
            "previous": "gzip"
          },
          "ContentMD5": {
            "current": "Q2h1Y2sgSW51ZwDIAXR5IQ==",
            "previous": "Q2h1Y2sgSW="
          },
          "ContentDisposition": {
            "current": "attachment",
            "previous": ""
          },
          "ContentType": {
            "current": "application/json",
            "previous": "application/octet-stream"
          }
        },
        "asyncOperationInfo": {
          "DestinationTier": "Hot",
          "WasAsyncOperation": "true",
          "CopyId": "copyId"
        },
        "blobTagsUpdated": {
          "previous": {
            "Tag1": "Value1_3",
            "Tag2": "Value2_3"
          },
          "current": {
            "Tag1": "Value1_4",
            "Tag2": "Value2_4"
          }
        },
        "restorePointMarker": {
          "rpi": "cbd73e3d-f650-4700-b90c-2f067bce639c",
          "rpp": "cbd73e3d-f650-4700-b90c-2f067bce639c",
          "rpl": "test-restore-label",
          "rpt": "2022-02-17T13:56:09.3559772Z"
        },
        "storageDiagnostics": {
          "bid": "9d726db1-8006-0000-00ff-233467000000",
          "seq": "(2,18446744073709551615,29,29)",
          "sid": "4cc94e71-f6be-75bf-e7b2-f9ac41458e5a"
        }
      }
    }
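Unlike blobPropertiesUpdated, the blobTagsUpdated object carries two whole tag sets, previous and current, so a consumer has to compare them to see which tags were added, removed, or modified. The following is a minimal sketch of that comparison on a decoded record; the function name is hypothetical.

```python
def tag_changes(record):
    """Compare previous and current blob tags.

    Returns three dictionaries: added (name to value), removed (name to
    value), and modified (name to a (previous, current) tuple).
    """
    tags = record.get("data", {}).get("blobTagsUpdated", {})
    previous = tags.get("previous", {})
    current = tags.get("current", {})
    added = {k: current[k] for k in current.keys() - previous.keys()}
    removed = {k: previous[k] for k in previous.keys() - current.keys()}
    modified = {
        k: (previous[k], current[k])
        for k in previous.keys() & current.keys()
        if previous[k] != current[k]
    }
    return added, removed, modified


if __name__ == "__main__":
    record = {
        "schemaVersion": 5,
        "data": {
            "blobTagsUpdated": {
                "previous": {"Tag1": "Value1_3", "Tag2": "Value2_3"},
                "current": {"Tag1": "Value1_4", "Tag2": "Value2_4"},
            }
        },
    }
    added, removed, modified = tag_changes(record)
    print("added:", added)
    print("removed:", removed)
    print("modified:", modified)
```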

Schema version 6

The following event types may be captured in the change feed records with schema version 6:

- BlobCreated

- BlobDeleted

- BlobPropertiesUpdated

- BlobSnapshotCreated

- BlobTierChanged

- BlobAsyncOperationInitiated

Schema version 6 adds support for cold tier.

The following example shows a change event record in JSON format that uses event schema version 6:

    {
      "schemaVersion": 6,
      "topic": "/subscriptions/<subscription>/resourceGroups/<resource-group>/providers/Microsoft.Storage/storageAccounts/<storage-account>",
      "subject": "/blobServices/default/containers/<container>/blobs/<blob>",
      "eventType": "BlobCreated",
      "eventTime": "2023-10-11T13:12:11.5746587Z",
      "id": "62616073-8020-0000-00ff-233467060cc0",
      "data": {
        "api": "PutBlob",
        "clientRequestId": "b3f9b39a-ae5a-45ac-afad-95ac9e9f2791",
        "requestId": "62616073-8020-0000-00ff-233467000000",
        "etag": "0x8D9F2171BE32588",
        "contentType": "application/octet-stream",
        "contentLength": 128,
        "blobType": "BlockBlob",
        "blobVersion": "2023-10-11T16:11:52.5901564Z",
        "containerVersion": "0000000000000001",
        "blobTier": "Archive",
        "url": "https://www.myurl.com",
        "sequencer": "00000000000000010000000000000002000000000000001d",
        "previousInfo": {
          "SoftDeleteSnapshot": "2023-10-11T13:12:11.5726507Z",
          "WasBlobSoftDeleted": "true",
          "BlobVersion": "2024-02-17T16:11:52.0781797Z",
          "LastVersion": "2023-10-11T16:11:52.0781797Z",
          "PreviousTier": "Hot"
        },
        "snapshot": "2023-10-11T16:09:16.7261278Z",
        "blobPropertiesUpdated": {
          "ContentLanguage": {
            "current": "pl-Pl",
            "previous": "nl-NL"
          },
          "CacheControl": {
            "current": "max-age=100",
            "previous": "max-age=99"
          },
          "ContentEncoding": {
            "current": "gzip, identity",
            "previous": "gzip"
          },
          "ContentMD5": {
            "current": "Q2h1Y2sgSW51ZwDIAXR5IQ==",
            "previous": "Q2h1Y2sgSW="
          },
          "ContentDisposition": {
            "current": "attachment",
            "previous": ""
          },
          "ContentType": {
            "current": "application/json",
            "previous": "application/octet-stream"
          }
        },
        "asyncOperationInfo": {
          "DestinationTier": "Hot",
          "WasAsyncOperation": "true",
          "CopyId": "copyId"
        },
        "blobTagsUpdated": {
          "previous": {
            "Tag1": "Value1_3",
            "Tag2": "Value2_3"
          },
          "current": {
            "Tag1": "Value1_4",
            "Tag2": "Value2_4"
          }
        },
        "restorePointMarker": {
          "rpi": "cbd73e3d-f650-4700-b90c-2f067bce639c",
          "rpp": "cbd73e3d-f650-4700-b90c-2f067bce639c",
          "rpl": "test-restore-label",
          "rpt": "2023-10-11T13:56:09.3559772Z"
        },
        "storageDiagnostics": {
          "bid": "9d726db1-8006-0000-00ff-233467000000",
          "seq": "(2,18446744073709551615,29,29)",
          "sid": "4cc94e71-f6be-75bf-e7b2-f9ac41458e5a"
        }
      }
    }
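Because the set of event types differs between schema versions, a consumer may want to check each record against the lists given for schema versions 1, 3, 4, 5, and 6. The following is a minimal sketch of such a lookup. The table simply restates those lists, and the function name is hypothetical; a record with an unlisted version or event type is reported as unknown rather than rejected.

```python
BASE_EVENTS = frozenset({
    "BlobCreated",
    "BlobDeleted",
    "BlobPropertiesUpdated",
    "BlobSnapshotCreated",
})
TIERING_EVENTS = BASE_EVENTS | {"BlobTierChanged", "BlobAsyncOperationInitiated"}

# Event types listed for each schema version in this article.
EVENT_TYPES_BY_SCHEMA = {
    1: BASE_EVENTS,
    3: BASE_EVENTS,
    4: TIERING_EVENTS | {"RestorePointMarkerCreated"},
    5: TIERING_EVENTS,
    6: TIERING_EVENTS,
}


def is_listed_event(record):
    """Return True if the record's event type is listed for its schema version."""
    listed = EVENT_TYPES_BY_SCHEMA.get(record.get("schemaVersion"), frozenset())
    return record.get("eventType") in listed


if __name__ == "__main__":
    samples = [
        {"schemaVersion": 6, "eventType": "BlobTierChanged"},
        {"schemaVersion": 1, "eventType": "BlobTierChanged"},
        {"schemaVersion": 4, "eventType": "RestorePointMarkerCreated"},
    ]
    for sample in samples:
        status = "listed" if is_listed_event(sample) else "unknown"
        print(sample["schemaVersion"], sample["eventType"], status)
```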

Specifications

- Change event records are only appended to the change feed. Once these records are appended, they are immutable and their position is stable. Client applications can maintain their own checkpoint on the read position of the change feed.

- Change event records are appended within a few minutes of the change. Client applications can choose to consume records as they are appended for streaming access or in bulk at any other time.

- Change event records are ordered by modification order per blob. Order of changes across blobs is undefined in Azure Blob Storage. All changes in a prior segment are before any changes in subsequent segments.

- Change event records are serialized into the log file by using the Apache Avro 1.8.2 format specification.

- Change event records where the eventType has a value of Control are internal system records and don't reflect a change to objects in your account. You can safely ignore those records.

- Values in the storageDiagnostics property bag are for internal use only and not designed for use by your application. Your applications shouldn't have a contractual dependency on that data. You can safely ignore those properties.

- The time represented by the segment is approximate with bounds of 15 minutes. To ensure consumption of all records within a specified time, also consume the consecutive previous and next hour segments.

- Each segment can have a different number of chunkFilePaths due to internal partitioning of the log stream to manage publishing throughput. The log files in each chunkFilePath are guaranteed to contain mutually exclusive blobs and can be consumed and processed in parallel without violating the ordering of modifications per blob during the iteration.

- Segments start out in Publishing status. Once the appending of records to the segment is complete, its status is Finalized. Your application should not consume log files in any segment that is dated after the date of the LastConsumable property in the $blobchangefeed/meta/Segments.json file. Here's an example of the LastConsumable property in a $blobchangefeed/meta/Segments.json file:

    {
      "version": 0,
      "lastConsumable": "2019-02-23T01:10:00.000Z",
      "storageDiagnostics": {
        "version": 0,
        "lastModifiedTime": "2019-02-23T02:24:00.556Z",
        "data": {
          "aid": "55e551e3-8006-0000-00da-ca346706bfe4",
          "lfz": "2019-02-22T19:10:00.000Z"
        }
      }
    }
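The rule about the LastConsumable property can be applied mechanically once the Segments.json file has been downloaded. The following is a minimal sketch that keeps only the segments whose start time is not later than lastConsumable. The function names are hypothetical, and the sketch assumes that timestamps use the format shown in the example, with fractional seconds and a trailing Z.

```python
import json
from datetime import datetime, timezone


def parse_utc(timestamp):
    """Parse a timestamp such as 2019-02-23T01:10:00.000Z as UTC."""
    parsed = datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%S.%fZ")
    return parsed.replace(tzinfo=timezone.utc)


def consumable_segments(segments_json_text, segment_begins):
    """Keep the segment start times that are not after lastConsumable."""
    last_consumable = parse_utc(json.loads(segments_json_text)["lastConsumable"])
    return [begin for begin in segment_begins if parse_utc(begin) <= last_consumable]


if __name__ == "__main__":
    segments_json = '{"version": 0, "lastConsumable": "2019-02-23T01:10:00.000Z"}'
    begins = [
        "2019-02-22T18:10:00.000Z",
        "2019-02-23T01:10:00.000Z",
        "2019-02-23T02:10:00.000Z",  # dated after lastConsumable, so skipped
    ]
    for begin in consumable_segments(segments_json, begins):
        print(begin)
```

The values in storageDiagnostics are deliberately not read, in line with the guidance that they are for internal use only.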

Conditions and known issues

This section describes known issues and conditions in the current release of the change feed.

- If you enable firewall rules for your storage account, lifecycle management requests to delete blobs within the $blobchangefeed container might be blocked. You can unblock these requests by providing exceptions for trusted Microsoft services. For more information, see the Exceptions section in Configure firewalls and virtual networks.

- The LastConsumable property of the segments.json file does not list the very first segment that the change feed finalizes. This issue occurs only after the first segment is finalized. All subsequent segments after the first hour are accurately captured in the LastConsumable property.

- You currently cannot see the $blobchangefeed container when you call the ListContainers API. You can view the contents by calling the ListBlobs API on the $blobchangefeed container directly.

- Storage account failover of geo-redundant storage accounts with the change feed enabled may result in inconsistencies between the change feed logs and the blob data and/or metadata. For more information about such inconsistencies, see Change feed and blob data inconsistencies.

- You might see 404 (Not Found) and 412 (Precondition Failed) errors reported on the $blobchangefeed container. You can safely ignore these errors.

- BlobDeleted events are not generated when blob versions or snapshots are deleted. A BlobDeleted event is added only when a base (root) blob is deleted.

- Event records are added only for changes to blobs that result from requests to the Blob Service endpoint (blob.core.windows.net). Changes that result from requests to the Data Lake Storage endpoint (dfs.core.windows.net) aren't logged and won't appear in change feed records.
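When you audit which clients produce change feed records, it can help to classify the request URLs they use by endpoint. The following is a minimal sketch that distinguishes the two endpoints named above by host suffix. The function names and the sample account are hypothetical, and the suffixes are the public-cloud ones from this section; other clouds or custom domains would need their own suffixes.

```python
from urllib.parse import urlparse

BLOB_SUFFIX = ".blob.core.windows.net"
DFS_SUFFIX = ".dfs.core.windows.net"


def endpoint_kind(url):
    """Classify a storage URL as 'blob', 'dfs', or 'other' by its host name."""
    host = (urlparse(url).hostname or "").lower()
    if host.endswith(BLOB_SUFFIX):
        return "blob"
    if host.endswith(DFS_SUFFIX):
        return "dfs"
    return "other"


def uses_blob_endpoint(url):
    """Return True if the URL targets the Blob Service endpoint."""
    return endpoint_kind(url) == "blob"


if __name__ == "__main__":
    # "myaccount" is a hypothetical storage account name.
    for url in (
        "https://myaccount.blob.core.windows.net/photos/cat.png",
        "https://myaccount.dfs.core.windows.net/photos/cat.png",
    ):
        print(url, endpoint_kind(url))
```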

Frequently asked questions (FAQ)

Feature support

Support for this feature might be impacted by enabling Data Lake Storage Gen2, Network File System (NFS) 3.0 protocol, or the SSH File Transfer Protocol (SFTP). If you've enabled any of these capabilities, see Blob Storage feature support in Azure Storage accounts to assess support for this feature.