Configure voice capabilities

This article describes the features available in Copilot Studio for interactive voice response with Dynamics 365 for Customer Service.

To get your copilot ready for voice services, see Integrate a voice-enabled copilot with Dynamics 365 for Customer Service.

For an overview of the voice services, see Use interactive voice response in your copilots.

Speech & DTMF modality

A voice-enabled copilot is different from a chat-based copilot. The voice-enabled copilot includes specific voice system topics for handling voice scenarios. A chat-based copilot uses the text modality as the default. A voice-enabled copilot uses the Speech & DTMF modality. The two modalities aren't compatible with each other.

Optimize for voice allows you to author voice-enabled copilots across different modalities and ensures speech-related features are authored correctly.

Optimize for voice

If you didn't start your copilot with the Voice template, you must enable the Optimize for voice option in the copilot's Settings.

With a copilot open, go to Settings > Voice.

Select Optimize for voice. The Use voice as primary authoring mode option is also set by default.

Your copilot gets the following updates when you enable Optimize for voice and Use voice as primary authoring mode options:

- The ability to author voice features when switched from text to Speech & DTMF.

- The voice System topics Silence detection, Speech unrecognized, and Unknown dialpad press are automatically added to handle speech-related scenarios.

- Increase accuracy with copilot data (on by default), which improves speech recognition accuracy.

- There’s no change to the existing copilot flow, such as the Main Menu topic to start conversations with mapped DTMF triggers.

Important

- The Optimize for voice setting only changes the voice authoring capabilities, not the channel setting. Turn on the Telephony channel for a fully voice-enabled copilot.

- In addition, setting Optimize for voice on a copilot that wasn't originally configured for voice features means that the copilot won't have the Main Menu (preview) topic. You must recreate that topic, if needed.

Disable optimization for voice

You can disable Optimize for voice in copilot authoring if you don’t enable the Telephony channel. After you disable Optimize for voice, you get the following changes:

- No copilot authoring for voice features, such as DTMF and barge-in.

- The default text modality is set.

- No improvement to speech recognition, since there's no speech recognition.

- No voice system topics or global DTMF topic.

Note

Some topics might report errors during publish if the disabled DTMF topic is referenced in other topics.

- No change to your copilot flow and channel setting, since disabling optimization doesn't turn off the Telephony channel.

- Enabling or disabling the Optimize for voice option doesn't take effect until you publish your copilot. If the option is turned on or off accidentally and the copilot switches between modalities, you have time to fix it before you publish.

Important

If your Telephony channels are enabled, disabling Optimize for voice can break your copilot, since all DTMF triggers are automatically disabled.

Use voice as your primary authoring mode

The Speech & DTMF modality should be selected for each node in voice feature authoring. You can set the copilot authoring preference to Use voice as primary authoring mode. This setting ensures all input fields have the right modality. If you already enabled Optimize for voice, the Use voice as primary authoring mode option is enabled by default.

Message availability

Using the text or speech modality can affect your channel differently.

| Text modality | Speech modality | Copilot text & speech channel |
|---|---|---|
| Message available | Message empty | Message available |
| Message empty | Message available | Message not available |

Customized automatic speech recognition

Voice-enabled copilots for a specific domain, such as medical or finance, might see users use finance terms or medical jargon. Some terms and jargon are hard for the voice-enabled copilot to convert from speech to text.

To ensure the speech input is recognized accurately, you can improve speech recognition:

With your copilot open, select Settings > Voice.

Select Increase accuracy with copilot data to enable the copilot's default customized automatic speech recognition settings.

Select Save to commit your changes.

Publish your copilot to see the new changes.

Copilot-level voice options reference

The Copilot details settings page lets you configure timeouts for various voice-related features. Settings applied in this page become the default for topics created in your copilot.

To make changes to the copilot-level timeout options:

With a copilot open, select Settings > Voice.

Select the settings you want and adjust the copilot's default settings.

Select Save to commit your changes.

Copilot-level settings

The following table lists each option and how it relates to node-level settings.

| Voice-enabled copilot-level section | Setting | Description | Default value | Node-level override |
|---|---|---|---|---|
| DTMF | Interdigit timeout | Maximum time (milliseconds) allowed while waiting for the next DTMF key input. Applies to multi-digit DTMF input only, when users don't meet the maximum input length. | 3000 ms | Question node with voice properties for Multi-digit DTMF input |
| DTMF | Termination timeout | Maximum duration (milliseconds) to wait for a DTMF termination key. The limit applies when the user reaches the maximum input length without pressing the termination key. Applies only to multi-digit DTMF input. If the limit expires and the terminating DTMF key doesn't arrive, the copilot ends the recognition and returns the result up to that point. If set to "continue without waiting," the copilot doesn't wait for the termination key and returns immediately after the user inputs the maximum length. | 2000 ms | Question node with voice properties for Multi-digit DTMF input |
| Silence detection | Silence detection timeout | Maximum silence (milliseconds) allowed while waiting for user input. The limit applies when the copilot doesn't detect any user input. The default is "no silence timeout," which means the copilot waits indefinitely for the user's input. Silence detection for voice times the period after the voice finishes speaking. | No silence timeout | Question node with voice properties for Multi-digit DTMF input; System Topic (silence detection trigger properties) for Configure silence detection and timeouts |
| Speech collection | Utterance end timeout | The limit applies when the user pauses during or after speech. If the pause is longer than the timeout limit, the copilot presumes the user finished speaking. The maximum value for utterance end timeout is 3000 milliseconds. Anything above 3000 ms is reduced to 3000 ms. | 1500 ms | Question node with voice properties |
| Speech collection | Speech recognition timeout | Determines how much time the copilot allows for the user's input once they begin speaking. The default value is 12,000 milliseconds (12 seconds). No recognition timeout means infinite time. If the user's speech runs beyond the speech recognition timeout without a response, the copilot reprompts the question. | 12,000 ms | Question node with voice properties |
| Latency messaging | Send message delay | Determines how long the copilot waits before delivering the latency message after a background operation request started. The timing is set in milliseconds. | 500 ms | Action node properties for long-running operation |
| Latency messaging | Minimum playback time | The latency message plays for a minimum amount of time, even if the background operation completes while the message is playing. The timing is set in milliseconds. | 5000 ms | Action node properties for long-running operation |
| Speech sensitivity | Sensitivity | Controls how the system balances detection of speech and background noise. Lower the sensitivity for noisy environments, public spaces, and hands-free operation. Increase the sensitivity for quiet environments, soft-spoken users, or voice-command detection. | 0.5 | There are no node-level overrides for this control. |
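As a quick reference, the defaults in the table above can be written down as a small data structure. The following Python sketch is illustrative only: the class name, field names, and the helper function are hypothetical and aren't part of any Copilot Studio API. It also shows the documented rule that an utterance end timeout above 3000 ms is reduced to 3000 ms.

```python
from dataclasses import dataclass
from typing import Optional

# Documented maximum for the utterance end timeout.
MAX_UTTERANCE_END_TIMEOUT_MS = 3000


@dataclass(frozen=True)
class VoiceDefaults:
    """Copilot-level defaults from the table (field names are hypothetical)."""
    interdigit_timeout_ms: int = 3000
    termination_timeout_ms: int = 2000
    # None stands for "no silence timeout" (the copilot waits indefinitely).
    silence_detection_timeout_ms: Optional[int] = None
    utterance_end_timeout_ms: int = 1500
    speech_recognition_timeout_ms: int = 12000
    send_message_delay_ms: int = 500
    minimum_playback_time_ms: int = 5000
    sensitivity: float = 0.5


def clamp_utterance_end_timeout(requested_ms: int) -> int:
    """Reduce any requested value above 3000 ms to 3000 ms."""
    if requested_ms < 0:
        raise ValueError("timeout must not be negative")
    return min(requested_ms, MAX_UTTERANCE_END_TIMEOUT_MS)


defaults = VoiceDefaults()
print(defaults.utterance_end_timeout_ms)      # the 1500 ms default
print(clamp_utterance_end_timeout(4500))      # reduced to the 3000 ms maximum
```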

Enable barge-in

Enabling barge-in allows your copilot users to interrupt your copilot. This feature can be useful when you don't need the copilot user to hear the entire message. For example, callers might already know the menu options, because they heard them in the past. With barge-in, the copilot user can enter the option they want, even if the copilot isn't finished listing all the options.

Barge-in disable scenarios

- Disable barge-in if you recently updated a copilot message or if the compliance message shouldn't be interrupted.

- Disable barge-in for the first copilot message to ensure copilot users are aware of new or essential information.

Specifications

Barge-in supports DTMF-based and voice-based interruptions from the copilot user.

Barge-in can be controlled with each message, in one batch. Place barge-in-disabled nodes in sequence before each node where barge-in is allowed. Otherwise, barge-in-disabled is treated as an allow-barge-in message.

Once one batch queue is finished, the automatic barge-in setting is reset for the next batch, and is controlled by the barge-in flag at each subsequent message. You can place barge-in-disabled nodes as the sequence starts again.

Tip

If there are consecutive message nodes, followed by a question node, voice messages for these nodes are defined as one batch. One batch starts with a message node and stops at the question node, which is waiting for the user’s input.

Avoid disabling barge-in for lengthy messages, especially if you expect copilot users to interact with the copilot often. If your copilot user already knows the menu options, let them go straight to the option they want.
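The batch rule described in this section can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not how Copilot Studio is implemented: the `Node` class and both function names are hypothetical. It groups nodes into batches (consecutive message nodes ending at a question node) and flags barge-in-disabled messages that come after an allow-barge-in message in the same batch, which the specification says are treated as allow-barge-in.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Node:
    kind: str                    # "message" or "question" (hypothetical model)
    allow_barge_in: bool = True


def split_into_batches(nodes: List[Node]) -> List[List[Node]]:
    """A batch runs from a message node up to and including the next question node."""
    batches, current = [], []
    for node in nodes:
        current.append(node)
        if node.kind == "question":
            batches.append(current)
            current = []
    if current:                  # trailing messages with no closing question
        batches.append(current)
    return batches


def ineffective_disabled(batch: List[Node]) -> List[int]:
    """Indexes of barge-in-disabled nodes placed after an allowed node in the batch."""
    seen_allowed = False
    flagged = []
    for index, node in enumerate(batch):
        if node.allow_barge_in:
            seen_allowed = True
        elif seen_allowed:
            flagged.append(index)
    return flagged


topic = [
    Node("message", allow_barge_in=False),   # compliance message, placed first
    Node("message", allow_barge_in=True),
    Node("message", allow_barge_in=False),   # placed too late in the batch
    Node("question"),
    Node("message", allow_barge_in=False),   # new batch, so the flag applies again
    Node("question"),
]
for batch in split_into_batches(topic):
    print(len(batch), ineffective_disabled(batch))
```

In this model, a check like `ineffective_disabled` could help spot messages that were meant to be uninterruptible but sit behind an interruptible one.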

Set up barge-in

With a Message or Question node selected, set the desired modality to Speech & DTMF.

Select the More icon (…) of the node, and then select Properties.

For Message nodes, the Send activity properties panel opens on the side of the authoring canvas.

Select Allow barge-in.

For Question nodes, the Question properties panel opens, then select Voice.

From the Voice properties, select Allow barge-in.

Save the topic to commit your changes.

Configure silence detection and timeouts

Silence detection lets you configure how long the copilot waits for user input and the action it takes if no input is received. Silence detection is most useful in response to a question at the node level or when the copilot waits for a trigger phrase to begin a new topic.

You can configure the default timeouts for topics.

To override the defaults for a node:

Select the More icon (…) of the node, and then select Properties.

The Question properties panel opens.

Select Voice and make adjustments to the following settings:

| Silence detection timeout option | Description |
|---|---|
| Use copilot setting | Node uses the global setting for silence detection. |
| Disable for this node | The copilot waits indefinitely for a response. |
| Customize in milliseconds | The copilot waits for a specified time before repeating the question. |

Fallback action

You can configure some behaviors as a fallback action:

- How many times the copilot should repeat a question

- What the reprompt message should say

- What the copilot should do after a specified number of repeats

Speech input

For speech input you can specify:

- Utterance end timeout: How long the copilot waits after the user finishes speaking

- Speech recognition timeout: How much time the copilot gives to the user once they start responding

To configure silence detection behavior when your copilot waits for a trigger phrase, adjust the settings in the On silence system topic.
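The three node-level silence detection timeout options can be summarized as a small resolution function. The Python below is a sketch of the logic only: the option strings and the function name are hypothetical, and `None` is used here to stand for "wait indefinitely."

```python
from typing import Optional


def effective_silence_timeout(
    option: str,
    copilot_default_ms: Optional[int],
    custom_ms: Optional[int] = None,
) -> Optional[int]:
    """Return the silence timeout in milliseconds for a node, or None to wait indefinitely."""
    if option == "use_copilot_setting":
        # Node uses the global (copilot-level) setting.
        return copilot_default_ms
    if option == "disable_for_this_node":
        # The copilot waits indefinitely for a response.
        return None
    if option == "customize":
        if custom_ms is None or custom_ms <= 0:
            raise ValueError("a positive custom timeout is required")
        # The copilot waits this long before repeating the question.
        return custom_ms
    raise ValueError(f"unknown option: {option}")


# The copilot-level default is "no silence timeout", modeled as None.
print(effective_silence_timeout("use_copilot_setting", None))
print(effective_silence_timeout("customize", None, custom_ms=8000))
```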

Add a latency message for long running operations

For long backend operations, your copilot can send a message to users to notify them of the longer processes. Copilots on a messaging channel can also send a latency message.

| Latency message audio playback | Latency message in chat |
|---|---|
| Continues to loop until the operation completes. | Sent only once when the specified latency is hit. |

In Copilot Studio, your copilot can repeat a message after triggering a Power Automate flow:

Select the More icon (…) of the node, and then select Properties. The Action properties panel opens.

Select Send a message.

In the Message section, enter what you want the copilot to say. You can use SSML to modify the sound of the message. The copilot repeats the message until the flow is complete.

You can adjust how long the copilot should wait before repeating the message under the Delay section. You can set a minimum amount of time to wait, even if the flow completes.
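To see how the delay and the minimum playback time interact, the following Python sketch models the timeline of one long-running operation on a voice channel. It rests on two assumptions that the text doesn't state explicitly: no latency message plays if the operation finishes before the delay elapses, and playback stops as soon as both the operation is complete and the minimum playback time has passed. The function name is hypothetical, and the default values are taken from the copilot-level settings (500 ms and 5000 ms).

```python
from typing import Tuple


def latency_timeline(
    operation_ms: int,
    send_delay_ms: int = 500,
    min_playback_ms: int = 5000,
) -> Tuple[bool, int]:
    """Return (message_played, time_in_ms_when_the_conversation_continues)."""
    if operation_ms <= send_delay_ms:
        # Assumption: the operation finished before the delay, so nothing plays.
        return (False, operation_ms)
    # The message starts at send_delay_ms and loops until the operation completes,
    # but never stops before the minimum playback time has elapsed.
    playback_end = max(operation_ms, send_delay_ms + min_playback_ms)
    return (True, playback_end)


for duration in (300, 2000, 9000):
    print(duration, latency_timeline(duration))
```

Under these assumptions, a 2000 ms flow would keep the caller waiting until 5500 ms, which is one reason to keep the minimum playback time short for operations that usually finish quickly.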

Configure call termination

To configure your copilot to end the call and hang up, add a new node (+) then select Topic management > End conversation.

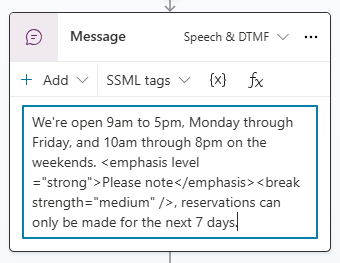

Format speech synthesis with SSML

You can use speech synthesis markup language (SSML) to change how the copilot sounds when it reads messages out loud. For example, you can change the pitch or frequency of the spoken words, the speed, and the volume.

SSML uses tags to enclose the text you want to modify, similar to HTML. You can use the following tags in Copilot Studio:

| SSML tag | Description | Link to speech service documentation |
|---|---|---|
| `<audio src="URL to an audio file"/>` | Add the URL to an audio file within the tag. The file must be accessible by the copilot user. | Add recorded audio |
| `<break />` | Insert pauses or breaks between words. Insert break options within the tag. | Add a break |
| `<emphasis> Text you want to modify </emphasis>` | Add levels of stress to words or phrases. Add emphasis options in the opening tag. Add the closing tag after the text you want to modify. | Adjust emphasis options |
| `<prosody> Text you want to modify </prosody>` | Specify changes to pitch, contour, range, rate, and volume. Add prosody options in the opening tag. Add the closing tag after the text you want to modify. | Adjust prosody options |

Note

For multilingual copilots, you must incorporate the <lang xml:lang> SSML tag. For more information, see Multilingual voices with the lang element.
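As an illustration of how these tags combine in one message, the Python sketch below builds a sample message and checks that its tags are balanced before you paste it into a node. The message text and the attribute values (`time`, `rate`, `level`) are examples drawn from standard SSML, not values prescribed by Copilot Studio. The `<speak>` wrapper is added only so the fragment can be parsed locally; whether a message needs it in Copilot Studio isn't covered here.

```python
import xml.etree.ElementTree as ET

# Tags listed in the table above.
TABLE_TAGS = {"audio", "break", "emphasis", "prosody"}

# Example message text (hypothetical content).
message = (
    'Thanks for calling. <break time="500ms"/> '
    '<prosody rate="slow">Your confirmation number is</prosody> '
    '<emphasis level="strong">4 2 7 1</emphasis>.'
)


def tags_used(fragment: str) -> set:
    """Parse an SSML fragment and return the tag names it uses.

    Raises xml.etree.ElementTree.ParseError if a tag is left unclosed.
    """
    root = ET.fromstring(f"<speak>{fragment}</speak>")
    return {element.tag for element in root.iter()} - {"speak"}


used = tags_used(message)
print(sorted(used))
print(used <= TABLE_TAGS)   # True when only tags from the table are used
```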

Find and use a tag

To add an SSML tag to a message:

With a Message or Question node selected, change the mode to Speech & DTMF.

Select the SSML tags menu and select a tag.

The message box is populated with the tag. If you already have text in the message box, the tag's code is appended to the end of your message.

Surround the text you want to modify with the opening and closing tags. You can combine multiple tags and customize individual parts of the message with individual tags.

Tip

You can manually enter SSML tags that don't appear in the helper menu. To learn more about other tags you can use, see Improve synthesis with Speech Synthesis Markup Language.

Transfer a call to a representative or external phone number

You can have the copilot transfer the call to an external phone number. Copilot Studio supports blind transfer to a PSTN phone number and to a direct routing number.

To transfer to an external phone number:

In the topic you want to modify, add a new node (+). In the node menu, select Topic management and then Transfer conversation.

Under Transfer type, select External phone number transfer and enter the transfer number.

(Optional) Add a SIP UUI header to the phone call.

This header is a string of key=value pairs, without spaces or special characters, displayed for external systems to read.

Select the More icon (…) of the node, and then select Properties. The Transfer conversation properties panel opens.

Under SIP UUI header, enter the information you want to send with the call transfer. Variables aren't supported when transferring to an external phone number.

Caution

Only the first 128 characters in the string are sent.

The header only accepts numbers, letters, equal signs (=), and semicolons (;). All other characters, including spaces, braces, brackets, and formulas, aren't supported and can cause the transfer to fail.

Tip

Include a + in your phone number for the corresponding country code.

Transfer egress with SIP UUI for the target phone number must use direct routing. Public switched telephone network (PSTN) phone numbers don't support SIP UUI header transfers.
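Because an invalid SIP UUI header can cause the transfer to fail, it can help to check the string before you enter it. The following Python sketch applies the two documented rules: only letters, numbers, equal signs, and semicolons are accepted, and only the first 128 characters are sent. The function names and the sample keys are hypothetical, and the sketch assumes "letters" and "numbers" mean ASCII letters and digits.

```python
import re
from typing import List

MAX_SENT_CHARS = 128
# Assumption: "numbers and letters" means ASCII digits and letters.
_ALLOWED = re.compile(r"[A-Za-z0-9=;]*")


def check_sip_uui_header(value: str) -> List[str]:
    """Return a list of problems found in a SIP UUI header string (empty if none)."""
    problems = []
    if not _ALLOWED.fullmatch(value):
        problems.append("contains characters other than letters, numbers, '=' and ';'")
    if len(value) > MAX_SENT_CHARS:
        problems.append(f"longer than {MAX_SENT_CHARS} characters; the rest isn't sent")
    return problems


def sent_portion(value: str) -> str:
    """Only the first 128 characters of the string are sent."""
    return value[:MAX_SENT_CHARS]


print(check_sip_uui_header("customerid=12345;tier=gold"))   # no problems
print(check_sip_uui_header("name=Ann Lee"))                 # the space is flagged
```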

To transfer to a representative, see Explicit triggers.

Use voice variables

Copilot Studio supports the population of variables. You can use predefined variables, or create custom ones.

Note

- For more information on how to use and create variables in Copilot Studio, see Work with variables.

- For information about additional activity and conversation variables available for voice-enabled copilots, see Variables for voice-enabled copilots.

A voice-enabled copilot in Copilot Studio supports context variables. These variables help you integrate your copilot conversations with Dynamics 365 for Customer Service when transferring a call.

For more information about context variables in Dynamics 365 for Customer Service, see Context variables for Copilot Studio bots.

This integration supports these scenarios with the following variables when you transfer:

| Variable | Type | Description |
|---|---|---|
| System.Activity.From.Name | String | The copilot user's caller ID |
| System.Activity.Recipient.Name | String | The number used to call or connect to the copilot |
| System.Conversation.SipUuiHeaderValue | String | SIP header value when transferring through a direct routing phone number |
| System.Activity.UserInputType | String | Whether the copilot user used DTMF or speech in the conversation |
| System.Activity.InputDTMFKey | String | The copilot user's raw DTMF input |
| System.Conversation.OnlyAllowDTMF | Boolean | Voice ignores speech input when set to true |
| System.Activity.SpeechRecognition.Confidence | Number | The confidence value (between 0 and 1) from the last speech recognition event |
| System.Activity.SpeechRecognition.MinimalFormattedText | String | Speech recognition results (as raw text) before Copilot Studio applied its dedicated natural language understanding model |

Note

- A copilot with many trigger phrases and large entities takes longer to publish.

- If multiple users publish the same copilot at the same time, your publish action is blocked. You need to republish the copilot after others finish their existing copilot edits.

To learn more about the fundamentals of publishing, see Key concepts - Publish and deploy your copilot.