Digital Audio: Aliasing

Sampling a continuous waveform into discrete digital samples loses information. Discrete samples can only tell us what the wave is doing at periodic instants in time, not what is happening between them. The continuous wave could be doing anything between samples. We simply don't know.

The problem is that when we want to turn samples back into a continuous wave, we must interpolate between samples to recover the information we threw away. Unfortunately, infinitely many signals could have produced the samples we have. Fortunately, if we accept a small restriction on our continuous waveform, we can get back to one unique signal.

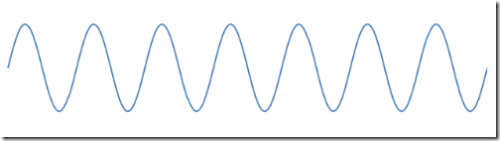

I think an example is in order. Consider the 7kHz sine wave below, shown over a 1ms interval.

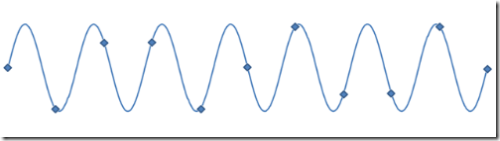

If we sample this sine wave with a sampling rate of 10kHz, we get 11 samples in this interval (counting the endpoints), as shown below.

But when we play back these samples, we won't get 7kHz out of the speaker. What we hear is the 3kHz wave described by the red curve below.

So what happened? Notice that both curves produce exactly the same samples, when sampled at 10kHz. At this sample rate, there is no way to tell the difference between 3kHz, 7kHz, or even the 13kHz wave below.
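The claim can be checked numerically. The following is a minimal sketch, assuming zero-phase sine waves and the same 11 sample instants as the example; the helper names `sample_sine` and `same` are made up for illustration. One detail the sketch makes explicit: because 7kHz is S minus 3kHz, its samples match a 3kHz sine with inverted sign (a 180-degree phase shift), while 13kHz, being S plus 3kHz, matches the 3kHz sine directly.

```python
import math

SAMPLE_RATE = 10_000  # S, in Hz
NUM_SAMPLES = 11      # a 1 ms interval at 10 kHz, counting both endpoints


def sample_sine(freq_hz, phase=0.0):
    """Sample sin(2*pi*f*t + phase) at t = n / SAMPLE_RATE."""
    return [
        math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE + phase)
        for n in range(NUM_SAMPLES)
    ]


def same(a, b, tol=1e-9):
    """True if two sample lists agree to within floating-point noise."""
    return all(abs(x - y) <= tol for x, y in zip(a, b))


s3 = sample_sine(3_000)
s3_inverted = sample_sine(3_000, phase=math.pi)
s7 = sample_sine(7_000)
s13 = sample_sine(13_000)

print(same(s7, s3_inverted))  # True: 7 kHz looks like an inverted 3 kHz sine
print(same(s13, s3))          # True: 13 kHz looks like the 3 kHz sine itself
```

Once the samples are taken, nothing in the data distinguishes these waves from one another.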

This effect is known as aliasing, and these frequencies are aliases of each other. In fact, for any frequency f and sample rate S, all frequencies of the form (N*S ± f) for some integer N are aliases.

There is hope, though. For given f and S, there is one unique frequency of the type (N*S ± f) that is between 0 and S/2. Looking at the samples in the example above, the 3kHz wave is between zero and half the sampling rate. No other frequency between 0 and 5kHz can be produced from those samples, and the wave is unique. The frequency S/2, called the Nyquist frequency, is the upper limit of alias-free signal frequency.
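Finding that unique frequency is a small piece of arithmetic. Here is a sketch; the function name `baseband_alias` is hypothetical, and it assumes non-negative frequencies in Hz. It reduces f modulo S, then reflects anything above S/2 back down.

```python
def baseband_alias(freq_hz, sample_rate_hz):
    """Return the alias of freq_hz that lies between 0 and sample_rate_hz / 2."""
    remainder = freq_hz % sample_rate_hz             # strip whole multiples of S
    return min(remainder, sample_rate_hz - remainder)  # fold the upper half down


for f in (3_000, 7_000, 13_000):
    print(f, "->", baseband_alias(f, 10_000))
# 3000 -> 3000
# 7000 -> 3000
# 13000 -> 3000
```

All three frequencies from the example land on 3kHz, the only alias below the 5kHz Nyquist frequency.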

All of the information lost from sampling a continuous waveform has a frequency higher than S/2. Therefore, the process of sampling is lossless, as long as all of the frequencies you're interested in are below half the sample rate.

But wait, you may ask. What if you're interested in preserving higher frequencies? Just increase the sample rate to more than twice the highest frequency you're interested in. The human ear can hear frequencies of up to about 20kHz, and it's no coincidence that CD Audio samples at 44.1kHz, which puts the Nyquist frequency at 22.05kHz, just above the highest frequency humans can hear.

There is one more problem when sampling. Sampling just your frequencies of interest isn't always enough. High frequencies don't just go away when you sample. This out-of-band noise (noise because it's not part of the signal we want to preserve) in your continuous signal will still be sampled, only now it will alias down below S/2 and contaminate the digital signal you want to keep.

For this reason, any time you sample a continuous signal, you must first band-limit the signal with a low-pass filter to block (attenuate) all frequencies above the Nyquist frequency, so that there is no out-of-band noise at the sampling stage. More on what that means in another post.
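To see the effect of band-limiting, here is a rough simulation under several assumptions that are mine, not part of the post: a dense 80kHz grid stands in for the continuous signal, the wanted signal is a 1kHz tone, the out-of-band noise is a 7kHz tone, and the low-pass filter is a 101-tap Hamming-windowed sinc with a 4kHz cutoff. A real converter would use an analog filter ahead of the sampler; this only illustrates the principle.

```python
import math

FAST_RATE = 80_000    # dense grid standing in for the continuous signal (assumption)
SAMPLE_RATE = 10_000  # S; the Nyquist frequency is 5 kHz
STEP = FAST_RATE // SAMPLE_RATE  # keep every 8th dense point when sampling


def tone(freq_hz, count, rate):
    """Unit-amplitude sine at freq_hz, evaluated at `count` points."""
    return [math.sin(2 * math.pi * freq_hz * i / rate) for i in range(count)]


def lowpass_taps(cutoff_hz, rate, taps=101):
    """Hamming-windowed sinc low-pass filter, scaled to unity gain at DC."""
    mid = (taps - 1) / 2
    fc = cutoff_hz / rate  # cutoff in cycles per dense point
    h = []
    for i in range(taps):
        x = i - mid
        ideal = 2 * fc if x == 0 else math.sin(2 * math.pi * fc * x) / (math.pi * x)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * i / (taps - 1))
        h.append(ideal * window)
    total = sum(h)
    return [c / total for c in h]


def apply_filter(signal, h):
    """Convolve signal with h, centered, treating points past the ends as zero."""
    half = len(h) // 2
    out = []
    for n in range(len(signal)):
        acc = 0.0
        for k, c in enumerate(h):
            j = n + half - k
            if 0 <= j < len(signal):
                acc += c * signal[j]
        out.append(acc)
    return out


def amplitude_at(samples, freq_hz, rate):
    """Amplitude of the freq_hz component, from a single-bin DFT."""
    re = sum(s * math.cos(2 * math.pi * freq_hz * i / rate) for i, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * freq_hz * i / rate) for i, s in enumerate(samples))
    return 2 * math.hypot(re, im) / len(samples)


# Wanted 1 kHz tone plus unwanted 7 kHz content above the 5 kHz Nyquist frequency.
count = 4800
dense = [a + b for a, b in zip(tone(1_000, count, FAST_RATE), tone(7_000, count, FAST_RATE))]

# Sample directly, and sample after low-pass filtering with a 4 kHz cutoff.
unfiltered = dense[::STEP]
filtered = apply_filter(dense, lowpass_taps(4_000, FAST_RATE))[::STEP]

# Drop 50 samples from each end so the filter's edge transients are excluded.
unfiltered_mid = unfiltered[50:-50]
filtered_mid = filtered[50:-50]

alias_without_filter = amplitude_at(unfiltered_mid, 3_000, SAMPLE_RATE)
alias_with_filter = amplitude_at(filtered_mid, 3_000, SAMPLE_RATE)
wanted_with_filter = amplitude_at(filtered_mid, 1_000, SAMPLE_RATE)
print(alias_without_filter, alias_with_filter, wanted_with_filter)
```

Without the filter, the 7kHz tone shows up in the samples as a full-strength 3kHz component. With the filter applied before sampling, that 3kHz component nearly vanishes while the 1kHz tone comes through essentially unchanged.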

Comments

- Anonymous

August 02, 2007

Thank you so much for showing these coders how digital (PCM) audio REALLY works. And hopefully for showing the need for much higher bit depths and sample rates. There is a reason that analog audio sounds as good as it does.