HDR and Color Spaces

HD Photo supports high dynamic range wide gamut color (HDR/WG) image content using the scRGB color profile with multiple fixed point or floating point numerical formats in 16 or 32 bits per channel (bpc). HDR/WG introduces a fundamentally different way to manage image information compared to the 8bpc unsigned integer RGB pixel format that is common to JPEG and many other formats. This article will take a closer look at color management in general and the specific issues associated with scRGB and HDR/WG images.

What is Red?

This sounds like a simple question, but it's actually very hard to answer accurately within today's digital photography ecosystem. In most cases, we use a standardized definition (typically the sRGB color profile) and rely on all the components of the ecosystem to understand this standard and act accordingly. By doing so, we accept some significant limitations, because sRGB was designed to be a "least common denominator," specifying a subset of the total visible color spectrum that could reasonably be managed by all components in an ecosystem. The diagram shows the sRGB color space in reference to the entire visible color spectrum. More specifically, sRGB was defined to specify colors based on how CRT monitors work, maximizing the probability that images distributed across multiple systems would still appear with the correct colors without a lot of special software or circuitry to adjust colors for proper appearance on standard computer displays.

sRGB is an "output referred" color space, designed to match the color performance and gamma of a CRT monitor under typical home or office viewing conditions. Since it's impractical to expect the vast majority of users to measure the actual color profile of their computer monitor, sRGB provides a normalized reference standard. Monitor makers build monitors that ideally match the sRGB standard, and imaging devices and applications encode images based on the sRGB color profile to help ensure that colors will appear correctly.

So, for the vast majority of computer users, the sRGB color profile defines "what is red?" along with the other primary colors (green and blue) and the color temperature of white (6500K). This is the de facto standard color space for JPEG files, and the default color space for most other image file formats. Because the sRGB color space is based on the capabilities of an output device (a computer screen), it's an output referred color space.

(sRGB gamut diagram courtesy of Wikipedia.)

SIDE NOTE: What's a little ironic is that today, most computer displays are LCDs, not CRT monitors. LCDs have a very different color characteristic and gamma than CRTs. However, every LCD display includes circuitry to accept an input signal (either digital or analog) as if it were a CRT monitor, and convert that sRGB content to the monitor's own specific output referred color space. Using sRGB as the intermediate color space is a huge disadvantage, but since it's still the universal standard, LCD manufacturers have little choice.

A Camera Sensor's View of Red

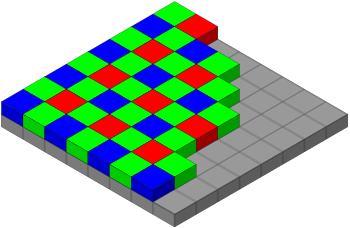

When a typical digital camera captures an image, some of the light that falls on the sensor goes through a red filter and hits a photosensor cell designed to measure the luminance of this red-wavelength light. (There are separate color filters for blue and green.) So, every camera sensor has its own definition of red, based on the specific characteristics of the sensor and color filter.

(Bayer pattern sensor diagram courtesy of Wikipedia.)
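To make the sensor side concrete, here is a minimal Python sketch of how a Bayer-pattern sensor samples a scene. It assumes an RGGB filter layout (one common arrangement) and idealized filters that each pass exactly one channel; real filters overlap, and their exact response is what gives each camera its own definition of red. The function names are illustrative, not from any camera SDK.

```python
# Minimal sketch of Bayer-pattern sampling.
# Assumptions: an RGGB layout and idealized one-channel filters;
# the scene is a list of rows of (r, g, b) tuples.

def bayer_channel(row: int, col: int) -> str:
    """Return which filter ('R', 'G' or 'B') covers a photosite in an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(scene):
    """Keep only the single channel each photosite can measure through its filter."""
    index = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[index[bayer_channel(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(scene)
    ]


# A uniform "red apple" patch: every photosite sees the same light,
# but records only the channel its filter passes.
apple = [[(0.8, 0.1, 0.1)] * 4 for _ in range(2)]
print(mosaic(apple))  # [[0.8, 0.1, 0.8, 0.1], [0.1, 0.1, 0.1, 0.1]]
```

Each photosite keeps a single number, so the full-color image has to be reconstructed later, which is the de-mosaicing step RAW converters perform.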

SIDE NOTE: The X3 sensor technology from Foveon handles this in a very different way, but we'll save that discussion for another day. Though the capture method is different, the fundamental color space principles are the same.

The camera's definition of red is based on its own specific color profile, and is referred to as an "input referred" (or sometimes "camera referred") color space. The numerical value that represents red in this color space is based on how the camera sensor works, not on how we ultimately want to view the color red. In fact, the camera's input referred color space is independent of specific lighting conditions; these conditions must be taken into account when processing the input referred image information in order to reproduce colors accurately.

Imagine that you take multiple pictures of the same shiny red apple under different lighting conditions: fluorescent, incandescent, etc.

OK, let's not imagine it; let's try it out. I rummaged around in my refrigerator and found an aging apple. While it's not the most photogenic piece of fruit, it will serve to demonstrate what we're talking about. Here's an example of two different versions of "red" based on photos taken under different lighting conditions:

Because of these different light sources, the camera will measure different values for the red color of the apple. However, as far as we're concerned, the apple is always the same red color, regardless of the lighting conditions. So, the input referred color information from the camera sensor must be converted based on the lighting conditions to the output referred color space that will be used to view the different photos of the apple on a computer display.

Fortunately, cameras do this for us; we typically refer to this process as white balance. In most cases, it's automatic; the camera finds something in the scene it believes to be white and adjusts the various color channels accordingly. Or the photographer may choose to manually select the white balance setting, either based on a pre-defined reference value or by manually measuring a white reference. Regardless of the process, this is one of the essential steps to convert images from the input referred color space of the camera sensor to the output referred sRGB color space that is the standard for JPEG files.
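A minimal sketch of white balance as a set of per-channel gains, assuming linear sensor values and a patch that has been identified as white. Normalizing to the green channel is a common convention, not the only one, and the raw reading below is a made-up example rather than measured data.

```python
# Minimal white balance sketch: per-channel gains derived from a white reference.
# Assumptions: linear sensor values, gains normalized to the green channel.

def white_balance_gains(white_patch):
    """Gains that make the measured white patch neutral (equal R, G, B)."""
    r, g, b = white_patch
    return (g / r, 1.0, g / b)


def apply_gains(pixel, gains):
    return tuple(value * gain for value, gain in zip(pixel, gains))


# Hypothetical raw reading of a white card under warm incandescent light:
# the red channel reads strong and the blue channel weak.
incandescent_white = (1.0, 0.5, 0.25)
gains = white_balance_gains(incandescent_white)
print(gains)                                   # (0.5, 1.0, 2.0)
print(apply_gains(incandescent_white, gains))  # (0.5, 0.5, 0.5), i.e. neutral
```

The same gains are then applied to every pixel, so the apple's red is corrected by the same amount as the white card.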

Many digital cameras (including virtually all DSLRs and many advanced point-and-shoot cameras) provide an option to save images in a RAW file format. This RAW format contains the actual sensor data in the form it was captured, and is therefore an input referred representation of the image content. Since the definition of colors in a camera's input referred color space is based on the specific characteristics of the camera's sensor system, RAW files are always unique to a specific make and model of camera. By capturing and preserving the RAW sensor data, a photographer can prevent the camera from discarding information from the camera's input referred color space when it is converted to the output referred sRGB color space of a JPEG file. The photographer is reserving the right to make their own decisions on what information to discard by manually processing the RAW image file to produce the desired output referred image to view or print.

Something Between Input and Output

With everything we've discussed so far, we still haven't done a very good job of answering the (presumably) simple question we started with: what is red?

The typical digital camera shooting JPEG photos relies on two different definitions. The camera's input referred color space provides a definition of red based on the characteristics of the camera's sensor. The camera uses white balance information to convert this to sRGB, an output referred color space based on the typical characteristics of a CRT monitor.

Using an input referred color space will result in different color values to represent the red apple based on the device and the lighting. Using an output referred color space will result in different color values to represent the red apple based on the specific output device (for example, a display vs. a printer.) But what we really want is a numerical value that is specific to the color of the red apple, regardless of the input or output conditions!

The solution lies in the use of an intermediate color space that is not defined based on specific input or output devices. A "scene referred" color space defines colors in a way that is meaningful to us, based on a standard reference for the actual colors contained in an image. A scene referred color space typically has a very wide color gamut, since its purpose is to accurately describe the full palette of colors that could appear within the scene, regardless of what device was used to capture the image or how the image will ultimately be rendered. A scene referred color space provides the best and most accurate definition of "what is red?"

In most cases (unless we take explicit steps to do otherwise with tools that enable this capability) we simply use the output referred sRGB color space as this intermediate color space for manipulating the image. While this makes things very simple (avoiding the need to then convert to an output referred color space for display to the screen or sharing the photo), it forces us to accept the limited color gamut of sRGB.

Adobe® Photoshop® refers to this intermediate scene-referred color space as the "working space". By default, most Photoshop users have the working space set to sRGB, again for simplicity. However, Photoshop offers several different color profiles specifically designed to be a better working space than sRGB.

"Adobe RGB" is a working space that provides a slightly wider gamut than sRGB, with more color spectrum that is appropriate for printers. (Remember, sRGB is defined based on the characteristics of display monitors.) But Adobe RGB still limits the color gamut so it can be effectively used with 8bpc images. If the gamut is defined too large, then with 8bpc values, the steps between each of the 256 unique color values become apparent, creating unwanted image artifacts.

By using 16bpc unsigned integers, a larger color gamut for the scene referred color space becomes practical. Adobe Photoshop provides the "ProPhoto RGB" color profile specifically for this purpose. It provides a much better working space for editing digital photos, but requires the user to manually convert to sRGB to create a final JPEG output file.

The latest trend for serious digital photographers is RAW workflow image processing applications, such as Adobe® Lightroom® or Apple® Aperture™. With these applications, the photographer works directly with the RAW file until the final image is rendered for display or printing. In actuality, it's impossible to work directly with the RAW file for image processing because it's not even an image yet. It must first be de-mosaiced from the source Bayer pattern format and transformed from the unique input referred color space of the particular camera; otherwise, the same color editing operation would produce different results depending on the camera used. These applications have their own internal wide gamut scene referred working color space where all image processing operations are performed. The conversion from the RAW image to this intermediate scene referred color space happens automatically every time a RAW file is accessed, with no need for the user to be involved, or even know that it's happening.
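De-mosaicing comes in many flavors. The simplest, sketched below under an assumed RGGB layout, collapses each 2x2 block of photosites into one RGB pixel at half resolution; real RAW converters interpolate far more carefully. Note that the output is still in the camera's input referred color space, so a camera-specific transform would still follow.

```python
# Minimal "superpixel" de-mosaic sketch: each 2x2 RGGB block becomes one RGB
# pixel at half resolution. The RGGB layout is an assumption for illustration.

def demosaic_superpixel(raw):
    """raw: list of rows of single-channel sensor values in RGGB order."""
    out = []
    for r in range(0, len(raw) - 1, 2):
        row = []
        for c in range(0, len(raw[r]) - 1, 2):
            red = raw[r][c]
            green = (raw[r][c + 1] + raw[r + 1][c]) / 2  # two green sites per block
            blue = raw[r + 1][c + 1]
            row.append((red, green, blue))
        out.append(row)
    return out


raw = [
    [0.8, 0.1, 0.8, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]
print(demosaic_superpixel(raw))  # [[(0.8, 0.1, 0.1), (0.8, 0.1, 0.1)]]
```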

Go Ahead, Let Yourself Float

With Windows Vista (and HD Photo), Microsoft has introduced comprehensive support for the scRGB scene referred color profile. Unlike other color profiles that rely on unsigned integers to represent the color values, scRGB uses floating point numerical values. (scRGB can also use a fixed point representation, which is simply a method of using signed integers to represent fractional values.) The idea behind scRGB is not to redefine the color space, but simply to remove its boundary limitations.
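As a rough illustration of fixed point, the sketch below stores a fractional value in a 16-bit signed integer with an implied scale. The choice of 13 fractional bits (a range of roughly -4.0 to +4.0) is an assumption for illustration; check the HD Photo pixel format documentation for the exact layouts it uses.

```python
# Minimal fixed-point sketch: a signed integer with an implied fractional scale.
# FRAC_BITS = 13 is an assumption for illustration, not a confirmed HD Photo layout.

FRAC_BITS = 13
SCALE = 1 << FRAC_BITS  # 8192 integer steps per 1.0
INT16_MIN, INT16_MAX = -32768, 32767


def to_fixed16(value: float) -> int:
    """Encode a float as a 16-bit signed fixed-point integer, saturating at the limits."""
    return max(INT16_MIN, min(INT16_MAX, round(value * SCALE)))


def from_fixed16(code: int) -> float:
    return code / SCALE


# 1.0 is reference white; values above 1.0 and below 0.0 are representable too.
for v in (1.0, 0.5, -0.25, 2.75):
    print(v, to_fixed16(v), from_fixed16(to_fixed16(v)))
```

Unlike an unsigned 8bpc code, the integer can go negative or exceed the code for 1.0, which is exactly the room scRGB needs.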

A color space is defined by the specific color values of its primary colors (red, green and blue for the color spaces we're discussing) and the color temperature that defines white. As we've discussed, the vast majority of digital images use the sRGB color space as a universal reference that maximizes color compatibility across applications, platforms and devices, but in doing so, defines rather harsh limits.
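Because a color space is pinned down by its primaries and white point, those few numbers are enough to derive the matrix that maps its linear RGB values to CIE XYZ. The Python sketch below does that for the published sRGB chromaticities with the D65 white point; it uses Cramer's rule for the 3x3 solve to stay dependency-free.

```python
# Sketch: derive a linear-RGB-to-CIE-XYZ matrix from chromaticities (x, y)
# of the three primaries and the white point.

def xy_to_xyz(x, y, Y=1.0):
    return [x / y * Y, Y, (1.0 - x - y) / y * Y]


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, v):
    """Solve m @ s = v with Cramer's rule (fine for a well-conditioned 3x3)."""
    d = det3(m)
    result = []
    for i in range(3):
        mi = [row[:] for row in m]
        for r in range(3):
            mi[r][i] = v[r]
        result.append(det3(mi) / d)
    return result


def rgb_to_xyz_matrix(red, green, blue, white):
    # Columns of P are the XYZ of each primary at unit luminance.
    cols = [xy_to_xyz(*red), xy_to_xyz(*green), xy_to_xyz(*blue)]
    P = [[cols[c][r] for c in range(3)] for r in range(3)]
    # Scale each primary so that R = G = B = 1 reproduces the white point.
    s = solve3(P, xy_to_xyz(*white))
    return [[P[r][c] * s[c] for c in range(3)] for r in range(3)]


SRGB = dict(red=(0.64, 0.33), green=(0.30, 0.60), blue=(0.15, 0.06),
            white=(0.3127, 0.3290))  # D65, nominally a 6500K white

M = rgb_to_xyz_matrix(**SRGB)
for row in M:
    print([round(v, 4) for v in row])
```

The middle row is the familiar luminance weighting (about 0.2126, 0.7152, 0.0722). Swapping in another set of primaries gives a different matrix, which is all "a different color space" means at this level.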

scRGB uses the same color primaries and white point as sRGB, but uses fixed point or floating point numbers to allow color values that are beyond the gamut limits defined by these primaries. This provides a color space that is fully compatible with the well-established sRGB standard, but removes restrictions imposed by using unsigned integer numerical values. Conversion between sRGB and scRGB is simply a matter of changing numerical formats; no complex color value transformations are required. scRGB offers all the ease and simplicity of sRGB, but removes its limitations. This makes it the ideal intermediate scene referred working space for digital photography. A (virtually) unlimited color gamut and dynamic range is available to preserve all image content, but the same file can be treated as if it's a standard sRGB image for display and sharing using a simple numerical conversion to unsigned integer values.
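To see why removing the boundary matters, the sketch below expresses a saturated green that sits outside the sRGB gamut using sRGB primaries. The chromaticity (0.21, 0.71) is the Adobe RGB green primary, used only as a convenient example, and the matrix is the standard XYZ-to-linear-sRGB matrix. A float (scRGB-style) value simply goes negative; an unsigned encoding must clip. The sketch stays in linear light; producing actual 8bpc sRGB codes would also apply the sRGB gamma curve.

```python
# Sketch: an out-of-gamut color expressed with sRGB primaries.

XYZ_TO_LINEAR_SRGB = [
    [3.2406, -1.5372, -0.4986],
    [-0.9689, 1.8758, 0.0415],
    [0.0557, -0.2040, 1.0570],
]


def xy_to_xyz(x, y, Y):
    return [x / y * Y, Y, (1.0 - x - y) / y * Y]


def xyz_to_linear_rgb(xyz):
    return tuple(sum(m * v for m, v in zip(row, xyz)) for row in XYZ_TO_LINEAR_SRGB)


def clip_unit(rgb):
    """What an unsigned-integer encoding does: nothing below 0.0 or above 1.0 survives."""
    return tuple(min(1.0, max(0.0, v)) for v in rgb)


green = xyz_to_linear_rgb(xy_to_xyz(0.21, 0.71, Y=0.5))
print([round(v, 3) for v in green])             # negative red and blue: fine as floats
print([round(v, 3) for v in clip_unit(green)])  # red and blue forced to 0.0
```

The clipped triple is a different, less saturated green, and once it has been written as unsigned integers the original cannot be recovered.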

Don't Forget the Gamma

Another aspect of the definition of a color profile is the gamma. This specifies the linearity (or more specifically, the non-linearity) of the transform from input luminance levels to output numerical values. It's an important component when using only 8bpc to represent color values, but it also represents another compromise based on technical limitations.

Let's suppose that you take a photo of a white object and carefully set your exposure so the resulting 8bpc sRGB image file has a value of 255 in all channels to represent this white color. Then you change your lighting (or your camera exposure controls) to take another photo that is 1/2 the brightness of the original white reference photo. You might expect that the resulting 8bpc sRGB image file would show a value of around 128 in all channels for this 1/2 brightness gray image. You would be wrong.

Most color profiles apply a non-linear gamma curve to the luminance levels of all channels to redistribute the 256 unique values and produce better visual results. In the case of sRGB, this also mimics how a CRT monitor works.

For sRGB, the 1/2 brightness gray image will result in a numerical value of around 186, not the midpoint value of 128. This is based on an sRGB gamma of approximately 2.2. (The actual gamma calculation for the sRGB color profile is a little more complex, but we can use a value of 2.2 as an approximation for our purposes.) This means that the sRGB profile uses about 186 steps to represent the lower half of the luminance range, and only 69 steps (255-186) to represent the upper half. sRGB defines a non-linear luminance curve to provide more detailed information in the darker or shadow areas at the expense of the brighter or highlight areas. Since we're far more likely to see the visual difference between adjacent steps in the shadows than in the highlights, this non-linear representation significantly reduces the chance of seeing banding artifacts with only 256 values available.
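The arithmetic above is easy to check. This sketch compares the 2.2 power-law approximation with the piecewise transfer function from the sRGB specification (IEC 61966-2-1); the exact curve lands a couple of codes higher than the approximation.

```python
# Sketch: map a linear half-brightness value to an 8bpc code.

def encode_gamma22(linear: float) -> int:
    """Simple 2.2 power-law approximation."""
    return round(255 * linear ** (1 / 2.2))


def encode_srgb(linear: float) -> int:
    """Piecewise sRGB transfer function: a short linear toe, then a 1/2.4 power curve."""
    if linear <= 0.0031308:
        v = 12.92 * linear
    else:
        v = 1.055 * linear ** (1 / 2.4) - 0.055
    return round(255 * v)


print(encode_gamma22(0.5))  # 186
print(encode_srgb(0.5))     # 188
```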

While this is great for efficiently using only 8bpc to represent color values, it distorts the numerical representation of color and impacts the proper calculation of color values for image processing. For example, if an application tries to combine two 1/2 luminance images to create a full luminance image, adding the two sRGB values together will result in a total that is much greater than the correct full luminance value. Likewise, simply dividing an sRGB luminance value by two will produce a result that is much darker than the correct 1/2 luminance image level.

Many imaging applications simply ignore this problem and provide the appropriate user interface controls to allow the user to visually manipulate the image independent of the numerical values. (Move a slider until you see the brightness level you want.) In other cases, applications "do the right thing" by first converting non-linear color profile content to linear values (using higher precision numerical formats) to perform image processing mathematics, then convert the result back to the desired non-linear color profile.
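Here is a small sketch of both approaches: naive math on gamma-encoded 8bpc codes versus decoding to linear light, doing the math, and re-encoding. It uses the piecewise sRGB transfer functions, with Python floats standing in for a higher precision working format.

```python
# Sketch: naive arithmetic on sRGB codes vs. linear-light arithmetic.

def srgb_to_linear(code: int) -> float:
    v = code / 255
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(linear: float) -> int:
    linear = min(1.0, max(0.0, linear))  # 8bpc output can only hold 0.0..1.0
    v = 12.92 * linear if linear <= 0.0031308 else 1.055 * linear ** (1 / 2.4) - 0.055
    return round(255 * v)


half = linear_to_srgb(0.5)  # 8bpc code for a half-luminance gray (188)

# Adding two half-luminance images.
naive_sum = half + half                                # 376: far beyond full white (255)
linear_sum = linear_to_srgb(srgb_to_linear(half) * 2)  # 255: full white

# Halving a full-white image.
naive_half = 255 // 2                                  # 127
print(round(srgb_to_linear(naive_half), 3))            # about 0.21 of full luminance: too dark
print(linear_to_srgb(srgb_to_linear(255) / 2))         # 188, i.e. 0.5 of full luminance
```

The cost of doing it right is two conversions per operation, which is why the intermediate values need more precision than 8bpc.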

The scRGB color profile is designed to always be used with higher precision (typically 16bpc or 32bpc) fixed or floating point numerical formats, so it doesn't need a gamma curve to compensate for the limitations of 8bpc formats. The scRGB color profile has a gamma of 1.0, meaning that there is a linear relationship between scene illumination and the encoded numerical value. Going back to our example, the gray image that was 1/2 the exposure level of the reference white image would have an scRGB value that is 1/2 the value for the reference white level.
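A short sketch of what gamma 1.0 buys us: exposure becomes plain multiplication, ratios between values survive, and values brighter than reference white stay representable. Python floats stand in for scRGB's fixed or floating point formats, and the helper names are illustrative.

```python
# Sketch: linear (gamma 1.0) values vs. gamma-encoded 8bpc sRGB codes.

def srgb_encode(linear: float) -> int:
    """Piecewise sRGB curve, result as an 8bpc code (0..255)."""
    v = 12.92 * linear if linear <= 0.0031308 else 1.055 * linear ** (1 / 2.4) - 0.055
    return round(255 * v)


def adjust_exposure(value: float, stops: float) -> float:
    """Exposure in linear light is a multiplication: +1 stop doubles, -1 stop halves."""
    return value * 2 ** stops


white = 1.0
gray = adjust_exposure(white, -1)
print(gray, gray / white)                      # 0.5 0.5: the ratio survives
print(srgb_encode(gray) / srgb_encode(white))  # about 0.74: the curve distorts the ratio
print(adjust_exposure(white, 2))               # 4.0: brighter than reference white, still representable
```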

Camera sensors are linear response devices. LCD displays are linear response devices. The correct method for performing image processing mathematics is to use a linear representation. It makes far more sense to store image information using linear luminance values rather than distorting them with a gamma curve designed to compensate for the limitations of a particular numerical format or device. By not restricting ourselves to the limitations of an 8bpc format, we can dramatically simplify and improve end-to-end photo manipulation and processing.

Big Changes Take Time

The scRGB color space and HDR/WG image formats will dramatically change the way most digital imaging is performed. The vast majority of imaging software, devices and services are currently based on the use of unsigned integer, gamma adjusted, sRGB image formats. It's going to take some time for all these components in the digital photography ecosystem to migrate to this improved methodology.

Adobe Photoshop CS3 (and CS2) are the current leaders in this space. These newer versions of Photoshop support 32bpc mode, using floating point values and what Adobe calls the "Linear RGB" color profile. This is the equivalent of scRGB, and using Photoshop CS3 (or CS2), it's possible to create, edit and save HDR/WG scRGB compatible images. With the HD Photo plug-in for Adobe Photoshop, you can use HD Photo to efficiently store these HDR/WG images in a variety of fixed or floating point numerical formats.

But we still have a long way to go. There are still lots of issues and limitations in using HDR/WG image formats.

Photoshop CS3 only offers a subset of image adjustments and filters when using 32-bit mode. Many Photoshop functions have not been enhanced to handle HDR/WG content.

Photoshop CS3 doesn't allow color profile conversion in 32-bit mode, making it very problematic to move between other scene referred color profiles (such as Pro Photo RGB) and scRGB.

There are no RAW conversion applications or utilities (including the Adobe Camera RAW module in Photoshop CS3) that allow input referred RAW files to be converted directly to a scene referred scRGB (or Linear RGB) color space.

Adobe Photoshop allows 32bpc floating point content to be stored in a TIFF file, but few other applications can understand floating point TIFF files. The Macintosh OS X operating system has support for HDR/WG color spaces as part of Core Image, and even provides support for 32bpc floating point TIFF files. However, Core Image interprets these files differently; it assumes the use of the sRGB color profile and that the floating point HDR/WG image data is gamma adjusted. Photoshop stores these TIFF files in an scRGB compatible linear gamma format. The result is that the image looks dramatically different when opened in Photoshop vs. the Mac OS X viewer.

Most other photo and imaging applications have no support at all for HDR/WG pixel formats and expect all images to be stored using unsigned integer values. Many applications further assume that the precision is always limited to 8bpc and that the color space is always sRGB. While there are applications adding support for HD Photo, in many cases, they only support limited pixel formats and have no support for HDR/WG content or image processing capabilities.
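The floating point TIFF mismatch between Photoshop and Core Image described above boils down to two readers disagreeing about the transfer curve. The sketch below shows how one stored value ends up on screen under each assumption; the function names are hypothetical, and this is not how either Photoshop or Core Image is actually implemented.

```python
# Sketch: one stored float, two interpretations.

def srgb_encode(linear: float) -> float:
    """Piecewise sRGB curve, result in 0.0..1.0."""
    return 12.92 * linear if linear <= 0.0031308 else 1.055 * linear ** (1 / 2.4) - 0.055


def display_code_if_linear(stored: float) -> int:
    """Reader treats the value as linear (scRGB-style) and applies the sRGB curve."""
    return round(255 * srgb_encode(min(1.0, max(0.0, stored))))


def display_code_if_gamma_adjusted(stored: float) -> int:
    """Reader treats the value as already gamma adjusted and only quantizes it."""
    return round(255 * min(1.0, max(0.0, stored)))


stored = 0.5  # a mid-gray pixel as written to a 32bpc float TIFF
print(display_code_if_linear(stored))          # 188
print(display_code_if_gamma_adjusted(stored))  # 128: noticeably darker on screen
```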

That said, we have to start somewhere and I'm very pleased to see the progress that's been made so far. There are enough pieces in place that you can work with these formats today, albeit with some caveats and limitations. I'm really excited about the progress being made to create the new JPEG XR standard, based on HD Photo. When this standard is finalized it will go a long way to "legitimize" HDR/WG image formats and the associated application support, and will provide the needed reference to motivate the development of compatible software, devices, services and platforms that take full advantage of the powerful capabilities provided by HDR/WG image formats and the scRGB color space.

HD Photo and the new imaging infrastructure in Windows Vista represent the leading edge of this next generation of digital photography. In the near future, it will be a whole lot easier to more accurately answer the simple question "what is red?". It's going to be very cool to watch all of this develop in the months ahead. Get ready for some exciting changes!

Comments

Anonymous

October 25, 2007

Thank you for an excellent article. Let's hope that camera manufacturers start using JPEG XR instead of RAW. That would make life much easier.

Anonymous

November 01, 2007

If Microsoft was taking color management seriously, it would offer full ICCv4 color profile support in:

IE7/8

Windows XP (which has 80% of Windows marketshare)

Forget Windows-only, MS's solutions are Vista-only. Technical reasons are no excuse if properly supporting color management across all platforms is important.

Anonymous

December 06, 2007

Bill Crow, best known for his work on HDPhoto/JPEG-XR, has a great post about dynamic range and color

Anonymous


January 24, 2008

This is an absolutely excellent blog post!!!