Notitie

Voor toegang tot deze pagina is autorisatie vereist. U kunt proberen u aan te melden of de directory te wijzigen.

Voor toegang tot deze pagina is autorisatie vereist. U kunt proberen de mappen te wijzigen.

De PPL (Parallel Patterns Library) biedt algoritmen die gelijktijdig werken aan verzamelingen gegevens. Deze algoritmen lijken op de algoritmen die worden geleverd door de C++-standaardbibliotheek.

De parallelle algoritmen bestaan uit bestaande functionaliteit in de Gelijktijdigheidsruntime. De concurrency::parallel_for-algoritme maakt bijvoorbeeld gebruik van een concurrency::structured_task_group-object om de parallelle lusiteraties uit te voeren. De parallel_for algoritmepartities werken op een optimale manier op basis van het beschikbare aantal rekenresources.

Afdelingen

Het parallel_for-algoritme

De concurrency::parallel_for algoritme voert herhaaldelijk dezelfde taak parallel uit. Elk van deze taken wordt geparameteriseerd met een iteratiewaarde. Dit algoritme is handig wanneer u een luslichaam hebt dat geen resources deelt tussen de iteraties van die lus.

Het parallel_for algoritme partitioneert taken op een optimale manier voor parallelle uitvoering. Het maakt gebruik van een werkdiefstalalgoritme en bereikdiefstal om deze partities te balanceren wanneer de werkbelastingen onevenwichtig zijn. Wanneer een lusiteratie op een coöperatieve manier blokkeert, wijst de runtime het bereik van iteraties dat aan de huidige thread is toegewezen opnieuw toe aan andere threads of processors. Wanneer een thread een reeks iteraties voltooit, verdeelt de runtime ook werk van andere threads naar die thread. Het parallel_for algoritme ondersteunt ook geneste parallelisering. Wanneer één parallelle lus een andere parallelle lus bevat, coördineert de runtime de verwerkingsbronnen tussen de luslichamen op een efficiënte manier voor parallel uitvoering.

Het parallel_for algoritme heeft verschillende overbelaste versies. De eerste versie heeft een beginwaarde, een eindwaarde en een werkfunctie (een lambda-expressie, functieobject of functieaanwijzer). De tweede versie neemt een beginwaarde, een eindwaarde, een waarde waarmee moet worden gestapt en een werkfunctie. De eerste versie van deze functie gebruikt 1 als stapwaarde. De overige versies maken gebruik van partitionerobjecten, waarmee u kunt opgeven hoe parallel_for bereiken tussen threads moeten worden gepartitioneert. Partitioneerfuncties worden uitgebreid beschreven in de sectie Partitioneringswerk in dit document.

U kunt veel for lussen converteren naar het gebruik van parallel_for. Het parallel_for algoritme verschilt echter op de volgende manieren van de for instructie:

Het

parallel_foralgoritmeparallel_forvoert de taken niet uit in een vooraf bepaalde volgorde.Het

parallel_foralgoritme biedt geen ondersteuning voor willekeurige beëindigingsvoorwaarden. Hetparallel_foralgoritme stopt wanneer de huidige waarde van de iteratievariabele één kleiner is danlast.De

_Index_typetypeparameter moet een integraal type zijn. Dit integrale type kan worden ondertekend of niet ondertekend.De iteratie van de lus moet vooruit gaan. Het

parallel_foralgoritme genereert een uitzondering van het type std::invalid_argument als de_Stepparameter kleiner is dan 1.Het mechanisme voor het afhandelen van uitzonderingen voor het

parallel_foralgoritme verschilt van die van eenforlus. Als er meerdere uitzonderingen tegelijk optreden in een parallel luslichaam, wordt slechts één van de uitzonderingen doorgegeven aan de thread dieparallel_forheeft aangeroepen. Bovendien stopt de runtime de volledige lus niet onmiddellijk wanneer één lusiteratie een exceptie genereert. In plaats daarvan wordt de lus in de geannuleerde status geplaatst en laat de runtime alle taken die nog niet zijn gestart, weg. Zie De afhandeling van uitzonderingen en parallelle algoritmen voor meer informatie over het verwerken van uitzonderingen en parallelle algoritmen.

Hoewel het parallel_for algoritme geen willekeurige beëindigingsvoorwaarden ondersteunt, kunt u annulering gebruiken om alle taken te stoppen. Zie Annulering in de PPL voor meer informatie over annulering.

Opmerking

De planneringskosten die het gevolg zijn van belastingverdeling en de ondersteuning voor functies zoals annulering, kunnen de voordelen van het parallel uitvoeren van het luslichaam tenietdoen, met name wanneer het luslichaam relatief klein is. U kunt deze overhead minimaliseren met behulp van een partitioner in uw parallelle lus. Zie Partitioneringswerk verderop in dit document voor meer informatie.

Voorbeeld

In het volgende voorbeeld ziet u de basisstructuur van het parallel_for algoritme. In dit voorbeeld wordt elke waarde in het bereik [1, 5] parallel in de console afgedrukt.

// parallel-for-structure.cpp

// compile with: /EHsc

#include <ppl.h>

#include <array>

#include <sstream>

#include <iostream>

using namespace concurrency;

using namespace std;

int wmain()

{

// Print each value from 1 to 5 in parallel.

parallel_for(1, 6, [](int value) {

wstringstream ss;

ss << value << L' ';

wcout << ss.str();

});

}

In dit voorbeeld wordt de volgende voorbeelduitvoer geproduceerd:

1 2 4 3 5

Omdat het parallel_for algoritme op elk item parallel werkt, varieert de volgorde waarin de waarden naar de console worden afgedrukt.

Zie parallel_for voor een volledig voorbeeld dat gebruikmaakt van het -algoritme.

[Boven]

Het "parallel_for_each"-algoritme

Het algoritme concurrency::parallel_for_each voert taken parallel uit op een iteratieve container, zoals die wordt geleverd door de C++-standaardbibliotheek. Het maakt gebruik van dezelfde partitioneringslogica die door het parallel_for algoritme wordt gebruikt.

Het parallel_for_each algoritme lijkt op het C++- standaardbibliotheek-std::for_each-algoritme , behalve dat het parallel_for_each algoritme de taken gelijktijdig uitvoert. Net als bij andere parallelle algoritmen worden parallel_for_each de taken niet in een specifieke volgorde uitgevoerd.

Hoewel het parallel_for_each algoritme werkt met zowel doorloop-iterators als iterators voor willekeurige toegang, presteert het beter met iterators voor willekeurige toegang.

Voorbeeld

In het volgende voorbeeld ziet u de basisstructuur van het parallel_for_each algoritme. In dit voorbeeld wordt elke waarde in een std::array-object parallel in de console afgedrukt.

// parallel-for-each-structure.cpp

// compile with: /EHsc

#include <ppl.h>

#include <array>

#include <sstream>

#include <iostream>

using namespace concurrency;

using namespace std;

int wmain()

{

// Create an array of integer values.

array<int, 5> values = { 1, 2, 3, 4, 5 };

// Print each value in the array in parallel.

parallel_for_each(begin(values), end(values), [](int value) {

wstringstream ss;

ss << value << L' ';

wcout << ss.str();

});

}

/* Sample output:

5 4 3 1 2

*/

In dit voorbeeld wordt de volgende voorbeelduitvoer geproduceerd:

4 5 1 2 3

Omdat het parallel_for_each algoritme op elk item parallel werkt, varieert de volgorde waarin de waarden naar de console worden afgedrukt.

Bekijk voor een compleet voorbeeld dat gebruikmaakt van het parallel_for_each algoritme, Hoe kunt u een parallel_for_each-lus schrijven.

[Boven]

Het parallel_invoke-algoritme

Het algoritme concurrency::parallel_invoke voert een set taken parallel uit. Het keert pas terug als elke taak is beëindigd. Dit algoritme is handig wanneer u meerdere onafhankelijke taken hebt die u tegelijkertijd wilt uitvoeren.

Het parallel_invoke algoritme gebruikt als parameters een reeks werkfuncties (lambda-functies, functieobjecten of functiepunten). Het parallel_invoke algoritme is overbelast om tussen twee en tien parameters te nemen. Elke functie die u doorgeeft parallel_invoke , moet nul parameters hebben.

Net als bij andere parallelle algoritmen worden parallel_invoke de taken niet in een specifieke volgorde uitgevoerd. In het onderwerp Taakparallelisme wordt uitgelegd hoe het parallel_invoke algoritme zich verhoudt tot taken en taakgroepen.

Voorbeeld

In het volgende voorbeeld ziet u de basisstructuur van het parallel_invoke algoritme. In dit voorbeeld wordt de twice functie gelijktijdig aangeroepen op drie lokale variabelen en wordt het resultaat afgedrukt naar de console.

// parallel-invoke-structure.cpp

// compile with: /EHsc

#include <ppl.h>

#include <string>

#include <iostream>

using namespace concurrency;

using namespace std;

// Returns the result of adding a value to itself.

template <typename T>

T twice(const T& t) {

return t + t;

}

int wmain()

{

// Define several values.

int n = 54;

double d = 5.6;

wstring s = L"Hello";

// Call the twice function on each value concurrently.

parallel_invoke(

[&n] { n = twice(n); },

[&d] { d = twice(d); },

[&s] { s = twice(s); }

);

// Print the values to the console.

wcout << n << L' ' << d << L' ' << s << endl;

}

In dit voorbeeld wordt de volgende uitvoer gegenereerd:

108 11.2 HelloHello

Zie Procedures voor volledige voorbeelden die gebruikmaken van het parallel_invoke algoritme : Parallel_invoke gebruiken om een parallelle sorteerroutine te schrijven en procedures: gebruik parallel_invoke om parallelle bewerkingen uit te voeren.

[Boven]

De algoritmen parallel_transform en parallel_reduce

De concurrency::parallel_transform en concurrency::parallel_reduce-algoritmen zijn parallelle versies van de C++ Standaardbibliotheekalgoritmen std::transform en std::accumulate. De gelijktijdigheidsruntimeversies gedragen zich als de versies van de C++ Standard-bibliotheek, behalve dat de bewerkingsvolgorde niet wordt bepaald omdat deze parallel worden uitgevoerd. Gebruik deze algoritmen wanneer u met een set werkt die groot genoeg is om prestaties en schaalbaarheidsvoordelen te krijgen van parallelle verwerking.

Belangrijk

De parallel_transform en parallel_reduce algoritmen ondersteunen alleen willekeurige toegang, bidirectionele en voorwaartse iterators, omdat deze iterators stabiele geheugenadressen produceren. Deze iterators moeten ook niet-l-waardenconst produceren.

Het parallel_transform-algoritme

U kunt het parallel transform algoritme gebruiken om veel gegevensparallellisatiebewerkingen uit te voeren. U kunt bijvoorbeeld het volgende doen:

Pas de helderheid van een afbeelding aan en voer andere bewerkingen voor afbeeldingsverwerking uit.

Som of neem het puntproduct tussen twee vectoren en voer andere numerieke berekeningen uit op vectoren.

Voer 3D-raytracering uit, waarbij elke iteratie verwijst naar één pixel die moet worden weergegeven.

In het volgende voorbeeld ziet u de basisstructuur die wordt gebruikt om het parallel_transform algoritme aan te roepen. In dit voorbeeld wordt elk element van een std::vectorobject op twee manieren genegeerd. De eerste manier maakt gebruik van een lambda-expressie. De tweede manier maakt gebruik van std::negate, die is afgeleid van std::unary_function.

// basic-parallel-transform.cpp

// compile with: /EHsc

#include <ppl.h>

#include <random>

using namespace concurrency;

using namespace std;

int wmain()

{

// Create a large vector that contains random integer data.

vector<int> values(1250000);

generate(begin(values), end(values), mt19937(42));

// Create a vector to hold the results.

// Depending on your requirements, you can also transform the

// vector in-place.

vector<int> results(values.size());

// Negate each element in parallel.

parallel_transform(begin(values), end(values), begin(results), [](int n) {

return -n;

});

// Alternatively, use the negate class to perform the operation.

parallel_transform(begin(values), end(values), begin(values), negate<int>());

}

Waarschuwing

In dit voorbeeld ziet u het basisgebruik van parallel_transform. Omdat de werkfunctie geen aanzienlijke hoeveelheid werk uitvoert, wordt in dit voorbeeld geen aanzienlijke toename van de prestaties verwacht.

Het parallel_transform algoritme heeft twee overbelastingen. De eerste overbelasting heeft één invoerbereik en een unaire functie. De unaire functie kan een lambda-expressie zijn die één argument, een functieobject of een type gebruikt dat is afgeleid van unary_function. De tweede overbelasting heeft twee invoerbereiken en een binaire functie. De binaire functie kan een lambda-expressie zijn die twee argumenten neemt, een functieobject, of een type dat is afgeleid van std::binary_function. In het volgende voorbeeld ziet u deze verschillen.

//

// Demonstrate use of parallel_transform together with a unary function.

// This example uses a lambda expression.

parallel_transform(begin(values), end(values),

begin(results), [](int n) {

return -n;

});

// Alternatively, use the negate class:

parallel_transform(begin(values), end(values),

begin(results), negate<int>());

//

// Demonstrate use of parallel_transform together with a binary function.

// This example uses a lambda expression.

parallel_transform(begin(values), end(values), begin(results),

begin(results), [](int n, int m) {

return n * m;

});

// Alternatively, use the multiplies class:

parallel_transform(begin(values), end(values), begin(results),

begin(results), multiplies<int>());

Belangrijk

De iterator die u voor de uitvoer parallel_transform opgeeft, moet de invoer-iterator volledig overlappen of helemaal niet overlappen. Het gedrag van dit algoritme is niet gespecificeerd als de invoer- en uitvoer-iterators gedeeltelijk overlappen.

Het parallel_reduce-algoritme

Het parallel_reduce algoritme is handig wanneer u een reeks bewerkingen hebt die voldoen aan de associatieve eigenschap. (Voor dit algoritme is de commutatieve eigenschap niet vereist.) Hier volgen enkele van de bewerkingen die u kunt uitvoeren met parallel_reduce:

Vermenigvuldig de reeksen matrices om een matrix te produceren.

Vermenigvuldig een vector met een reeks matrices om een vector te produceren.

De lengte van een reeks tekenreeksen berekenen.

Combineer een lijst met elementen, zoals tekenreeksen, in één element.

In het volgende basisvoorbeeld ziet u hoe u het parallel_reduce algoritme gebruikt om een reeks tekenreeksen te combineren tot één tekenreeks. Net als bij de voorbeelden voor parallel_transform, worden prestatieverbeteringen niet verwacht in dit basisvoorbeeld.

// basic-parallel-reduce.cpp

// compile with: /EHsc

#include <ppl.h>

#include <iostream>

#include <string>

#include <vector>

using namespace concurrency;

using namespace std;

int wmain()

{

// Create a vector of strings.

vector<wstring> words{

L"Lorem ",

L"ipsum ",

L"dolor ",

L"sit ",

L"amet, ",

L"consectetur ",

L"adipiscing ",

L"elit."};

// Reduce the vector to one string in parallel.

wcout << parallel_reduce(begin(words), end(words), wstring()) << endl;

}

/* Output:

Lorem ipsum dolor sit amet, consectetur adipiscing elit.

*/

In veel gevallen kunt u parallel_reduce beschouwen als een verkorting voor het gebruik van het parallel_for_each algoritme in combinatie met de concurrency::combinable klasse.

Voorbeeld: Kaart en reductie parallel uitvoeren

Met een map-bewerking wordt een functie toegepast op elke waarde in een sequentie. Een reductiebewerking combineert de elementen van een reeks in één waarde. U kunt de C++ Standaardbibliotheek std::transform en std::accumulate functies gebruiken om transformatie- en reductiebewerkingen uit te voeren. Voor veel problemen kunt u echter het parallel_transform algoritme gebruiken om de map-bewerking parallel uit te voeren en om het parallel_reduce algoritme de reductiebewerking parallel uit te voeren.

In het volgende voorbeeld wordt de tijd vergeleken die nodig is om de som van priemgetallen serieel en parallel te berekenen. De map-fase transformeert niet-priemwaarden naar 0 en de reductiefase optelt de waarden.

// parallel-map-reduce-sum-of-primes.cpp

// compile with: /EHsc

#include <windows.h>

#include <ppl.h>

#include <array>

#include <numeric>

#include <iostream>

using namespace concurrency;

using namespace std;

// Calls the provided work function and returns the number of milliseconds

// that it takes to call that function.

template <class Function>

__int64 time_call(Function&& f)

{

__int64 begin = GetTickCount();

f();

return GetTickCount() - begin;

}

// Determines whether the input value is prime.

bool is_prime(int n)

{

if (n < 2)

return false;

for (int i = 2; i < n; ++i)

{

if ((n % i) == 0)

return false;

}

return true;

}

int wmain()

{

// Create an array object that contains 200000 integers.

array<int, 200000> a;

// Initialize the array such that a[i] == i.

iota(begin(a), end(a), 0);

int prime_sum;

__int64 elapsed;

// Compute the sum of the numbers in the array that are prime.

elapsed = time_call([&] {

transform(begin(a), end(a), begin(a), [](int i) {

return is_prime(i) ? i : 0;

});

prime_sum = accumulate(begin(a), end(a), 0);

});

wcout << prime_sum << endl;

wcout << L"serial time: " << elapsed << L" ms" << endl << endl;

// Now perform the same task in parallel.

elapsed = time_call([&] {

parallel_transform(begin(a), end(a), begin(a), [](int i) {

return is_prime(i) ? i : 0;

});

prime_sum = parallel_reduce(begin(a), end(a), 0);

});

wcout << prime_sum << endl;

wcout << L"parallel time: " << elapsed << L" ms" << endl << endl;

}

/* Sample output:

1709600813

serial time: 7406 ms

1709600813

parallel time: 1969 ms

*/

Voor een ander voorbeeld waarin een toewijzings- en reductiebewerking parallel wordt uitgevoerd, raadpleegt u Instructies: Toewijzings- en reductiebewerkingen parallel uitvoeren.

[Boven]

Verdeling van Werk

Als u een bewerking op een gegevensbron wilt parallelliseren, moet u de bron partitioneren in meerdere secties die gelijktijdig kunnen worden geopend door meerdere threads. Een partitioner geeft aan hoe een parallel algoritme bereiken tussen threads moet partitioneren. Zoals eerder in dit document is uitgelegd, maakt de PPL gebruik van een standaardpartitioneringsmechanisme dat een eerste workload maakt en vervolgens gebruikmaakt van een algoritme voor het stelen van werk en bereikdiefstal om deze partities te verdelen wanneer workloads niet in balans zijn. Wanneer een lus-iteratie bijvoorbeeld een reeks iteraties voltooit, verdeelt de runtime het werk opnieuw van andere threads naar die specifieke thread. Voor sommige scenario's wilt u echter mogelijk een ander partitioneringsmechanisme opgeven dat beter geschikt is voor uw probleem.

De parallel_for, parallel_for_eachen parallel_transform algoritmen bieden overbelaste versies die een extra parameter gebruiken. _Partitioner Deze parameter definieert het type partitioneerfunctie dat werk verdeelt. Hier volgen de soorten partitioneerfuncties die door de PPL worden gedefinieerd:

concurrentie::affinity_partitioner

Verdeelt werk in een vast aantal intervallen (meestal het aantal werkthreads dat beschikbaar is om de lus uit te voeren). Dit type partitioner lijkt op static_partitioner, maar verbetert de cacheaffiniteit door de manier waarop het bereiken toewijst aan werkthreads. Dit type partitioneerfunctie kan de prestaties verbeteren wanneer een lus meerdere keren via dezelfde gegevensset wordt uitgevoerd (zoals een lus binnen een lus) en de gegevens in de cache passen. Deze partitioner neemt niet volledig deel aan annulering. Het maakt ook geen gebruik van coöperatief blokkerende semantiek en kan daarom niet worden gebruikt met parallelle lussen die een voorwaartse afhankelijkheid hebben.

concurrentie::auto_partitioner

Verdeelt werk in een eerste aantal reeksen (meestal het aantal worker threads dat beschikbaar is om aan de lus te werken). De runtime gebruikt dit type standaard wanneer u geen overbelast parallel algoritme aanroept dat een _Partitioner parameter gebruikt. Elk bereik kan worden onderverdeeld in subbereiken, waardoor taakverdeling kan plaatsvinden. Wanneer een werkbereik is voltooid, herverdeelt de runtime subbereiken van werk van andere threads naar die thread. Gebruik deze partitioner als uw workload niet onder een van de andere categorieën valt of als u volledige ondersteuning nodig hebt voor annulering of coöperatief blokkeren.

gelijktijdigheid::simple_partitioner

Verdeelt werk in bereiken, zodat elk bereik ten minste het aantal iteraties heeft dat is bepaald door de gespecificeerde segmentgrootte. Dit type partitioner neemt deel aan lastverdeling; de runtime verdeelt echter geen bereiken in subbereiken. Voor elke werknemer controleert de runtime op annulering en voert taakverdeling uit nadat _Chunk_size iteraties zijn voltooid.

gelijktijdigheid::static_partitioner

Verdeelt werk in een vast aantal intervallen (meestal het aantal werkthreads dat beschikbaar is om de lus uit te voeren). Dit type partitioner kan de prestaties verbeteren omdat het geen gebruik maakt van werk stelen en daarom minder overhead heeft. Gebruik dit type partitioneerfunctie wanneer elke iteratie van een parallelle lus een vaste en uniforme hoeveelheid werk uitvoert en u geen ondersteuning nodig hebt voor annuleringen of vooruitstrevende blokkeringen.

Waarschuwing

De parallel_for_each en parallel_transform algoritmen ondersteunen alleen containers die gebruikmaken van iterators voor willekeurige toegang (zoals std::vector) voor de statische, eenvoudige en affiniteitspartitioneerfuncties. Het gebruik van containers die bidirectionele en doorstuur-iterators gebruiken, produceert een compileertijdfout. De standaardpartitie, auto_partitionerondersteunt alle drie deze iterator-typen.

Deze partities worden doorgaans op dezelfde manier gebruikt, met uitzondering van affinity_partitioner. De meeste partitietypen behouden de status niet en worden niet gewijzigd door de runtime. Daarom kunt u deze partitionerobjecten maken op de aanroepsite, zoals wordt weergegeven in het volgende voorbeeld.

// static-partitioner.cpp

// compile with: /EHsc

#include <ppl.h>

using namespace concurrency;

void DoWork(int n)

{

// TODO: Perform a fixed amount of work...

}

int wmain()

{

// Use a static partitioner to perform a fixed amount of parallel work.

parallel_for(0, 100000, [](int n) {

DoWork(n);

}, static_partitioner());

}

U moet echter een affinity_partitioner object doorgeven als een niet-const, l-value-referentie, zodat het algoritme de status kan opslaan voor toekomstig hergebruik in lussen. In het volgende voorbeeld ziet u een eenvoudige toepassing waarmee dezelfde bewerking op een gegevensset meerdere keren parallel wordt uitgevoerd. Het gebruik van affinity_partitioner kan de prestaties verbeteren omdat de matrix waarschijnlijk in de cache past.

// affinity-partitioner.cpp

// compile with: /EHsc

#include <ppl.h>

#include <array>

using namespace concurrency;

using namespace std;

int wmain()

{

// Create an array and fill it with zeroes.

array<unsigned char, 8 * 1024> data;

data.fill(0);

// Use an affinity partitioner to perform parallel work on data

// that is likely to remain in cache.

// We use the same affinitiy partitioner throughout so that the

// runtime can schedule work to occur at the same location for each

// iteration of the outer loop.

affinity_partitioner ap;

for (int i = 0; i < 100000; i++)

{

parallel_for_each(begin(data), end(data), [](unsigned char& c)

{

c++;

}, ap);

}

}

Waarschuwing

Wees voorzichtig wanneer u bestaande code wijzigt die afhankelijk is van coöperatieve blokkerende semantiek om te gebruiken static_partitioner of affinity_partitioner. Deze partitionertypen maken geen gebruik van taakverdeling of bereikdiefstal en kunnen daarom het gedrag van uw toepassing wijzigen.

De beste manier om te bepalen of een partitioner in een bepaald scenario moet worden gebruikt, is experimenteren en meten hoe lang het duurt voordat bewerkingen worden voltooid onder representatieve belastingen en computerconfiguraties. Statische partitionering kan bijvoorbeeld een aanzienlijke versnelling bieden op een computer met meerdere kernen met slechts een paar kernen, maar dit kan leiden tot vertragingen op computers met relatief veel kernen.

[Boven]

Parallelle sortering

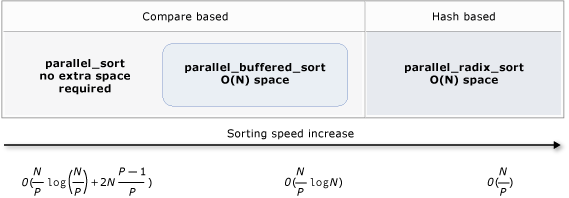

De PPL biedt drie sorteeralgoritmen: concurrency::parallel_sort, concurrency::parallel_buffered_sort en concurrency::parallel_radixsort. Deze sorteeralgoritmen zijn handig wanneer u een gegevensset hebt die kan profiteren van parallel sorteren. Het parallel sorteren is met name handig wanneer u een grote gegevensset hebt of wanneer u een rekenkundige vergelijkingsbewerking gebruikt om uw gegevens te sorteren. Elk van deze algoritmen sorteert de elementen intern.

De parallel_sort en parallel_buffered_sort algoritmen zijn beide op vergelijking gebaseerde algoritmen. Dat wil gezegd, ze vergelijken elementen op waarde. Het parallel_sort algoritme heeft geen extra geheugenvereisten en is geschikt voor het sorteren van algemeen gebruik. Het parallel_buffered_sort algoritme kan beter presteren dan parallel_sort, maar hiervoor is O(N) ruimte vereist.

Het parallel_radixsort algoritme is gebaseerd op hash. Dat wil gezegd, er worden gehele getallen gebruikt om elementen te sorteren. Met behulp van sleutels kan dit algoritme de bestemming van een element rechtstreeks berekenen in plaats van vergelijkingen te gebruiken. Net zoals parallel_buffered_sortvereist dit algoritme O(N) ruimte.

De volgende tabel bevat een overzicht van de belangrijke eigenschappen van de drie parallelle sorteeralgoritmen.

| Algoritme | Beschrijving | Sorteermechanisme | Stabiliteit sorteren | Vereisten voor geheugen | Tijdcomplexiteit | Toegang tot iterator |

|---|---|---|---|---|---|---|

parallel_sort |

Algemene sortering op basis van vergelijkingen. | Op basis van vergelijken (oplopend) | Instabiel | Geen | O((N/P)log(N/P) + 2N(P-1)/P)) | Willekeurig |

parallel_buffered_sort |

Snellere op algemene doeleinden gebaseerde sortering waarvoor O(N) ruimte is vereist. | Op basis van vergelijken (oplopend) | Instabiel | Vereist extra O(N)-ruimte | O((N/P)log(N)) | Willekeurig |

parallel_radixsort |

Sorteren op basis van integersleutels waarvoor O(N) ruimte vereist is. | Op basis van hash | Stabiel | Vereist extra O(N)-ruimte | O(N/P) | Willekeurig |

In de volgende afbeelding ziet u de belangrijke eigenschappen van de drie parallelle sorteeralgoritmen grafischer.

Deze parallelle sorteeralgoritmen volgen de regels voor de verwerking van annuleringen en uitzonderingen. Zie Parallelle Algoritmen Annuleren en Uitzonderingsafhandeling voor meer informatie over het annuleren en afhandelen van uitzonderingen in de Concurrency Runtime.

Aanbeveling

Deze parallelle sorteeralgoritmen ondersteunen beweegsemantiek. U kunt een operator voor verplaatsingstoewijzing definiëren om wisselbewerkingen efficiënter uit te voeren. Zie Rvalue Reference Declarator: &&en Move Constructors and Move Assignment Operators (C++) voor meer informatie over verplaatsingssemantiek en de operator voor verplaatsingstoewijzingen. Als u geen operator voor verplaatsingstoewijzing of wisselfunctie opgeeft, gebruiken de sorteeralgoritmen de kopieerconstructor.

In het volgende basisvoorbeeld ziet u hoe u parallel_sort gebruikt om een vector van int waarden te sorteren.

parallel_sort Standaard wordt std::less gebruikt om waarden te vergelijken.

// basic-parallel-sort.cpp

// compile with: /EHsc

#include <ppl.h>

#include <random>

#include <iostream>

using namespace concurrency;

using namespace std;

int wmain()

{

// Create and sort a large vector of random values.

vector<int> values(25000000);

generate(begin(values), end(values), mt19937(42));

parallel_sort(begin(values), end(values));

// Print a few values.

wcout << "V(0) = " << values[0] << endl;

wcout << "V(12500000) = " << values[12500000] << endl;

wcout << "V(24999999) = " << values[24999999] << endl;

}

/* Output:

V(0) = -2147483129

V(12500000) = -427327

V(24999999) = 2147483311

*/

In dit voorbeeld ziet u hoe u een aangepaste vergelijkingsfunctie kunt opgeven. Hierbij wordt de methode std::complex::real gebruikt om de dubbele< waarden std::complex> in oplopende volgorde te sorteren.

// For this example, ensure that you add the following #include directive:

// #include <complex>

// Create and sort a large vector of random values.

vector<complex<double>> values(25000000);

generate(begin(values), end(values), mt19937(42));

parallel_sort(begin(values), end(values),

[](const complex<double>& left, const complex<double>& right) {

return left.real() < right.real();

});

// Print a few values.

wcout << "V(0) = " << values[0] << endl;

wcout << "V(12500000) = " << values[12500000] << endl;

wcout << "V(24999999) = " << values[24999999] << endl;

/* Output:

V(0) = (383,0)

V(12500000) = (2.1479e+009,0)

V(24999999) = (4.29497e+009,0)

*/

In dit voorbeeld ziet u hoe u een hash-functie aan het parallel_radixsort algoritme kunt leveren. In dit voorbeeld worden 3D-punten gesorteerd. De punten worden gesorteerd op basis van hun afstand tot een referentiepunt.

// parallel-sort-points.cpp

// compile with: /EHsc

#include <ppl.h>

#include <random>

#include <iostream>

using namespace concurrency;

using namespace std;

// Defines a 3-D point.

struct Point

{

int X;

int Y;

int Z;

};

// Computes the Euclidean distance between two points.

size_t euclidean_distance(const Point& p1, const Point& p2)

{

int dx = p1.X - p2.X;

int dy = p1.Y - p2.Y;

int dz = p1.Z - p2.Z;

return static_cast<size_t>(sqrt((dx*dx) + (dy*dy) + (dz*dz)));

}

int wmain()

{

// The central point of reference.

const Point center = { 3, 2, 7 };

// Create a few random Point values.

vector<Point> values(7);

mt19937 random(42);

generate(begin(values), end(values), [&random] {

Point p = { random()%10, random()%10, random()%10 };

return p;

});

// Print the values before sorting them.

wcout << "Before sorting:" << endl;

for_each(begin(values), end(values), [center](const Point& p) {

wcout << L'(' << p.X << L"," << p.Y << L"," << p.Z

<< L") D = " << euclidean_distance(p, center) << endl;

});

wcout << endl;

// Sort the values based on their distances from the reference point.

parallel_radixsort(begin(values), end(values),

[center](const Point& p) -> size_t {

return euclidean_distance(p, center);

});

// Print the values after sorting them.

wcout << "After sorting:" << endl;

for_each(begin(values), end(values), [center](const Point& p) {

wcout << L'(' << p.X << L"," << p.Y << L"," << p.Z

<< L") D = " << euclidean_distance(p, center) << endl;

});

wcout << endl;

}

/* Output:

Before sorting:

(2,7,6) D = 5

(4,6,5) D = 4

(0,4,0) D = 7

(3,8,4) D = 6

(0,4,1) D = 7

(2,5,5) D = 3

(7,6,9) D = 6

After sorting:

(2,5,5) D = 3

(4,6,5) D = 4

(2,7,6) D = 5

(3,8,4) D = 6

(7,6,9) D = 6

(0,4,0) D = 7

(0,4,1) D = 7

*/

Ter illustratie gebruikt dit voorbeeld een relatief kleine gegevensset. U kunt de eerste grootte van de vector vergroten om te experimenteren met prestatieverbeteringen ten opzichte van grotere gegevenssets.

In dit voorbeeld wordt een lambda-expressie gebruikt als hash-functie. U kunt ook een van de ingebouwde implementaties van de std::hash-klasse gebruiken of uw eigen specialisatie definiëren. U kunt ook een aangepast hash-functieobject gebruiken, zoals wordt weergegeven in dit voorbeeld:

// Functor class for computing the distance between points.

class hash_distance

{

public:

hash_distance(const Point& reference)

: m_reference(reference)

{

}

size_t operator()(const Point& pt) const {

return euclidean_distance(pt, m_reference);

}

private:

Point m_reference;

};

// Use hash_distance to compute the distance between points.

parallel_radixsort(begin(values), end(values), hash_distance(center));

De hash-functie moet een integraal type retourneren (std::is_integral::value moet zijn true). Dit integrale type moet converteerbaar zijn naar type size_t.

Een sorteeralgoritmen kiezen

In veel gevallen parallel_sort biedt u de beste balans tussen snelheid en geheugenprestaties. Wanneer u echter de grootte van uw gegevensset vergroot, het aantal beschikbare processors of de complexiteit van uw vergelijkingsfunctie vergroot, parallel_buffered_sort of parallel_radixsort beter kan presteren. De beste manier om te bepalen welk sorteeralgoritme in een bepaald scenario moet worden gebruikt, is te experimenteren en te meten hoe lang het duurt om typische gegevens te sorteren onder representatieve computerconfiguraties. Houd rekening met de volgende richtlijnen wanneer u een sorteerstrategie kiest.

De grootte van uw gegevensset. In dit document bevat een kleine gegevensset minder dan 10000 elementen, een middelgrote gegevensset bevat tussen 10.000 en 100.000 elementen en een grote gegevensset bevat meer dan 100.000 elementen.

De hoeveelheid werk die uw vergelijkingsfunctie of hashfunctie uitvoert.

De hoeveelheid beschikbare rekenresources.

De kenmerken van uw gegevensset. Een algoritme kan bijvoorbeeld goed presteren voor gegevens die al bijna zijn gesorteerd, maar niet zo goed voor gegevens die volledig niet zijn gesorteerd.

De segmentgrootte. Het optionele

_Chunk_sizeargument geeft aan wanneer het algoritme overschakelt van een parallelle naar een seriële sorteeruitvoering, omdat het de algehele sortering onderverdeelt in kleinere werkeenheden. Als u bijvoorbeeld 512 opgeeft, schakelt het algoritme over naar seriële implementatie wanneer een werkeenheid 512 of minder elementen bevat. Een seriële implementatie kan de algehele prestaties verbeteren, omdat hiermee de overhead wordt geëlimineerd die nodig is om gegevens parallel te verwerken.

Het is misschien niet de moeite waard om een kleine gegevensset parallel te sorteren, zelfs wanneer u een groot aantal beschikbare rekenkracht hebt of wanneer uw vergelijkingsfunctie of hash-functie een relatief grote hoeveelheid werk uitvoert. U kunt de functie std::sort gebruiken om kleine gegevenssets te sorteren. (parallel_sort en parallel_buffered_sort roept sort aan wanneer u een segmentgrootte opgeeft die groter is dan de gegevensset; echter moet parallel_buffered_sort O(N)-ruimte toewijzen, wat extra tijd kan kosten vanwege vergrendelingsconflicten of geheugentoewijzing.)

Als u geheugen moet besparen of als uw geheugenverdelingsprogramma onderhevig is aan vergrendelingsconflicten, moet u parallel_sort gebruiken om een middelgrote gegevensset te sorteren.

parallel_sort vereist geen extra ruimte; voor de andere algoritmen is O(N) ruimte vereist.

Gebruik parallel_buffered_sort om middelgrote datasets te sorteren wanneer uw toepassing voldoet aan de aanvullende ruimtevereiste van O(N).

parallel_buffered_sort kan vooral handig zijn wanneer u een groot aantal rekenresources of een dure vergelijkingsfunctie of hash-functie hebt.

Gebruik parallel_radixsort om grote datasets te sorteren wanneer uw applicatie aan de extra ruimtevereiste van O(N) voldoet.

parallel_radixsort kan vooral nuttig zijn wanneer de equivalente vergelijkingsbewerking duurder is of wanneer beide bewerkingen duur zijn.

Waarschuwing

Voor het implementeren van een goede hash-functie moet u het gegevenssetbereik kennen en hoe elk element in de gegevensset wordt getransformeerd naar een overeenkomstige niet-ondertekende waarde. Omdat de hash-bewerking werkt op niet-ondertekende waarden, kunt u een andere sorteerstrategie overwegen als niet-ondertekende hashwaarden niet kunnen worden geproduceerd.

In het volgende voorbeeld worden de prestaties van sort, parallel_sorten parallel_buffered_sorten parallel_radixsort vergeleken met dezelfde grote set willekeurige gegevens.

// choosing-parallel-sort.cpp

// compile with: /EHsc

#include <ppl.h>

#include <random>

#include <iostream>

#include <windows.h>

using namespace concurrency;

using namespace std;

// Calls the provided work function and returns the number of milliseconds

// that it takes to call that function.

template <class Function>

__int64 time_call(Function&& f)

{

__int64 begin = GetTickCount();

f();

return GetTickCount() - begin;

}

const size_t DATASET_SIZE = 10000000;

// Create

// Creates the dataset for this example. Each call

// produces the same predefined sequence of random data.

vector<size_t> GetData()

{

vector<size_t> data(DATASET_SIZE);

generate(begin(data), end(data), mt19937(42));

return data;

}

int wmain()

{

// Use std::sort to sort the data.

auto data = GetData();

wcout << L"Testing std::sort...";

auto elapsed = time_call([&data] { sort(begin(data), end(data)); });

wcout << L" took " << elapsed << L" ms." <<endl;

// Use concurrency::parallel_sort to sort the data.

data = GetData();

wcout << L"Testing concurrency::parallel_sort...";

elapsed = time_call([&data] { parallel_sort(begin(data), end(data)); });

wcout << L" took " << elapsed << L" ms." <<endl;

// Use concurrency::parallel_buffered_sort to sort the data.

data = GetData();

wcout << L"Testing concurrency::parallel_buffered_sort...";

elapsed = time_call([&data] { parallel_buffered_sort(begin(data), end(data)); });

wcout << L" took " << elapsed << L" ms." <<endl;

// Use concurrency::parallel_radixsort to sort the data.

data = GetData();

wcout << L"Testing concurrency::parallel_radixsort...";

elapsed = time_call([&data] { parallel_radixsort(begin(data), end(data)); });

wcout << L" took " << elapsed << L" ms." <<endl;

}

/* Sample output (on a computer that has four cores):

Testing std::sort... took 2906 ms.

Testing concurrency::parallel_sort... took 2234 ms.

Testing concurrency::parallel_buffered_sort... took 1782 ms.

Testing concurrency::parallel_radixsort... took 907 ms.

*/

In dit voorbeeld wordt ervan uitgegaan dat het acceptabel is om O(N) ruimte toe te wijzen tijdens het sorteren, parallel_radixsort het beste presteert op deze gegevensset op deze computerconfiguratie.

[Boven]

Verwante onderwerpen

| Titel | Beschrijving |

|---|---|

| Procedure: Een parallel_for-lus schrijven | Laat zien hoe u het parallel_for algoritme gebruikt om matrixvermeniging uit te voeren. |

| Procedure: Een parallel_for_each-lus schrijven | Laat zien hoe u het parallel_for_each algoritme gebruikt om het aantal priemgetallen in een std::array-object parallel te berekenen. |

| Procedure: parallel_invoke gebruiken om een parallelle sorteerroutine te schrijven | Laat zien hoe u het parallel_invoke algoritme gebruikt om de prestaties van het bitonische sorteeralgoritmen te verbeteren. |

| Procedure: parallel_invoke gebruiken om parallelle bewerkingen uit te voeren | Laat zien hoe u het parallel_invoke algoritme gebruikt om de prestaties van een programma te verbeteren dat meerdere bewerkingen uitvoert op een gedeelde gegevensbron. |

| Hoe kaart- en reduceerbewerkingen parallel uitvoeren | Laat zien hoe u de parallel_transform en parallel_reduce algoritmen gebruikt om een kaart uit te voeren en een reductiebewerking uit te voeren waarmee het aantal woorden in bestanden wordt geteld. |

| Bibliotheek met parallelle patronen (PPL) | Beschrijft de PPL, die een imperatief programmeermodel biedt dat schaalbaarheid en gebruiksgemak bevordert voor het ontwikkelen van gelijktijdige toepassingen. |

| Annulering in de PPL | Hierin wordt de rol van annulering in de PPL uitgelegd, hoe u parallel werk annuleert en hoe u kunt bepalen wanneer een taakgroep wordt geannuleerd. |

| afhandeling van uitzonderingen | Legt de rol van foutafhandeling in de Concurrency Runtime uit. |