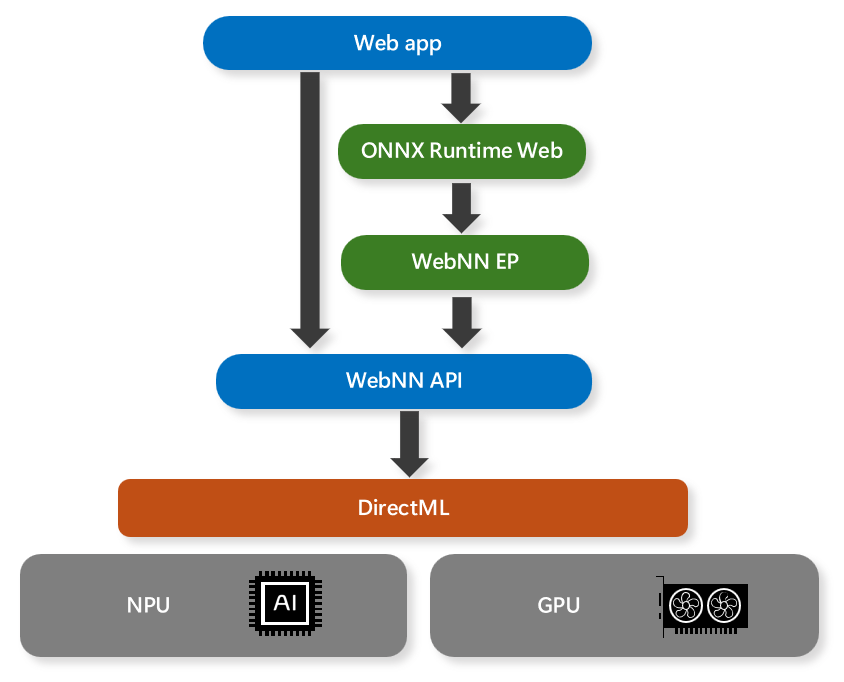

The Web Neural Network API (WebNN) is an emerging web standard that lets web apps and frameworks accelerate deep neural networks with GPUs, CPUs, or purpose-built AI accelerators such as NPUs. On Windows, the WebNN API uses the DirectML API to access native hardware capabilities and optimize the execution of neural network models.
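Because WebNN is not yet available in every browser, a page usually checks for it before loading a model. The following TypeScript sketch assumes, as in the WebNN specification, that the API is exposed as `navigator.ml` with a `createContext()` method. The helper `isWebNNAvailable` and the `WebNNLike` type are hypothetical; the navigator object is passed in as a parameter so the check runs without DOM typings.

```typescript
// Minimal structural view of the WebNN entry point; the real ML interface
// has more members, but feature detection only needs createContext().
interface WebNNLike {
  createContext: (options?: unknown) => Promise<unknown>;
}

// Returns true if the navigator-like object exposes `ml.createContext()`.
// Taking the object as a parameter keeps the check testable outside a browser.
function isWebNNAvailable(nav: unknown): nav is { ml: WebNNLike } {
  if (typeof nav !== "object" || nav === null || !("ml" in nav)) {
    return false;
  }
  const ml: unknown = (nav as { ml: unknown }).ml;
  return (
    typeof ml === "object" &&
    ml !== null &&
    typeof (ml as { createContext?: unknown }).createContext === "function"
  );
}
```

In a page, you would call `isWebNNAvailable(navigator)` and choose another backend when it returns `false`.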

As AI/ML becomes more common in apps, the WebNN API offers the following benefits:

- Performance optimizations: By using DirectML, WebNN lets web apps and frameworks take advantage of the best available hardware and software optimizations for each platform and device, without complex, platform-specific code.

- Low latency: In-browser inference enables new use cases with local media sources, such as real-time video analysis, face detection, and speech recognition, without sending data to remote servers and waiting for responses.

- Privacy preservation: User data stays on the device, because web apps and frameworks don't need to upload sensitive or personal data to cloud services for processing.

- High availability: After assets are initially cached for offline use, there is no dependency on the network, because web apps and frameworks can run neural network models locally even when the internet connection is unavailable or unreliable.

- Low server costs: Computing on client devices means no servers are needed, which lets web apps reduce the operational and maintenance costs of running AI/ML services in the cloud.

AI/ML scenarios supported by WebNN include generative AI, person detection, face detection, semantic segmentation, skeleton detection, style transfer, super-resolution, image captioning, machine translation, and noise suppression.

Note

The WebNN API is still a work in progress, and GPU and NPU support are in preview. The WebNN API should not currently be used in a production environment.

Framework support

WebNN is designed as a backend API for web frameworks. For Windows, we recommend ONNX Runtime Web. It offers a familiar experience with DirectML and ONNX Runtime, so you can deploy AI models in ONNX format consistently across web and native applications.
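As a sketch of how a web app might ask ONNX Runtime Web to use WebNN, the function below builds session options that list the WebNN execution provider first and the WebAssembly provider (`"wasm"`) second, in order of preference. The option names (`name`, `deviceType`, `powerPreference`) follow the ONNX Runtime Web documentation for the WebNN execution provider at the time of writing and may change while WebNN is in preview; `webnnSessionOptions` and its types are hypothetical.

```typescript
// Device types accepted by the WebNN execution provider (preview-era values;
// check the ONNX Runtime Web documentation for the version you use).
type WebNNDeviceType = "cpu" | "gpu" | "npu";

interface WebNNProviderOptions {
  name: "webnn";
  deviceType: WebNNDeviceType;
  powerPreference: "default" | "high-performance" | "low-power";
}

// Hypothetical subset of ONNX Runtime Web's InferenceSession.SessionOptions.
interface SessionOptionsSketch {
  executionProviders: Array<WebNNProviderOptions | "wasm">;
}

// Lists WebNN first and the WebAssembly (CPU) provider second, in order of preference.
function webnnSessionOptions(deviceType: WebNNDeviceType): SessionOptionsSketch {
  return {
    executionProviders: [
      { name: "webnn", deviceType, powerPreference: "default" },
      "wasm",
    ],
  };
}

// Usage in a page that loads onnxruntime-web as `ort` (not executed here):
//   const session = await ort.InferenceSession.create(
//     "./model.onnx", webnnSessionOptions("gpu"));
```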

WebNN requirements

You can check information about your browser by navigating to about://version in the address bar of your Chromium browser.

| Hardware | Web browsers | Windows version | ONNX Runtime Web version | Driver version |
|---|---|---|---|---|
| GPU | WebNN requires a Chromium browser*. Use the latest version of Microsoft Edge Beta. | Minimum version: Windows 11, version 21H2. | Minimum version: 1.18 | Install the latest driver for your hardware. |
| NPU | WebNN requires a Chromium browser*. Use the latest version of Microsoft Edge Canary. See the note below for disabling the GPU blocklist. | Minimum version: Windows 11, version 21H2. | Minimum version: 1.18 | Intel driver version: 32.0.100.2381. See the FAQ for the steps to update the driver. |
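The minimum ONNX Runtime Web version from the requirements table can also be checked in code. This sketch compares dotted version strings numerically, treating missing components as 0. The function names are hypothetical, and it assumes you already have the version string of the onnxruntime-web package your page loads (for example, from your package manifest).

```typescript
// Compares two dotted version strings numerically, e.g. "1.18.0" vs "1.18".
// Missing or non-numeric components count as 0. Returns -1, 0 or 1.
function compareVersions(a: string, b: string): number {
  const pa = a.split(".").map((part) => parseInt(part, 10) || 0);
  const pb = b.split(".").map((part) => parseInt(part, 10) || 0);
  const length = Math.max(pa.length, pb.length);
  for (let i = 0; i < length; i++) {
    const x = pa[i] ?? 0;
    const y = pb[i] ?? 0;
    if (x !== y) return x < y ? -1 : 1;
  }
  return 0;
}

// Minimum ONNX Runtime Web version listed in the requirements table.
const MIN_ORT_WEB_VERSION = "1.18";

function meetsOrtWebMinimum(version: string): boolean {
  return compareVersions(version, MIN_ORT_WEB_VERSION) >= 0;
}
```

A numeric comparison matters here: compared as plain strings, "1.2" would sort after "1.18".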

Note

Chromium-based browsers can currently support WebNN, but support depends on the implementation status of each individual browser.

Note

For NPU support, start Edge from the command line with the following flag: `msedge.exe --disable_webnn_for_npu=0`
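Because NPU support is in preview, a page may want to prefer the NPU and fall back to the GPU or CPU when a context cannot be created. This sketch assumes the preview-era `deviceType` option of `MLContextOptions` (`"npu"`, `"gpu"`, `"cpu"`), which has changed between drafts of the WebNN specification. `createContextWithFallback` and the `MLContextFactory` type are hypothetical stand-ins for `navigator.ml`.

```typescript
// Device types accepted by the preview-era deviceType option (assumption).
type ContextDeviceType = "npu" | "gpu" | "cpu";

// Hypothetical structural stand-in for navigator.ml: only createContext() is used.
interface MLContextFactory {
  createContext(options: { deviceType: ContextDeviceType }): Promise<unknown>;
}

interface ContextResult {
  deviceType: ContextDeviceType;
  context: unknown;
}

// Tries each device type in order (NPU, then GPU, then CPU by default) and
// resolves with the first context that is created successfully. If every
// attempt fails, rejects with an Error that lists each device's failure.
async function createContextWithFallback(
  ml: MLContextFactory,
  order: ContextDeviceType[] = ["npu", "gpu", "cpu"],
): Promise<ContextResult> {
  const failures: string[] = [];
  for (const deviceType of order) {
    try {
      const context = await ml.createContext({ deviceType });
      return { deviceType, context };
    } catch (err) {
      failures.push(`${deviceType}: ${String(err)}`);
    }
  }
  throw new Error(`No WebNN context could be created (${failures.join("; ")})`);
}
```

In a browser, you would pass `navigator.ml` and read `deviceType` from the result to see which device was actually used; if the NPU entry always fails, check that Edge was started with the flag above.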

Model support

GPU (preview):

When running on GPUs, WebNN currently supports the following models:

- Stable Diffusion Turbo

- Stable Diffusion 1.5

- Whisper Base

- MobileNetv2

- Segment Anything

- ResNet

- EfficientNet

- SqueezeNet

WebNN also works with custom models, as long as the operators they use are sufficiently supported. Check the operator status on the WebNN operator implementation status page.
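Before deploying a custom model, you can compare the operators it uses against those listed as supported. The sketch below is hypothetical: it assumes you have already extracted the ONNX operator types from your model (for example, with a model viewer or the ONNX tooling) and copied the supported set from the implementation status page. The sample values are illustrative only, not real status data.

```typescript
// Returns the distinct operator types used by a model that are not in the
// supported set, sorted alphabetically for stable output.
function unsupportedOperators(
  modelOps: readonly string[],
  supported: ReadonlySet<string>,
): string[] {
  const missing = new Set<string>();
  for (const op of modelOps) {
    if (!supported.has(op)) missing.add(op);
  }
  return Array.from(missing).sort();
}

// Illustrative values only; take the real list from the status page.
const supportedOps = new Set(["Conv", "Relu", "Add", "MatMul", "Softmax"]);
const modelOps = ["Conv", "Relu", "Conv", "GridSample", "Softmax", "NonMaxSuppression"];
const missingOps = unsupportedOperators(modelOps, supportedOps);
// missingOps → ["GridSample", "NonMaxSuppression"]
```

An empty result suggests the model's operators are covered; a non-empty one tells you which operators to look up before relying on WebNN for that model.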

NPU (preview):

On Intel® Core™ Ultra processors with the Intel® AI Boost NPU, WebNN supports:

Frequently asked questions

How do I file an issue with WebNN?

For general issues with WebNN, file an issue on our WebNN Developer Preview GitHub.

For issues with ONNX Runtime Web or the WebNN execution provider, go to the ONNX Runtime GitHub.

How do I debug issues with WebNN?

The WebNN W3C Spec describes how errors are propagated, usually through DOM exceptions. The log at the end of about://gpu may also contain useful information. For further problems, file an issue through the link above.
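Because WebNN reports failures through exceptions, typically `DOMException` objects (and, for some invalid arguments, `TypeError`), logging the exception's `name` together with its `message` usually tells you more than the message alone. The helper `describeWebNNError` below is a hypothetical sketch of that idea.

```typescript
// Turns whatever a failed WebNN call threw into a one-line log message.
// DOMExceptions and ordinary Errors (such as TypeError) expose `name` and
// `message`; any other value is stringified.
function describeWebNNError(err: unknown): string {
  if (typeof err === "object" && err !== null && "name" in err && "message" in err) {
    const { name, message } = err as { name: unknown; message: unknown };
    return `${String(name)}: ${String(message)}`;
  }
  return `Unknown error: ${String(err)}`;
}

// Usage in a browser (sketch, not executed here):
//   try {
//     const context = await navigator.ml.createContext();
//   } catch (err) {
//     console.error(describeWebNNError(err));
//   }
```

Including the output of this helper, along with the log from about://gpu, makes an issue report easier to triage.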

Does WebNN support other operating systems?

WebNN currently has the best support on Windows. Support for other operating systems is in progress.

Which hardware backends are currently available? Are some models supported only on specific hardware backends?

You can find information about operator support in WebNN at Implementation Status of WebNN Operations | Web Machine Learning.

How do I update the Intel driver for NPU support (preview)?

- Find the updated driver on the Intel driver website.

- Unzip the ZIP file.

- Press Win+R to open the Run dialog box.

- Type devmgmt.msc in the text field.

- Press Enter or click OK.

- In Device Manager, expand the Neural processors node.

- Right-click the NPU whose driver you want to update.

- Choose "Update driver" from the context menu.

- Select "Browse my computer for drivers".

- Select "Let me pick from a list of available drivers on my computer".

- Click the Have Disk button.

- Click the Browse button.

- Navigate to the folder where you unzipped the ZIP file.

- Click OK.