PAGELATCH_EX waits and heavy inserts

Hello all,

Recently I came across an issue over a table that was being inserted into quite intensively, by concurrent operations. The issue, which is not that uncommon, is dealing with PAGELATCH_EX contention, namely when a table has a clustering key that conforms with the concept of a small and monotonically increasing value, such as IDENTITY, and the table itself is somewhat narrow.

First, what is a PAGELATCH? In a nutshell, a PAGELATCH is used to synchronize short term access to database pages that reside in the Buffer cache, as opposed to a PAGEIOLATCH, which is used to synchronize physical access to pages in disk. These are normal in every system, the problem being when there is contention. In this case when there are many concurrent accesses to a single page, causing waits and hindering the ability to perform these inserts efficiently.

So let’s create a table to repro the issue:

IF EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'dbo.HeavyInsert') AND type in (N'U'))

DROP TABLE dbo.HeavyInsert

IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'dbo.HeavyInsert') AND type in (N'U'))

CREATE TABLE dbo.HeavyInsert(

ID int IDENTITY(1,1) NOT NULL

, col1 VARCHAR(50) NOT NULL)

GO

CREATE UNIQUE CLUSTERED INDEX CIX

ON dbo.HeavyInsert (ID)

GO

As you can see there is only one column that is a usual candidate for the clustering key (ID) and a single column where data is inserted, making this a narrow table. Now what happens when 120 concurrent connections insert 100,000 rows each? For that purpose we will use the code below:

SET NOCOUNT ON;

DECLARE @i int = 1

WHILE @i < 100000

BEGIN

INSERT INTO dbo.HeavyInsert (col1) VALUES ('testing heavy')

SET @i += 1

END;

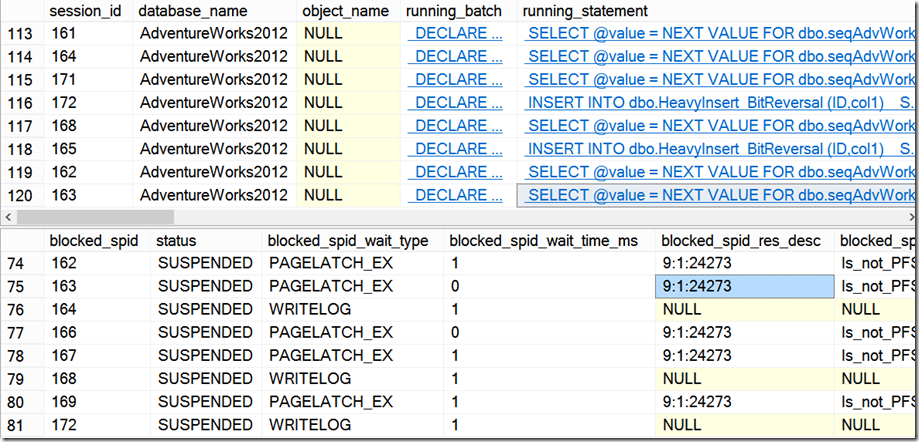

Using one of our SQL Swiss Army Knife scripts to check for locking and blocking, we immediately see that of the 120 concurrent sessions running the same INSERTs (1st half of the image below), 119 are being blocked (2nd half of the image below), and most of these with wait type PAGELATCH_EX:

The wait resource in this case is 9:1:197381, meaning database id 9, file 1 and page 197381. We can take a look inside that page to determine what it is by using the DBCC PAGE command:

DBCC TRACEON(3604)

GO

DBCC PAGE(9,1,197381,0)

GO

Which gives us the page header, were we can see that it is a data page (type 1) belonging to index id 1, on object id 1967280201:

The object id can be matched to an actual object name using the OBJECT_NAME function, which gives us the HeavyInsert table we created:

SELECT OBJECT_NAME(1967280201,9)

GO

On my quad-core hyper-threaded laptop with 16GB of RAM and SSDs, these 11,999,880 inserts took a little over 5 minutes (05:20.623).

So how to minimize this kind of bottleneck?

There is a really good whitepaper that about this contention scenario, with several approaches to dealing with this type of contention that work really well. But I also found another solution that works really well, namely one that can be implemented specifically in SQL Server 2012, and does not use an Enterprise-only feature. But more on that ahead…

These are the alternatives the whitepaper provides:

- Using a GUID as the leading key column of an index. I’m not very fond of that strategy as it presents other challenges in itself, namely for reads in my OLTP system, so I won’t test that one.

- Another alternative is using a non-sequential value as the leading index key (a hash), which will also spread out the inserts. The caveat is that being the lead column, this can also result in less than efficient clustered indexes, namely when the clustering key is also the primary key (and it is also a natural key). But it is something to consider if you are running on a Standard Edition of SQL Server, that cannot support the next option.

- A third alternative implies using hash partitioning with a computed column, which uses table partitioning, and is therefore only usable in Enterprise Editions of SQL Server. Let’s explore this one.

First we create a table with an extra computed column, which is marked as persisted.

IF EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'dbo.HeavyInsert_Hash') AND type in (N'U'))

DROP TABLE dbo.HeavyInsert_Hash

IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'dbo.HeavyInsert_Hash') AND type in (N'U'))

CREATE TABLE dbo.HeavyInsert_Hash(

ID INT IDENTITY(1,1) NOT NULL

, col1 VARCHAR(50) NOT NULL

, HashID AS CONVERT(tinyint, ABS(ID % 8)) PERSISTED NOT NULL)

GO

Now we will create the partitioning function and schema, on where we will create the clustered index:

CREATE PARTITION FUNCTION pf_hash (tinyint) AS RANGE LEFT FOR VALUES (0,1,2,3,4,5,6,7)

GO

CREATE PARTITION SCHEME ps_hash AS PARTITION pf_hash ALL TO ([PRIMARY])

GO

CREATE UNIQUE CLUSTERED INDEX CIX_Hash

ON dbo.HeavyInsert_Hash (ID, HashID) ON ps_hash(HashID)

GO

As you can see, the hash will be the partitioning key, and that will conceptually allow the inserts to be distributed over several partitions in a pseudo-round robin fashion (not exactly, but close enough for the purpose of this discussion). Let’s determine if that is the behavior using the code below, again with the same 120 concurrent connections:

DECLARE @i int = 1

WHILE @i < 100000

BEGIN

INSERT INTO dbo.HeavyInsert_Hash (col1) VALUES ('testing heavy')

SET @i += 1

END;

GO

Checking for contention, we see on the top the same 120 concurrent sessions, and in the bottom we have some 65 blocked spids, but now the prevalent wait is WRITELOG, and much less PAGELATCH_EX contention. For the one seen below, using the same DBCC PAGE we can see it is again a data page, this time in the HeavyInsert_Hash table.

We also see WRITELOG waits are occurring, and when this happens we should check what are the latencies we are getting from the volume that holds the relevant transaction logs.

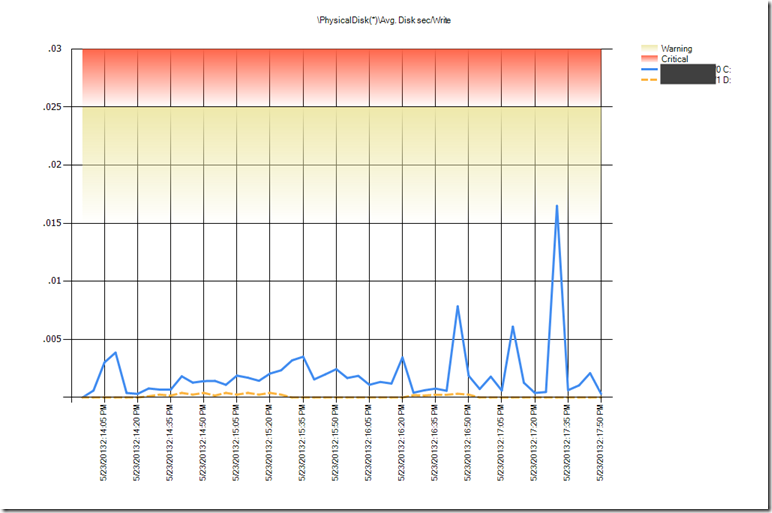

Remember that while I’m running this test on an SSD, it is a laptop, not an enterprise-class storage. Still, for the elapsed time of this test, the counter Avg. Disk sec/Write for the transaction log drive (yes, it’s on C) averaged to just 2ms, although it spiked at 17ms:

This insert cycle took a little over 2 minutes (02:13.055) and we have effectively minimized the PAGELATCH_EX contention in our table, while being able to insert much faster. With the same 11,999,880 inserted records, we can see how these were spread out by partitioning our table, although still writing to the same file and filegroup:

Much better, but I mentioned a different solution that did not require using an Enterprise-only feature, while still taking care of this contention.

The alternative is using bit reversal using the SEQUENCE feature introduced in SQL Server 2012, credits to DangerousDBA (blog) for the original idea.

For this, we will create a sequence to generate the incremental values we need as our clustering key:

IF EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'dbo.seqAdvWorks') AND type in (N'SO'))

DROP SEQUENCE dbo.seqAdvWorks

IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'dbo.seqAdvWorks') AND type in (N'SO'))

CREATE SEQUENCE dbo.seqAdvWorks AS int

START WITH 1

INCREMENT BY 1

GO

And the bit reversal function that will generate an integer value:

IF EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'[dbo].[ufn_BitReverse]') AND type in (N'FN', N'IF', N'TF', N'FS', N'FT'))

DROP FUNCTION [dbo].[ufn_BitReverse]

GO

CREATE FUNCTION ufn_BitReverse (@InputVal int)

RETURNS int

WITH SCHEMABINDING

AS

BEGIN

DECLARE @WorkValue int = @InputVal

DECLARE @Result int = 0;

DECLARE @Counter tinyint = 0;

WHILE @Counter < 31 -- 63 for bigint

BEGIN

SET @Result = @Result*2

IF (@WorkValue&1) = 1

BEGIN

SET @Result = @Result+1

SET @WorkValue = @WorkValue-1

END

SET @WorkValue = @WorkValue/2

SET @Counter = @Counter+1

END

RETURN @Result

END;

GO

And a new table for this test, which is the very same as our initial table:

IF EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'dbo.HeavyInsert_BitReversal') AND type in (N'U'))

DROP TABLE dbo.HeavyInsert_BitReversal

IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'dbo.HeavyInsert_BitReversal') AND type in (N'U'))

CREATE TABLE dbo.HeavyInsert_BitReversal(

ID int NOT NULL

, col1 VARCHAR(50) NOT NULL)

GO

CREATE UNIQUE CLUSTERED INDEX CIX_BitReversal

ON dbo.HeavyInsert_BitReversal (ID)

GO

The integer value generated by the function will be used during the inserts, and conceptually it is random enough to spread out the inserts by different pages. So we have to get the next value for the sequence, and use it in the insert statement:

DECLARE @value int

DECLARE @i int = 1

WHILE @i < 100000

BEGIN

SELECT @value = NEXT VALUE FOR dbo.seqAdvWorks

INSERT INTO dbo.HeavyInsert_BitReversal (ID,col1)

SELECT dbo.ufn_BitReverse(@value),'testing heavy'

SET @i += 1

END;

GO

Once the workload is running on the 120 sessions, while checking for contention we can see that PAGELATCH_EX contention is occurring on resource 9:1:24273:

What we can also determine, for example looking at spid 163, is that the running statement during the latch contention is the NEXT VALUE FOR sequence. Using DBCC PAGE, we can see that the object id is 60, which is the sysobjvalues system table. This table is used to hold metadata while accessing the sequence to get the current value.

So it seems that we are not incurring in PAGELATCH_EX waits in the data insertion itself, but while getting the next value for the sequence. That can be resolved using the sequence cache for a large value, like below:

IF EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'dbo.seqAdvWorks') AND type in (N'SO'))

DROP SEQUENCE dbo.seqAdvWorks

IF NOT EXISTS (SELECT * FROM sys.objects WHERE object_id = OBJECT_ID(N'dbo.seqAdvWorks') AND type in (N'SO'))

CREATE SEQUENCE dbo.seqAdvWorks AS int

START WITH 1

INCREMENT BY 1

START WITH 1

CACHE 10000

GO

We have set the value at 10,000 (mileage may vary) and this way we will have less frequent access to the sysobjvalues system table.

Running the same insert workload we have no visible contention as before. For the same 11,999,880 inserted records, the cycle took a little over 4 minutes (04:00.961), so I find this to be an elegant solution for addressing PAGELATCH_EX contention in this scenario.

As a summary, if we analyze the Wait Statistics: Page Latch waits performance counter for the duration of the tests, we find that while the hash partitioning scenario provides better performance, the bit reversal gets the lowest PAGELATCH waits.

Min |

Avg |

Max |

Time (mi:ss.ms) |

|

HeavyInsert |

0 |

226,726 |

339,493 |

05:20.623 |

HeavyInsert_Hash |

0 |

14,424 |

52,879 |

02:13.055 |

HeavyInsert_BitReversal |

0 |

2,124 |

13,003 |

04:00.961 |

Hope you find it useful!

Pedro Lopes (@SQLPedro)

Disclaimer: I hope that the information on these pages is valuable to you. Your use of the information contained in these pages, however, is at your sole risk. All information on these pages is provided "as -is", without any warranty, whether express or implied, of its accuracy, completeness, fitness for a particular purpose, title or non-infringement, and none of the third-party products or information mentioned in the work are authored, recommended, supported or guaranteed by Ezequiel. Further, Ezequiel shall not be liable for any damages you may sustain by using this information, whether direct, indirect, special, incidental or consequential, even if it has been advised of the possibility of such damages.

Comments

- Anonymous

May 24, 2013

Example using partitioned index - isn't better to create a partitioned table with index on the partition (re special guidelines fit partitioned indexes)? - Anonymous

May 25, 2013

Hello Brad,The unique clustered index is being created on the partition scheme, so could you please clarify what you mean? - Anonymous

June 09, 2013

The comment has been removed - Anonymous

June 10, 2013

Hi Kimberly,Indeed good points you make there.Thanks for the feedback!PL - Anonymous

October 22, 2013

The comment has been removed - Anonymous

October 23, 2013

Hi Colin,If you look at technet.microsoft.com/.../ms187745.aspx, you see that an int is 4 bytes (range -2^31 to 2^31-1), and a bigint 8 bytes (-2^63 to 2^63-1).Can you see why we need to iterate 31 or 63 times? It's equal to the number to the power of 31 or 63 depending on whether we are dealing with int or bigint. - Anonymous

October 25, 2013

thats precisely the bit i don't understand if you pass in the identity Value of table, (my math obviously isn't up to it.) if i pass in 0 i get result 0, 0^31 being 0, if i pass in 1 i get 1^31 fine, i then pass in the next int 2 and i get 2^31 the max value of an int, if i then pass in 3 don't i get a result of 3^31 and exceed the max permitted value of an int ? - Anonymous

October 25, 2013

Hi Colin,The purpose is not to multiply the Identity (or Sequence number in our case) and perform an exponentiation to 32 like you suggest, but rather take the bitmask that corresponds to our Identity, reverse that bitmask, and turn that bitmask to an integer again.For my explanation, keep in mind that an integer (int) has 32 bits, hence the 32 iterations (0 to 31).Let's now look at the next before last available number in an integer - 2147483646. This has a bitmask of 1111111111111111111111111111110. Reversing the bitmask we get 0111111111111111111111111111111, which back to decimal turns to our result of 1073741823.How is it done? We start the iteration first by multiplying our result by 2. The 1st time this is 0 (we have set it like that), so 02 = 0. Note that for such a large integer, at the 32nd iteration, this is 5368709112 = 1073741822 - remember this one.But let's get back to subject. Then we do a bitwise compare of the target Identity (called work value) and 1:- If the bitwise comparison is 1, then we increment that result2 by 1, and decrement our work value by 1.- If the bitwise comparison is 0, we skip the above logic.This is what is allowing us to work on the reverse bitmask.In the first iteration this bitwise comparison is 0 (recall the reverse bitmask for our Identity is 0111111111111111111111111111111).After that, we take our work value and divide by 2, and take that value to the new iteration.In our bitmask, the 2nd iteration the bitwise comparison is now 1 (and this goes on all the way to the 32nd iteration).So now we follow the logic: increment result2 by 1 (02 + 1 = 1) and decrement the work value by 1 (1073741823-1 = 1073741822).Now divide the work value by 2 (1073741822/2 = 536870911).On the 2nd iteration we do it again (bitwise is again 1). Increment result2 by 1 (12 + 1 = 3) and decrement the work value by 1 (536870911-1 = 536870910). Now divide the work value by 2 (536870910/2 = 268435455).And so on until we get the final iteration:Our bitwise comparison is still 1, so we take work value which is now 1. Increment result2 by 1 (536870911*2 + 1 = 1073741823) and decrement the work value by 1 (1-1 = 0).So we get our reversed bitmask decimal value, which is 1073741823.Take the following examples for added clarification:Identity_valueBinary Reverse_Binary New_value 347 0000000000000000000000101011011 1101101010000000000000000000000 1832910848 348 0000000000000000000000101011100 0011101010000000000000000000000 490733568 349 0000000000000000000000101011101 1011101010000000000000000000000 1564475392Hope you could now understand what we are doing.Cheers - Anonymous

July 30, 2014

I wonder - looping scalar function. Enough to explain why this "wins" with least latches - because most workloads are bottlenecked in the FN? Enter a deterministic inlinable bit reversal?IF OBJECT_ID(N'dbo.HeavyInsert_BitReversalInline',N'U') IS NOT NULL

CREATE TABLE dbo.HeavyInsert_BitReversalInline (DROP TABLE dbo.HeavyInsert_BitReversalInline;

);<your original workload>This actually runs on 2008R2 standard, but I haven't got the resources ready to benchmark this.ID int IDENTITY, col1 varchar(24), IDRev AS CASE ID & 1 WHEN 0 THEN 0 ELSE CAST(0x80000000 AS int) END | (((CAST(ID & 0xFE AS bigint) * 0x0202020202) & 0x010884422010) % 1023) * CAST(0x1000000 AS int) | (((CAST((ID & 0xFF00) / 0x100 AS bigint) * 0x0202020202) & 0x010884422010) % 1023) * CAST(0x10000 AS int) | (((CAST((ID & 0xFF0000) / 0x10000 AS bigint) * 0x0202020202) & 0x010884422010) % 1023) * CAST(0x100 AS int) | (((CAST((ID & 0x7F000000) / 0x1000000 AS bigint) * 0x0202020202) & 0x010884422010) % 1023) | CASE ID & 0x80000000 WHEN 0 THEN 0 ELSE 1 END, CONSTRAINT Tst_PK PRIMARY KEY NONCLUSTERED (ID), CONSTRAINT Tst_CL UNIQUE CLUSTERED (IDRev)