Nuta

Dostęp do tej strony wymaga autoryzacji. Możesz spróbować zalogować się lub zmienić katalogi.

Dostęp do tej strony wymaga autoryzacji. Możesz spróbować zmienić katalogi.

In this blog post, I will use matrix multiplication as an example to show you how to chunk big data to run your algorithm on an accelerator using C++ AMP. I will discuss and compare a few data chunking strategies.

Background

Most GPUs only have limited memory resource. For example AMD HD Radeon 5870 and Nvidia GTX 280 both have 1GB memory. To run applications with big data which doesn’t fit in the GPU memory, you have to chunk and stream the data to the GPU.

To chunk data, you need to keep performance in mind. Copying data between CPU memory and GPU memory is expensive because it has to go through the PCI express bus. So reducing the copy overhead is a major consideration when deciding the chunk strategies.

Dependent on the problems you are solving, and the algorithms you are using, you might need to consider different strategies in order to achieve better performance. Some algorithms have data reuse and some do not. Sometimes, you need to restructure your algorithm to relax data dependence or to change data access patterns when streaming the data in.

For example, while the chunking approach to matrices addition is straight forward, the approach to matrix transpose is relative complicated. While the approach to matrix multiplication requires considering data reuse, the approaches to LU and Cholesky decomposition require considering data dependence between triangular solver and GEMM. You might also need to consider data layout. Is the data continuous in memory? If not, do you need to pack the data? What are the best configurations of your algorithm, such as the tile size and alignment requirement, if the problem size can fit in the GPU? Is the chunking strategy related to the configuration?

In the following, I will use matrix multiplication as an example to discuss four chunking strategies.

Strategies

Assume we want to multiply matrix A and matrix B and assign the result to a matrix C. Assume none of the matrices can fit in the GPU memory.

C(MxN) = A(MxK) * B(KxN)

Strategy1

We chunk the matrices along all the dimensions using the same tile size (see the pseudo code below). For each iteration of the matrix_multiplication, the tiles of A and B are copied to the GPU. The tile of C is allocated on the GPU, and it is reused for each iteration. The result in the tile C is copied back to the CPU.

1: for (i = 0; i < M; i += tile_size) {

2: for (j = 0; j < N; i += tile_size) {

3: for (k = 0; k < K; k += tile_size) {

4: copy_tile_a_to_GPU( tile_origin(i,k) );

5: copy_tile_b_to_GPU( tile_origin(k,j) );

6: matrix_multiply(tile_a,tile_b,tile_c);

7: }

8: copy_tile_c_to_CPU( tile_origin(i,j) );

9: }

10: }

Strategy2

We chunk the matrices only along two dimensions (see the pseudo code below). For each iteration of the matrix_multiplication, the tile of B is copied to the GPU, the tile of A is reused and the result in the tile of C is copied back to the CPU.

1: for (i = 0; i < M; i += tile_size) {

2: copy_tile_a_to_GPU( tile_origin(i,0) );

3:

4: for (j = 0; j < N; i += tile_size) {

5: copy_tile_b_to_GPU( tile_origin(0,j) );

6:

7: matrix_multiply(tile_a,tile_b,tile_c);

8: copy_tile_c_to_CPU( tile_origin(i,j) );

9: }

10: }

Strategy3

This is a modification of strategy2. Instead of copying back a tile of C from the GPU to the CPU for each iteration of the matrix_multiplication, we allocate a panel tile for C on the GPU that can store multiple iterations of the computation. We copy the panel tile C back to the CPU when the computation complete. Note that, compared to Strategy2, while the total number of copies for C is reduced, the total amount of data copied back to the CPU is the same (see the pseudo code below).

1: for (i = 0; i < M; i += tile_size) {

2: copy_tile_a_to_GPU( tile_origin(i,0) );

3:

4: for (j = 0; j < N; i += tile_size) {

5: copy_tile_b_to_GPU( tile_origin(0,j) );

6:

7: matrix_multiply(tile_a,tile_b,tile_c);

8: }

9: copy_tile_c_to_CPU( tile_origin(i,0) );

10: }

Strategy4

This is another modification of strategy2. Instead of copying a tile of B to the GPU for each iteration of the matrix_multiplication, we copy a tile of A. This modification is effective when the memory layout of the matrices are row-major (see the pseudo code below).

1: for (j = 0; j < N; j += tile_size) {

2: copy_tile_b_to_GPU( tile_origin(0,j) );

3:

4: for (i = 0; i < M; i += tile_size) {

5: copy_tile_a_to_GPU( tile_origin(i,0) );

6:

7: matrix_multiply(tile_a,tile_b,tile_c);

8: copy_tile_c_to_CPU( tile_origin(i,j) );

9: }

10: }

Cost Analysis

The cost of each strategy comes from two parts: 1) copy overhead to transfer data between CPU and GPU, 2) data packing overhead where a tile has to be packed into a temporary array before it can be copied to the GPU if the tile is not continuous in memory.

In the following analysis, we assume the available GPU memory size is M, and the dimension of the three matrices is n. Assume M = n2 / 2. Then we are in a situation where none of the three matrices can fit in the GPU memory. We also assume all the matrices are row-major, which is the common case.

Strategy1: assume the tile size is T1.

- copy_overhead = A:n3 /T1 + B: n3/ T1+ C:n2 = 2n3/ T1+ n2

- pack_overhead = A:n3 /T1 + B: n3/ T1

The tiles of A and B are packed for n/ T1 times before being transferred to the GPU.

Strategy2: assume the tile size is T2.

- copy_overhead = A:n2 + B:n3/T2 + C:n2

- pack_overhead = B:n3/T2

Compared to Strategy1, given T2<=T1, the copy overhead for A is reduced while the overhead for B is increased. There is no pack overhead for A because the tile of A is continuous in memory.

Strategy3: the overhead is the same as for Strategy2 with smaller tile size. However, some of the GPU memory is not used efficiently because the GPU memory allocated for the panel tile of C is larger than the computation required.

Strategy4: the copy overhead is same as for Strategy2. But the pack overhead is n/T2 times less.

Implementation & Results

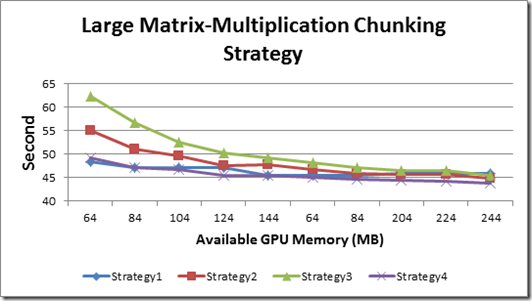

I implemented the four strategies and have attached the project for you to download (LargeMatrixMultiplication). For simplicity, I assume the matrices are square, and the matrix dimension n is multiple of 32, which is the tile size used for the tiled matrix multiplication kernel. I assumed the available GPU memory is from 64MB to 244MB, and assumed each matrix size is 1024x1024. So the total data size is 1.2GB.

In C++ AMP, you can query an accelerator’s property concurrency::accelerator.dedicated_memory, which returns the size of the GPU dedicated memory. That is the total physical memory, not necessary the available memory. Dependent on whether the GPU is exclusively used by you application or not, you might only be able to allocate a portion of the dedicated memory.

The following graph shows the performance results running on ATI HD Radeon 5870. The host machine is Alienware Aurora with 12.GB RAM, 3.2GHz i7 QuadCore, 64-bit Win7.

Overall, Strategy4 performs the best, and Strategy3 performs the worst. When the ratio of the data size to the GPU memory size is larger, Strategy4 performs better. When the ratio is decreased, the performance gap among the strategies is narrowed.

Summary

Here I have shown how to use C++ AMP to work on large data on GPU, with the discussion on issues to be considered when designing the chunking strategy. This is example code and has not been tuned to the any significant extent. Two areas can be further experimented. One is to use asynchronous copy to amortize the copy overhead by overlapping copy with computation. The second is to utilize your CPU computation power by partitioning data between CPU and GPU. We will cover those in future posts. As usual your feedback is welcome below or in our MSDN forum.