Considerations around validation errors 41305 and 41325 on memory optimized tables with foreign keys

Reviewed by: Jos de Bruijn, Joe Sack, Mike Weiner, Mike Ruthruff, Kun Cheng

Transactions on memory optimized tables in SQL Server 2014, SQL Server 2016, and Azure SQL Database use an optimistic concurrency model with multi-version concurrency control. Each transaction sees its own transactionally consistent version of the rows, and the inherent assumption is that there are no conflicts. Unlike on-disk tables, there is no locking; write-write conflicts are detected (error 41302) when concurrent transactions update the same rows. Because locks are not taken even to maintain higher isolation levels, validation has to occur at transaction commit time.

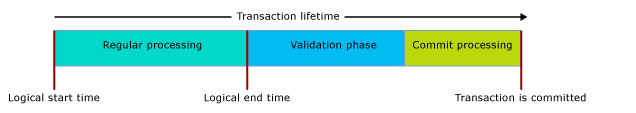

To recap, a transaction's lifetime has three phases, as shown in the diagram. This post focuses on the validation phase.

For more details, see the Books Online article: https://msdn.microsoft.com/en-us/library/dn133169.aspx

On transaction commit, you have to validate that no other transaction has updated or changed the rows you have read if using the Repeatable Read isolation level, or that no phantom rows were inserted into the range that you have read if the isolation level is Serializable. If all validation succeeds, then the transaction commits.

The validation errors are summarized in the article Guidelines for Retry Logic for Transactions on Memory-Optimized Tables:

- 41305. The current transaction failed to commit due to a repeatable read validation failure.

- 41325. The current transaction failed to commit due to a serializable validation failure.

While testing a customer workload, we encountered a delete transaction running under the Snapshot isolation level that was still failing with one of the validation errors listed above (41305). This was puzzling because, under the Snapshot isolation level, you don't expect to fail with repeatable read validation errors.

SQL Server 2016 introduced support for foreign keys on memory optimized tables, along with many other surface area improvements for In-Memory OLTP. Foreign keys introduce a difference in validation behavior that you may not expect. If you update or delete data in a table that has foreign key relationships, validation of those relationships has to happen under a higher isolation level to ensure that no rows are changed in a way that violates the constraint. This means that if a constraint is violated due to DML performed in concurrent transactions, the commit fails with a validation failure. On-disk tables, on the other hand, acquire row locks or key locks on the tables involved in foreign key constraints, and concurrent transactions trying to delete or update those rows are blocked. Memory optimized tables are lock free, so validation at the right isolation level is required to ensure correctness.

Let's take a look at a simple master/detail table relationship. Scripts to reproduce are at the end of the article in the appendix.

- tblMaster is the master table

- tblDetails is the child table; its EventId column has a foreign key that references the Id column of tblMaster

Exhibit 1: Validation errors due to lack of supporting indexes

In the sequence below, the statements themselves were executed at the Snapshot isolation level, yet the error we get is a "repeatable read" validation error, which we would not necessarily expect. In addition, Transaction 2 is updating a row with EventID = 4, which isn't one of the rows that Transaction 1 is deleting, so we did not expect this error. This is an example of a repeatable read validation error that was avoidable with proper indexing. When it comes to foreign keys, always add an index on the foreign key column to support lookups, as we will see shortly.

| Sequence | Transaction 1 | Transaction 2 |
|---|---|---|
| 1 | BEGIN TRAN | |
| 2 | DELETE FROM tblMaster WITH (SNAPSHOT) WHERE ID = 5 | |
| 3 | | UPDATE tbldetails WITH (SNAPSHOT) SET EventName = 'Event22' WHERE EventID = 4 and languageID = 1 |
| 4 | COMMIT | |
| 5 | Msg 41305, Level 16, State 0, Line 18 The current transaction failed to commit due to a repeatable read validation failure. | |

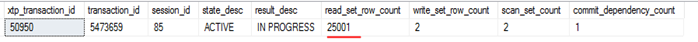

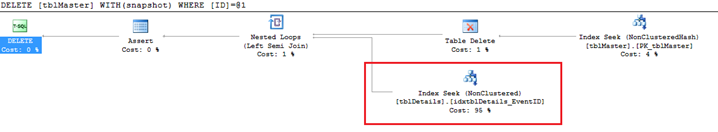

Given the errors, we looked at the execution plan of the delete statement, which shed some light on the root cause. In the plan, you can see a scan on the tblDetails table that reads the entire table and then applies a filter to get the few rows affected. Since the entire table is read, the whole table becomes the "read set" of the delete. Updating any row in that read set results in a repeatable read validation failure, even though the update does not technically violate the constraint.

If you query sys.dm_db_xtp_transactions before the transaction commits, its output shows the same read set.

SELECT xtp_transaction_id,transaction_id,session_id

,state_desc,result_desc,read_set_row_count,write_set_row_count

,scan_set_count,commit_dependency_count

FROM sys.dm_db_xtp_transactions

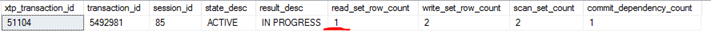

The plan was suboptimal and acquired a larger read set than necessary because a supporting index was missing. Creating an index on the foreign key column produced a different plan with a much smaller read set, because the entire table is no longer scanned.

ALTER TABLE tblDetails

ADD INDEX idxtblDetails_EventID NONCLUSTERED(EventID)

Adding this index removed these repeatable read validation errors resulting from rows being updated that were not in the range being deleted. This was a case of an avoidable validation error.
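To look for other foreign keys that might have the same problem, a catalog query along the following lines can help. This is a minimal sketch, not a definitive check: it assumes single-column foreign keys (it only inspects the first foreign key column) and simply reports memory optimized child tables where no index has that column as its leading key.

```sql
-- Sketch: list foreign keys on memory optimized tables whose first
-- foreign key column is not the leading key column of any index.
-- Assumption: multi-column foreign keys need a closer, manual look.
SELECT fk.name                                              AS foreign_key_name,
       OBJECT_NAME(fkc.parent_object_id)                    AS child_table,
       COL_NAME(fkc.parent_object_id, fkc.parent_column_id) AS fk_column
FROM sys.foreign_keys AS fk
JOIN sys.foreign_key_columns AS fkc
    ON fkc.constraint_object_id = fk.object_id
JOIN sys.tables AS t
    ON t.object_id = fk.parent_object_id
WHERE t.is_memory_optimized = 1
  AND fkc.constraint_column_id = 1          -- first column of the foreign key
  AND NOT EXISTS (
        SELECT 1
        FROM sys.index_columns AS ic
        WHERE ic.object_id   = fkc.parent_object_id
          AND ic.column_id   = fkc.parent_column_id
          AND ic.key_ordinal = 1            -- leading key column of some index
      );
```

Run against the sample schema before the index is added, this should list FK_tblDetails_tblMaster on tblDetails (EventId).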

Exhibit 2: Serializable validation errors due to DML pattern

In the sequence below, the delete is done with SNAPSHOT isolation level, yet the error message that we get indicates a serializable validation error. This validation error occurs as we are inserting a value into the child table with the same key that we are deleting off the parent which would end up being a violation of the constraint. This is an expected validation error and is fundamentally due to application data entry order.

| Sequence | Transaction 1 | Transaction 2 |
|---|---|---|
| 1 | BEGIN TRAN | |
| 2 | DELETE FROM tblMaster WITH (SNAPSHOT) WHERE ID = 5 | |
| 3 | | INSERT INTO tbldetails VALUES (5,900001,'Event1','US') |
| 4 | COMMIT | |
| 5 | Msg 41325, Level 16, State 0, Line 39 The current transaction failed to commit due to a serializable validation failure. | |

If you did the same sequence of operations on memory optimized tables serially from one transaction, you would get the following behavior where the insert would fail as it conflicted with the key in the master table.

| Sequence | Transaction 1 |
|---|---|
| 1 | BEGIN TRAN |
| 2 | DELETE FROM tblMaster WITH (SNAPSHOT) WHERE ID = 5 |
| 3 | INSERT INTO tbldetails VALUES (5,900001,'Event1','US') |
| 4 | COMMIT |
| 5 | Msg 547, Level 16, State 0, Line 56 The INSERT statement conflicted with the FOREIGN KEY constraint "FK_tblDetails_tblMaster". The conflict occurred in database "HKCCI", table "dbo.tblMaster", column 'Id'. The statement has been terminated. |

If we did this same set of operations on disk-based tables at the default isolation level, READ COMMITTED, the behavior would be as shown below. In this case the INSERT is blocked while the deleting transaction is open, and once the delete commits it is the INSERT that fails.

| Sequence | Transaction 1 (disk based) | Transaction 2 (disk based) |
|---|---|---|
| 1 | BEGIN TRAN | |
| 2 | DELETE FROM tblMaster_ondisk WHERE ID = 5 | |
| 3 | | INSERT INTO tbldetails_ondisk VALUES (5,900001,'Event1','US') --> Blocked |
| 4 | COMMIT | |
| 5 | | Msg 547, Level 16, State 0, Line 11 The INSERT statement conflicted with the FOREIGN KEY constraint "FK_tblDetails_tblMaster_ondisk". The conflict occurred in database "HKCCI", table "dbo.tblMaster_ondisk", column 'Id'. |

Exhibit 3: Repeatable read validation errors due to DML pattern

Below is an example of an expected repeatable read validation error. In this case, a value is inserted into the read set of the rows affected by the delete, specifically into the range being deleted. This is due to how the application inserts data, and it has to be handled with retries as described in Guidelines for Retry Logic for Transactions on Memory-Optimized Tables.

| Sequence | Transaction 1 (in-memory) | Transaction 2 (in-memory) |
|---|---|---|
| 1 | BEGIN TRAN | |
| 2 | INSERT tbldetails (EventID) VALUES (5) | |
| 3 | | DELETE FROM tblMaster WITH (SNAPSHOT) WHERE ID = 5 |
| 4 | COMMIT | |
| 5 | Msg 41305, Level 16, State 0, Line 18 The current transaction failed to commit due to a repeatable read validation failure. | |

Doing this same sequence on an on-disk table results in the delete being blocked and finally failing with a constraint violation error.

| Sequence | Transaction 1 (on disk) | Transaction 2 (on disk) |
|---|---|---|
| 1 | BEGIN TRAN | |
| 2 | INSERT tbldetails_ondisk (EventID) VALUES (5) | |
| 3 | | DELETE FROM tblMaster_ondisk WHERE ID = 5 --> This statement is blocked |
| 4 | COMMIT | |
| 5 | | Msg 547, Level 16, State 0, Line 20 The DELETE statement conflicted with the REFERENCE constraint "FK_tblDetails_tblMaster_ondisk". The conflict occurred in database "HKCCI", table "dbo.tblDetails_ondisk", column 'EventId' |

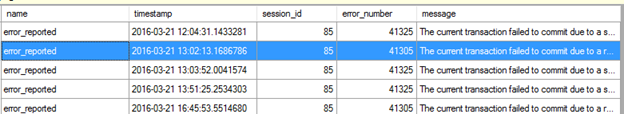

In either case, an Extended Events session on the error_reported event, filtered on error_number, can help pinpoint where in your application code validation errors are being raised so that you can take the appropriate action.

CREATE EVENT SESSION [TraceUserErrors_Validation] ON SERVER

ADD EVENT sqlserver.error_reported(

ACTION(package0.event_sequence,sqlserver.client_app_name,sqlserver.client_hostname

,sqlserver.query_hash_signed,sqlserver.query_plan_hash_signed,sqlserver.session_id

,sqlserver.sql_text,sqlserver.tsql_frame)

WHERE ([package0].[equal_int64]([error_number],(41325))

OR [package0].[equal_int64]([error_number],(41305))

OR [error_number]=(41300)

OR [error_number]=(41301)))

ADD TARGET package0.event_file (SET filename=N'c:\Temp\TraceUserErrors_Validation.xel'

,max_file_size=(250),max_rollover_files=(2)),

ADD TARGET package0.histogram (SET filtering_event_name=N'sqlserver.error_reported'

,source=N'error_number',source_type=(0))

WITH (EVENT_RETENTION_MODE=ALLOW_SINGLE_EVENT_LOSS)

GO

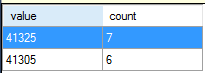

The output of the histogram target gives a quick view of the number of validation errors, grouped by error number.
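If you prefer a query over the SSMS viewer, the histogram can also be read from the session's target data. The following is a minimal sketch that assumes the session defined above exists on the server and that it is acceptable to start it; the column aliases are illustrative.

```sql
-- Start the session defined above (it is created in a stopped state).
ALTER EVENT SESSION [TraceUserErrors_Validation] ON SERVER STATE = START;
GO

-- Sketch: shred the histogram target XML into one row per error number.
SELECT x.slot.value('(value)[1]', 'int') AS error_number,
       x.slot.value('@count', 'bigint')  AS occurrences
FROM (
        SELECT CAST(t.target_data AS xml) AS target_xml
        FROM sys.dm_xe_sessions AS s
        JOIN sys.dm_xe_session_targets AS t
            ON t.event_session_address = s.address
        WHERE s.name = N'TraceUserErrors_Validation'
          AND t.target_name = N'histogram'
     ) AS h
CROSS APPLY h.target_xml.nodes('/HistogramTarget/Slot') AS x(slot)
ORDER BY occurrences DESC;
```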

To summarize: with the introduction of foreign key support for In-Memory OLTP, it is imperative to index foreign key columns appropriately. This keeps joins efficient not only for explicitly defined joins but also for the validations that happen under the covers when you insert, update, or delete a row, and it avoids unexpected validation errors.

For other validation errors, given the optimistic concurrency model, applications using memory optimized tables should also include retry logic for valid cases of validation errors, as specified in the article Guidelines for Retry Logic for Transactions on Memory-Optimized Tables.
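As a rough illustration of that retry guidance, the sketch below wraps the parent delete from the exhibits in a T-SQL retry loop. The retry count and delay are arbitrary assumptions, and the list of retryable errors is limited to the conflict and validation errors discussed here (41302, 41305, 41325) plus the commit dependency failure 41301; adjust it for your workload. Retry logic can also live in the client application instead.

```sql
-- Sketch only: retry the transaction when a conflict or commit-time
-- validation error occurs. @retry and the delay are hypothetical values.
DECLARE @retry int = 5;

WHILE @retry > 0
BEGIN
    BEGIN TRY
        BEGIN TRANSACTION;

        DELETE FROM dbo.tblMaster WITH (SNAPSHOT) WHERE Id = 5;

        COMMIT TRANSACTION;   -- validation errors 41305/41325 surface here
        SET @retry = 0;       -- success: leave the loop
    END TRY
    BEGIN CATCH
        SET @retry -= 1;

        IF @@TRANCOUNT > 0
            ROLLBACK TRANSACTION;

        -- Give up when retries are exhausted or the error is not retryable
        -- (for example a genuine foreign key violation, error 547).
        IF @retry = 0 OR ERROR_NUMBER() NOT IN (41302, 41305, 41325, 41301)
        BEGIN
            PRINT 'Not retrying; rethrowing the original error.';
            THROW;
        END;

        WAITFOR DELAY '00:00:00.010';   -- brief pause before the next attempt
    END CATCH;
END;
```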

Appendix: TSQL Scripts to reproduce the behavior

Setup Script:

USE [master]

GO

/****** Object: Database [HKCCI] Script Date: 3/24/2016 9:37:53 AM ******/

CREATE DATABASE InMemoryOLTP

ON PRIMARY ( NAME = N'HKCCI', FILENAME = N'c:\temp\InMemoryOLTP.mdf'),

FILEGROUP [HKCCI_InMemory] CONTAINS MEMORY_OPTIMIZED_DATA DEFAULT

(NAME = N'HKCCI_InMemory', FILENAME = N'c:\temp\InMemoryOLTP' , MAXSIZE = UNLIMITED)

LOG ON ( NAME = N'HKCCI_log', FILENAME = N'c:\temp\InMemoryOLTP_log.ldf')

GO

USE InMemoryOLTP

go

-- Master

SET ANSI_NULLS ON

GO

SET QUOTED_IDENTIFIER ON

GO

CREATE TABLE [dbo].[tblMaster]

(

[Id] [int] NOT NULL,

[ExternalId] [int] NOT NULL,

[IsActive] [bit] NOT NULL,

[UTCStartTime] [datetime2](7) NOT NULL,

INDEX [NC_tblMaster_ExternalId] NONCLUSTERED ([ExternalId] ASC),

CONSTRAINT [PK_tblMaster] PRIMARY KEY NONCLUSTERED HASH ([Id]) WITH ( BUCKET_COUNT = 32000),

) WITH ( MEMORY_OPTIMIZED = ON , DURABILITY = SCHEMA_AND_DATA )

GO

-- Details table

CREATE TABLE [dbo].[tblDetails]

(

[DetailID] int identity,

[EventId] [int] NOT NULL,

[LanguageId] [int] NOT NULL,

[EventName] [nvarchar](512) COLLATE SQL_Latin1_General_CP1_CI_AS NULL,

[CountryName] [nvarchar](512) COLLATE SQL_Latin1_General_CP1_CI_AS NULL,

INDEX [NC_tblDetails_LanguageId] NONCLUSTERED ([LanguageId] ASC),

CONSTRAINT [PK_tblDetails] PRIMARY KEY NONCLUSTERED HASH ([DetailID]) WITH ( BUCKET_COUNT = 32768),

) WITH ( MEMORY_OPTIMIZED = ON , DURABILITY = SCHEMA_AND_DATA )

GO

ALTER TABLE [dbo].[tblDetails] WITH CHECK ADD CONSTRAINT [FK_tblDetails_tblMaster] FOREIGN KEY([EventId])

REFERENCES [dbo].[tblMaster] ([Id])

GO

--insert some data

INSERT INTO tblmaster VALUES ( 1,1,1,getutcdate())

INSERT INTO tblmaster VALUES ( 2,2,1,getutcdate())

INSERT INTO tblmaster VALUES ( 3,3,1,getutcdate())

INSERT INTO tblmaster VALUES ( 4,4,1,getutcdate())

INSERT INTO tblmaster VALUES ( 5,5,1,getutcdate())

INSERT INTO tblmaster VALUES ( 6,6,1,getutcdate())

GO

-- Translations

WITH Nbrs_3( n ) AS ( SELECT 1 UNION SELECT 0 ),

Nbrs_2( n ) AS ( SELECT 1 FROM Nbrs_3 n1 CROSS JOIN Nbrs_3 n2 ),

Nbrs_1( n ) AS ( SELECT 1 FROM Nbrs_2 n1 CROSS JOIN Nbrs_2 n2 ),

Nbrs_0( n ) AS ( SELECT 1 FROM Nbrs_1 n1 CROSS JOIN Nbrs_1 n2 ),

Nbrs ( n ) AS ( SELECT 1 FROM Nbrs_0 n1 CROSS JOIN Nbrs_0 n2 )

INSERT INTO tblDetails SELECT top 5000 1,ROW_NUMBER() OVER (ORDER BY NEWID()) as LanguageID, 'Event1','AU' FROM Nbrs

GO

WITH Nbrs_3( n ) AS ( SELECT 1 UNION SELECT 0 ),

Nbrs_2( n ) AS ( SELECT 1 FROM Nbrs_3 n1 CROSS JOIN Nbrs_3 n2 ),

Nbrs_1( n ) AS ( SELECT 1 FROM Nbrs_2 n1 CROSS JOIN Nbrs_2 n2 ),

Nbrs_0( n ) AS ( SELECT 1 FROM Nbrs_1 n1 CROSS JOIN Nbrs_1 n2 ),

Nbrs ( n ) AS ( SELECT 1 FROM Nbrs_0 n1 CROSS JOIN Nbrs_0 n2 )

INSERT INTO tblDetails SELECT top 5000 2,ROW_NUMBER() OVER (ORDER BY NEWID()) as LanguageID, 'Event2','AU' FROM Nbrs

GO

--insert into EventTranslations values(2,3,'Event1','FR')

WITH Nbrs_3( n ) AS ( SELECT 1 UNION SELECT 0 ),

Nbrs_2( n ) AS ( SELECT 1 FROM Nbrs_3 n1 CROSS JOIN Nbrs_3 n2 ),

Nbrs_1( n ) AS ( SELECT 1 FROM Nbrs_2 n1 CROSS JOIN Nbrs_2 n2 ),

Nbrs_0( n ) AS ( SELECT 1 FROM Nbrs_1 n1 CROSS JOIN Nbrs_1 n2 ),

Nbrs ( n ) AS ( SELECT 1 FROM Nbrs_0 n1 CROSS JOIN Nbrs_0 n2 )

INSERT INTO tblDetails SELECT top 5000 3,ROW_NUMBER() OVER (ORDER BY NEWID()) as LanguageID, 'Event3','CA' FROM Nbrs

GO

WITH Nbrs_3( n ) AS ( SELECT 1 UNION SELECT 0 ),

Nbrs_2( n ) AS ( SELECT 1 FROM Nbrs_3 n1 CROSS JOIN Nbrs_3 n2 ),

Nbrs_1( n ) AS ( SELECT 1 FROM Nbrs_2 n1 CROSS JOIN Nbrs_2 n2 ),

Nbrs_0( n ) AS ( SELECT 1 FROM Nbrs_1 n1 CROSS JOIN Nbrs_1 n2 ),

Nbrs ( n ) AS ( SELECT 1 FROM Nbrs_0 n1 CROSS JOIN Nbrs_0 n2 )

INSERT INTO tblDetails

SELECT TOP 10000 4,ROW_NUMBER() OVER (ORDER BY NEWID()) as LanguageID, 'Event4','UK' FROM Nbrs

GO

SELECT COUNT(*) from tblDetails

GO

Validation Transaction 1 script:

--**********Step 1 ( Validation_transaction1.sql)

-- Open the Tran and Delete Parent Table

-- You can enable the actual execution plan for this; it shows a scan on tblDetails

BEGIN TRAN

DELETE FROM tblMaster WITH (SNAPSHOT) WHERE ID = 5

GO

-- Now Execute Step2 from Validation_Transaction2.sql file

---************** Step 3

-- you should hit the error

/*

Msg 41305, Level 16, State 0, Line 6

The current transaction failed to commit due to a repeatable read validation failure. */

COMMIT

GO

---************** Step 4 ( Validation_transaction1.sql)

--- FIX and repeat Step 1 , Step 2, and Step 3.

ALTER TABLE tblDetails

ADD INDEX idxtblDetails_EventID NONCLUSTERED(EventID)

GO

-- ************** Step 5 ( Validation_transaction1.sql)

-- Let's try to repro the Serializable error

DELETE FROM tblDetails WITH (SNAPSHOT) WHERE EventID = 1

BEGIN TRAN

DELETE FROM tblMaster WITH (SNAPSHOT) WHERE ID = 1

GO

-- Now Execute ***Step6 **** from Validation_transaction2.sql

--******Step 7 ( Validation_transaction1.sql)

/*

Msg 41325, Level 16, State 0, Line 31

The current transaction failed to commit due to a serializable validation failure. */

COMMIT

GO

---************** Step 8 ( Validation_transaction1.sql)

delete from tblDetails where eventid = 5

delete from tblmaster where id = 5

go

insert into tblMaster WITH (SNAPSHOT) values(5,2,1,getdate())

GO

BEGIN TRAN

INSERT tbldetails WITH (SNAPSHOT) VALUES(5,-2,'Event111','US')

GO

-- Now execute Step 9 from Validation_transaction2.sql

--********** Step 10 ( Validation_transaction1.sql)

/*

Msg 41305, Level 16, State 0, Line 58

The current transaction failed to commit due to a repeatable read validation failure.

*/

COMMIT

GO

Validation Transaction 2 script:

--***** Step 2 ( Validation transaction 2.sql)

UPDATE tbldetails WITH (SNAPSHOT)

SET EventName = 'Event221'

WHERE EventID = 4 and languageID = 1

--- Now Execute Step 3 from the Validation_transaction1.sql file

--********* Step 6 ( Validation_transaction2.sql)

INSERT INTO tbldetails VALUES (1,900001,'Event1','US')

--***** Step 9 ( Validation_transaction2.sql )

DELETE FROM tblMaster WITH (SNAPSHOT) WHERE ID = 5
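On-disk comparison tables (sketch):

The on-disk comparisons in Exhibits 2 and 3 reference tblMaster_ondisk and tblDetails_ondisk, which the setup script above does not create. The following is a minimal sketch for reproducing them. The table and constraint names come from the error messages in the exhibits, but the column definitions and primary key names are an assumption that simply mirrors the memory optimized schema.

```sql
-- Assumed on-disk equivalents of tblMaster and tblDetails.
CREATE TABLE dbo.tblMaster_ondisk
(
    Id           int          NOT NULL
        CONSTRAINT PK_tblMaster_ondisk PRIMARY KEY NONCLUSTERED,
    ExternalId   int          NOT NULL,
    IsActive     bit          NOT NULL,
    UTCStartTime datetime2(7) NOT NULL
);
GO

CREATE TABLE dbo.tblDetails_ondisk
(
    DetailID    int IDENTITY(1,1) NOT NULL
        CONSTRAINT PK_tblDetails_ondisk PRIMARY KEY NONCLUSTERED,
    EventId     int           NOT NULL,
    LanguageId  int           NOT NULL,
    EventName   nvarchar(512) NULL,
    CountryName nvarchar(512) NULL,
    CONSTRAINT FK_tblDetails_tblMaster_ondisk FOREIGN KEY (EventId)
        REFERENCES dbo.tblMaster_ondisk (Id)
);
GO

-- Parent row used by the exhibits.
INSERT INTO dbo.tblMaster_ondisk VALUES (5, 5, 1, GETUTCDATE());
GO
```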