Observação

O acesso a essa página exige autorização. Você pode tentar entrar ou alterar diretórios.

O acesso a essa página exige autorização. Você pode tentar alterar os diretórios.

Aplica-se a: ✅Microsoft Fabric✅Azure Data Explorer✅Azure Monitor✅Microsoft Sentinel

A KQL (Linguagem de Consulta Kusto) tem funções internas de detecção e previsão de anomalias para verificar o comportamento anômalo. Depois que esse padrão é detectado, uma RCA (Análise de Causa Raiz) pode ser executada para atenuar ou resolver a anomalia.

O processo de diagnóstico é complexo e demorado e feito por especialistas em domínio. Esse processo inclui:

- Buscar e unir mais dados de fontes diferentes para o mesmo período de tempo

- Procurando alterações na distribuição de valores em várias dimensões

- Mapeando mais variáveis

- Outras técnicas baseadas no conhecimento e na intuição do domínio

Como esses cenários de diagnóstico são comuns, os plug-ins de machine learning estão disponíveis para facilitar a fase de diagnóstico e reduzir a duração da RCA.

Todos os três dos seguintes plug-ins do Machine Learning implementam algoritmos de clustering: autocluster, baskete diffpatterns. O plug-in autocluster e o plug-in basket agrupam um único conjunto de registros, e o plug-in diffpatterns agrupa as diferenças entre dois conjuntos de registros.

Agrupamento de um único conjunto de registros

Um cenário comum inclui um conjunto de dados selecionado por um critério específico, como:

- Janela de tempo que mostra o comportamento anômalo

- Leituras de dispositivo de alta temperatura

- Comandos de longa duração

- Principais usuários de gastos

Você deseja uma maneira rápida e fácil de encontrar padrões comuns (segmentos) nos dados. Os padrões são um subconjunto do conjunto de dados cujos registros compartilham os mesmos valores em várias dimensões (colunas categóricas).

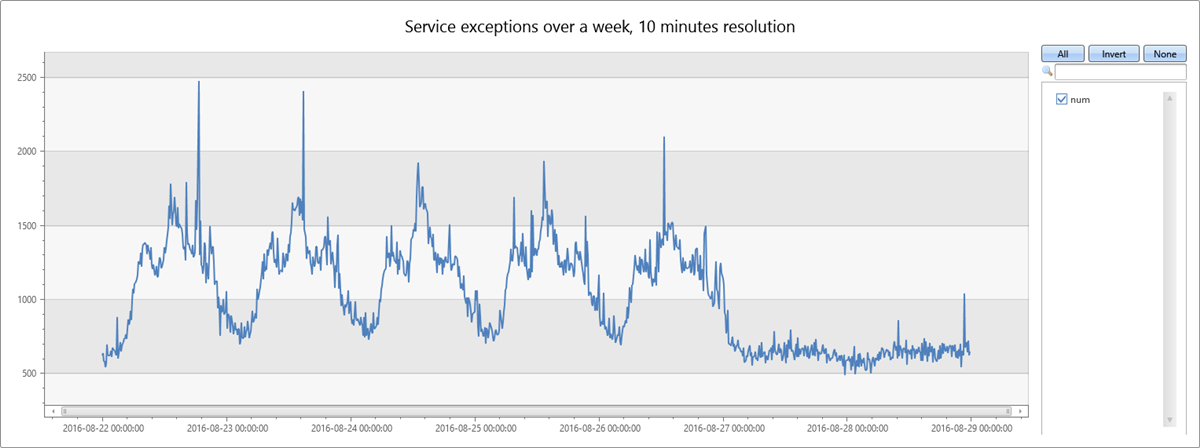

A consulta a seguir compila e mostra uma série temporal de exceções de serviço durante uma semana, em intervalos de dez minutos.

let min_t = toscalar(demo_clustering1 | summarize min(PreciseTimeStamp));

let max_t = toscalar(demo_clustering1 | summarize max(PreciseTimeStamp));

demo_clustering1

| make-series num=count() on PreciseTimeStamp from min_t to max_t step 10m

| render timechart with(title="Service exceptions over a week, 10 minutes resolution")

A contagem de exceções de serviço correlaciona-se com o tráfego de serviço geral. Você pode ver claramente o padrão diário para dias úteis, de segunda a sexta-feira. Há um aumento nas contagens de exceções de serviço no meio do dia e quedas nas contagens durante a noite. Contagens baixas estáveis são visíveis ao longo do fim de semana. Picos de exceção podem ser detectados usando a detecção de anomalias de série temporal.

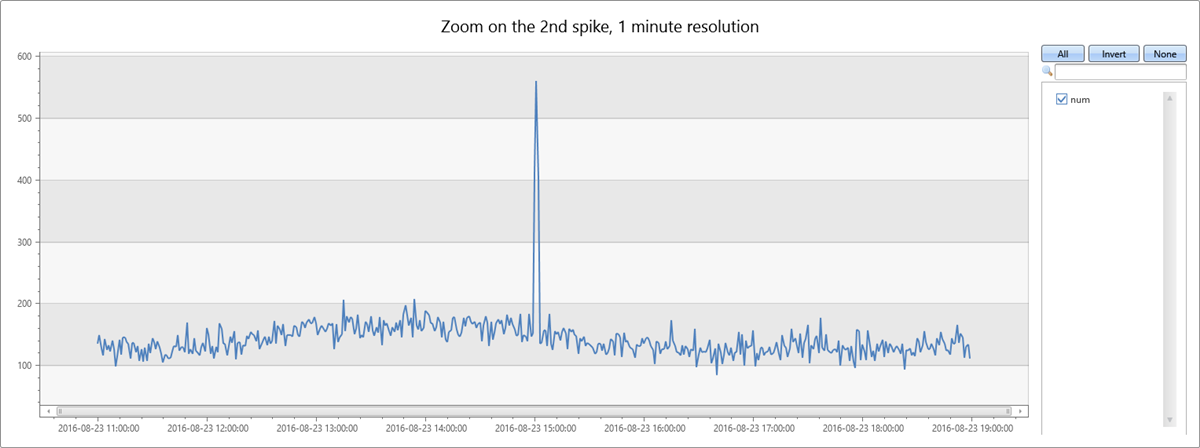

O segundo pico nos dados ocorre na tarde de terça-feira. A consulta a seguir é utilizada para analisar ainda mais e verificar se é um pico repentino. A consulta redesenhará o gráfico ao redor do pico em uma resolução mais alta de oito horas, com intervalos de um minuto. Você pode então estudar seus limites.

let min_t=datetime(2016-08-23 11:00);

demo_clustering1

| make-series num=count() on PreciseTimeStamp from min_t to min_t+8h step 1m

| render timechart with(title="Zoom on the 2nd spike, 1 minute resolution")

Você vê um pico curto de dois minutos das 15:00 às 15:02. Na consulta a seguir, conte as exceções nesta janela de dois minutos:

let min_peak_t=datetime(2016-08-23 15:00);

let max_peak_t=datetime(2016-08-23 15:02);

demo_clustering1

| where PreciseTimeStamp between(min_peak_t..max_peak_t)

| count

| Contagem |

|---|

| 972 |

Na consulta a seguir, selecione 20 exceções dentre 972.

let min_peak_t=datetime(2016-08-23 15:00);

let max_peak_t=datetime(2016-08-23 15:02);

demo_clustering1

| where PreciseTimeStamp between(min_peak_t..max_peak_t)

| take 20

| PreciseTimeStamp | Região | ScaleUnit | DeploymentId | Ponto de Rastreamento | ServiceHost |

|---|---|---|---|---|---|

| 2016-08-23 15:00:08.7302460 | scus | su5 | 9dbd1b161d5b4779a73cf19a7836ebd6 | 100005 | 00000000-0000-0000-0000-000000000000 |

| 2016-08-23 15:00:09.9496584 | scus | su5 | 9dbd1b161d5b4779a73cf19a7836ebd6 | 10007006 | 8d257da1-7a1c-44f5-9acd-f9e02ff507fd |

| 2016-08-23 15:00:10.5911748 | scus | su5 | 9dbd1b161d5b4779a73cf19a7836ebd6 | 100005 | 00000000-0000-0000-0000-000000000000 |

| 2016-08-23 15:00:12.2957912 | scus | su5 | 9dbd1b161d5b4779a73cf19a7836ebd6 | 10007007 | f855fcef-ebfe-405d-aaf8-9c5e2e43d862 |

| 2016-08-23 15:00:18.5955357 | scus | su5 | 9dbd1b161d5b4779a73cf19a7836ebd6 | 10007006 | 9d390e07-417d-42eb-bebd-793965189a28 |

| 2016-08-23 15:00:20.7444854 | scus | su5 | 9dbd1b161d5b4779a73cf19a7836ebd6 | 10007006 | 6e54c1c8-42d3-4e4e-8b79-9bb076ca71f1f1 |

| 2016-08-23 15:00:23.8694999 | eus2 | su2 | 89e2f62a73bb4efd8f545aeae40d7e51 | 36109 | 19422243-19b9-4d85-9ca6-bc961861d287 |

| 2016-08-23 15:00:26.4271786 | ncus | su1 | e24ef436e02b4823ac5d5b1465a9401e | 36109 | 3271bae4-1c5b-4f73-98ef-cc117e9be914 |

| 2016-08-23 15:00:27.8958124 | scus | su3 | 90d3d2fc7ecc430c9621ece335651a01 | 904498 | 8cf38575-fca9-48ca-bd7c-21196f6d6765 |

| 2016-08-23 15:00:32.9884969 | scus | su3 | 90d3d2fc7ecc430c9621ece335651a01 | 10007007 | d5c7c825-9d46-4ab7-a0c1-8e2ac1d83ddb |

| 2016-08-23 15:00:34.5061623 | scus | su5 | 9dbd1b161d5b4779a73cf19a7836ebd6 | 1002110 | 55a71811-5ec4-497a-a058-140fb0d611ad |

| 2016-08-23 15:00:37.4490273 | scus | su3 | 90d3d2fc7ecc430c9621ece335651a01 | 10007006 | f2ee8254-173c-477d-a1de-4902150ea50d |

| 2016-08-23 15:00:41.2431223 | scus | su3 | 90d3d2fc7ecc430c9621ece335651a01 | 103200 | 8cf38575-fca9-48ca-bd7c-21196f6d6765 |

| 2016-08-23 15:00:47.2983975 | ncus | su1 | e24ef436e02b4823ac5d5b1465a9401e | 423690590 | 00000000-0000-0000-0000-000000000000 |

| 2016-08-23 15:00:50.5932834 | scus | su5 | 9dbd1b161d5b4779a73cf19a7836ebd6 | 10007006 | 2a41b552-aa19-4987-8cdd-410a3af016ac |

| 2016-08-23 15:00:50.8259021 | scus | su5 | 9dbd1b161d5b4779a73cf19a7836ebd6 | 1002110 | 0d56b8e3-470d-4213-91da-97405f8d005e |

| 2016-08-23 15:00:53.2490731 | scus | su5 | 9dbd1b161d5b4779a73cf19a7836ebd6 | 36109 | 55a71811-5ec4-497a-a058-140fb0d611ad |

| 2016-08-23 15:00:57.0000946 | eus2 | su2 | 89e2f62a73bb4efd8f545aeae40d7e51 | 64038 | cb55739e-4afe-46a3-970f-1b49d8ee7564 |

| 2016-08-23 15:00:58.2222707 | scus | su5 | 9dbd1b161d5b4779a73cf19a7836ebd6 | 10007007 | 8215dcf6-2de0-42bd-9c90-181c70486c9c |

| 2016-08-23 15:00:59.9382620 | scus | su3 | 90d3d2fc7ecc430c9621ece335651a01 | 10007006 | 451e3c4c-0808-4566-a64d-84d85cf30978 |

Embora haja menos de mil exceções, ainda é difícil encontrar segmentos comuns, pois há vários valores em cada coluna. Você pode usar o autocluster() plug-in para extrair instantaneamente uma pequena lista de segmentos comuns e encontrar os clusters interessantes dentro dos dois minutos do pico, conforme visto na seguinte consulta:

let min_peak_t=datetime(2016-08-23 15:00);

let max_peak_t=datetime(2016-08-23 15:02);

demo_clustering1

| where PreciseTimeStamp between(min_peak_t..max_peak_t)

| evaluate autocluster()

| SegmentId | Contagem | Porcentagem | Região | ScaleUnit | DeploymentId | ServiceHost |

|---|---|---|---|---|---|---|

| 0 | 639 | 65.7407407407407 | Eau | su7 | b5d1d4df547d4a04ac15885617edba57 | e7f60c5d-4944-42b3-922a-92e98a8e7dec |

| 1 | 94 | 9.67078189300411 | scus | su5 | 9dbd1b161d5b4779a73cf19a7836ebd6 | |

| 2 | 82 | 8.43621399176955 | ncus | su1 | e24ef436e02b4823ac5d5b1465a9401e | |

| 3 | 68 | 6.99588477366255 | scus | su3 | 90d3d2fc7ecc430c9621ece335651a01 | |

| 4 | 55 | 5,65843621399177 | Ueo | su4 | be1d6d7ac9574cbc9a22cb8ee20f16fc |

Você pode ver nos resultados acima que o segmento mais dominante contém 65,74% do total de registros de exceção e compartilha quatro dimensões. O próximo segmento é muito menos comum. Ele contém apenas 9,67% dos registros e compartilha três dimensões. Os outros segmentos são ainda menos comuns.

O autocluster usa um algoritmo proprietário para minerar várias dimensões e extrair segmentos interessantes. "Interessante" significa que cada segmento tem uma cobertura significativa do conjunto de registros e do conjunto de características. Os segmentos também são divergentes, o que significa que cada um deles é diferente dos outros. Um ou mais desses segmentos podem ser relevantes para o processo de RCA. Para minimizar a revisão e a avaliação do segmento, o cluster automático extrai apenas uma pequena lista de segmentos.

Você também pode usar o basket() plug-in, conforme visto na seguinte consulta:

let min_peak_t=datetime(2016-08-23 15:00);

let max_peak_t=datetime(2016-08-23 15:02);

demo_clustering1

| where PreciseTimeStamp between(min_peak_t..max_peak_t)

| evaluate basket()

| SegmentId | Contagem | Porcentagem | Região | ScaleUnit | DeploymentId | Ponto de Rastreamento | ServiceHost |

|---|---|---|---|---|---|---|---|

| 0 | 639 | 65.7407407407407 | Eau | su7 | b5d1d4df547d4a04ac15885617edba57 | e7f60c5d-4944-42b3-922a-92e98a8e7dec | |

| 1 | 642 | 66.0493827160494 | Eau | su7 | b5d1d4df547d4a04ac15885617edba57 | ||

| 2 | 324 | 33.3333333333333 | Eau | su7 | b5d1d4df547d4a04ac15885617edba57 | 0 | e7f60c5d-4944-42b3-922a-92e98a8e7dec |

| 3 | 315 | 32.4074074074074 | Eau | su7 | b5d1d4df547d4a04ac15885617edba57 | 16108 | e7f60c5d-4944-42b3-922a-92e98a8e7dec |

| 4 | 328 | 33.7448559670782 | 0 | ||||

| 5 | 94 | 9.67078189300411 | scus | su5 | 9dbd1b161d5b4779a73cf19a7836ebd6 | ||

| 6 | 82 | 8.43621399176955 | ncus | su1 | e24ef436e02b4823ac5d5b1465a9401e | ||

| 7 | 68 | 6.99588477366255 | scus | su3 | 90d3d2fc7ecc430c9621ece335651a01 | ||

| oito | 167 | 17.1810699588477 | scus | ||||

| 9 | 55 | 5,65843621399177 | Ueo | su4 | be1d6d7ac9574cbc9a22cb8ee20f16fc | ||

| 10 | 92 | 9.46502057613169 | 10007007 | ||||

| 11 | 90 | 9,25925925925926 | 10007006 | ||||

| 12 | 57 | 5.8641975308642 | 00000000-0000-0000-0000-000000000000 |

Basket implementa o algoritmo "Apriori" para mineração de conjunto de itens. Ele extrai todos os segmentos cuja cobertura do conjunto de registros está acima de um limiar (padrão 5%). Você pode ver que mais segmentos foram extraídos com segmentos semelhantes, como os segmentos 0, 1 ou 2, 3.

Ambos os plug-ins são avançados e fáceis de usar. Sua limitação é que eles agrupam um único conjunto de registros de maneira não supervisionada sem rótulos. Não está claro se os padrões extraídos caracterizam o conjunto de registros selecionado, registros anômalos ou o conjunto de registros global.

Agrupamento da diferença entre dois conjuntos de dados

O diffpatterns() plug-in supera a limitação de autocluster e basket.

Diffpatterns usa dois conjuntos de registros e extrai os segmentos principais que são diferentes. Um conjunto geralmente contém o conjunto de registros anômalos que está sendo investigado. Um é analisado por autocluster e basket. O outro conjunto contém o conjunto de registros de referência, a linha de base.

Na consulta a seguir, diffpatterns localiza clusters interessantes dentro dos dois minutos do pico, que são diferentes dos clusters dentro da linha de base. A janela de linha de base é definida como os oito minutos antes das 15:00, momento em que o pico começou. Estenda por uma coluna binária (AB) e especifique se um registro específico pertence à linha de base ou ao conjunto anômalo.

Diffpatterns implementa um algoritmo de aprendizado supervisionado, no qual os dois rótulos de classe foram gerados pelo marcador anômalo em comparação com o marcador de linha de base (AB).

let min_peak_t=datetime(2016-08-23 15:00);

let max_peak_t=datetime(2016-08-23 15:02);

let min_baseline_t=datetime(2016-08-23 14:50);

let max_baseline_t=datetime(2016-08-23 14:58); // Leave a gap between the baseline and the spike to avoid the transition zone.

let splitime=(max_baseline_t+min_peak_t)/2.0;

demo_clustering1

| where (PreciseTimeStamp between(min_baseline_t..max_baseline_t)) or

(PreciseTimeStamp between(min_peak_t..max_peak_t))

| extend AB=iff(PreciseTimeStamp > splitime, 'Anomaly', 'Baseline')

| evaluate diffpatterns(AB, 'Anomaly', 'Baseline')

| SegmentId | CountA | CountB | Porcentagem | PercentB | PercentDiffAB | Região | ScaleUnit | DeploymentId | Ponto de Rastreamento |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 639 | 21 | 65.74 | 1,7 | 64.04 | Eau | su7 | b5d1d4df547d4a04ac15885617edba57 | |

| 1 | 167 | 544 | 17.18 | 44.16 | 26.97 | scus | |||

| 2 | 92 | 356 | 9.47 | 28,9 | 19.43 | 10007007 | |||

| 3 | 90 | 336 | 9.26 | 27.27 | 18.01 | 10007006 | |||

| 4 | 82 | 318 | 8.44 | 25,81 | 17.38 | ncus | su1 | e24ef436e02b4823ac5d5b1465a9401e | |

| 5 | 55 | 252 | 5,66 | 20.45 | 14,8 | Ueo | su4 | be1d6d7ac9574cbc9a22cb8ee20f16fc | |

| 6 | 57 | 204 | 5.86 | 16.56 | 10.69 |

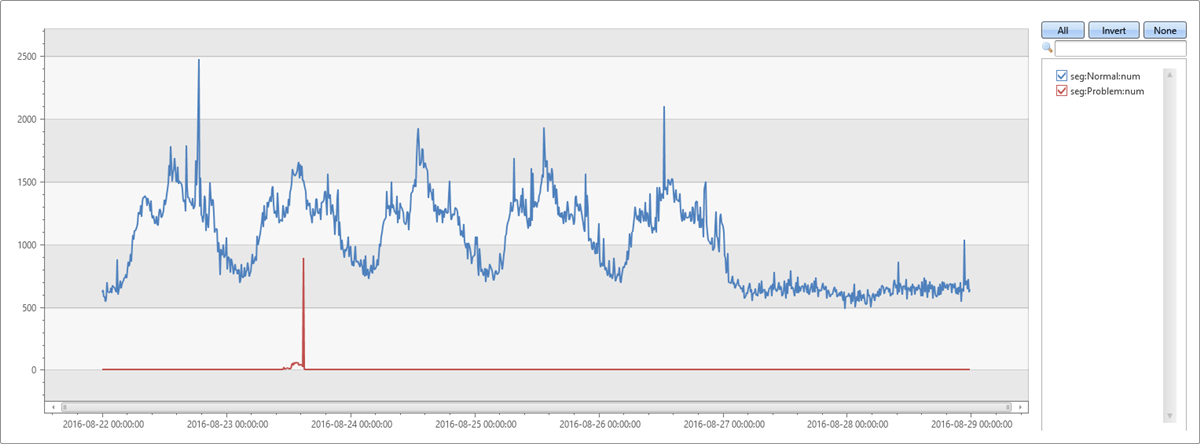

O segmento mais dominante é o mesmo segmento que foi extraído por autocluster. Sua cobertura na janela anômala de dois minutos também é de 65,74%. No entanto, sua cobertura na janela de linha de base de oito minutos é de apenas 1,7%. A diferença é 64,04%. Essa diferença parece estar relacionada ao pico anômômalo. Para verificar essa suposição, a consulta a seguir divide o gráfico original nos registros que pertencem a esse segmento problemático e registros de outros segmentos.

let min_t = toscalar(demo_clustering1 | summarize min(PreciseTimeStamp));

let max_t = toscalar(demo_clustering1 | summarize max(PreciseTimeStamp));

demo_clustering1

| extend seg = iff(Region == "eau" and ScaleUnit == "su7" and DeploymentId == "b5d1d4df547d4a04ac15885617edba57"

and ServiceHost == "e7f60c5d-4944-42b3-922a-92e98a8e7dec", "Problem", "Normal")

| make-series num=count() on PreciseTimeStamp from min_t to max_t step 10m by seg

| render timechart

Este gráfico nos permite ver que o pico na tarde de terça-feira foi devido a exceções deste segmento específico, descobertas usando o diffpatterns plug-in.

Resumo

Os plug-ins do Machine Learning são úteis para muitos cenários.

autocluster e basket implementam um algoritmo de aprendizado não supervisionado e são fáceis de usar.

Diffpatterns implementa um algoritmo de aprendizado supervisionado e, embora mais complexo, é mais poderoso para extrair segmentos de diferenciação para RCA.

Esses plug-ins são usados interativamente em cenários ad hoc e em serviços automáticos de monitoramento quase em tempo real. A detecção de anomalias de série temporal é seguida por um processo de diagnóstico. O processo é altamente otimizado para atender aos padrões de desempenho necessários.