Extended eye tracking in Unity

To access the GitHub repository for the extended eye tracking sample:

Extended eye tracking is a new capability on HoloLens 2. It is a superset of standard eye tracking, which provides only combined eye gaze data. Extended eye tracking also provides individual (per-eye) gaze data and lets applications set different frame rates for the gaze data, such as 30, 60, and 90 fps. Other features, such as eye openness and eye vergence, are not currently supported on HoloLens 2.

The Extended Eye Tracking SDK lets applications access extended eye tracking data and features. It can be used together with OpenXR APIs or legacy WinRT APIs.

This article covers how to use the extended eye tracking SDK in Unity together with the Mixed Reality OpenXR Plugin.

Project setup

- Set up the Unity project for HoloLens development.
  - Select the Gaze Input capability.
- Import the Mixed Reality OpenXR Plugin from the MRTK feature tool.

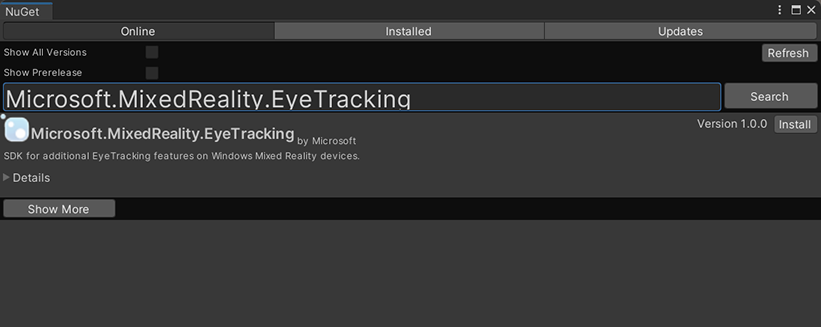

- Import the Eye Tracking SDK NuGet package into your Unity project.
  - Download and install the NuGetForUnity package.
  - In the Unity editor, go to NuGet -> Manage NuGet Packages and search for Microsoft.MixedReality.EyeTracking.
  - Click the Install button to import the latest version of the NuGet package.
- Add the Unity helper scripts.
  - Add the ExtendedEyeGazeDataProvider.cs script from here to your Unity project.
  - Create a scene and attach the ExtendedEyeGazeDataProvider.cs script to any GameObject.
- Consume the functions of ExtendedEyeGazeDataProvider.cs and implement your own logic.
- Build and deploy to HoloLens.

Consume ExtendedEyeGazeDataProvider functions

Note

The ExtendedEyeGazeDataProvider script depends on some APIs from the Mixed Reality OpenXR Plugin to convert the coordinates of the gaze data. It can't work if your Unity project uses the deprecated Windows XR plugin, or the legacy built-in XR in older Unity versions. To make extended eye tracking work in those scenarios as well:

- If you only need to access the frame rate settings, the Mixed Reality OpenXR Plugin isn't required, and you can modify ExtendedEyeGazeDataProvider to keep only the frame-rate-related logic.
- If you still need to access individual eye gaze data, you need to use WinRT APIs in Unity. To see how to use the extended eye tracking SDK with WinRT APIs, refer to the "See also" section.

The ExtendedEyeGazeDataProvider class wraps the extended eye tracking SDK APIs. It provides functions to get the gaze reading either in Unity world space or relative to the main camera.

Here is sample code that consumes ExtendedEyeGazeDataProvider to get the gaze data.

```csharp
// Inside a MonoBehaviour; requires `using System;` for DateTime and `using UnityEngine;`.
// Assign the provider in the Inspector before Update runs.
[SerializeField] ExtendedEyeGazeDataProvider extendedEyeGazeDataProvider;

void Update()
{
    // Query every reading for the same moment.
    DateTime timestamp = DateTime.Now;

    // GazeType is a nested type, so it is referenced through the class name, not the instance.
    var leftGazeReadingInWorldSpace = extendedEyeGazeDataProvider.GetWorldSpaceGazeReading(ExtendedEyeGazeDataProvider.GazeType.Left, timestamp);
    var rightGazeReadingInWorldSpace = extendedEyeGazeDataProvider.GetWorldSpaceGazeReading(ExtendedEyeGazeDataProvider.GazeType.Right, timestamp);
    var combinedGazeReadingInWorldSpace = extendedEyeGazeDataProvider.GetWorldSpaceGazeReading(ExtendedEyeGazeDataProvider.GazeType.Combined, timestamp);
    var combinedGazeReadingInCameraSpace = extendedEyeGazeDataProvider.GetCameraSpaceGazeReading(ExtendedEyeGazeDataProvider.GazeType.Combined, timestamp);
}
```

When the ExtendedEyeGazeDataProvider script runs, it sets the gaze data frame rate to the highest available option, which is currently 90 fps.
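A script that keeps only the frame-rate logic can reproduce that behavior with the SDK types listed in the API reference. The following is a minimal sketch, assuming `tracker` has already been opened with `OpenAsync(true)` (restricted mode, which the reference ties to frame-rate support). The class `EyeGazeFrameRateHelper` and its method are hypothetical names, not part of the SDK or of the ExtendedEyeGazeDataProvider script.

```csharp
using System.Linq;
using Microsoft.MixedReality.EyeTracking;

// Hypothetical helper; not part of the SDK or of ExtendedEyeGazeDataProvider.
public static class EyeGazeFrameRateHelper
{
    // Sets the tracker to its highest supported target frame rate and returns that rate,
    // or returns 0 if the tracker reports no supported rates.
    // Assumption: the tracker was opened in restricted mode via OpenAsync(true).
    public static uint SetHighestFrameRate(EyeGazeTracker tracker)
    {
        EyeGazeTrackerFrameRate highest = tracker.SupportedTargetFrameRates
            .OrderByDescending(rate => rate.FramesPerSecond)
            .FirstOrDefault();

        if (highest == null)
        {
            return 0;
        }

        tracker.SetTargetFrameRate(highest);
        return highest.FramesPerSecond;
    }
}
```

To request a lower rate such as 30 fps instead, select the entry whose `FramesPerSecond` equals 30 and pass it to `SetTargetFrameRate`.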

Extended eye tracking SDK API reference

In addition to using the ExtendedEyeGazeDataProvider script, you can also write your own script that consumes the following SDK APIs directly.
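A custom script starts from `EyeGazeTrackerWatcher`, the only SDK class the application instantiates, and receives `EyeGazeTracker` instances through its events. The following is a minimal sketch, assuming a Unity MonoBehaviour on a device with a single tracker; the component name `EyeGazeTrackerDiscovery` and its `OpenTracker` property are hypothetical, while every SDK call it makes appears in the reference below. It also assumes the watcher's events may be raised off the Unity main thread, so the handlers avoid Unity APIs.

```csharp
using Microsoft.MixedReality.EyeTracking;
using UnityEngine;

// Hypothetical component; discovers and opens a tracker directly through the SDK.
public class EyeGazeTrackerDiscovery : MonoBehaviour
{
    private EyeGazeTrackerWatcher watcher;

    // Written from the watcher's event handlers, which may run off the main thread.
    private volatile EyeGazeTracker openTracker;

    // The opened tracker, or null until one has been added and opened.
    public EyeGazeTracker OpenTracker => openTracker;

    private async void Start()
    {
        watcher = new EyeGazeTrackerWatcher();
        watcher.EyeGazeTrackerAdded += OnTrackerAdded;
        watcher.EyeGazeTrackerRemoved += OnTrackerRemoved;

        // Completes when the initial enumeration has finished.
        await watcher.StartAsync();
    }

    private async void OnTrackerAdded(object sender, EyeGazeTracker tracker)
    {
        // Restricted mode exposes per-eye gaze and frame-rate control when supported.
        await tracker.OpenAsync(tracker.IsRestrictedModeSupported);
        openTracker = tracker;
    }

    private void OnTrackerRemoved(object sender, EyeGazeTracker tracker)
    {
        // Assumption: the watcher reports the same instance it reported when the tracker was added.
        if (ReferenceEquals(openTracker, tracker))
        {
            tracker.Close();
            openTracker = null;
        }
    }

    private void OnDestroy()
    {
        watcher?.Stop();
        openTracker?.Close();
        openTracker = null;
    }
}
```

Other scripts can poll `OpenTracker` and, once it is non-null, request readings from it.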

```csharp
using System;

namespace Microsoft.MixedReality.EyeTracking
{
    /// <summary>
    /// Allows discovery of eye gaze trackers connected to the system.
    /// This is the only class from the Extended Eye Tracking SDK that the application will instantiate;
    /// instances of the other classes are returned by method calls or properties.
    /// </summary>
    public class EyeGazeTrackerWatcher
    {
        /// <summary>
        /// Constructs an instance of the watcher
        /// </summary>
        public EyeGazeTrackerWatcher();

        /// <summary>
        /// Starts trackers enumeration.
        /// </summary>
        /// <returns>Task representing async action; completes when the initial enumeration is completed</returns>
        public System.Threading.Tasks.Task StartAsync();

        /// <summary>
        /// Stops listening to tracker additions and removals
        /// </summary>
        public void Stop();

        /// <summary>
        /// Raised when an eye gaze tracker is connected
        /// </summary>
        public event System.EventHandler<EyeGazeTracker> EyeGazeTrackerAdded;

        /// <summary>
        /// Raised when an eye gaze tracker is disconnected
        /// </summary>
        public event System.EventHandler<EyeGazeTracker> EyeGazeTrackerRemoved;
    }

    /// <summary>
    /// Represents an eye tracker device
    /// </summary>
    public class EyeGazeTracker
    {
        /// <summary>
        /// True if restricted mode is supported, which means the driver supports providing individual
        /// eye gaze vectors and frame rate control
        /// </summary>
        public bool IsRestrictedModeSupported;

        /// <summary>
        /// True if vergence distance is supported by the tracker
        /// </summary>
        public bool IsVergenceDistanceSupported;

        /// <summary>
        /// True if eye openness is supported by the driver
        /// </summary>
        public bool IsEyeOpennessSupported;

        /// <summary>
        /// True if individual gazes are supported
        /// </summary>
        public bool AreLeftAndRightGazesSupported;

        /// <summary>
        /// Gets the supported target frame rates of the tracker
        /// </summary>
        public System.Collections.Generic.IReadOnlyList<EyeGazeTrackerFrameRate> SupportedTargetFrameRates;

        /// <summary>
        /// NodeId of the tracker, used to retrieve a SpatialLocator or SpatialGraphNode to locate the tracker in the scene:
        /// for the Perception API, use SpatialGraphInteropPreview.CreateLocatorForNode;
        /// for the Mixed Reality OpenXR API, use SpatialGraphNode.FromDynamicNodeId
        /// </summary>
        public Guid TrackerSpaceLocatorNodeId;

        /// <summary>
        /// Opens the tracker
        /// </summary>
        /// <param name="restrictedMode">True to open the tracker in restricted mode</param>
        /// <returns>Task representing async action; completes when the tracker is open</returns>
        public System.Threading.Tasks.Task OpenAsync(bool restrictedMode);

        /// <summary>
        /// Closes the tracker
        /// </summary>
        public void Close();

        /// <summary>
        /// Changes the target frame rate of the tracker
        /// </summary>
        /// <param name="newFrameRate">Target frame rate</param>
        public void SetTargetFrameRate(EyeGazeTrackerFrameRate newFrameRate);

        /// <summary>
        /// Tries to get the tracker state at a given timestamp
        /// </summary>
        /// <param name="timestamp">timestamp</param>
        /// <returns>State if available, null otherwise</returns>
        public EyeGazeTrackerReading TryGetReadingAtTimestamp(DateTime timestamp);

        /// <summary>
        /// Tries to get the tracker state at a system relative time
        /// </summary>
        /// <param name="time">time</param>
        /// <returns>State if available, null otherwise</returns>
        public EyeGazeTrackerReading TryGetReadingAtSystemRelativeTime(TimeSpan time);

        /// <summary>
        /// Tries to get the first tracker state after a given timestamp
        /// </summary>
        /// <param name="timestamp">timestamp</param>
        /// <returns>State if available, null otherwise</returns>
        public EyeGazeTrackerReading TryGetReadingAfterTimestamp(DateTime timestamp);

        /// <summary>
        /// Tries to get the first tracker state after a system relative time
        /// </summary>
        /// <param name="time">time</param>
        /// <returns>State if available, null otherwise</returns>
        public EyeGazeTrackerReading TryGetReadingAfterSystemRelativeTime(TimeSpan time);
    }

    /// <summary>
    /// Represents a frame rate supported by an eye tracker
    /// </summary>
    public class EyeGazeTrackerFrameRate
    {
        /// <summary>
        /// Frames per second of the frame rate
        /// </summary>
        public UInt32 FramesPerSecond;
    }

    /// <summary>
    /// Snapshot of the gaze tracker state
    /// </summary>
    public class EyeGazeTrackerReading
    {
        /// <summary>
        /// Timestamp of the state
        /// </summary>
        public DateTime Timestamp;

        /// <summary>
        /// Timestamp of the state as system relative time.
        /// Its SystemRelativeTime.Ticks can provide the QPC time used to locate the tracker pose
        /// </summary>
        public TimeSpan SystemRelativeTime;

        /// <summary>
        /// Indicates if the user calibration is valid
        /// </summary>
        public bool IsCalibrationValid;

        /// <summary>
        /// Tries to get a vector representing the combined gaze relative to the tracker's node
        /// </summary>
        /// <param name="origin">Origin of the gaze vector</param>
        /// <param name="direction">Direction of the gaze vector</param>
        /// <returns>True if the gaze vector is available</returns>
        public bool TryGetCombinedEyeGazeInTrackerSpace(out System.Numerics.Vector3 origin, out System.Numerics.Vector3 direction);

        /// <summary>
        /// Tries to get a vector representing the left eye gaze relative to the tracker's node
        /// </summary>
        /// <param name="origin">Origin of the gaze vector</param>
        /// <param name="direction">Direction of the gaze vector</param>
        /// <returns>True if the gaze vector is available</returns>
        public bool TryGetLeftEyeGazeInTrackerSpace(out System.Numerics.Vector3 origin, out System.Numerics.Vector3 direction);

        /// <summary>
        /// Tries to get a vector representing the right eye gaze relative to the tracker's node position
        /// </summary>
        /// <param name="origin">Origin of the gaze vector</param>
        /// <param name="direction">Direction of the gaze vector</param>
        /// <returns>True if the gaze vector is available</returns>
        public bool TryGetRightEyeGazeInTrackerSpace(out System.Numerics.Vector3 origin, out System.Numerics.Vector3 direction);

        /// <summary>
        /// Tries to read the vergence distance
        /// </summary>
        /// <param name="value">Vergence distance if available</param>
        /// <returns>True if the value is valid</returns>
        public bool TryGetVergenceDistance(out float value);

        /// <summary>
        /// Tries to get left eye openness information
        /// </summary>
        /// <param name="value">Eye openness if valid</param>
        /// <returns>True if the value is valid</returns>
        public bool TryGetLeftEyeOpenness(out float value);

        /// <summary>
        /// Tries to get right eye openness information
        /// </summary>
        /// <param name="value">Eye openness if valid</param>
        /// <returns>True if the value is valid</returns>
        public bool TryGetRightEyeOpenness(out float value);
    }
}
```
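The reading APIs above combine as follows when reading per-eye gaze for a given moment: check `AreLeftAndRightGazesSupported`, request a reading, check `IsCalibrationValid`, then call the per-eye `Try...InTrackerSpace` methods. The following is a minimal sketch, assuming an already-opened tracker; the class `EyeGazeReadingHelper` and its methods are hypothetical. It also assumes tracker space follows the right-handed convention of `System.Numerics`, so it negates Z to get Unity's left-handed coordinates.

```csharp
using System;
using Microsoft.MixedReality.EyeTracking;
using UnityEngine;

// Hypothetical helper; reads per-eye gaze rays in tracker space.
public static class EyeGazeReadingHelper
{
    // Converts a right-handed System.Numerics vector to a left-handed Unity vector by negating Z.
    // Assumption: tracker space is right-handed, as System.Numerics types usually are on Windows.
    public static Vector3 ToUnity(System.Numerics.Vector3 v) => new Vector3(v.X, v.Y, -v.Z);

    // Returns true and fills both rays only if individual gazes are supported, a reading exists
    // at the timestamp, calibration is valid, and both per-eye gazes are available.
    public static bool TryGetEyeRays(EyeGazeTracker tracker, DateTime timestamp,
                                     out Ray leftEye, out Ray rightEye)
    {
        leftEye = default;
        rightEye = default;

        if (!tracker.AreLeftAndRightGazesSupported)
        {
            return false;
        }

        EyeGazeTrackerReading reading = tracker.TryGetReadingAtTimestamp(timestamp);
        if (reading == null || !reading.IsCalibrationValid)
        {
            return false;
        }

        if (!reading.TryGetLeftEyeGazeInTrackerSpace(out var leftOrigin, out var leftDirection) ||
            !reading.TryGetRightEyeGazeInTrackerSpace(out var rightOrigin, out var rightDirection))
        {
            return false;
        }

        // Still relative to the tracker's node, not Unity world space.
        leftEye = new Ray(ToUnity(leftOrigin), ToUnity(leftDirection));
        rightEye = new Ray(ToUnity(rightOrigin), ToUnity(rightDirection));
        return true;
    }
}
```

The resulting rays are relative to the tracker's node. Placing them in Unity world space requires the tracker pose, which the comments on `TrackerSpaceLocatorNodeId` and `SystemRelativeTime` point to: `SpatialGraphNode.FromDynamicNodeId` from the Mixed Reality OpenXR Plugin, located at the reading's QPC time.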