Image featurization with a pre-trained deep neural network model

With the new release of SQL Server vNext CTP 2.0 and Microsoft R Server 9.1, the MicrosoftML package has added support for pre-trained deep neural network models for image featurization. We can now use the following four deep neural network models in the featurizeImage machine learning transform to extract features from images.

These four state-of-the-art deep neural network models were originally trained and benchmarked on ImageNet data sets and out-performed their competitors by a large margin. Their architectures are explained in detail in their original publications linked in this paragraph. Specifically, ResNet-#s are residual neural network models in Deep Residual Learning for Image Recognition with 18, 50 and 101 layers respectively and AlexNet is the convolutional neural network model in ImageNet Classification with Deep Convolutional Neural Networks. Usage of these pre-trained models allows us to take advantage of their features hard learned from previous data sets which would be otherwise impossible or very inefficient to feature engineer. Heuristically, the larger the model, the better the performance but the longer it takes to run.

Below is a table of their input and output dimensions:

Input |

Output (Number of features) |

|

ResNet-18 |

224 by 224 |

512 |

ResNet-50 |

224 by 224 |

2048 |

ResNet-101 |

224 by 224 |

2048 |

AlexNet |

227 by 227 |

4096 |

When the input images differ from the input shape, a resizing step needs to be performed before featurization as demonstrated later in the code.

To see some sample features you can run the following code:

Below is an image featurization example that uses the CMU Face Images data set and classifies whether the person in an image is facing left or right.

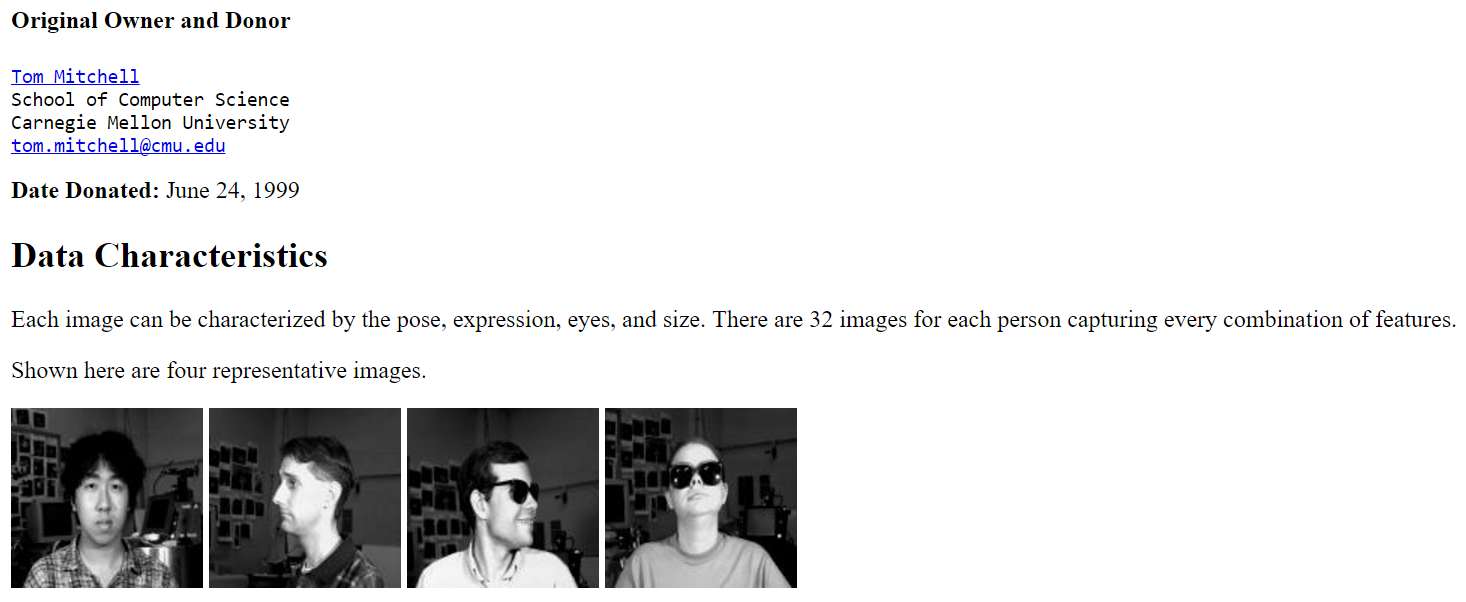

First, prepare the data. The images have three scales, full-resolution, half-resolution and quarter-resolution. The person is facing one of four directions, straight, left, right or up (see data description for details).

Below is a snapshot of the CMU Face Images data set description webpage.

We will use the full-resolution left/right ones. We then split the data into balanced training set and testing set that have 157 and 155 images respectively. The resulting data frames contain paths to these images as variables. As a side note, all code below is run in a local compute context.

Then define our machine learning transform which is a pipeline that takes image file paths as input and emits features produced by the specified pre-trained deep neural network model as output. The resulting features are then fed to a logistic regression classifier for training. At last, evaluate the model's performance on the testing set.

The above code embeds featurization in the machine learning transform. The features are thus not available for later use. Because featurization using deep neural network models is a slow process in general, it's useful to save the featurized data so we don't need to perform the same transform each time we train our model. As such, you can, alternatively, save the featurized data as either a data frame or a scalable data source in your compute context (MicrosoftML is supported in Hadoop/Spark compute context in this release) using the rxFeaturize function. The code below does so with an xdf data source.

For a comprehensive view of all the capabilities in Microsoft R Server 9.1, refer to this blog.

References Deep Residual Learning for Image Recognition ImageNet Classification with Deep Convolutional Neural Networks

MicrosoftML Manual

RevoScaleR Manual

Authored by Te Zhang and Premal Shah

Comments

- Anonymous

January 24, 2018

The comment has been removed