Quickstart: Vectorize text and images by using the Azure portal

Important

The Import and vectorize data wizard is in public preview under the Supplemental Terms of Use. By default, it targets the 2024-05-01-Preview REST API.

This quickstart helps you get started with integrated vectorization (preview) by using the Import and vectorize data wizard in the Azure portal. The wizard chunks your content and calls an embedding model to vectorize content during indexing and for queries.

Key points about the wizard:

Source data is either Azure Blob Storage or OneLake files and shortcuts.

Document parsing mode is the default (one search document per blob or file).

Index schema is nonconfigurable. It provides vector and nonvector fields for chunked data.

Chunking is nonconfigurable. The effective settings are:

textSplitMode: "pages", maximumPageLength: 2000, pageOverlapLength: 500
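The following Python sketch shows what these numbers mean. Each chunk holds at most 2,000 characters, and each chunk after the first starts 500 characters before the previous one ended. This is a simplified approximation, not the Text Split skill's actual algorithm. The service might also adjust boundaries, for example to avoid splitting mid-sentence. The function name split_pages is hypothetical.

```python
def split_pages(text: str, maximum_page_length: int = 2000, page_overlap_length: int = 500) -> list:
    """Split text into overlapping fixed-size character windows.

    Simplified approximation of textSplitMode "pages": every chunk is at most
    maximum_page_length characters long, and consecutive chunks share
    page_overlap_length characters.
    """
    if page_overlap_length >= maximum_page_length:
        raise ValueError("overlap must be smaller than the page length")
    step = maximum_page_length - page_overlap_length  # distance each window advances
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + maximum_page_length])
        if start + maximum_page_length >= len(text):
            break  # this window already reaches the end of the text
        start += step
    return chunks
```

With these defaults, each new chunk advances 1,500 characters, so a 5,000-character document yields three chunks.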

Prerequisites

An Azure subscription. Create one for free.

Azure AI Search service in the same region as your Azure AI resources. We recommend the Basic tier or higher.

Azure Blob Storage or a OneLake lakehouse.

Azure Storage must be a standard performance (general-purpose v2) account. Access tiers can be hot, cool, or cold. Don't use Azure Data Lake Storage Gen2 (a storage account with a hierarchical namespace). This version of the wizard doesn't support Data Lake Storage Gen2.

An embedding model on an Azure AI platform. Deployment instructions are in this article.

| Provider | Supported models |
|---|---|
| Azure OpenAI Service | text-embedding-ada-002, text-embedding-3-large, or text-embedding-3-small. |
| Azure AI Studio model catalog | Azure, Cohere, and Facebook embedding models. |
| Azure AI services multiservice account | Azure AI Vision multimodal for image and text vectorization. Azure AI Vision multimodal is available in selected regions. Check the documentation for an updated list. To use this resource, the account must be in an available region and in the same region as Azure AI Search. |

Public endpoint requirements

All of the preceding resources must have public access enabled so that the portal nodes can access them. Otherwise, the wizard fails. After the wizard runs, you can enable firewalls and private endpoints on the integration components for security. For more information, see Secure connections in the import wizards.

If private endpoints are already present and you can't disable them, the alternative is to run the end-to-end flow from a script or program on a virtual machine. The virtual machine must be on the same virtual network as the private endpoint. Here's a Python code sample for integrated vectorization. The same GitHub repo has samples in other programming languages.

Role-based access control requirements

We recommend role assignments for search service connections to other resources.

On Azure AI Search, enable roles.

Configure your search service to use a managed identity.

On your data source platform and embedding model provider, create role assignments that allow the search service to access data and models. Prepare sample data provides instructions for setting up roles.

A free search service supports RBAC on connections to Azure AI Search, but it doesn't support managed identities on outbound connections to Azure Storage or Azure AI Vision. This level of support means you must use key-based authentication on connections between a free search service and other Azure services.

For more secure connections:

- Use the Basic tier or higher.

- Configure a managed identity and use roles for authorized access.

Note

If you can't progress through the wizard because options aren't available (for example, you can't select a data source or an embedding model), revisit the role assignments. Error messages might indicate that models or deployments don't exist when the real cause is that the search service doesn't have permission to access them.

Check for space

If you're starting with the free tier, you're limited to three indexes, three data sources, three skillsets, and three indexers. The Basic tier limits you to 15 of each. Make sure you have room for the extra items before you begin. This quickstart creates one of each object.
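The space check is simple arithmetic. The wizard adds one object of each type, so every current count must stay below the tier limit. The following Python sketch hardcodes only the two limits quoted above. The counts are assumed to come from your search service's Overview page, and the names OBJECT_LIMITS and has_room are hypothetical.

```python
# Per-type object limits quoted in this quickstart; other tiers have other limits.
OBJECT_LIMITS = {"free": 3, "basic": 15}

# The wizard creates one object of each of these types.
OBJECT_TYPES = ("indexes", "data sources", "skillsets", "indexers")


def has_room(tier: str, current_counts: dict) -> bool:
    """Return True if one more object of every type fits under the tier limit."""
    limit = OBJECT_LIMITS[tier.lower()]
    return all(current_counts.get(kind, 0) + 1 <= limit for kind in OBJECT_TYPES)
```

For example, a free service that already has three indexes has no room, even if it has no indexers yet.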

Check for semantic ranking

The wizard supports semantic ranking, but only on the Basic tier and higher, and only if semantic ranking is already enabled on your search service. If you're using a billable tier, check whether semantic ranking is enabled.

Prepare sample data

This section points you to data that works for this quickstart.

Sign in to the Azure portal with your Azure account, and go to your Azure Storage account.

On the left pane, under Data Storage, select Containers.

Create a new container and then upload the health-plan PDF documents used for this quickstart.

On the left pane, under Access control, assign the Storage Blob Data Reader role to the search service identity. Or, get a connection string to the storage account from the Access keys page.
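The two options in the last step correspond to two connection string formats that an Azure AI Search blob data source can use. A key-based string embeds the storage account key. A managed identity string holds only the storage account's resource ID, and the role assignment grants access. The following Python sketch builds both. The helper names and all argument values are hypothetical, and the EndpointSuffix assumes the public Azure cloud.

```python
def key_connection_string(account_name: str, account_key: str) -> str:
    """Key-based connection string, in the format shown on the Access keys page."""
    return (
        "DefaultEndpointsProtocol=https;"
        f"AccountName={account_name};"
        f"AccountKey={account_key};"
        "EndpointSuffix=core.windows.net"  # assumes the public Azure cloud
    )


def managed_identity_connection_string(subscription_id: str, resource_group: str, account_name: str) -> str:
    """Connection string for a search service that signs in with its managed identity (no secret)."""
    return (
        f"ResourceId=/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account_name};"
    )
```

The managed identity form is preferable when it's available, because no secret is stored in the data source definition.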

Set up embedding models

The wizard can use embedding models deployed from Azure OpenAI, Azure AI Vision, or from the model catalog in Azure AI Studio.

The wizard supports text-embedding-ada-002, text-embedding-3-large, and text-embedding-3-small. Internally, the wizard calls the AzureOpenAIEmbedding skill to connect to Azure OpenAI.
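As a rough guide to what the wizard configures, the following Python sketch builds an AzureOpenAIEmbedding skill definition for a 2024-05-01-Preview skillset. The context and source paths (/document/pages/*) and the output targetName are assumptions about how chunks are named, not necessarily what the wizard generates. If you omit apiKey, the search service is expected to authenticate with its managed identity, which needs the role assigned in the following steps.

```python
from typing import Optional

# Embedding models that the wizard supports for Azure OpenAI.
SUPPORTED_MODELS = {"text-embedding-ada-002", "text-embedding-3-large", "text-embedding-3-small"}


def azure_openai_embedding_skill(resource_uri: str, deployment_id: str, model_name: str,
                                 api_key: Optional[str] = None) -> dict:
    """Build an AzureOpenAIEmbedding skill that vectorizes each text chunk."""
    if model_name not in SUPPORTED_MODELS:
        raise ValueError(f"the wizard doesn't support {model_name!r}")
    skill = {
        "@odata.type": "#Microsoft.Skills.Text.AzureOpenAIEmbeddingSkill",
        # Run once per chunk produced by the Text Split skill (path is an assumption).
        "context": "/document/pages/*",
        "resourceUri": resource_uri,
        "deploymentId": deployment_id,
        "modelName": model_name,
        "inputs": [{"name": "text", "source": "/document/pages/*"}],
        "outputs": [{"name": "embedding", "targetName": "text_vector"}],  # hypothetical target name
    }
    if api_key is not None:
        # Key-based authentication; leave it out to use the managed identity instead.
        skill["apiKey"] = api_key
    return skill
```

For example, azure_openai_embedding_skill("https://<your-resource>.openai.azure.com", "<your-deployment>", "text-embedding-3-small") returns a definition without an apiKey property.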

Sign in to the Azure portal with your Azure account, and go to your Azure OpenAI resource.

Set up permissions:

On the left menu, select Access control.

Select Add, and then select Add role assignment.

Under Job function roles, select Cognitive Services OpenAI User, and then select Next.

Under Members, select Managed identity, and then select Members.

Filter by subscription and resource type (search services), and then select the managed identity of your search service.

Select Review + assign.

On the Overview page, select Click here to view endpoints or Click here to manage keys if you need to copy an endpoint or API key. You can paste these values into the wizard if you're using an Azure OpenAI resource with key-based authentication.

Under Resource Management, select Model deployments, and then select Manage Deployments to open Azure AI Studio.

Copy the deployment name of text-embedding-ada-002 or another supported embedding model. If you don't have an embedding model, deploy one now.
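The deployment name identifies your deployment, but the underlying model determines the length of each vector. The index's vector field must declare a matching number of dimensions. The wizard handles this for you. The following Python sketch only illustrates the relationship, using each model's default output size. The field name "vector" matches the field that the wizard's queries use. The profile name is a hypothetical argument.

```python
# Default output dimensions of the embedding models that the wizard supports.
# (text-embedding-3 models can be asked for shorter vectors; defaults are shown.)
MODEL_DIMENSIONS = {
    "text-embedding-ada-002": 1536,
    "text-embedding-3-small": 1536,
    "text-embedding-3-large": 3072,
}


def vector_field(model_name: str, profile_name: str, field_name: str = "vector") -> dict:
    """Index field definition whose dimensions match the chosen embedding model."""
    return {
        "name": field_name,
        "type": "Collection(Edm.Single)",  # vectors are stored as single-precision floats
        "searchable": True,
        "dimensions": MODEL_DIMENSIONS[model_name],
        "vectorSearchProfile": profile_name,
    }
```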

Start the wizard

Sign in to the Azure portal with your Azure account, and go to your Azure AI Search service.

On the Overview page, select Import and vectorize data.

Connect to your data

The next step is to connect to a data source to use for the search index.

On the Set up your data connection page, select Azure Blob Storage.

Specify the Azure subscription.

Choose the storage account and container that provide the data.

Specify whether you want deletion detection support. On subsequent indexing runs, the search index is updated to remove any search documents based on soft-deleted blobs on Azure Storage.

- You're prompted to choose either Native blob soft delete or Soft delete using custom data.

- Your blob container must have deletion detection enabled before you run the wizard.

- Enable soft delete in Azure Storage, or add custom metadata to your blobs that indexing recognizes as a deletion flag.

- If you choose Soft delete using custom data, you're prompted to provide the metadata property name-value pair.

Specify whether you want your search service to connect to Azure Storage using its managed identity.

- You're prompted to choose either a system-managed or user-managed identity.

- The identity should have a Storage Blob Data Reader role on Azure Storage.

- Do not skip this option. A connection error occurs during indexing if the wizard can't connect to Azure Storage.

Select Next.
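The choices on this page end up in the data source definition. The following Python sketch builds a blob data source with either deletion detection policy. The object name, container name, and helper name are hypothetical, and the definition that the wizard generates might include other properties.

```python
from typing import Optional


def blob_data_source(name: str, connection_string: str, container: str,
                     soft_delete: Optional[str] = None,
                     marker_name: Optional[str] = None,
                     marker_value: Optional[str] = None) -> dict:
    """Build an Azure Blob Storage data source; soft_delete is None, "native", or "custom"."""
    source = {
        "name": name,
        "type": "azureblob",
        "credentials": {"connectionString": connection_string},
        "container": {"name": container},
    }
    if soft_delete == "native":
        # Requires blob soft delete to be enabled on the storage account.
        source["dataDeletionDetectionPolicy"] = {
            "@odata.type": "#Microsoft.Azure.Search.NativeBlobSoftDeleteDeletionDetectionPolicy"
        }
    elif soft_delete == "custom":
        # A blob whose metadata property marker_name equals marker_value is treated as deleted.
        if not marker_name or marker_value is None:
            raise ValueError("custom soft delete needs a metadata name-value pair")
        source["dataDeletionDetectionPolicy"] = {
            "@odata.type": "#Microsoft.Azure.Search.SoftDeleteColumnDeletionDetectionPolicy",
            "softDeleteColumnName": marker_name,
            "softDeleteMarkerValue": marker_value,
        }
    elif soft_delete is not None:
        raise ValueError(f"unknown soft_delete mode: {soft_delete!r}")
    return source
```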

Vectorize your text

In this step, specify the embedding model for vectorizing chunked data.

On the Vectorize your text page, choose the source of the embedding model:

- Azure OpenAI

- Azure AI Studio model catalog

- An existing Azure AI Vision multimodal resource in the same region as Azure AI Search. If there's no Azure AI services multiservice account in the same region, this option isn't available.

Choose the Azure subscription.

Make selections according to the resource:

For Azure OpenAI, choose an existing deployment of text-embedding-ada-002, text-embedding-3-large, or text-embedding-3-small.

For the AI Studio model catalog, choose an existing deployment of an Azure, Cohere, or Facebook embedding model.

For AI Vision multimodal embeddings, select the account.

For more information, see Set up embedding models earlier in this article.

Specify whether you want your search service to authenticate using an API key or managed identity.

- For Azure OpenAI, the identity should have the Cognitive Services OpenAI User role on the Azure OpenAI resource, as described in Set up embedding models.

Select the checkbox that acknowledges the billing impact of using these resources.

Select Next.

Vectorize and enrich your images

If your content includes images, you can apply AI in two ways:

Use a supported image embedding model from the catalog, or choose the Azure AI Vision multimodal embeddings API to vectorize images.

Use optical character recognition (OCR) to recognize text in images. This option invokes the OCR skill to read text from images.
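In a hand-built skillset, OCR typically takes two pieces. The first is an indexer setting that extracts images from documents as normalized images. The second is an OCR skill that reads each of those images. The following Python sketch expresses both as dictionaries. The output targetName is an assumption, and the wizard's generated definitions might differ.

```python
# Indexer parameters: extract embedded images so that skills can process them.
INDEXER_PARAMETERS = {
    "configuration": {
        "dataToExtract": "contentAndMetadata",
        "imageAction": "generateNormalizedImages",
    }
}

# OCR skill: read text from every normalized image of a document.
OCR_SKILL = {
    "@odata.type": "#Microsoft.Skills.Vision.OcrSkill",
    "context": "/document/normalized_images/*",
    "inputs": [{"name": "image", "source": "/document/normalized_images/*"}],
    "outputs": [{"name": "text", "targetName": "text"}],  # targetName is an assumption
}
```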

Azure AI Search and your Azure AI resource must be in the same region.

On the Vectorize your images page, specify the kind of connection the wizard should make. For image vectorization, the wizard can connect to embedding models in Azure AI Studio or Azure AI Vision.

Specify the subscription.

For the Azure AI Studio model catalog, specify the project and deployment. For more information, see Set up embedding models earlier in this article.

Optionally, you can crack binary images (for example, scanned document files) and use OCR to recognize text.

Select the checkbox that acknowledges the billing impact of using these resources.

Select Next.

Choose advanced settings

On the Advanced settings page, you can optionally add semantic ranking to rerank results at the end of query execution. Reranking promotes the most semantically relevant matches to the top.

Optionally, specify a run schedule for the indexer.

Select Next.
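Both advanced settings map to properties you could also set yourself. Semantic ranking needs a semantic configuration in the index and a query that asks for it. The run schedule is an indexer property whose interval is an ISO 8601 duration. Per the service documentation, that interval ranges from 5 minutes to 24 hours. The following Python sketch builds these pieces. The configuration name is hypothetical. The title and chunk field names come from the index that the wizard creates.

```python
from datetime import timedelta


def semantic_settings(config_name: str = "my-semantic-config") -> dict:
    """Index "semantic" section that ranks on the title and chunk fields."""
    return {
        "configurations": [
            {
                "name": config_name,
                "prioritizedFields": {
                    "titleField": {"fieldName": "title"},
                    "prioritizedContentFields": [{"fieldName": "chunk"}],
                },
            }
        ]
    }


def semantic_query(question: str, config_name: str = "my-semantic-config", k: int = 5) -> dict:
    """Hybrid query body whose results are reranked by the semantic ranker."""
    return {
        "search": question,  # semantic reranking operates on a text query
        "queryType": "semantic",
        "semanticConfiguration": config_name,
        "select": "chunk_id,parent_id,chunk,title",
        "vectorQueries": [{"kind": "text", "text": question, "k": k, "fields": "vector"}],
    }


def indexer_schedule(every: timedelta) -> dict:
    """Indexer "schedule" property with an ISO 8601 interval in whole minutes."""
    if not timedelta(minutes=5) <= every <= timedelta(hours=24):
        raise ValueError("interval must be between 5 minutes and 24 hours")
    total_minutes = int(every.total_seconds()) // 60
    if total_minutes == 24 * 60:
        return {"interval": "P1D"}
    hours, minutes = divmod(total_minutes, 60)
    interval = "PT" + (f"{hours}H" if hours else "") + (f"{minutes}M" if minutes else "")
    return {"interval": interval}
```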

Finish the wizard

On the Review your configuration page, specify a prefix for the objects that the wizard will create. A common prefix helps you stay organized.

Select Create.

When the wizard completes the configuration, it creates the following objects:

Data source connection.

Index with vector fields, vectorizers, vector profiles, and vector algorithms. You can't design or modify the default index during the wizard workflow. Indexes conform to the 2024-05-01-Preview REST API.

Skillset with the Text Split skill for chunking and an embedding skill for vectorization. The embedding skill is either the AzureOpenAIEmbedding skill for Azure OpenAI or the AML skill for the Azure AI Studio model catalog.

Indexer with field mappings and output field mappings (if applicable).
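To see how the index's vector pieces fit together, the following Python sketch builds a vectorSearch section. A profile ties a vector field to an algorithm and to a vectorizer, and the vectorizer converts query text to a vector at query time. All object names are hypothetical. The HNSW parameters shown are the documented defaults, not necessarily what the wizard writes. To see the real definition, open the index JSON in the portal.

```python
def vector_search(resource_uri: str, deployment_id: str, model_name: str,
                  profile_name: str = "my-vector-profile",
                  algorithm_name: str = "my-hnsw",
                  vectorizer_name: str = "my-openai-vectorizer") -> dict:
    """Index "vectorSearch" section: one HNSW algorithm, one profile, one Azure OpenAI vectorizer."""
    return {
        "algorithms": [
            {
                "name": algorithm_name,
                "kind": "hnsw",
                "hnswParameters": {"metric": "cosine", "m": 4, "efConstruction": 400, "efSearch": 500},
            }
        ],
        "profiles": [
            # Vector fields reference this profile by name.
            {"name": profile_name, "algorithm": algorithm_name, "vectorizer": vectorizer_name}
        ],
        "vectorizers": [
            {
                "name": vectorizer_name,
                "kind": "azureOpenAI",
                # Same model as the embedding skill, so query vectors match document vectors.
                "azureOpenAIParameters": {
                    "resourceUri": resource_uri,
                    "deploymentId": deployment_id,
                    "modelName": model_name,
                },
            }
        ],
    }
```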

Check results

Search Explorer accepts text strings as input and then vectorizes the text for vector query execution.

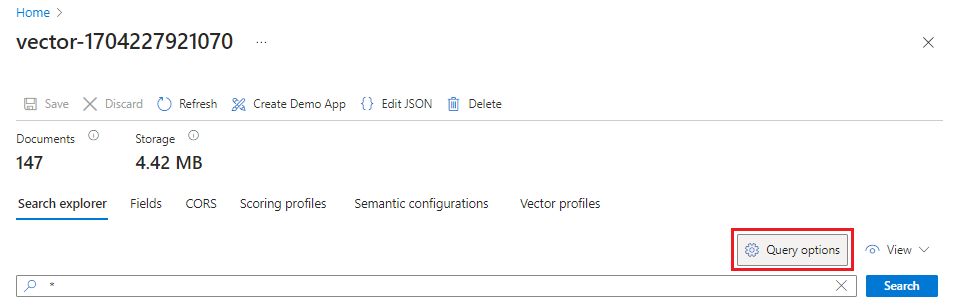

In the Azure portal, go to Search Management > Indexes, and then select the index that you created.

Optionally, select Query options and hide vector values in search results. This step makes your search results easier to read.

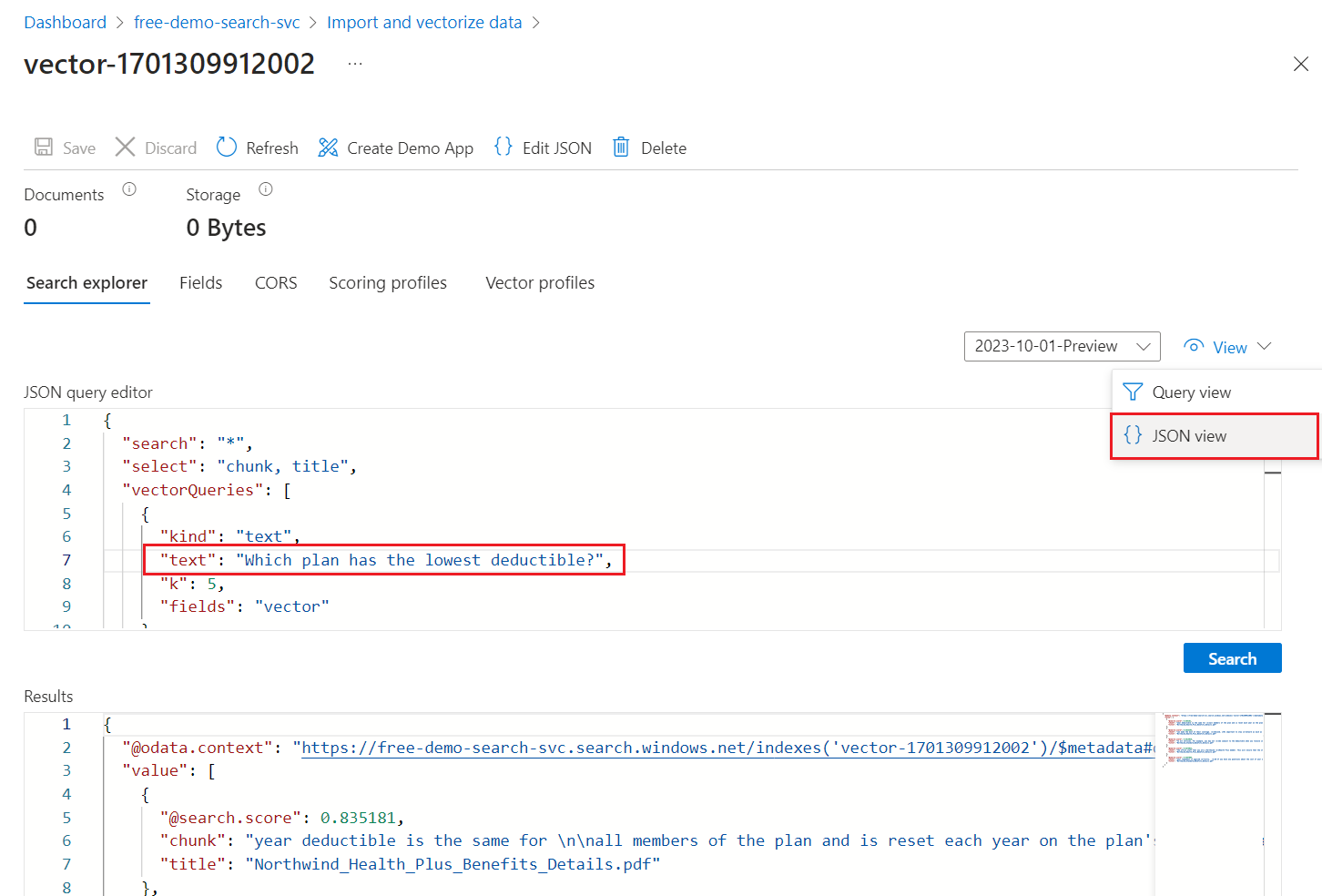

On the View menu, select JSON view so that you can enter text for your vector query in the

textvector query parameter.

The wizard offers a default query that issues a vector query on the

vectorfield and returns the five nearest neighbors. If you opted to hide vector values, your default query includes aselectstatement that excludes thevectorfield from search results.{ "select": "chunk_id,parent_id,chunk,title", "vectorQueries": [ { "kind": "text", "text": "*", "k": 5, "fields": "vector" } ] }For the

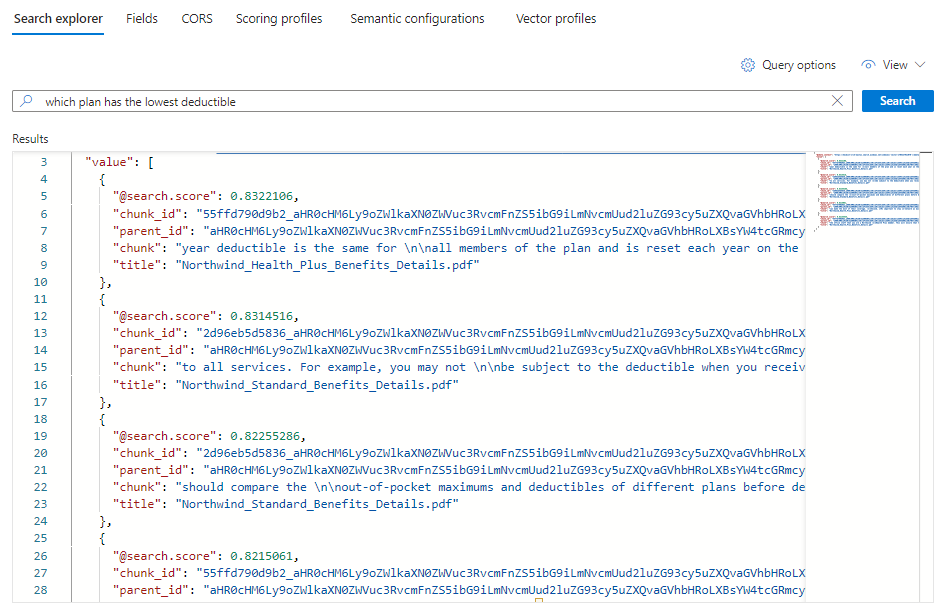

textvalue, replace the asterisk (*) with a question related to health plans, such asWhich plan has the lowest deductible?.Select Search to run the query.

Five matches should appear. Each document is a chunk of the original PDF. The title field shows which PDF the chunk comes from.

To see all of the chunks from a specific document, add a filter on the title field for a specific PDF:

```json
{
  "select": "chunk_id,parent_id,chunk,title",
  "filter": "title eq 'Benefit_Options.pdf'",
  "count": true,
  "vectorQueries": [
    {
      "kind": "text",
      "text": "*",
      "k": 5,
      "fields": "vector"
    }
  ]
}
```
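To run the same queries outside the portal, you can send these request bodies to the Search Documents REST endpoint. The following Python sketch uses only the standard library. It builds the request bodies and escapes a title filter value as an OData string literal, which doubles any single quotes. It assumes key-based authentication with a query or admin API key. The endpoint, index name, and key are placeholders you supply, and the helper names are hypothetical.

```python
import json
import urllib.request
from typing import Optional

API_VERSION = "2024-05-01-Preview"


def odata_string(value: str) -> str:
    """Quote a value as an OData string literal; single quotes are escaped by doubling."""
    return "'" + value.replace("'", "''") + "'"


def build_vector_query(question: str, k: int = 5, title: Optional[str] = None) -> dict:
    """Request body for a text-to-vector query on the "vector" field."""
    body = {
        "select": "chunk_id,parent_id,chunk,title",
        "vectorQueries": [{"kind": "text", "text": question, "k": k, "fields": "vector"}],
    }
    if title is not None:
        # Restrict results to chunks that came from one PDF.
        body["filter"] = f"title eq {odata_string(title)}"
        body["count"] = True
    return body


def search(endpoint: str, index_name: str, api_key: str, body: dict) -> dict:
    """POST the body to the Search Documents endpoint and return the parsed JSON response."""
    url = f"{endpoint}/indexes/{index_name}/docs/search?api-version={API_VERSION}"
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

For example, search("https://<your-service>.search.windows.net", "<your-index>", "<your-key>", build_vector_query("Which plan has the lowest deductible?")) returns a response whose "value" list holds the matching chunks, each with an "@search.score".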

Clean up

Azure AI Search is a billable resource. If you no longer need it, delete it from your subscription to avoid charges.

Next step

This quickstart introduced you to the Import and vectorize data wizard that creates all of the necessary objects for integrated vectorization. If you want to explore each step in detail, try an integrated vectorization sample.
