Quickstart: Create an Azure Managed Instance for Apache Cassandra cluster from the Azure portal

Azure Managed Instance for Apache Cassandra is a fully managed service for pure open-source Apache Cassandra clusters. The service also lets you override configurations to suit the specific needs of each workload, giving you maximum flexibility and control where needed.

This quickstart demonstrates how to use the Azure portal to create an Azure Managed Instance for Apache Cassandra cluster.

Prerequisites

If you don't have an Azure subscription, create a free account before you begin.

Create a managed instance cluster

Sign in to the Azure portal.

From the search bar, search for Managed Instance for Apache Cassandra and select the result.

Select the Create Managed Instance for Apache Cassandra cluster button.

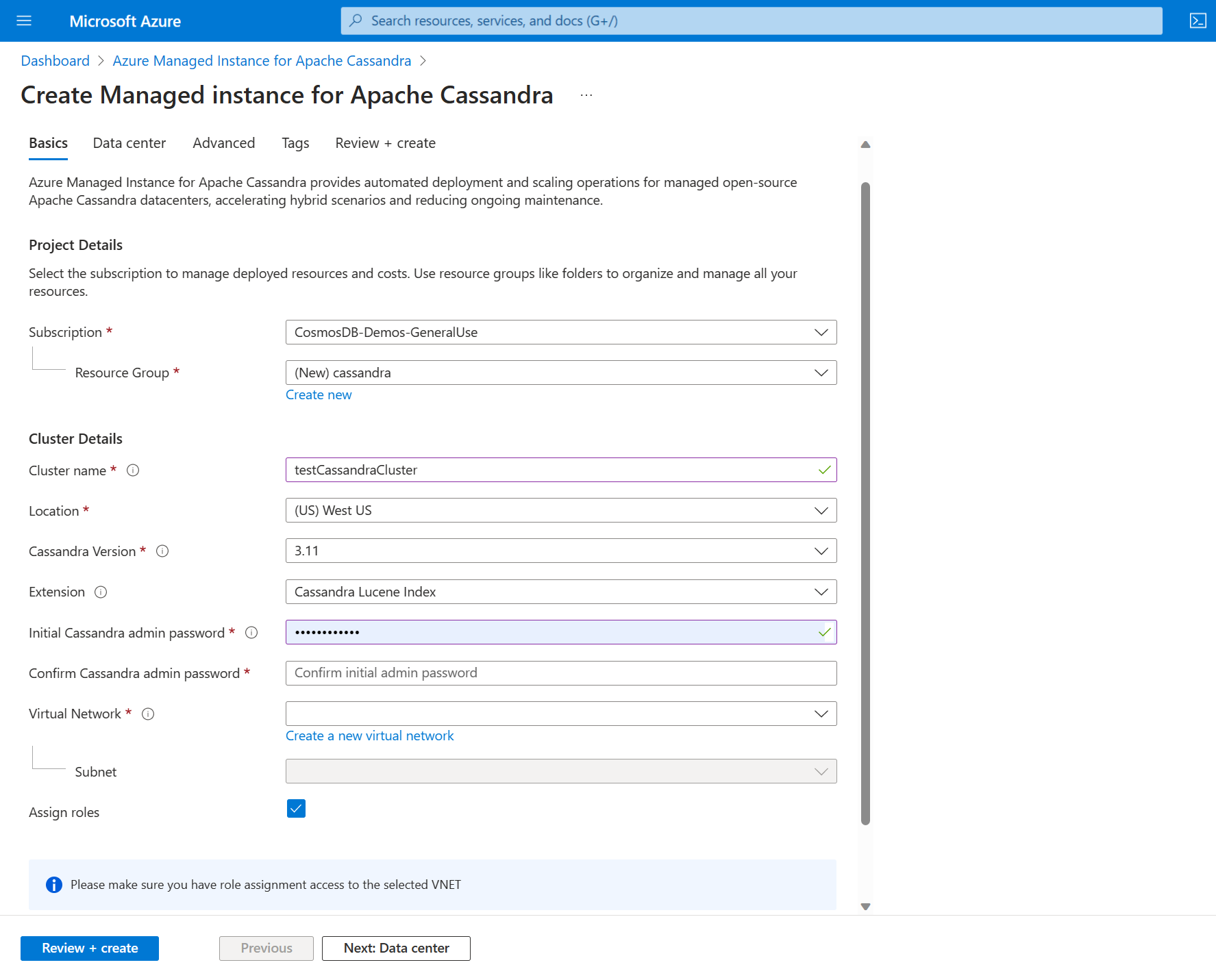

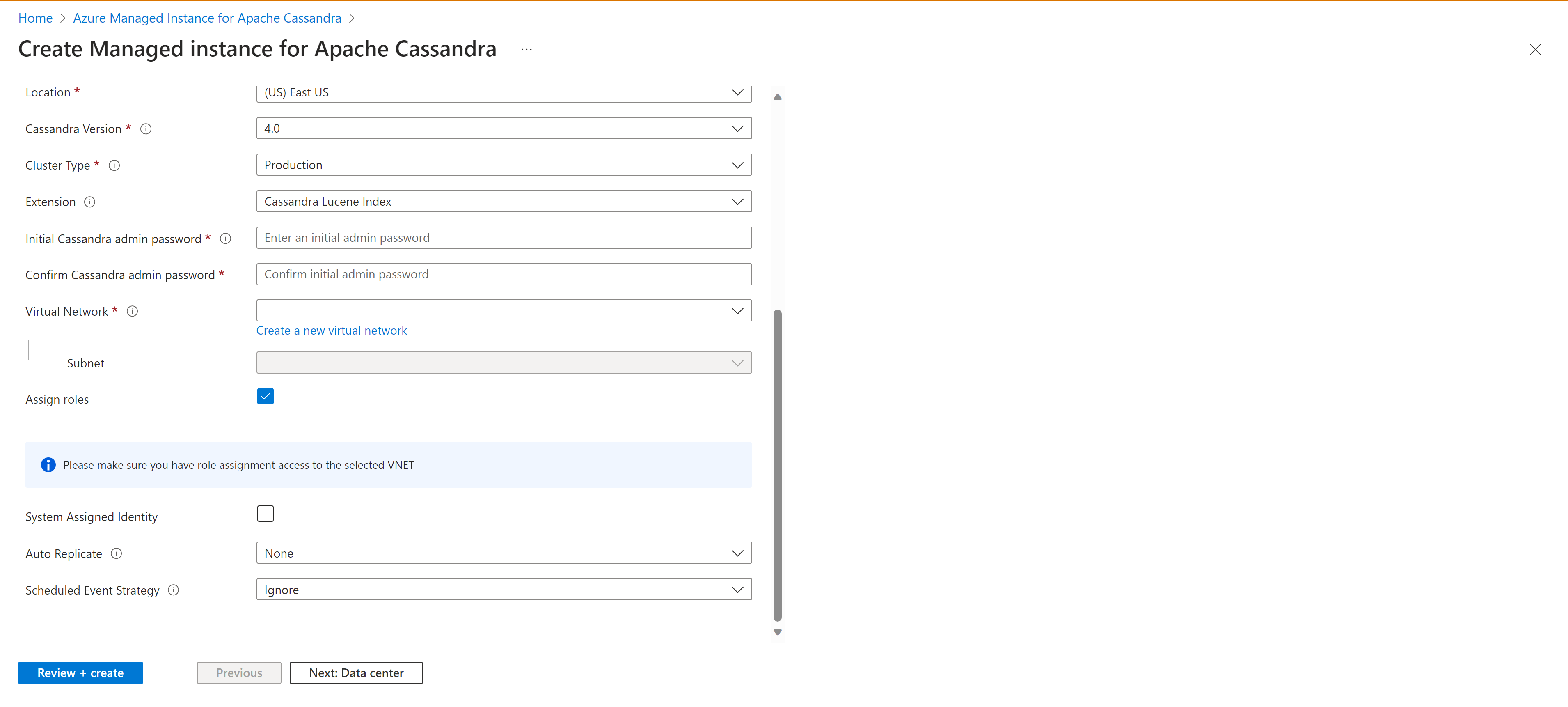

From the Create Managed Instance for Apache Cassandra pane, enter the following details:

- Subscription - From the drop-down, select your Azure subscription.

- Resource Group - Specify whether you want to create a new resource group or use an existing one. A resource group is a container that holds related resources for an Azure solution. For more information, see the Azure Resource Group overview article.

- Cluster name - Enter a name for your cluster.

- Location - The location where your cluster will be deployed.

- Cassandra version - Version of Apache Cassandra that will be deployed.

- Extension - Extensions that will be added, including Cassandra Lucene Index.

- Initial Cassandra admin password - Password that is used to create the cluster.

- Confirm Cassandra admin password - Reenter your password.

- Virtual Network - Select an existing Virtual Network and Subnet, or create a new one.

- Assign roles - Virtual Networks require special permissions in order to allow managed Cassandra clusters to be deployed. Keep this box checked if you're creating a new Virtual Network, or using an existing Virtual Network without permissions applied. If you're using a Virtual Network where you have already deployed Azure Managed Instance for Apache Cassandra clusters, uncheck this option.

Tip

If you use a VPN, you don't need to open any other connection.

Note

Deploying an Azure Managed Instance for Apache Cassandra cluster requires internet access. Deployment fails in environments where internet access is restricted. Make sure you aren't blocking access within your VNet to the following Azure services, which Managed Cassandra needs to work properly. See Required outbound network rules for more detailed information.

- Azure Storage

- Azure KeyVault

- Azure Virtual Machine Scale Sets

- Azure Monitoring

- Microsoft Entra ID

- Azure Security

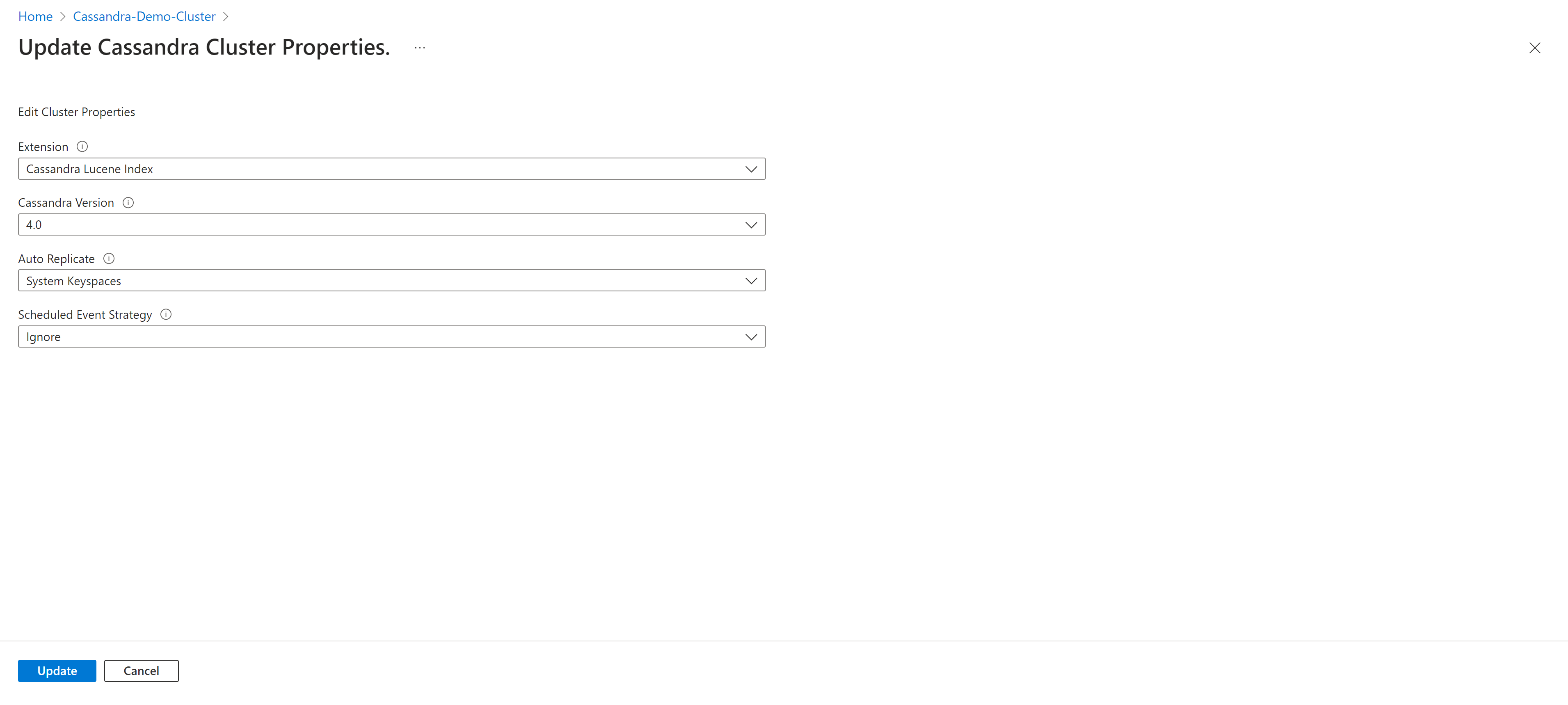

- Auto Replicate - Choose the form of auto-replication to use. For details, see the Turnkey replication section.

- Schedule Event Strategy - The strategy to be used by the cluster for scheduled events.

Tip

- StopANY means stop any node when there is a scheduled event for the node.

- StopByRack means only stop nodes in a given rack for a given scheduled event. For example, if two or more events are scheduled for nodes in different racks at the same time, only the nodes in one rack are stopped, and the nodes in the other racks are delayed.

Next, select the Data center tab.

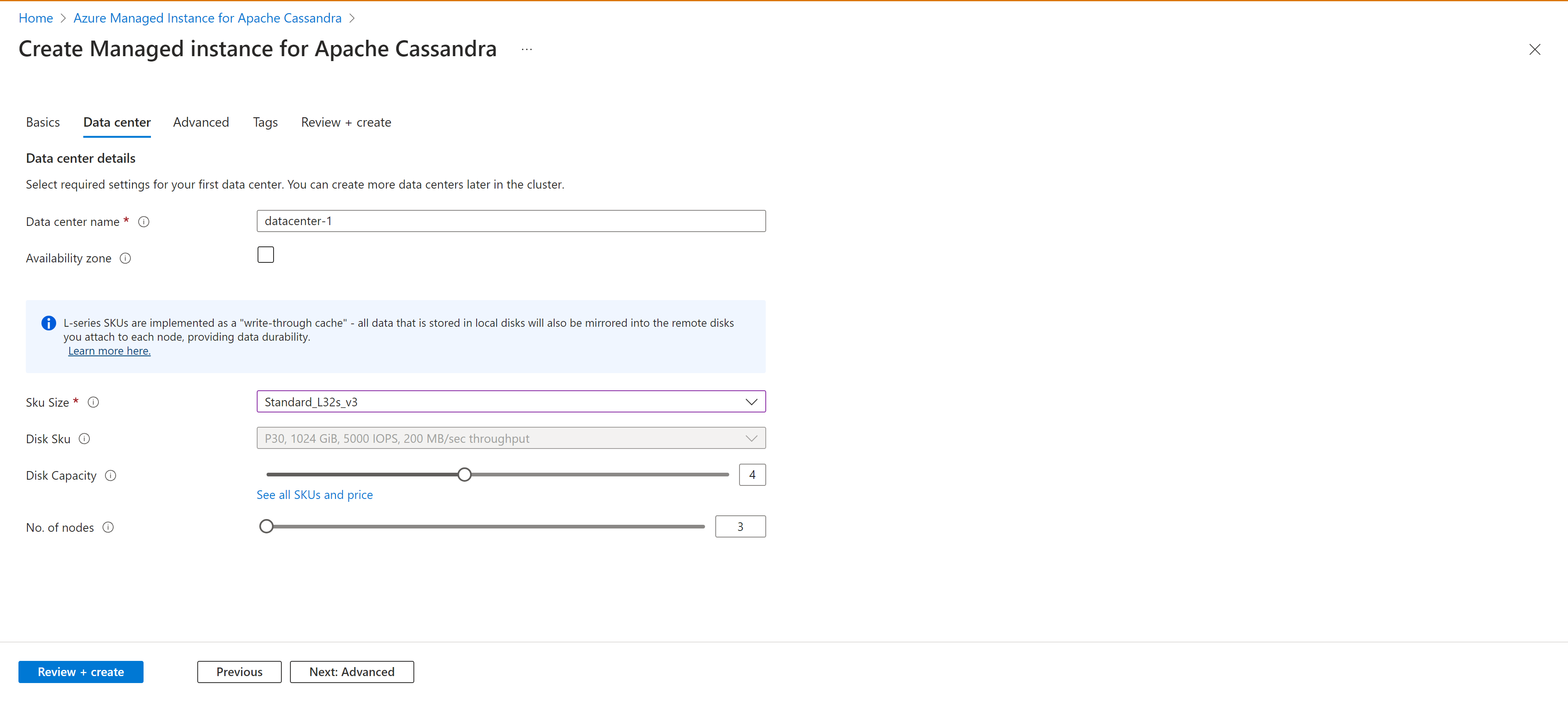

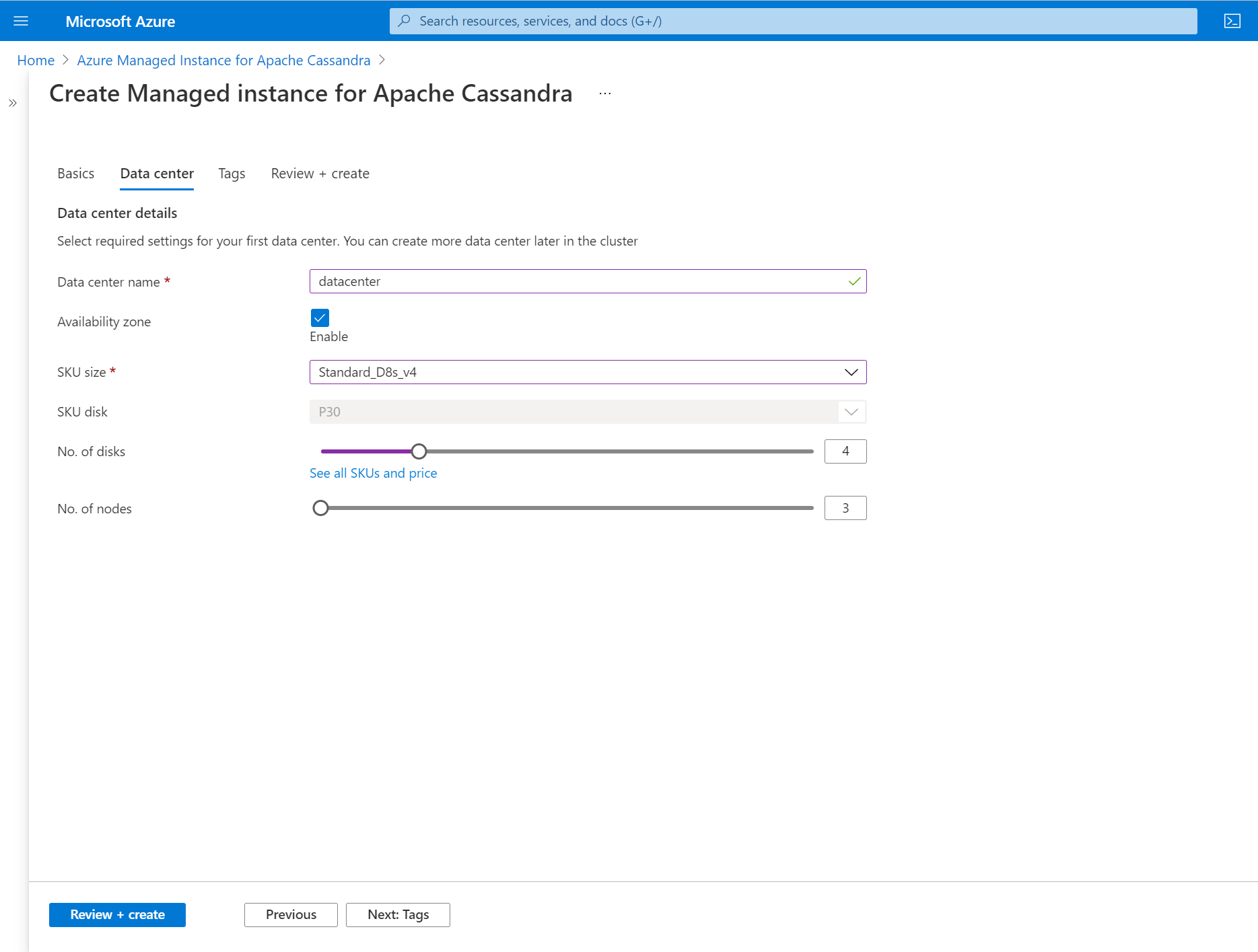

Enter the following details:

- Data center name - Type a data center name in the text field.

- Availability zone - Check this box if you want availability zones to be enabled.

- SKU Size - Choose from the available Virtual Machine SKU sizes.

Note

We have introduced write-through caching (public preview) on L-series VM SKUs. This feature aims to minimize tail latencies and improve read performance, particularly for read-intensive workloads. These SKUs have locally attached disks, which provide much higher IOPS for read operations and lower tail latency.

Important

Write-through caching is in public preview. This feature is provided without a service level agreement, and it's not recommended for production workloads. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

- No. of disks - Choose the number of P30 disks to be attached to each Cassandra node.

- No. of nodes - Choose the number of Cassandra nodes that will be deployed to this datacenter.

Warning

Availability zones are not supported in all regions. Deployments will fail if you select a region where availability zones are not supported. Check the list of supported regions before you deploy. The successful deployment of availability zones is also subject to the availability of compute resources in all of the zones in the given region. Deployments may fail if the SKU you have selected, or capacity, is not available across all zones.

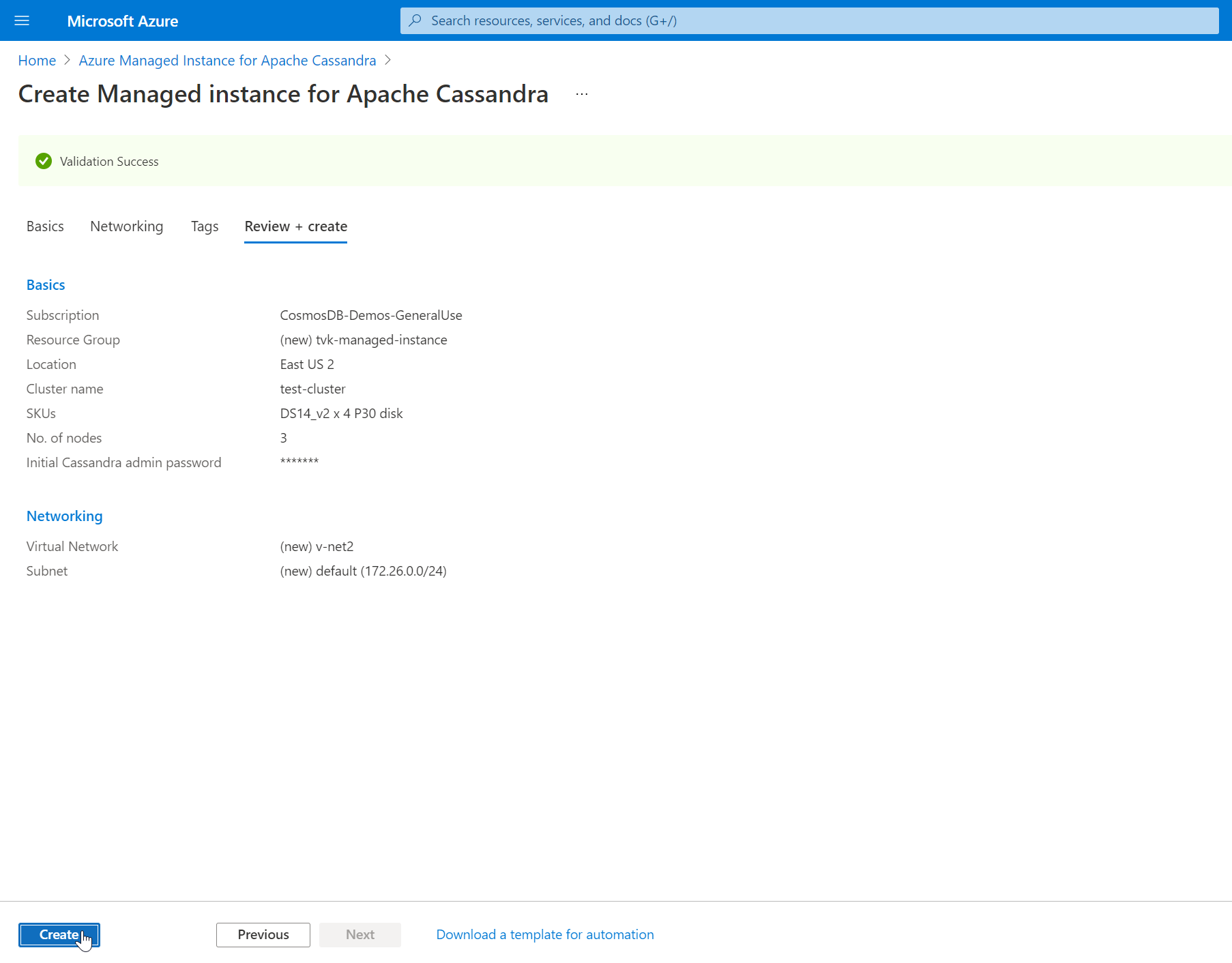

Next, select Review + create > Create.

Note

It can take up to 15 minutes for the cluster to be created.

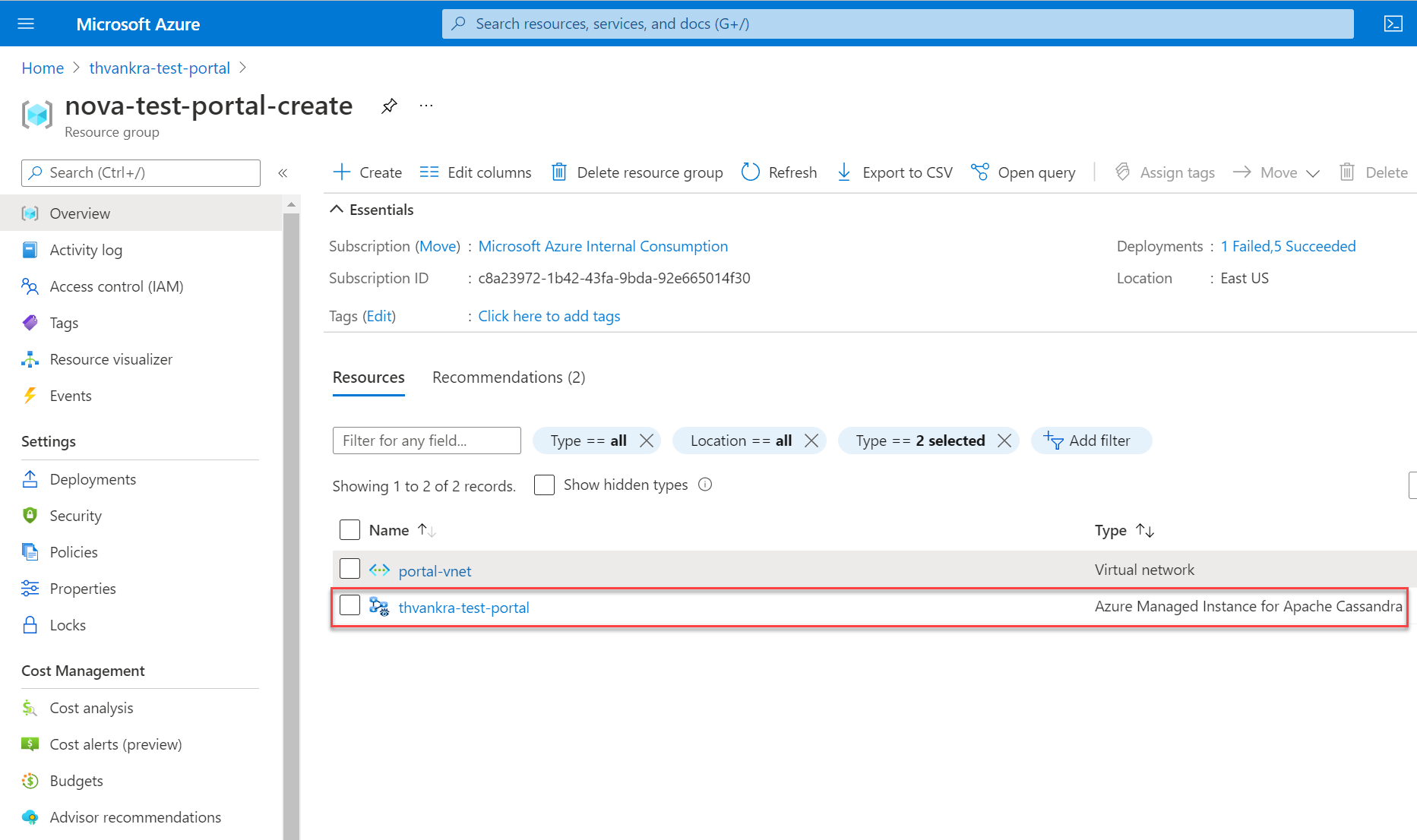

After the deployment has finished, check your resource group to see the newly created managed instance cluster:

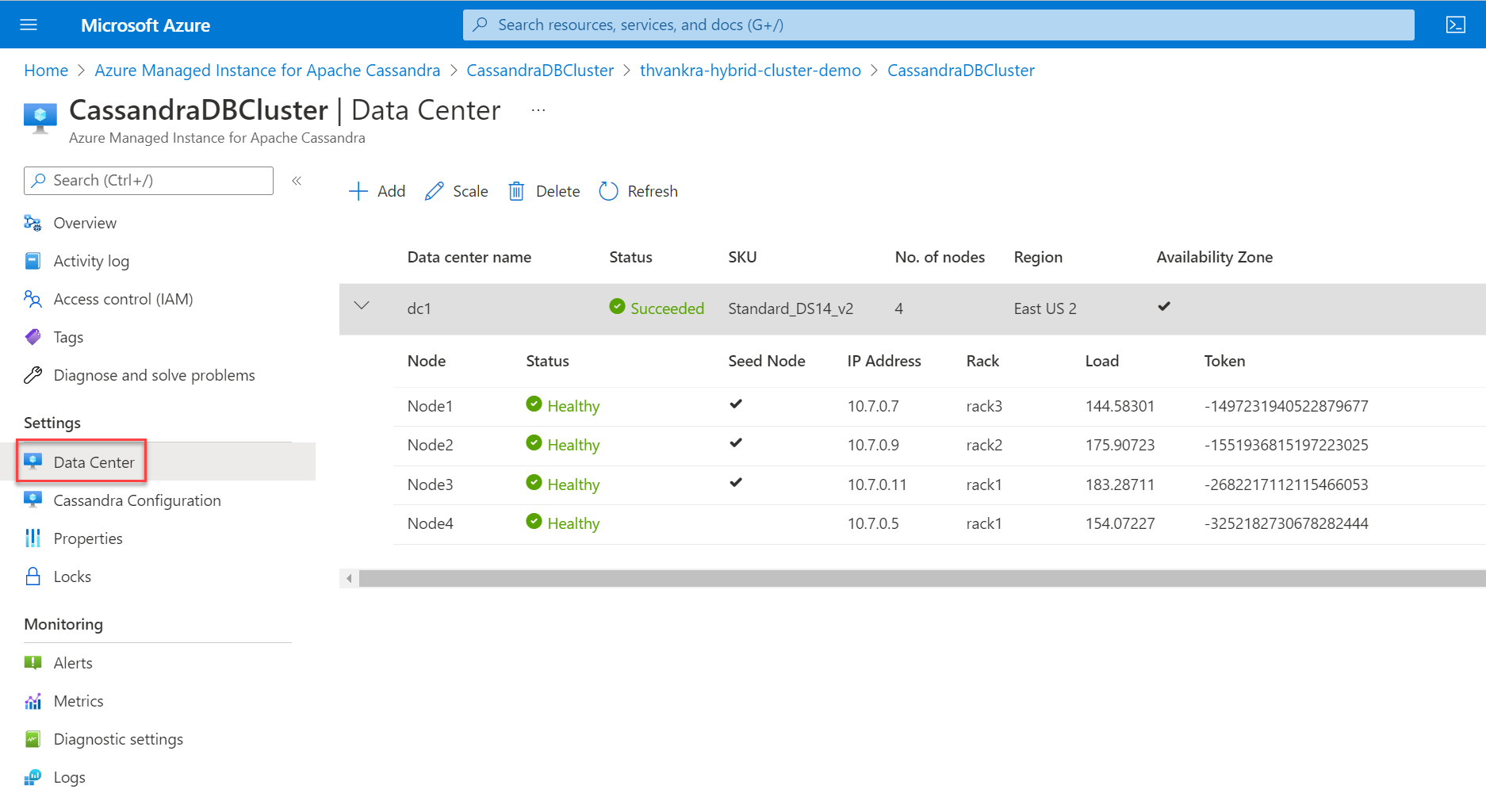

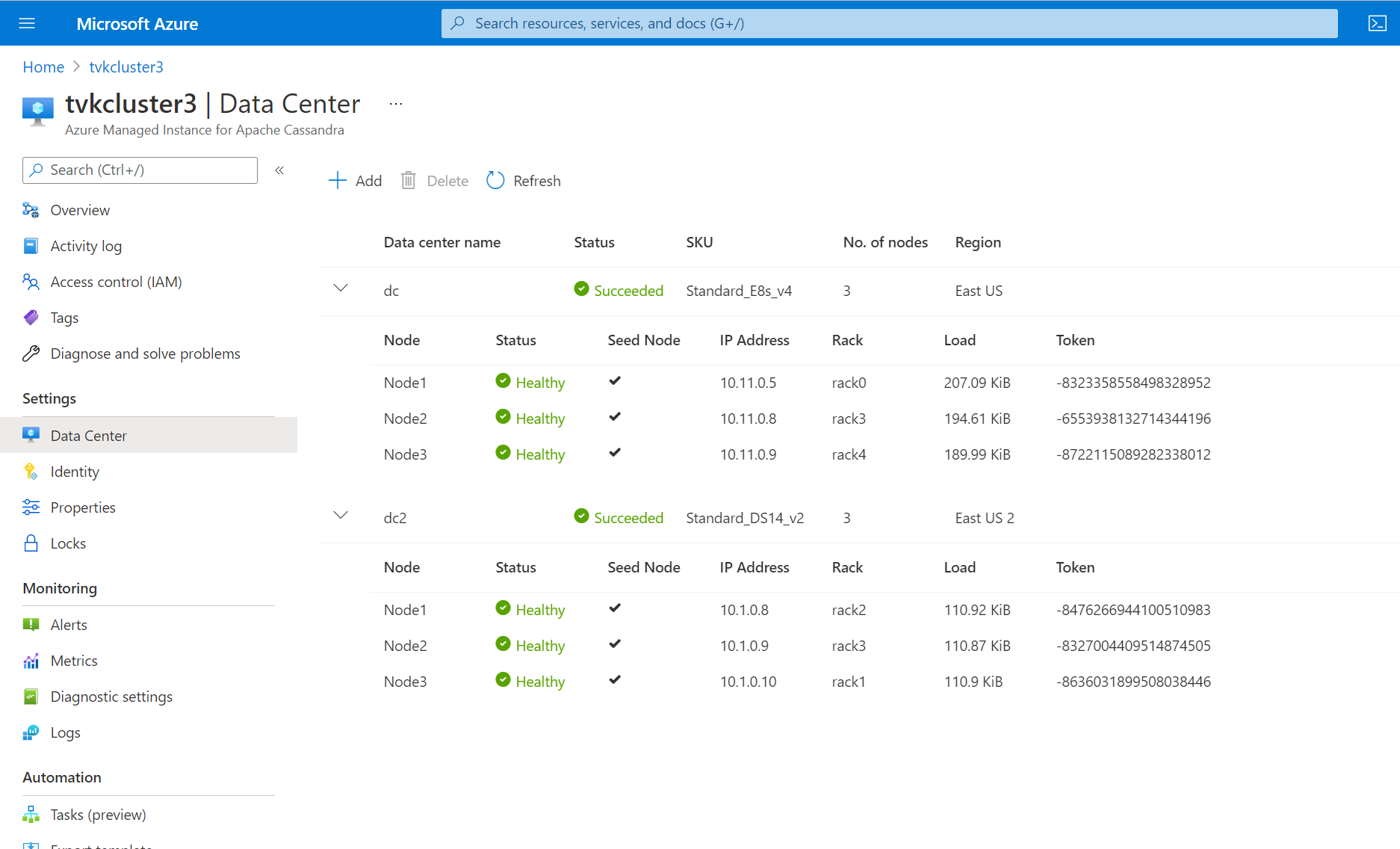

To browse through the cluster nodes, navigate to the cluster resource and open the Data Center pane to view them:

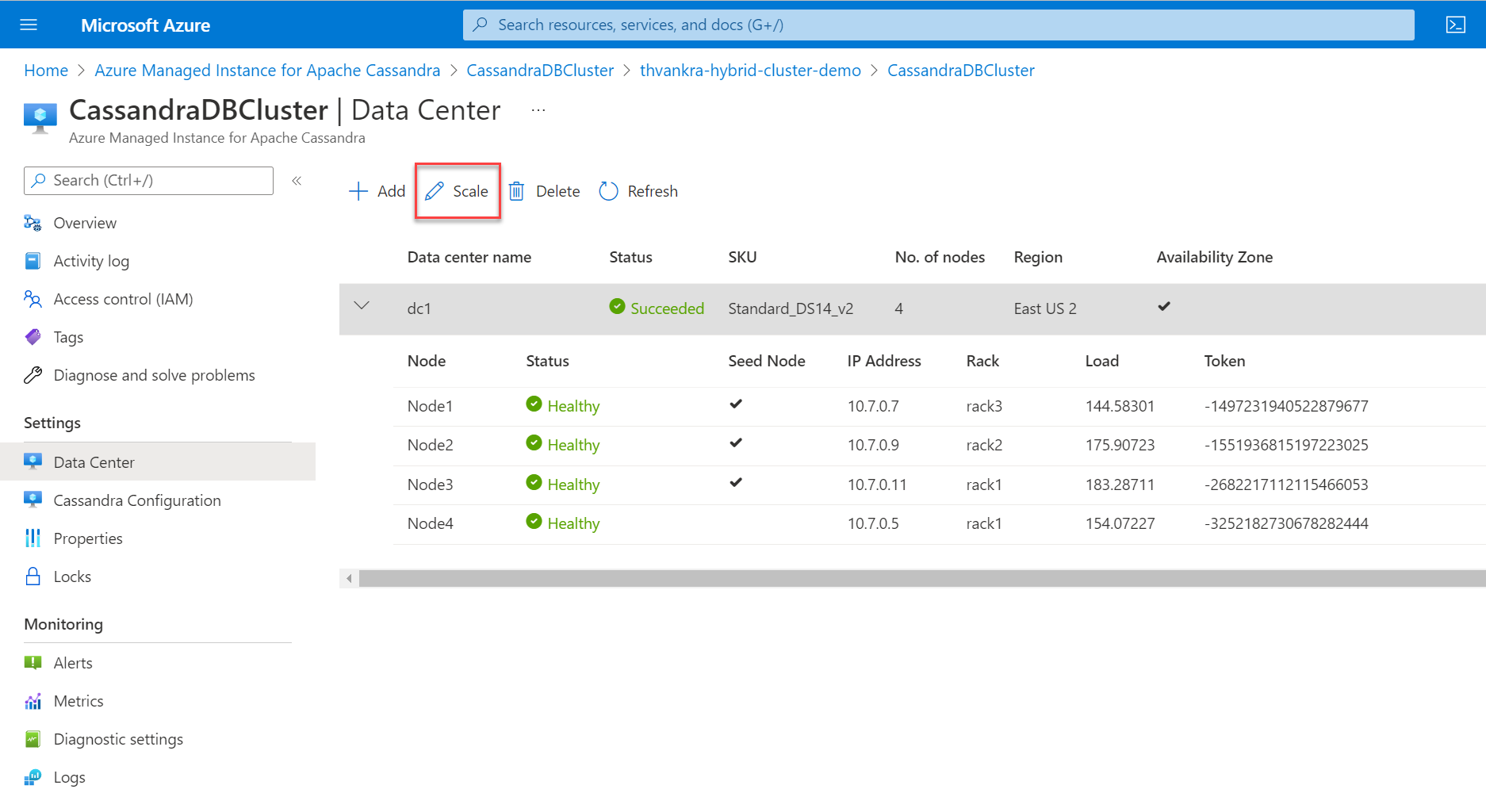

Scale a datacenter

Now that you have deployed a cluster with a single data center, you can scale either horizontally or vertically by highlighting the data center and selecting the Scale button:

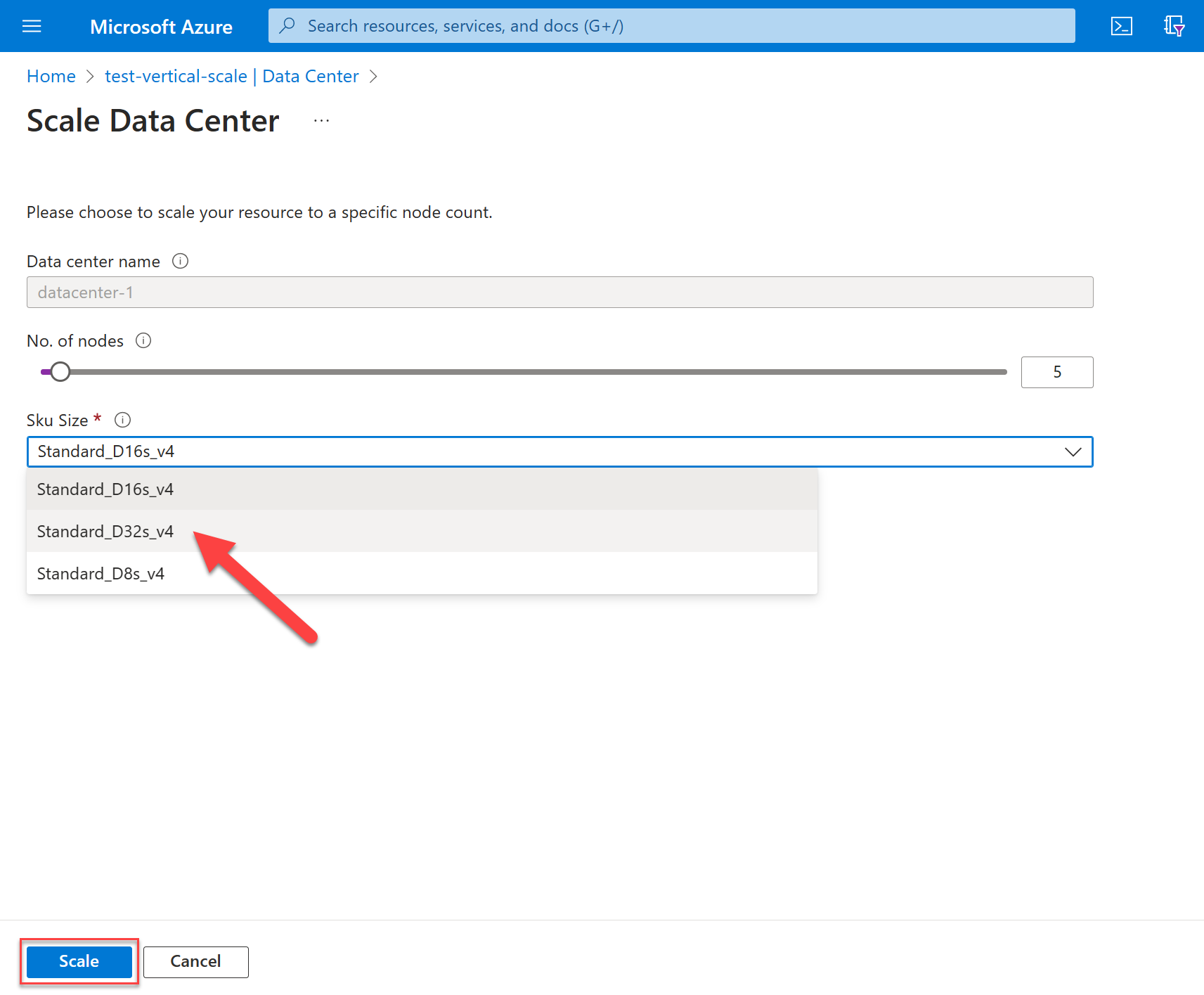

Horizontal scale

To scale out or scale in on nodes, move the slider to the desired number, or edit the value directly. When finished, select Scale.

Vertical scale

To scale the SKU size of your nodes up or down, select from the SKU Size dropdown. When finished, select Scale.

Note

The length of time a scaling operation takes depends on various factors, and it may take several minutes. When Azure notifies you that the scale operation has completed, this does not mean that all your nodes have joined the Cassandra ring. Nodes are fully commissioned when they all display a status of "healthy" and the datacenter status reads "succeeded". Scaling is an online operation and works in the same manner as described for patching in Management operations.

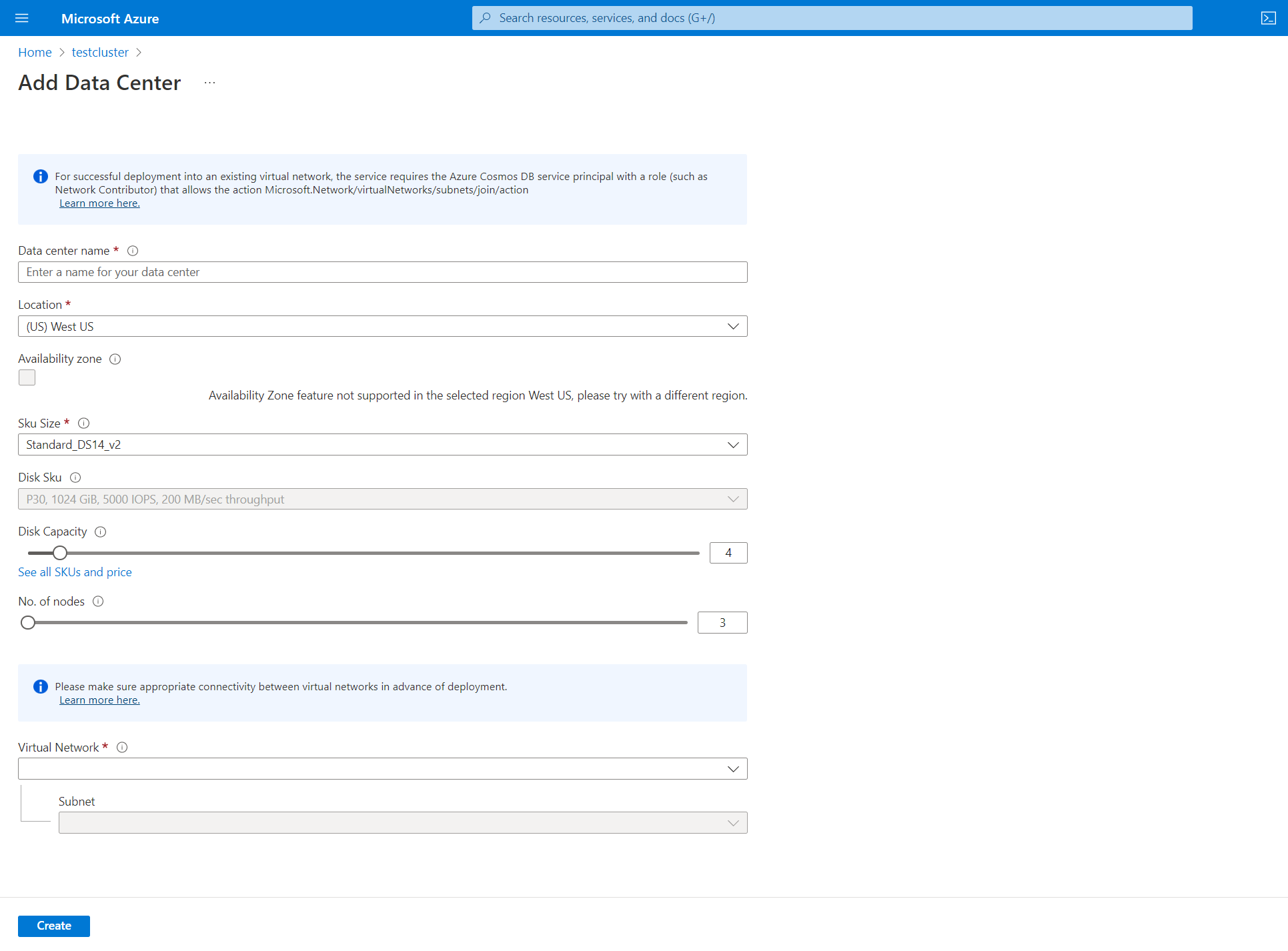
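The horizontal scale operation can also be scripted. The following is a minimal sketch, assuming the Azure CLI `az managed-cassandra datacenter update` command and its `--node-count` option. The function name `scale_datacenter_nodes` and its argument order are hypothetical, chosen only for illustration.

```shell
# Sketch: scale a data center to a given node count with the Azure CLI.
# Arguments (all placeholders you supply):
#   $1 resource group, $2 cluster name, $3 data center name, $4 node count
scale_datacenter_nodes() {
  if [ "$#" -ne 4 ]; then
    echo "usage: scale_datacenter_nodes <resource-group> <cluster> <data-center> <node-count>" >&2
    return 2
  fi
  az managed-cassandra datacenter update \
    --resource-group "$1" \
    --cluster-name "$2" \
    --data-center-name "$3" \
    --node-count "$4"
}
```

For example, `scale_datacenter_nodes my-rg my-cluster dc1 6` requests six nodes. As with the portal, the command returning does not mean every node has joined the ring, so check node health afterward.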

Add a datacenter

To add another datacenter, select the Add button in the Data Center pane:

Warning

If you are adding a datacenter in a different region, you will need to select a different virtual network. You will also need to ensure that this virtual network has connectivity to the primary region's virtual network created above (and any other virtual networks that are hosting datacenters within the managed instance cluster). See the article on virtual network peering to learn how to peer virtual networks using the Azure portal. You also need to make sure you have applied the appropriate role to your virtual network before attempting to deploy a managed instance cluster, using the following CLI command:

az role assignment create \
  --assignee a232010e-820c-4083-83bb-3ace5fc29d0b \
  --role 4d97b98b-1d4f-4787-a291-c67834d212e7 \
  --scope /subscriptions/<subscriptionID>/resourceGroups/<resourceGroupName>/providers/Microsoft.Network/virtualNetworks/<vnetName>

Fill in the appropriate fields:

- Datacenter name - Type a data center name in the text field.

- Availability zone - Check this box if you want availability zones to be enabled in this datacenter.

- Location - The location where your datacenter will be deployed.

- SKU Size - Choose from the available Virtual Machine SKU sizes.

- No. of disks - Choose the number of P30 disks to be attached to each Cassandra node.

- No. of nodes - Choose the number of Cassandra nodes that will be deployed to this datacenter.

- Virtual Network - Select an existing Virtual Network and Subnet.

Warning

Note that you can't create a new virtual network when adding a datacenter. You need to choose an existing virtual network, and as mentioned above, you need to ensure there is connectivity between the target subnets where datacenters will be deployed. You also need to apply the appropriate role to the VNet to allow deployment (see above).
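When you repeat the role assignment for several virtual networks, it can help to build the `--scope` value programmatically. The following is a minimal sketch that reuses the assignee and role IDs from the command above. The function names `vnet_scope` and `assign_cassandra_vnet_role` are hypothetical helpers, not part of the Azure CLI.

```shell
# Build the virtual network resource ID used as --scope.
# $1 subscription ID, $2 resource group name, $3 virtual network name
vnet_scope() {
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Network/virtualNetworks/%s\n' \
    "$1" "$2" "$3"
}

# Apply the role from this article to one virtual network.
# Takes the same three arguments as vnet_scope.
assign_cassandra_vnet_role() {
  az role assignment create \
    --assignee a232010e-820c-4083-83bb-3ace5fc29d0b \
    --role 4d97b98b-1d4f-4787-a291-c67834d212e7 \
    --scope "$(vnet_scope "$1" "$2" "$3")"
}
```

Calling `assign_cassandra_vnet_role <subscriptionID> <resourceGroupName> <vnetName>` once per virtual network keeps the scope string consistent across regions.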

When the datacenter is deployed, you should be able to view all datacenter information in the Data Center pane:

To ensure replication between data centers, connect to cqlsh and use the following CQL query to update the replication strategy in each keyspace to include all datacenters across the cluster (system tables will be updated automatically):

ALTER KEYSPACE "ks" WITH REPLICATION = {'class': 'NetworkTopologyStrategy', 'dc': 3, 'dc2': 3};

If you are adding a data center to a cluster where there is already data, you need to run rebuild to replicate the historical data. In Azure CLI, run the below command to execute nodetool rebuild on each node of the new data center, replacing <new dc ip address> with the IP address of the node, and <olddc> with the name of your existing data center:

az managed-cassandra cluster invoke-command \
  --resource-group $resourceGroupName \
  --cluster-name $clusterName \
  --host <new dc ip address> \
  --command-name nodetool --arguments rebuild="" "<olddc>"=""

Warning

You should not allow application clients to write to the new data center until you have applied keyspace replication changes. Otherwise, rebuild won't work, and you will need to create a support request so our team can run repair on your behalf.
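Because the keyspace change must list every data center and the rebuild must run on each new node, both steps lend themselves to small helpers. The following is a sketch only: the function names are hypothetical, the same replication factor is assumed for every data center, and `$resourceGroupName` and `$clusterName` are assumed to be set as in the command above.

```shell
# Print an ALTER KEYSPACE statement that lists every data center.
# Usage: alter_keyspace_cql <keyspace> <replication_factor> <dc> [<dc> ...]
alter_keyspace_cql() {
  keyspace="$1"
  rf="$2"
  shift 2
  map="'class': 'NetworkTopologyStrategy'"
  for dc in "$@"; do
    map="${map}, '${dc}': ${rf}"
  done
  printf 'ALTER KEYSPACE "%s" WITH REPLICATION = {%s};\n' "$keyspace" "$map"
}

# Run nodetool rebuild on each node of the new data center.
# Usage: rebuild_new_datacenter <old_dc_name> <node_ip> [<node_ip> ...]
rebuild_new_datacenter() {
  old_dc="$1"
  shift
  for node_ip in "$@"; do
    az managed-cassandra cluster invoke-command \
      --resource-group "$resourceGroupName" \
      --cluster-name "$clusterName" \
      --host "$node_ip" \
      --command-name nodetool --arguments rebuild="" "$old_dc"=""
  done
}
```

Paste the output of `alter_keyspace_cql ks 3 dc dc2` into cqlsh for each keyspace first, and only then call `rebuild_new_datacenter` with the IP addresses of the new nodes.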

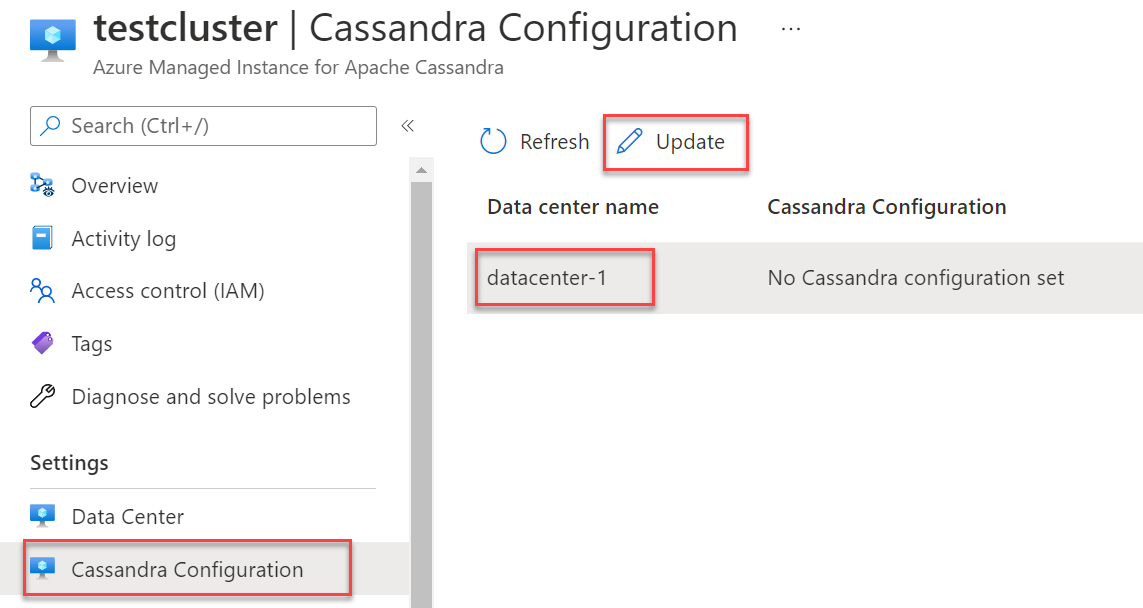

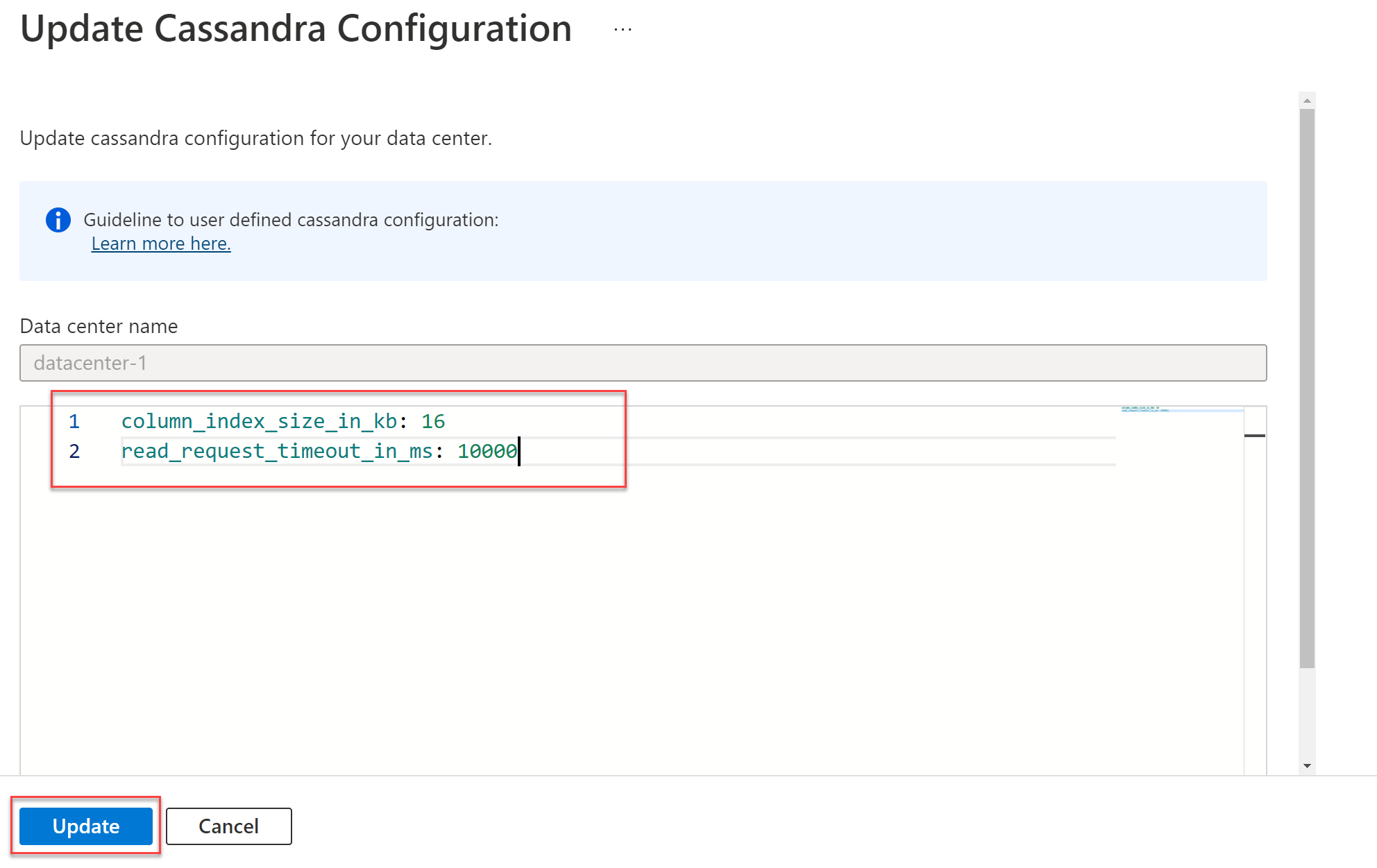

Update Cassandra configuration

The service lets you update the Cassandra YAML configuration on a datacenter through the portal or by using CLI commands. To update settings in the portal:

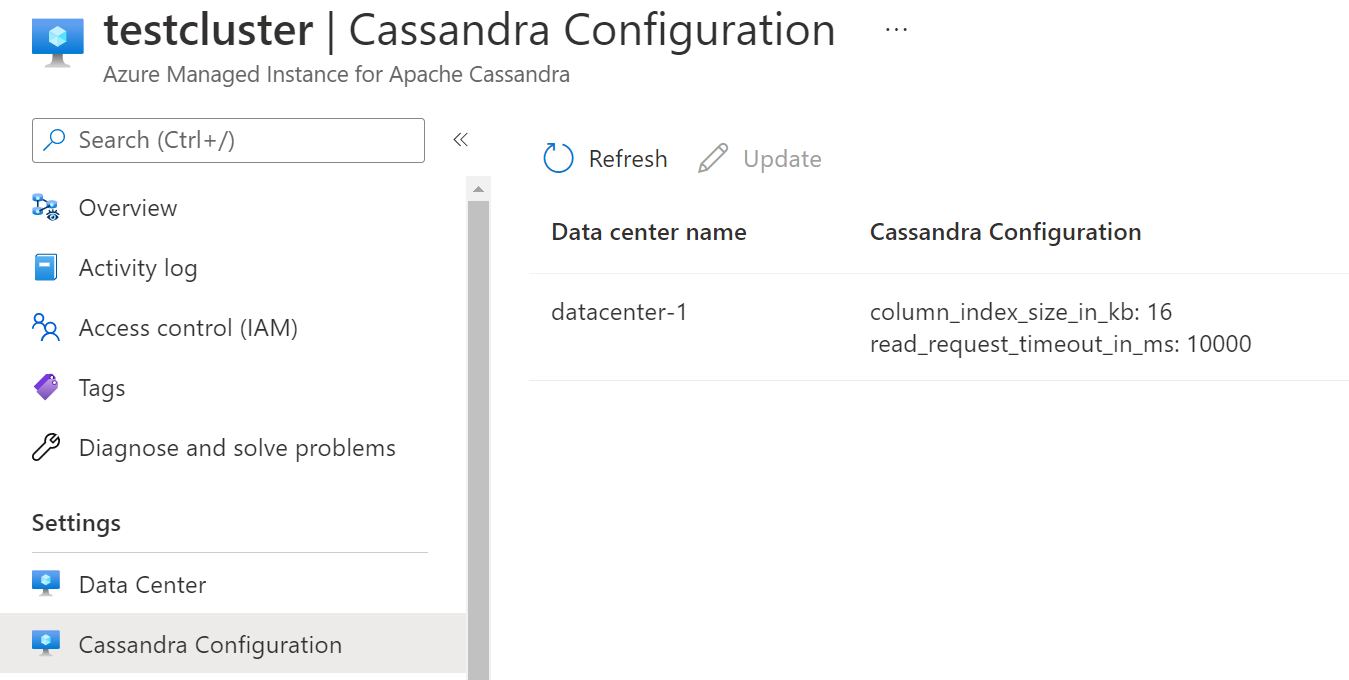

Find Cassandra Configuration under settings. Highlight the data center whose configuration you want to change, and click update:

In the window that opens, enter the field names in YAML format, as shown below. Then click update.

When update is complete, the overridden values will show in the Cassandra Configuration pane:

Note

Only overridden Cassandra configuration values are shown in the portal.

Important

Ensure the Cassandra YAML settings you provide are appropriate for the version of Cassandra you have deployed. See the Cassandra v3.11 and v4.0 configuration references for the available settings. The following YAML settings are not allowed to be updated:

- cluster_name

- seed_provider

- initial_token

- autobootstrap

- client_encryption_options

- server_encryption_options

- transparent_data_encryption_options

- audit_logging_options

- authenticator

- authorizer

- role_manager

- storage_port

- ssl_storage_port

- native_transport_port

- native_transport_port_ssl

- listen_address

- listen_interface

- broadcast_address

- hints_directory

- data_file_directories

- commitlog_directory

- cdc_raw_directory

- saved_caches_directory

- endpoint_snitch

- partitioner

- rpc_address

- rpc_interface
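Before submitting a YAML fragment, you can check it against the list above. The following is a minimal sketch: the function names and the one-key-per-line check are assumptions for illustration, and the `--base64-encoded-cassandra-yaml-fragment` option of `az managed-cassandra datacenter update` should be confirmed with `--help` for your CLI version.

```shell
# Settings this article lists as not updatable.
DISALLOWED_KEYS="cluster_name seed_provider initial_token autobootstrap
client_encryption_options server_encryption_options
transparent_data_encryption_options audit_logging_options authenticator
authorizer role_manager storage_port ssl_storage_port native_transport_port
native_transport_port_ssl listen_address listen_interface broadcast_address
hints_directory data_file_directories commitlog_directory cdc_raw_directory
saved_caches_directory endpoint_snitch partitioner rpc_address rpc_interface"

# Print each disallowed top-level key found in a YAML fragment file.
# Returns 0 when the fragment is clean, 1 otherwise.
check_yaml_fragment() {
  fragment_file="$1"
  rc=0
  for key in $DISALLOWED_KEYS; do
    if grep -Eq "^${key}[[:space:]]*:" "$fragment_file"; then
      echo "not allowed: ${key}"
      rc=1
    fi
  done
  return "$rc"
}

# Base64-encode the fragment on a single line.
encode_yaml_fragment() {
  base64 < "$1" | tr -d '\n'
}

# Validate, encode, and submit the fragment.
# $1 resource group, $2 cluster name, $3 data center name, $4 fragment file
update_cassandra_config() {
  check_yaml_fragment "$4" || return 1
  az managed-cassandra datacenter update \
    --resource-group "$1" \
    --cluster-name "$2" \
    --data-center-name "$3" \
    --base64-encoded-cassandra-yaml-fragment "$(encode_yaml_fragment "$4")"
}
```

The check only looks at keys that start in the first column, so nested keys are ignored. The service remains the authority on which settings it accepts.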

Update Cassandra version

Important

Cassandra 4.1, 5.0, and turnkey version updates are in public preview. These features are provided without a service level agreement, and they're not recommended for production workloads. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

You can perform in-place major version upgrades directly from the portal or through Azure CLI, Terraform, or ARM templates.

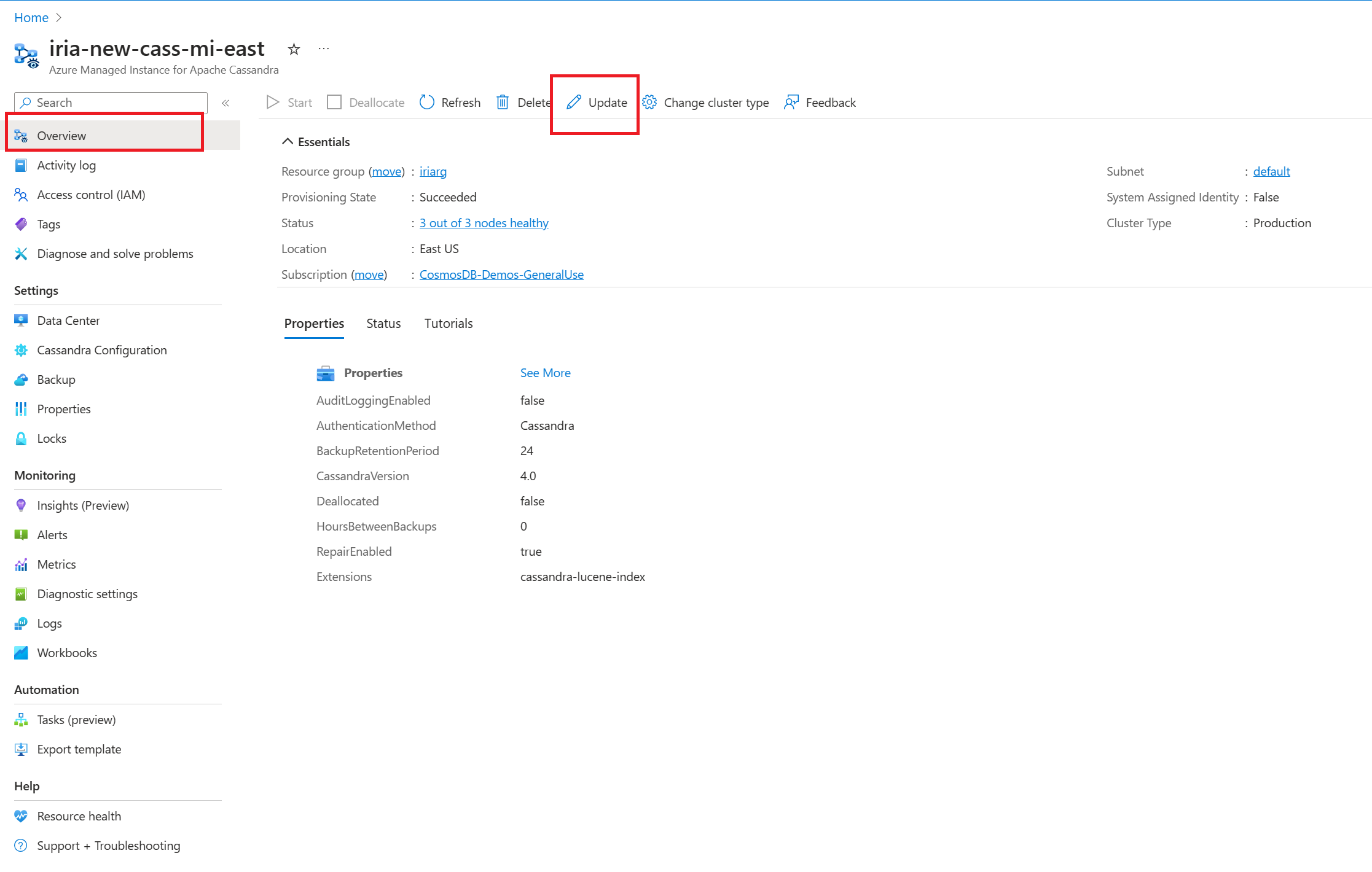

Find the Update panel from the Overview tab.

Select the Cassandra version from the dropdown.

Warning

Do not skip versions. We recommend updating only from one version to the next, for example 3.11 to 4.0, then 4.0 to 4.1.

Select Update to save.
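The "do not skip versions" rule can be expressed as a small check before you start an upgrade. The following sketch assumes the upgrade order 3.11, 4.0, 4.1, 5.0, taken from the versions mentioned in this article. The list and the function names are illustrative assumptions, not something the service exposes.

```shell
# Assumed upgrade order, based on the versions named in this article.
VERSION_ORDER="3.11 4.0 4.1 5.0"

# Print the version that directly follows the given one.
# Returns 1 if the version is unknown or already the last in the list.
next_version() {
  current="$1"
  found=0
  for v in $VERSION_ORDER; do
    if [ "$found" -eq 1 ]; then
      echo "$v"
      return 0
    fi
    if [ "$v" = "$current" ]; then
      found=1
    fi
  done
  return 1
}

# Succeed only when the target is exactly one step after the current version.
is_single_step_upgrade() {
  step="$(next_version "$1")" || return 1
  [ "$step" = "$2" ]
}
```

With this list, `is_single_step_upgrade 3.11 4.0` succeeds, while `is_single_step_upgrade 3.11 4.1` fails because it skips 4.0.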

Turnkey replication

Cassandra 5.0 introduces a streamlined approach to deploying multi-region clusters. Turnkey replication makes multi-region clusters easier to set up and manage, and reduces the complexity traditionally associated with deploying and maintaining multi-region configurations.

Tip

- None: Auto replicate is set to none.

- SystemKeyspaces: Auto-replicate all system keyspaces (for example, system_auth and system_traces).

- AllKeyspaces: Auto-replicate all keyspaces and monitor if new keyspaces are created and then apply auto-replicate settings automatically.

Auto-replication scenarios

- When adding a new data center, the auto-replicate feature in Cassandra will seamlessly execute nodetool rebuild to ensure the successful replication of data across the added data center.

- Removing a data center triggers an automatic removal of the specific data center from the keyspaces.

External data centers, such as those hosted on-premises, can be included in the keyspaces by using the external data center property. This lets Cassandra use these external data centers as sources for the rebuild process.

Warning

Setting auto-replicate to AllKeyspaces will change your keyspace replication to include:

WITH REPLICATION = { 'class' : 'NetworkTopologyStrategy', 'on-prem-datacenter-1' : 3, 'mi-datacenter-1': 3 }

If this is not the topology you want, you will need to use SystemKeyspaces, adjust them yourself, and run nodetool rebuild manually on the Azure Managed Instance for Apache Cassandra cluster.

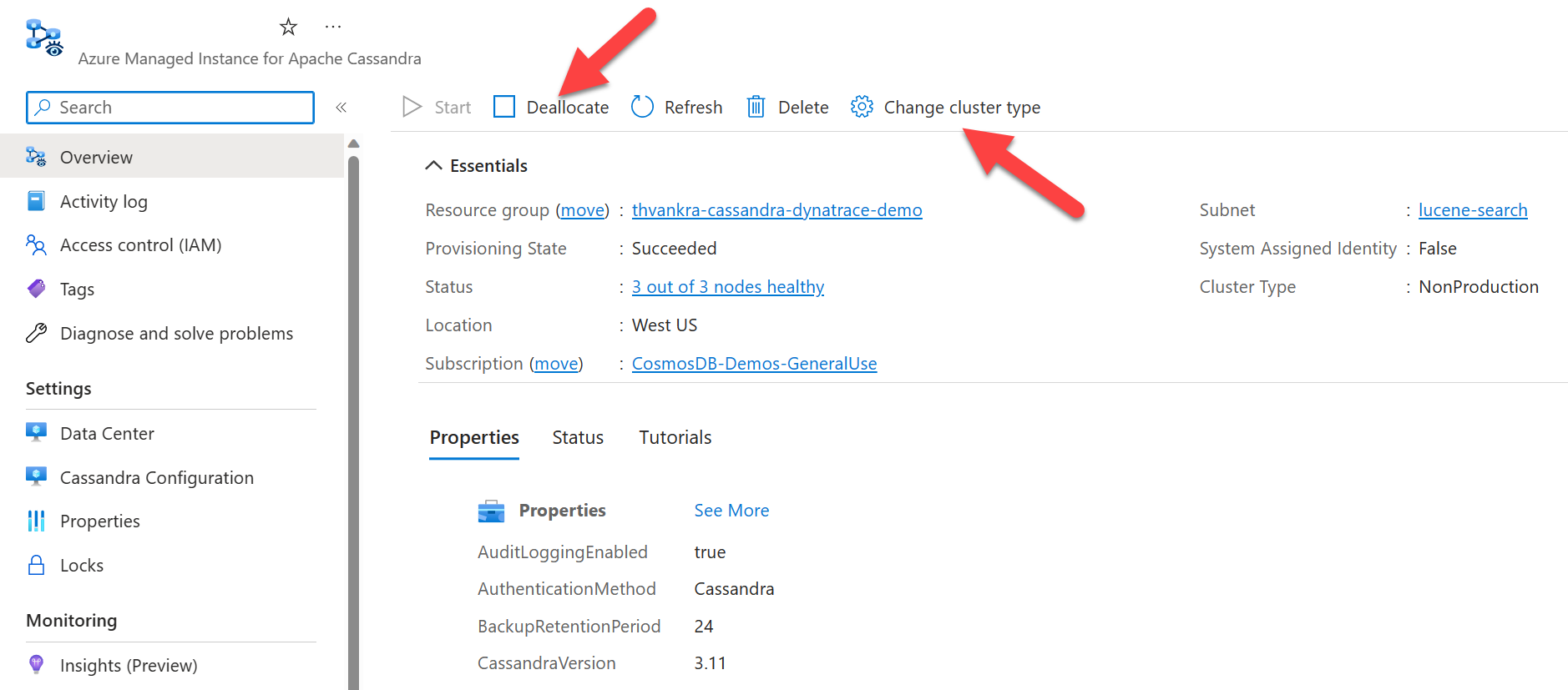

De-allocate cluster

- For non-production environments, you can pause/de-allocate resources in the cluster in order to avoid being charged for them (you will continue to be charged for storage). First change cluster type to NonProduction, then deallocate.

Warning

Do not execute any schema or write operations during de-allocation - this can lead to data loss and in rare cases schema corruption requiring manual intervention from the support team.
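Pausing and resuming can also be done from a script once the cluster type is NonProduction. The following is a minimal sketch, assuming the Azure CLI `az managed-cassandra cluster deallocate` and `az managed-cassandra cluster start` commands. The wrapper function names are hypothetical.

```shell
# Sketch: pause and resume a non-production cluster with the Azure CLI.
# $1 resource group, $2 cluster name (placeholders you supply).
deallocate_cluster() {
  az managed-cassandra cluster deallocate \
    --resource-group "$1" \
    --cluster-name "$2"
}

start_cluster() {
  az managed-cassandra cluster start \
    --resource-group "$1" \
    --cluster-name "$2"
}
```

The warning above still applies: stop schema changes and writes before calling `deallocate_cluster`.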

Troubleshooting

If you encounter an error when applying permissions to your Virtual Network using Azure CLI, such as Cannot find user or service principal in graph database for 'e5007d2c-4b13-4a74-9b6a-605d99f03501', you can apply the same permission manually from the Azure portal. Learn how to do this here.

Note

The Azure Cosmos DB role assignment is used for deployment purposes only. Azure Managed Instance for Apache Cassandra has no backend dependencies on Azure Cosmos DB.

Connecting to your cluster

Azure Managed Instance for Apache Cassandra does not create nodes with public IP addresses, so to connect to your newly created Cassandra cluster, you will need to create another resource inside the VNet. This could be an application, or a Virtual Machine with Apache's open-source query tool CQLSH installed. You can use a template to deploy an Ubuntu Virtual Machine.

Connecting from CQLSH

After the virtual machine is deployed, use SSH to connect to the machine, and install CQLSH using the below commands:

# Install the OpenJDK 8 JDK and JRE

sudo apt update

sudo apt install openjdk-8-jdk openjdk-8-jre

# Install the Cassandra libraries in order to get CQLSH:

echo "deb http://archive.apache.org/dist/cassandra/debian 311x main" | sudo tee -a /etc/apt/sources.list.d/cassandra.sources.list

curl https://downloads.apache.org/cassandra/KEYS | sudo apt-key add -

sudo apt-get update

sudo apt-get install cassandra

# Export the SSL variables:

export SSL_VERSION=TLSv1_2

export SSL_VALIDATE=false

# Connect to CQLSH (replace <IP> with the private IP address of a node in your datacenter):

host="<IP>"

initial_admin_password="Password provided when creating the cluster"

cqlsh "$host" 9042 -u cassandra -p "$initial_admin_password" --ssl

Connecting from an application

As with CQLSH, connecting from an application using one of the supported Apache Cassandra client drivers requires SSL encryption to be enabled and certificate verification to be disabled. See samples for connecting to Azure Managed Instance for Apache Cassandra using Java, .NET, Node.js and Python.

Disabling certificate verification is recommended because certificate verification will not work unless you map the IP addresses of your cluster nodes to the appropriate domain. If you have an internal policy that mandates SSL certificate verification for any application, you can facilitate this by adding entries like 10.0.1.5 host1.managedcassandra.cosmos.azure.com to your hosts file for each node. If you take this approach, you also need to add new entries whenever you add nodes.
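If you do maintain hosts file entries, generating them from the list of node IP addresses avoids typos. The following sketch follows the pattern of the example entry above. Numbering the hosts sequentially from host1 is an assumption for illustration, and the function name `hosts_entries` is hypothetical.

```shell
# Print one hosts-file line per node IP address, numbering hosts from 1.
# Usage: hosts_entries <ip> [<ip> ...]
hosts_entries() {
  i=1
  for ip in "$@"; do
    printf '%s host%d.managedcassandra.cosmos.azure.com\n' "$ip" "$i"
    i=$((i + 1))
  done
}
```

Review the output before appending it to your hosts file, and regenerate it whenever nodes are added.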

For Java, we also highly recommend enabling speculative execution policy where applications are sensitive to tail latency. You can find a demo illustrating how this works and how to enable the policy here.

Note

In the vast majority of cases it should not be necessary to configure or install certificates (rootCA, node or client, truststores, etc) to connect to Azure Managed Instance for Apache Cassandra. SSL encryption can be enabled by using the default truststore and password of the runtime being used by the client (see Java, .NET, Node.js and Python samples), because Azure Managed Instance for Apache Cassandra certificates will be trusted by that environment. In rare cases, if the certificate is not trusted, you may need to add it to the truststore.

Configuring client certificates (optional)

Configuring client certificates is optional. A client application can connect to Azure Managed Instance for Apache Cassandra as long as the above steps have been taken. However, if you prefer, you can also create and configure client certificates for authentication. In general, there are two ways of creating certificates:

- Self-signed certificates. This means a private and public (no CA) certificate for each node. In this case, we need all public certificates.

- Certs signed by a CA. This can be a self-signed CA or even a public one. In this case we need the root CA certificate (refer to instructions on preparing SSL certificates for production), and all intermediaries (if applicable).

If you want to implement client-to-node certificate authentication or mutual Transport Layer Security (mTLS), you need to provide the certificates via Azure CLI. The below command will upload and apply your client certificates to the truststore for your Cassandra Managed Instance cluster (i.e. you do not need to edit cassandra.yaml settings). Once applied, your cluster will require Cassandra to verify the certificates when a client connects (see require_client_auth: true in Cassandra client_encryption_options).

resourceGroupName='<Resource_Group_Name>'

clusterName='<Cluster Name>'

az managed-cassandra cluster update \
  --resource-group "$resourceGroupName" \
  --cluster-name "$clusterName" \
  --client-certificates /usr/csuser/clouddrive/rootCert.pem /usr/csuser/clouddrive/intermediateCert.pem

Clean up resources

If you're not going to continue to use this managed instance cluster, delete it with the following steps:

- From the left-hand menu of Azure portal, select Resource groups.

- From the list, select the resource group you created for this quickstart.

- On the resource group Overview pane, select Delete resource group.

- In the next window, enter the name of the resource group to delete, and then select Delete.
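The same cleanup can be done from the command line. The following is a minimal sketch, assuming the Azure CLI `az group delete` command. The wrapper name is hypothetical, and the CLI's own confirmation prompt is left in place because deleting a resource group removes everything in it.

```shell
# Sketch: delete the resource group created for this quickstart.
# $1 resource group name (placeholder you supply).
delete_quickstart_resource_group() {
  if [ -z "$1" ]; then
    echo "usage: delete_quickstart_resource_group <resource-group>" >&2
    return 2
  fi
  az group delete --name "$1"
}
```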

Next steps

In this quickstart, you learned how to create an Azure Managed Instance for Apache Cassandra cluster using the Azure portal. You can now start working with the cluster.
