Warning

End of support for Microsoft Fabric Runtime 1.2 has been announced. Runtime 1.2 will be deprecated and disabled on March 31, 2026. We strongly recommend upgrading your Fabric workspace and environments to Runtime 1.3 (Apache Spark 3.5 and Delta Lake 3.2).

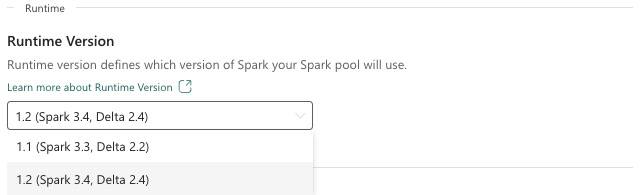

The Microsoft Fabric Runtime is an Azure-integrated platform based on Apache Spark that enables the execution and management of data engineering and data science experiences. This document covers the Runtime 1.2 components and versions.

The major components of Runtime 1.2 include:

- Apache Spark: 3.4.1

- Operating System: Mariner 2.0

- Java: 11

- Scala: 2.12.17

- Python: 3.10

- Delta Lake: 2.4.0

- R: 4.2.2

Tip

Always use the most recent generally available (GA) runtime version for production workloads. Currently, that is Runtime 1.3.

Microsoft Fabric Runtime 1.2 comes with a collection of default-level packages, including a full Anaconda installation and commonly used libraries for Java/Scala, Python, and R. These libraries are automatically included when you use notebooks or jobs in the Microsoft Fabric platform. Refer to the documentation for a complete list of libraries. Microsoft Fabric periodically rolls out maintenance updates for Runtime 1.2 that provide bug fixes, performance enhancements, and security patches. Staying up to date ensures optimal performance and reliability for your data processing tasks.

New features and improvements of Spark Release 3.4.1

Apache Spark 3.4.0 is the fifth release in the 3.x line. This release, driven by the open-source community, resolved over 2,600 Jira tickets. It introduces a Python client for Spark Connect and enhances Structured Streaming with asynchronous progress tracking and Python stateful processing. It expands pandas API coverage with NumPy input support and simplifies migration from traditional data warehouses through ANSI compliance and new built-in functions. It also improves development productivity and debuggability with memory profiling. Runtime 1.2 is based on Apache Spark 3.4.1, a maintenance release focused on stability fixes.

Key highlights

For the full release notes, see both Spark 3.4.0 and Spark 3.4.1.

New custom query optimizations

Concurrent Writes Support in Spark

Running parallel data insertions into the same table with a SQL INSERT INTO query commonly fails with a 404 error and the message 'Operation failed: The specified path doesn't exist'. This error can result in data loss. Our new feature, the File Output Committer Algorithm, resolves this issue and lets customers run parallel data insertions seamlessly.

To use this feature, enable the spark.sql.enable.concurrentWrites feature flag. Starting with Runtime 1.2 (Spark 3.4), the flag is enabled by default; the feature is also available in other Spark 3 versions, but isn't enabled by default there. The feature doesn't support parallel INSERT OVERWRITE queries in which each concurrent job dynamically overwrites data in different partitions of the same table. For that scenario, Spark offers an alternative: set spark.sql.sources.partitionOverwriteMode to dynamic.
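The difference between the two partitionOverwriteMode values is easiest to see on a toy model. The following Python sketch mimics an INSERT OVERWRITE with no static partition spec. In static mode (Spark's default), every partition matching the spec, here the whole table, is deleted before the write; in dynamic mode, only partitions that receive new rows are replaced. The table layout, the row schema, and the `insert_overwrite` helper are hypothetical, and the sketch models the semantics only, not how Spark performs the write.

```python
from typing import Dict, List, Tuple

# Hypothetical schema: (partition_value, payload). The table maps each
# partition value (for example, a date string) to the rows stored in it.
Row = Tuple[str, int]
Table = Dict[str, List[Row]]


def insert_overwrite(table: Table, new_rows: List[Row], mode: str) -> Table:
    """Return the table after an INSERT OVERWRITE with no static partition spec."""
    if mode not in ("static", "dynamic"):
        raise ValueError(f"unknown partitionOverwriteMode: {mode!r}")

    # Group the incoming rows by partition value.
    incoming: Table = {}
    for row in new_rows:
        incoming.setdefault(row[0], []).append(row)

    if mode == "static":
        # Static mode: all partitions matching the (empty) spec, i.e. the
        # whole table, are deleted first, so only the new data remains.
        return incoming

    # Dynamic mode: replace only the partitions that receive new rows and
    # keep every other partition as it was.
    result: Table = {part: list(rows) for part, rows in table.items()}
    result.update(incoming)
    return result
```

Running two jobs that each overwrite a different date partition, one after the other, shows the problem: in static mode the second job erases the partition the first job wrote, while in dynamic mode both partitions survive. In a notebook, the session setting itself is applied with `spark.conf.set("spark.sql.sources.partitionOverwriteMode", "dynamic")`.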

Smart reads, which skip files from failed jobs

In the current Spark committer system, when a job that inserts into a table fails after some of its tasks have succeeded, the files written by those successful tasks remain alongside the files written by successful jobs. This makes it hard for users to tell which files belong to successful jobs and which belong to failed ones. Moreover, when one job reads from a table while another job is concurrently inserting data into the same table, the reading job might access uncommitted data. If the write job then fails, the reading job could process incorrect data.

Our new feature, controlled by the spark.sql.auto.cleanup.enabled flag, addresses this issue. When the flag is enabled, Spark automatically skips uncommitted files when it runs spark.read or SELECT queries against a table. Files written before the feature was enabled continue to be read as usual.
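The read-side behavior amounts to filtering a directory listing by commit status. The Python sketch below assumes a hypothetical naming scheme: a data file carries `tid-<digits>` somewhere in its name, and a job's commit marker is named exactly `_committed_<digits>`. The real Fabric file names may differ, and the real marker also records which files belong to the job, which this sketch ignores. Files without a `tid-` identifier are treated as written before the feature was enabled and are always read.

```python
import re
from typing import Iterable, List, Set

# Hypothetical patterns, assumed for illustration only:
#   data files:     part-00000-tid-<jobID>-<suffix>
#   commit markers: _committed_<jobID>
_TID = re.compile(r"tid-(\d+)")
_COMMITTED = re.compile(r"^_committed_(\d+)$")


def readable_files(listing: Iterable[str]) -> List[str]:
    """Return the data files a reader should see, in listing order."""
    names = list(listing)

    # First pass: collect the IDs of jobs that wrote a commit marker.
    committed: Set[str] = set()
    for name in names:
        match = _COMMITTED.match(name)
        if match:
            committed.add(match.group(1))

    # Second pass: keep legacy files and files from committed jobs.
    result: List[str] = []
    for name in names:
        if name.startswith("_"):
            continue  # markers and other metadata files are not data
        match = _TID.search(name)
        if match is None:
            result.append(name)  # no job ID: written before the feature, read as usual
        elif match.group(1) in committed:
            result.append(name)  # the job that wrote this file committed
        # Otherwise the file comes from a failed or still-running job: skip it.
    return result
```

The flag itself is set like any other Spark property, for example `spark.conf.set("spark.sql.auto.cleanup.enabled", "true")`, assuming your environment allows changing it at session level.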

Here are the visible changes:

- All files now include a tid-{jobID} identifier in their filenames.

- Instead of the _SUCCESS marker typically created in the output location when a job completes successfully, a new _committed_{jobID} marker is generated. This marker associates successful job IDs with specific filenames.

- We introduced a new SQL command that users can run periodically to manage storage and clean up uncommitted files. The syntax for this command is as follows:

  - To clean up a specific directory:

    CLEANUP ('/path/to/dir') [RETAIN number HOURS];

  - To clean up a specific table:

    CLEANUP [db_name.]table_name [RETAIN number HOURS];

  In this syntax, path/to/dir is the location URI where cleanup is required, and number is a double value giving the retention period in hours. The default retention period is seven days.

- We introduced a new configuration option, spark.sql.deleteUncommittedFilesWhileListing, which is set to false by default. Enabling this option deletes uncommitted files automatically during reads, but it might slow down read operations. Instead of enabling this flag, we recommend running the cleanup command manually when the cluster is idle.
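If you schedule CLEANUP from a notebook, building the statement text in one place helps avoid malformed SQL. The helpers below follow the two syntax forms above and spell out the default retention: seven days is 7 × 24 = 168 hours. The function names and the validation rules they apply are hypothetical, not part of Fabric, and the generated strings omit the trailing semicolon.

```python
import math
import re
from typing import Optional

# Default retention stated in the documentation: seven days, in hours.
DEFAULT_RETENTION_HOURS = 7 * 24  # 168

# Hypothetical validation for the [db_name.]table_name form.
_TABLE_NAME = re.compile(r"^(?:\w+\.)?\w+$")


def _format_hours(hours: float) -> str:
    """Render a retention value without trailing zeros: 168.0 -> '168', 1.5 -> '1.5'."""
    if not math.isfinite(hours) or hours < 0:
        raise ValueError("retention must be a finite, non-negative number of hours")
    return format(float(hours), "f").rstrip("0").rstrip(".")


def _with_retention(stmt: str, retain_hours: Optional[float]) -> str:
    # The RETAIN clause is optional; without it, the default retention applies.
    if retain_hours is None:
        return stmt
    return f"{stmt} RETAIN {_format_hours(retain_hours)} HOURS"


def cleanup_path_sql(path: str, retain_hours: Optional[float] = None) -> str:
    """Build CLEANUP ('/path/to/dir') [RETAIN number HOURS]."""
    if "'" in path:
        raise ValueError("path must not contain a single quote")
    return _with_retention(f"CLEANUP ('{path}')", retain_hours)


def cleanup_table_sql(table: str, retain_hours: Optional[float] = None) -> str:
    """Build CLEANUP [db_name.]table_name [RETAIN number HOURS]."""
    if not _TABLE_NAME.match(table):
        raise ValueError(f"not a [db_name.]table_name identifier: {table!r}")
    return _with_retention(f"CLEANUP {table}", retain_hours)
```

For example, `spark.sql(cleanup_table_sql("sales_db.orders", 48))` would run the cleanup with a 48-hour retention period against a hypothetical `sales_db.orders` table.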

Migration guide from Runtime 1.1 to Runtime 1.2

When migrating from Runtime 1.1, powered by Apache Spark 3.3, to Runtime 1.2, powered by Apache Spark 3.4, review the official migration guide.

New features and improvements of Delta Lake 2.4

Delta Lake is an open source project that enables building a lakehouse architecture on top of data lakes. Delta Lake provides ACID transactions, scalable metadata handling, and unifies streaming and batch data processing on top of existing data lakes.

Specifically, Delta Lake offers:

- ACID transactions on Spark: Serializable isolation levels ensure that readers never see inconsistent data.

- Scalable metadata handling: Uses Spark's distributed processing power to handle all the metadata for petabyte-scale tables with billions of files with ease.

- Streaming and batch unification: A table in Delta Lake is a batch table and also a streaming source and sink. Streaming data ingest, batch historical backfill, and interactive queries all work out of the box.

- Schema enforcement: Automatically handles schema variations to prevent insertion of bad records during ingestion.

- Time travel: Data versioning enables rollbacks, full historical audit trails, and reproducible machine learning experiments.

- Upserts and deletes: Supports merge, update, and delete operations to enable complex use cases like change-data-capture, slowly changing dimension (SCD) operations, streaming upserts, and so on.
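To make the upsert semantics concrete, here is a plain-Python model of a MERGE that applies a change feed, a list of hypothetical `(op, key, row)` tuples, to a table keyed by primary key: matched keys are updated or deleted, unmatched keys are inserted, and deletes for missing keys are ignored. It only illustrates the semantics. Delta Lake runs MERGE as a transactional Spark job, exposed in Python through `DeltaTable.merge` with builder methods such as `whenMatchedUpdateAll` and `whenNotMatchedInsertAll`.

```python
from typing import Dict, Iterable, Optional, Tuple

Row = Dict[str, object]
# Hypothetical change record: (op, key, new_row), where op is "upsert" or "delete".
Change = Tuple[str, int, Optional[Row]]


def merge_changes(target: Dict[int, Row], changes: Iterable[Change]) -> Dict[int, Row]:
    """Return a new table with the change feed applied; `target` is not modified.

    Changes are applied in order. A real MERGE expects at most one source row
    per target key, so a feed with repeated keys should be deduplicated first.
    """
    result = dict(target)
    for op, key, row in changes:
        if op == "delete":
            # WHEN MATCHED AND op = 'delete' THEN DELETE; unmatched deletes are no-ops.
            result.pop(key, None)
        elif op == "upsert":
            if row is None:
                raise ValueError(f"upsert for key {key!r} has no row")
            # WHEN MATCHED THEN UPDATE / WHEN NOT MATCHED THEN INSERT.
            result[key] = row
        else:
            raise ValueError(f"unknown operation: {op!r}")
    return result
```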

Read the full version of the release notes for Delta Lake 2.4.

Default-level packages for Java, Scala, and Python libraries

For a list of all default-level packages for Java, Scala, and Python, along with their versions, see the release notes.