Configure dataflows in Azure IoT Operations

Important

Azure IoT Operations Preview – enabled by Azure Arc is currently in preview. You shouldn't use this preview software in production environments.

You'll need to deploy a new Azure IoT Operations installation when the generally available release ships. You won't be able to upgrade a preview installation.

See the Supplemental Terms of Use for Microsoft Azure Previews for legal terms that apply to Azure features that are in beta, preview, or otherwise not yet released into general availability.

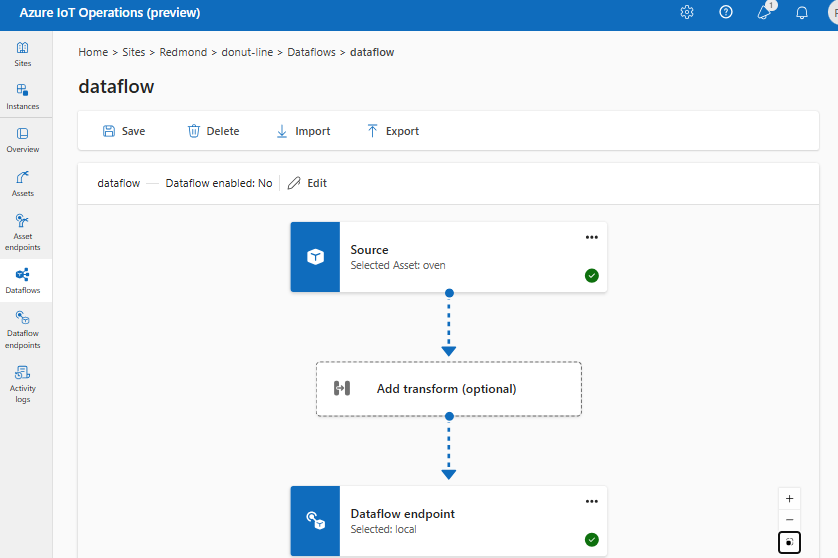

A dataflow is the path that data takes from the source to the destination, with optional transformations along the way. You can configure a dataflow by creating a Dataflow custom resource or by using the operations experience portal. A dataflow is made up of three parts: the source, the transformation, and the destination.

To define the source and destination, you need to configure the dataflow endpoints. The transformation is optional and can include operations like enriching the data, filtering the data, and mapping the data to another field.
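The three-part structure can be sketched as a plain Python dictionary shaped loosely like a Dataflow custom resource, with a check that it has exactly one source and one destination. The field names (`operationType`, `sourceSettings`, `endpointRef`, `dataSources`, `builtInTransformationSettings`, `destinationSettings`, `dataDestination`), the endpoint name `mq`, and the topics are assumptions modeled on the preview resource, and the "at most one transformation" rule is an assumption of this sketch; check the Dataflow API reference for your version before relying on any of them.

```python
# Sketch only: field names are assumptions modeled on the preview
# Dataflow custom resource, not a verified schema.

def make_dataflow(name, source_endpoint, data_sources, dest_endpoint,
                  data_destination, transformation=None):
    """Build a dict shaped like a Dataflow spec: source -> [transform] -> destination."""
    operations = [{
        "operationType": "Source",
        "sourceSettings": {"endpointRef": source_endpoint,
                           "dataSources": list(data_sources)},
    }]
    if transformation is not None:  # the transformation is optional
        operations.append({
            "operationType": "BuiltInTransformation",
            "builtInTransformationSettings": transformation,
        })
    operations.append({
        "operationType": "Destination",
        "destinationSettings": {"endpointRef": dest_endpoint,
                                "dataDestination": data_destination},
    })
    return {"metadata": {"name": name}, "spec": {"operations": operations}}


def validate(dataflow):
    """Return the operation types, raising if the structure is invalid."""
    kinds = [op["operationType"] for op in dataflow["spec"]["operations"]]
    if kinds.count("Source") != 1 or kinds.count("Destination") != 1:
        raise ValueError("a dataflow needs exactly one source and one destination")
    # Assumption of this sketch: at most one transformation operation.
    if kinds.count("BuiltInTransformation") > 1:
        raise ValueError("expected at most one transformation operation")
    return kinds
```

Because `transformation` defaults to `None`, a dataflow that only moves data between endpoints simply has no transformation entry, which matches the optional middle stage described above.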

This article shows you how to create a dataflow with an example, including the source, transformation, and destination.

Prerequisites

- An instance of Azure IoT Operations Preview

- A configured dataflow profile

- Dataflow endpoints. For example, create a dataflow endpoint for the local MQTT broker. You can use this endpoint for both the source and destination. Or, you can try other endpoints like Kafka, Event Hubs, or Azure Data Lake Storage. To learn how to configure each type of dataflow endpoint, see Configure dataflow endpoints.

Create dataflow

Once you have dataflow endpoints, you can use them to create a dataflow. Recall that a dataflow is made up of three parts: the source, the transformation, and the destination.

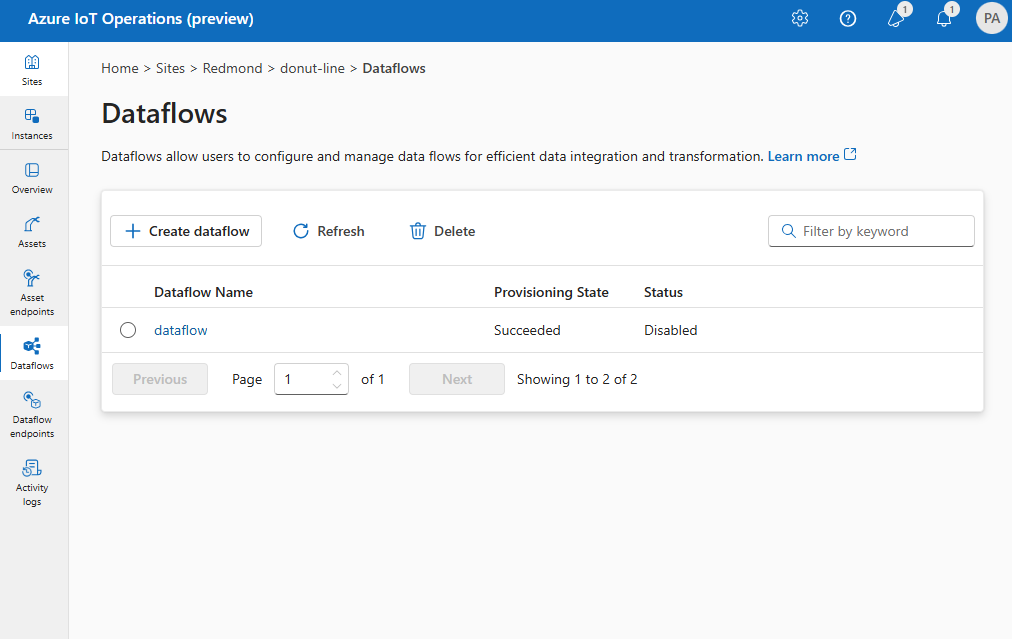

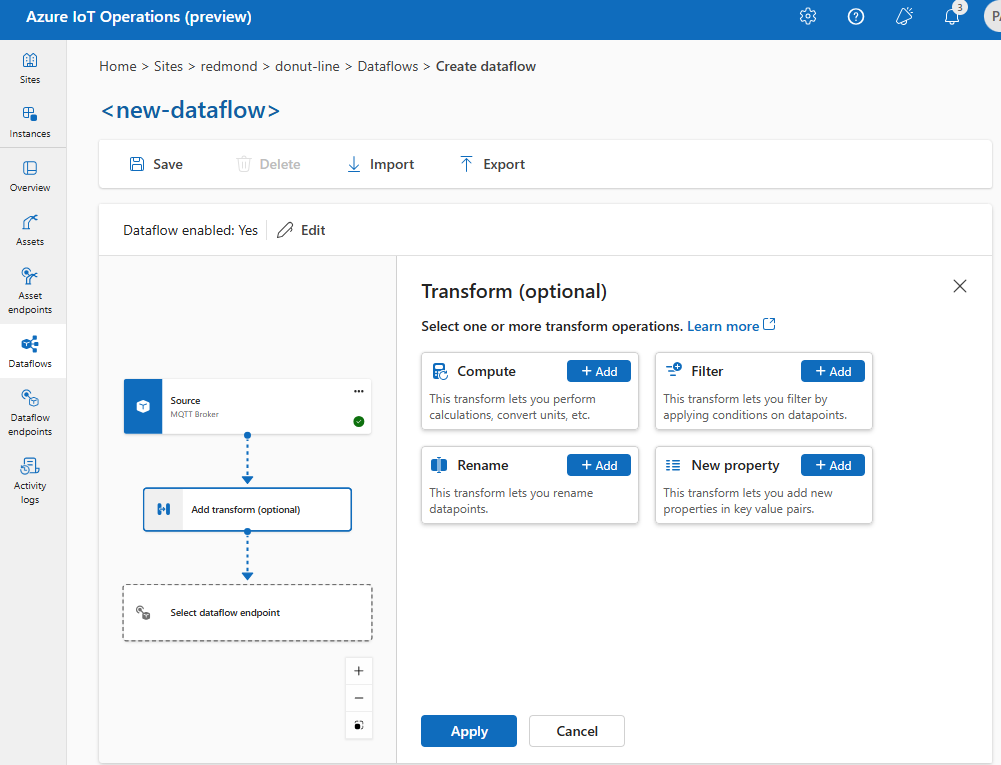

To create a dataflow in the operations experience portal, select Dataflow > Create dataflow.

Review the following sections to learn how to configure the operation types of the dataflow.

Configure a source with a dataflow endpoint to get data

To configure a source for the dataflow, specify the endpoint reference and data source. You can specify a list of data sources for the endpoint.
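Assuming the data sources for an MQTT endpoint are MQTT topic filters, a message is picked up when its topic matches any filter in the list. The following sketch illustrates standard MQTT wildcard matching (`+` for exactly one level, `#` for the remaining levels); it shows MQTT semantics, not the dataflow runtime's own code, and the filter strings in the usage are hypothetical.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic name matches a topic filter.

    '+' matches exactly one topic level; '#' must be the last level and
    matches all remaining levels, including none (so 'a/#' matches 'a').
    """
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    # Per the MQTT spec, filters that start with a wildcard don't match
    # topics beginning with '$' (for example, $SYS topics).
    if topic.startswith("$") and f_levels[0] in ("+", "#"):
        return False
    for i, level in enumerate(f_levels):
        if level == "#":
            return True  # matches whatever levels remain
        if i >= len(t_levels):
            return False  # filter has more levels than the topic
        if level != "+" and level != t_levels[i]:
            return False
    # Every filter level matched; the level counts must agree exactly.
    return len(f_levels) == len(t_levels)


def selected_by_source(data_sources: list[str], topic: str) -> bool:
    """True if any topic filter in the source's data-source list matches."""
    return any(topic_matches(f, topic) for f in data_sources)


# Usage with hypothetical data sources:
sources = ["thermostats/+/telemetry/temperature/#", "humidifiers/+/telemetry"]
print(selected_by_source(sources, "thermostats/t1/telemetry/temperature/c"))
```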

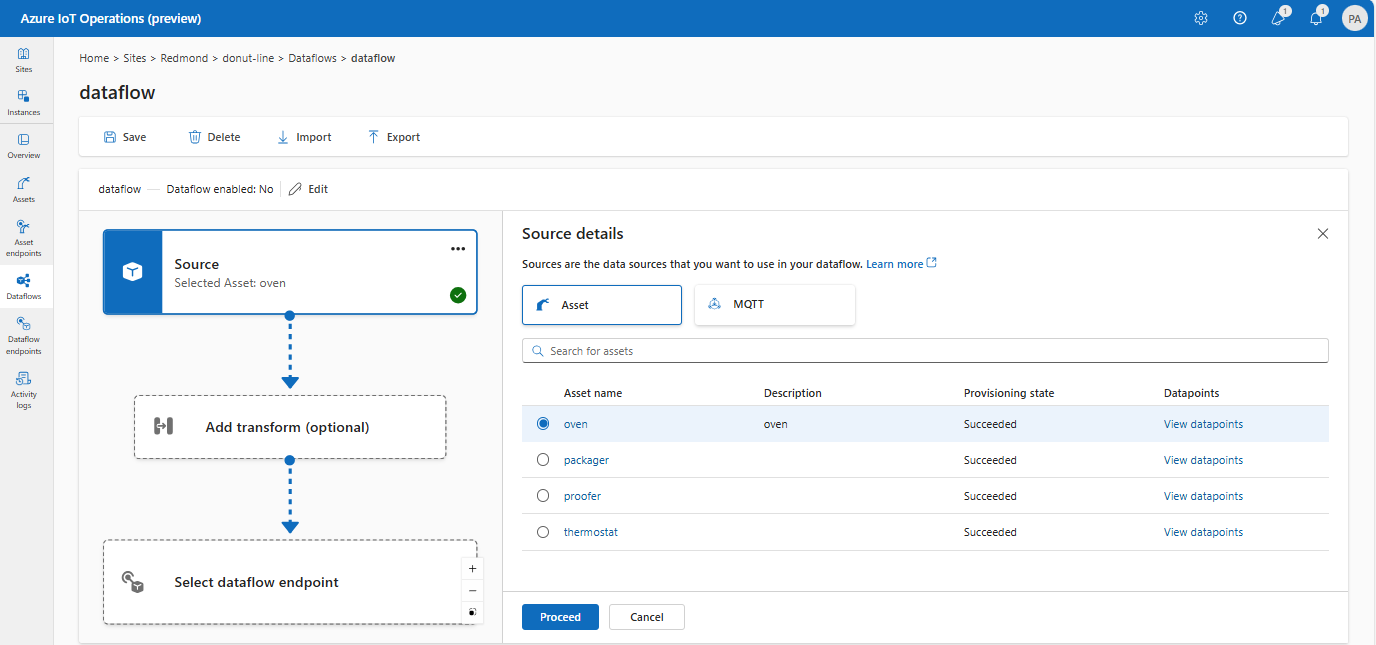

Use Asset as a source

You can use an asset as the source for the dataflow. This option is available only in the operations experience portal.

Under Source details, select Asset.

Select the asset you want to use as the source endpoint.

Select Proceed.

A list of datapoints for the selected asset is displayed.

Select Apply to use the asset as the source endpoint.

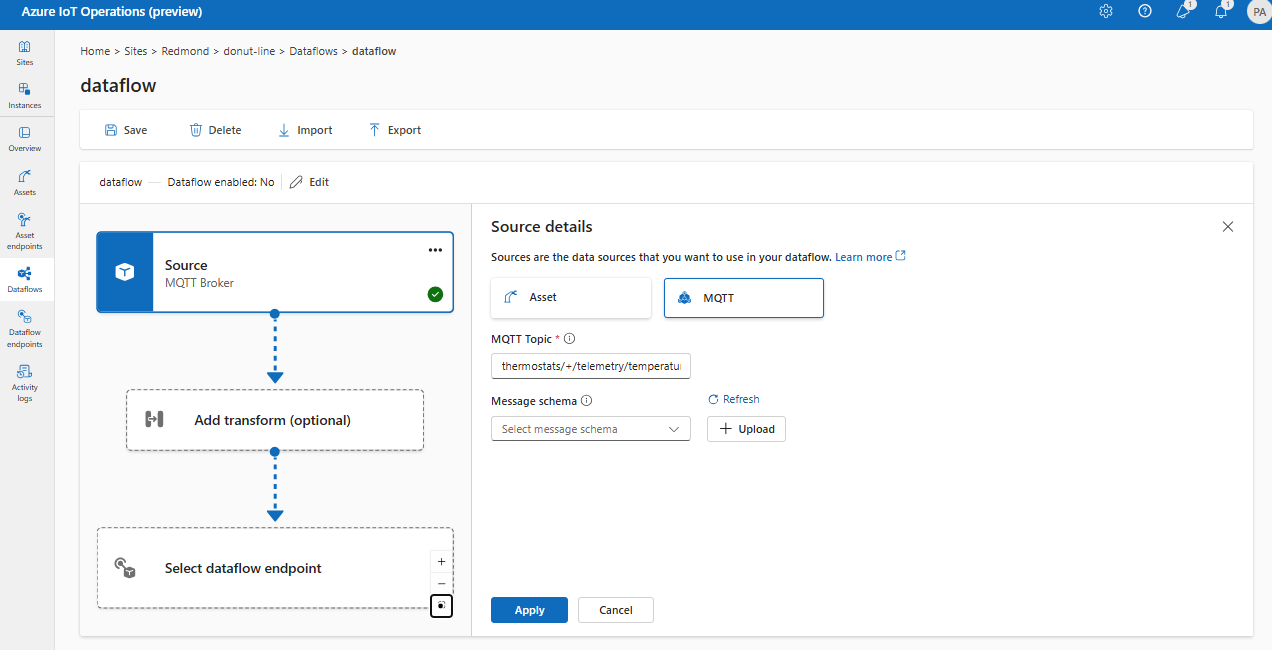

Use MQTT as a source

Under Source details, select MQTT.

Enter the MQTT Topic that you want to listen to for incoming messages.

Choose a Message schema from the dropdown list or upload a new schema. If the source data has optional fields or fields with different types, specify a deserialization schema to ensure consistency. For example, the data might have fields that aren't present in all messages. Without a schema, the transformation can't handle these fields because they would have empty values. With a schema, you can specify default values or ignore the fields.

Select Apply.
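The effect of a deserialization schema on inconsistent messages can be sketched in Python. The schema format here (field name mapped to an expected type and a default) is hypothetical and isn't the format the portal accepts; it only illustrates filling defaults for missing fields, coercing mismatched types, and ignoring fields the schema doesn't list.

```python
# Hypothetical schema format: field name -> (expected type, default value).
SCHEMA = {
    "deviceId": (str, "unknown"),
    "temperature": (float, 0.0),
    "humidity": (float, None),
}


def apply_schema(message: dict, schema: dict) -> dict:
    """Return a record containing every schema field.

    - Missing fields get the schema default.
    - Values of the wrong type are coerced when possible, else defaulted.
    - Fields that aren't in the schema are dropped (ignored).
    """
    record = {}
    for field, (expected_type, default) in schema.items():
        value = message.get(field, default)
        if value is not None and not isinstance(value, expected_type):
            try:
                value = expected_type(value)
            except (TypeError, ValueError):
                value = default
        record[field] = value
    return record


# A message with a string temperature, no humidity, and an extra field:
print(apply_schema({"deviceId": "t1", "temperature": "21.5", "extra": 1}, SCHEMA))
# {'deviceId': 't1', 'temperature': 21.5, 'humidity': None}
```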

Configure transformation to process data

The transformation operation is where you can transform the data from the source before you send it to the destination. Transformations are optional. If you don't need to make changes to the data, don't include the transformation operation in the dataflow configuration. Multiple transformations are chained together in stages, regardless of the order in which they're specified in the configuration. The stages always run in this order:

- Enrich: Add extra data to the source data, given a dataset and a condition to match.

- Filter: Filter the data based on a condition.

- Map: Move data from one field to another with an optional conversion.
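The fixed stage order can be illustrated with a short Python sketch that sorts configured stages into enrich, filter, map order before running them, so a map listed first in the configuration still runs last. The `(kind, function)` stage representation is hypothetical, and the sketch treats a filter as a keep-predicate (a message is dropped when it returns `False`); check the dataflow filter documentation for the service's exact semantics.

```python
# Fixed stage order; configuration order doesn't change it.
STAGE_ORDER = ("enrich", "filter", "map")


def order_stages(configured):
    """Return (kind, fn) stages sorted into enrich -> filter -> map order.

    sorted() is stable, so stages of the same kind keep their configured
    relative order (an assumption of this sketch).
    """
    return sorted(configured, key=lambda stage: STAGE_ORDER.index(stage[0]))


def run_stages(message, configured):
    """Apply stages in fixed order.

    Filters are treated as keep-predicates here: if one returns False,
    the message is dropped and None is returned.
    """
    for kind, fn in order_stages(configured):
        if kind == "filter":
            if not fn(message):
                return None
        else:
            message = fn(message)
    return message


# Stages listed out of order still run enrich -> filter -> map:
stages = [
    ("map", lambda m: {**m, "tempC": round((m["tempF"] - 32) * 5 / 9, 1)}),
    ("filter", lambda m: m.get("site") == "plant-1"),
    ("enrich", lambda m: {**m, "site": "plant-1"}),
]
print(run_stages({"tempF": 212}, stages))
# {'tempF': 212, 'site': 'plant-1', 'tempC': 100.0}
```

Because enrich always runs first, the filter can rely on the `site` field even though the enrich stage is listed last.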

In the operations experience portal, select Dataflow > Add transform (optional).

Enrich: Add reference data

To enrich the data, you can use the reference dataset in the Azure IoT Operations distributed state store (DSS). The dataset is used to add extra data to the source data based on a condition. The condition is specified as a field in the source data that matches a field in the dataset.

Key names in the distributed state store correspond to a dataset in the dataflow configuration.

Currently, the enrich operation isn't available in the operations experience portal.

You can load sample data into the DSS by using the DSS set tool sample.

For more information about condition syntax, see Enrich data by using dataflows and Convert data by using dataflows.
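The enrich condition described above (a source field matching a dataset field) can be sketched with an in-memory dictionary standing in for the distributed state store. The dataset key `assetDataset`, its records, and the field names are hypothetical; the real DSS is a key-value store accessed by the dataflow runtime, not a Python dict.

```python
# In-memory stand-in for the distributed state store (DSS).
# The key "assetDataset" and its records are hypothetical.
STATE_STORE = {
    "assetDataset": [
        {"asset": "thermostat-1", "location": "Building 43", "maxTemp": 80},
        {"asset": "thermostat-2", "location": "Building 44", "maxTemp": 75},
    ]
}


def enrich(message, dataset_key, source_field, dataset_field, store=None):
    """Merge in the first dataset record whose dataset_field equals
    message[source_field]. Messages with no match pass through unchanged.
    On a key collision, the dataset value wins in this sketch."""
    store = STATE_STORE if store is None else store
    for record in store.get(dataset_key, []):
        if record.get(dataset_field) == message.get(source_field):
            extra = {k: v for k, v in record.items() if k != dataset_field}
            return {**message, **extra}
    return message


msg = {"deviceId": "thermostat-1", "temperature": 72}
print(enrich(msg, "assetDataset", "deviceId", "asset"))
# {'deviceId': 'thermostat-1', 'temperature': 72, 'location': 'Building 43', 'maxTemp': 80}
```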

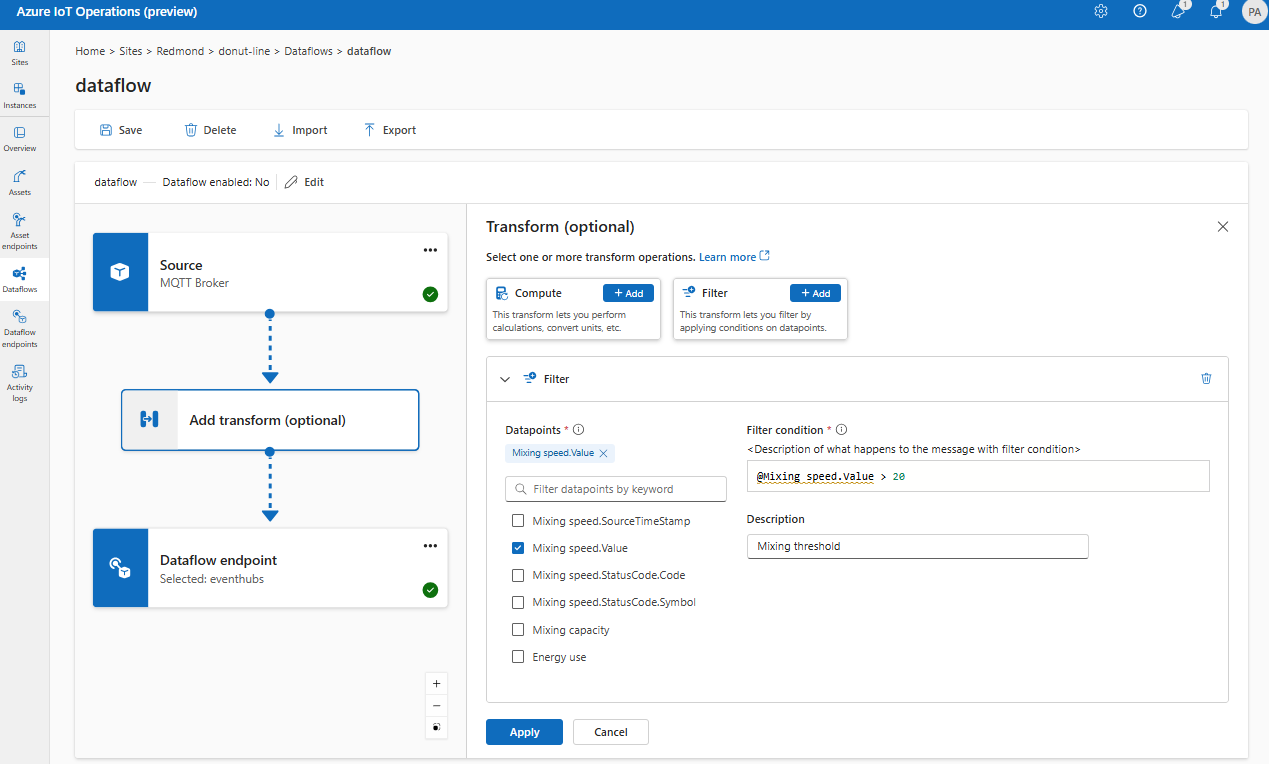

Filter: Filter data based on a condition

To filter the data based on a condition, you can use the filter stage. The condition is specified as a field in the source data that matches a value.

Under Transform (optional), select Filter > Add.

Choose the datapoints to include in the dataset.

Add a filter condition and description.

Select Apply.
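A filter condition that compares a field in the source data with a value can be sketched as a predicate in Python. The `make_filter` and `apply_filter` helpers and the operator table are hypothetical; this sketch keeps messages for which the condition holds and treats messages missing the field as not matching, which may differ from the service's exact behavior.

```python
import operator

# Comparison operators a hypothetical condition can use.
OPS = {">": operator.gt, ">=": operator.ge, "<": operator.lt,
       "<=": operator.le, "==": operator.eq, "!=": operator.ne}


def make_filter(field, op, value):
    """Build a predicate: True when message[field] <op> value holds.
    Messages that don't contain the field are treated as not matching."""
    compare = OPS[op]

    def keep(message):
        return field in message and compare(message[field], value)

    return keep


def apply_filter(messages, keep):
    """Return only the messages for which the predicate is True."""
    return [m for m in messages if keep(m)]


hot = make_filter("temperature", ">", 100)
print(apply_filter([{"temperature": 105}, {"temperature": 90}, {"humidity": 40}], hot))
# [{'temperature': 105}]
```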

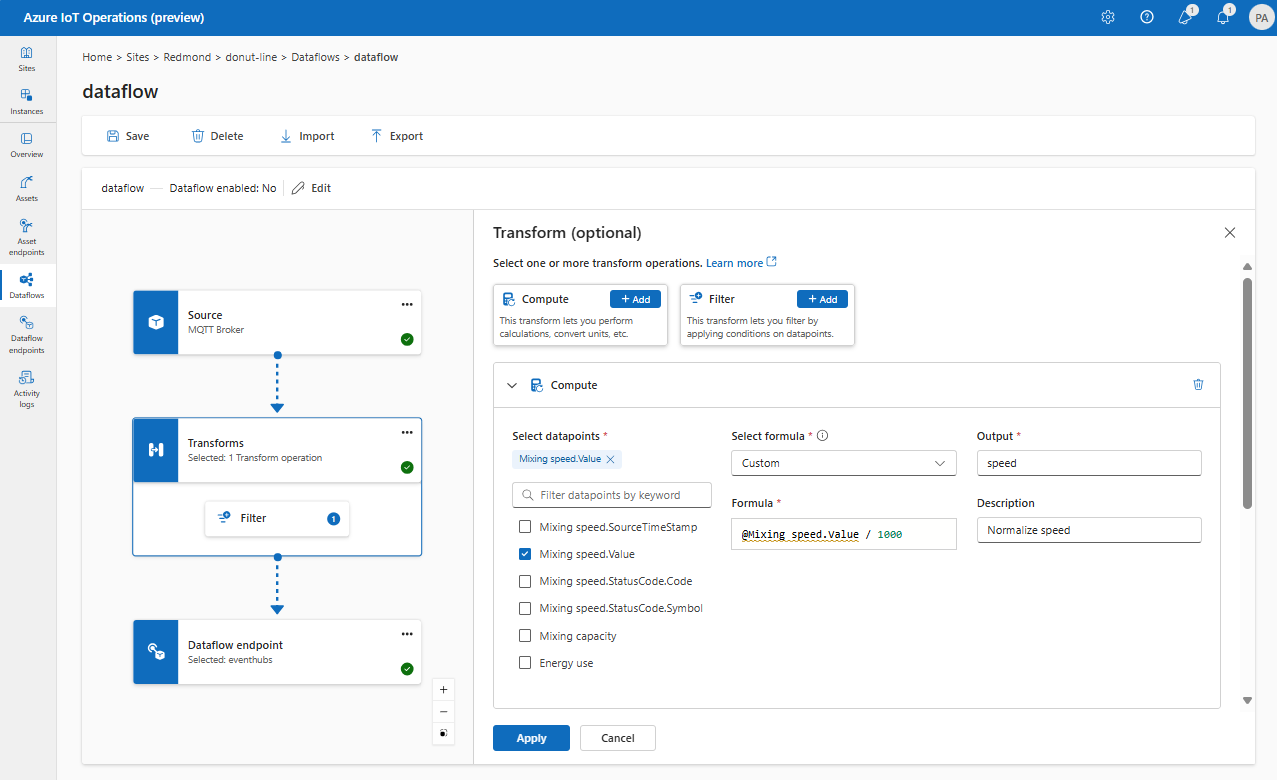

Map: Move data from one field to another

To map the data to another field with optional conversion, you can use the map operation. The conversion is specified as a formula that uses the fields in the source data.
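A mapping with an optional conversion formula can be sketched in Python as a function that reads named input fields, applies a formula, and writes the result to an output field. The helper names, the positional calling convention for the formula, and the field names are hypothetical; in this sketch only mapped fields reach the output record.

```python
def make_mapping(output_field, input_fields, formula):
    """Return a mapping that computes output_field from input_fields.

    formula receives the input values positionally, in input_fields order
    (a convention assumed for this sketch).
    """
    def apply(source, target):
        args = [source[name] for name in input_fields]
        target[output_field] = formula(*args)
        return target

    return apply


def run_mappings(source, mappings):
    """Build a new record by applying each mapping to the source record."""
    target = {}
    for mapping in mappings:
        mapping(source, target)
    return target


mappings = [
    # Conversion: Fahrenheit in the source, Celsius in the output.
    make_mapping("Temperature.Celsius", ["temperature"], lambda f: (f - 32) * 5 / 9),
    # Plain move: copy the value to a differently named field.
    make_mapping("Device", ["deviceId"], lambda v: v),
]
print(run_mappings({"deviceId": "t1", "temperature": 212}, mappings))
# {'Temperature.Celsius': 100.0, 'Device': 't1'}
```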

In the operations experience portal, mapping is currently supported through Compute transforms.

Under Transform (optional), select Compute > Add.

Enter the required fields and expressions.

Select Apply.

To learn more, see Map data by using dataflows and Convert data by using dataflows.

Serialize data according to a schema

To control how the data is serialized before it's sent to the destination, specify a schema and a serialization format. Otherwise, the data is serialized as JSON with inferred types. Storage endpoints like Microsoft Fabric or Azure Data Lake Storage require a schema to ensure data consistency.

Specify the Output schema when you add the destination dataflow endpoint.

Supported serialization formats are JSON, Parquet, and Delta.
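To see why storage endpoints need an explicit schema, the following Python sketch infers types from individual records and serializes them as JSON. The type labels are hypothetical and aren't a real schema language; the point is that types inferred from single messages can disagree (an integer reading in one message, a decimal in the next), which a declared schema avoids.

```python
import json

# Hypothetical type labels for illustration; not a real schema language.
TYPE_NAMES = {bool: "boolean", int: "integer", float: "number",
              str: "string", dict: "object", list: "array",
              type(None): "null"}


def infer_schema(record):
    """Infer a field -> type-label mapping from a single record."""
    return {field: TYPE_NAMES.get(type(value), "unknown")
            for field, value in record.items()}


def serialize_json(record):
    """Serialize a record as JSON (compact separators are this sketch's choice)."""
    return json.dumps(record, separators=(",", ":"))


# Two messages from the same device can infer different types:
print(infer_schema({"temperature": 21}))    # {'temperature': 'integer'}
print(infer_schema({"temperature": 21.5}))  # {'temperature': 'number'}
print(serialize_json({"deviceId": "t1", "temperature": 21.5}))
```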

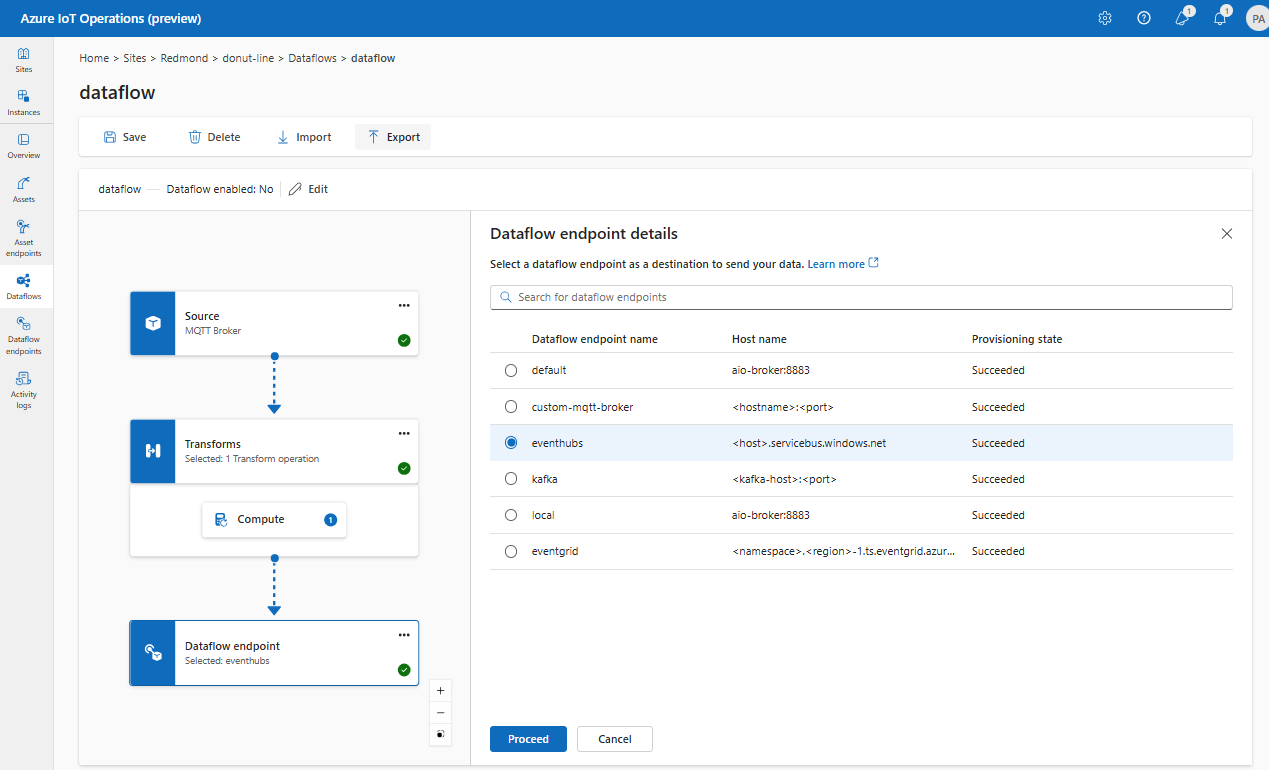

Configure destination with a dataflow endpoint to send data

To configure a destination for the dataflow, specify the endpoint reference and data destination. You can specify a list of data destinations for the endpoint; for MQTT and Kafka endpoints, these are topics.
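Because MQTT and Kafka destinations are topics that the dataflow publishes to, a quick pre-check of topic names can catch mistakes early. The sketch below applies the general MQTT rule that topic names used for publishing can't contain the `+` or `#` wildcards (or the null character), and Kafka's rule that topic names are 1 to 249 characters from `[A-Za-z0-9._-]` and can't be `.` or `..`. These helpers are hypothetical and aren't a validation the dataflow service is documented to perform.

```python
import re

# Kafka topic names: 1-249 characters from this set.
KAFKA_TOPIC = re.compile(r"[A-Za-z0-9._-]{1,249}")


def valid_mqtt_topic_name(name: str) -> bool:
    """MQTT topic names used for publishing can't be empty and can't
    contain the '+' or '#' wildcards or the null character."""
    return bool(name) and not any(c in name for c in "+#\x00")


def valid_kafka_topic_name(name: str) -> bool:
    """Kafka topic names: 1-249 chars from [A-Za-z0-9._-], not '.' or '..'."""
    return bool(KAFKA_TOPIC.fullmatch(name)) and name not in (".", "..")


print(valid_mqtt_topic_name("factory/processed"))   # True
print(valid_mqtt_topic_name("factory/#"))           # False
print(valid_kafka_topic_name("factory-processed"))  # True
```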

Select the dataflow endpoint to use as the destination.

Select Proceed to configure the destination.

Add the mapping details based on the type of destination.

Verify a dataflow is working

To verify that the dataflow is working, follow Tutorial: Bi-directional MQTT bridge to Azure Event Grid.

Export dataflow configuration

To export the dataflow configuration, you can use the operations experience portal or export the Dataflow custom resource.

In the operations experience portal, select the dataflow you want to export, and then select Export from the toolbar.
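To export the Dataflow custom resource instead, one option is `kubectl get ... -o yaml`. The following Python sketch builds that command and writes its output to a file. It assumes kubectl is installed with access to the cluster, that the resource can be addressed as `dataflow`, and that it lives in the `azure-iot-operations` namespace; adjust these for your deployment.

```python
import shlex
import subprocess


def export_command(name, namespace="azure-iot-operations"):
    """Build a kubectl command that prints a Dataflow resource as YAML.
    The resource name 'dataflow' and default namespace are assumptions."""
    return ["kubectl", "get", "dataflow", name, "-n", namespace, "-o", "yaml"]


def export_dataflow(name, path, namespace="azure-iot-operations"):
    """Run the command and save the YAML (requires kubectl and cluster access)."""
    result = subprocess.run(export_command(name, namespace),
                            capture_output=True, text=True, check=True)
    with open(path, "w", encoding="utf-8") as f:
        f.write(result.stdout)


# Show the command that would run for a dataflow named my-dataflow:
print(shlex.join(export_command("my-dataflow")))
# kubectl get dataflow my-dataflow -n azure-iot-operations -o yaml
```

Output from `kubectl get` typically includes cluster-populated fields such as `status` and `metadata.resourceVersion`, which you might remove before reapplying the file to another cluster.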