Tutorial: Use a notebook with Apache Spark to query a KQL database

Notebooks are both readable documents that contain data analysis descriptions and results, and executable documents that you can run to perform data analysis. In this article, you learn how to use a Microsoft Fabric notebook to read data from and write data to a KQL database using Apache Spark. This tutorial uses precreated datasets and notebooks in both the Real-Time Intelligence and the Data Engineering environments in Microsoft Fabric. For more information on notebooks, see How to use Microsoft Fabric notebooks.

Specifically, you learn how to:

- Create a KQL database

- Import a notebook

- Write data to a KQL database using Apache Spark

- Query data from a KQL database

Prerequisites

1- Create a KQL database

Select your workspace from the left navigation bar.

Follow one of these steps to create a KQL database:

- Select New item and then Eventhouse. In the Eventhouse name field, enter nycGreenTaxi, then select Create. A KQL database is generated with the same name.

- In an existing eventhouse, select Databases. Under KQL databases, select +. In the KQL Database name field, enter nycGreenTaxi, then select Create.

Copy the Query URI from the database details card in the database dashboard and paste it somewhere, like a notepad, to use in a later step.

2- Download the NYC GreenTaxi notebook

We've created a sample notebook that takes you through all the necessary steps for loading data into your database using the Spark connector.

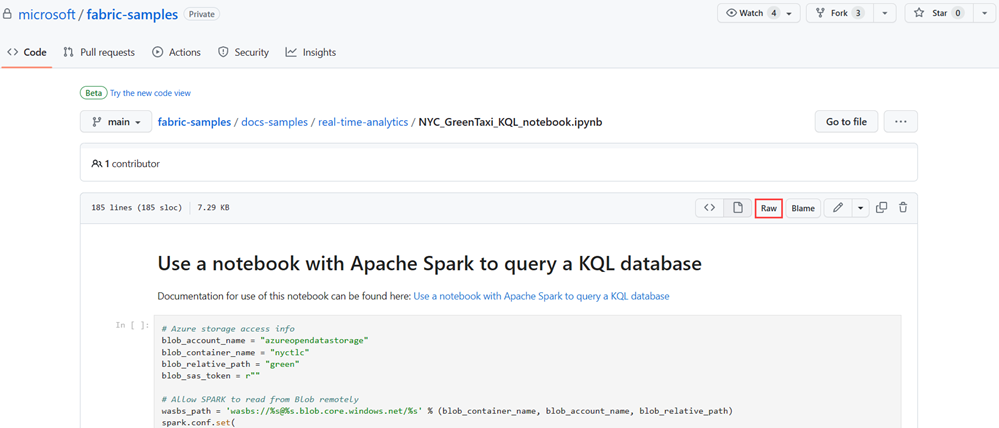

Open the Fabric samples repository on GitHub to download the NYC GreenTaxi KQL notebook.

Save the notebook locally to your device.

Note

The notebook must be saved in the .ipynb file format.

3- Import the notebook

The rest of this workflow occurs in the Data Engineering section of the product, and uses a Spark notebook to load and query data in your KQL database.

From your workspace, select Import > Notebook > From this computer > Upload, then choose the NYC GreenTaxi notebook you downloaded in a previous step.

Once the import is complete, open the notebook from your workspace.

4- Get data

To query your database using the Spark connector, you need to give read and write access to the NYC GreenTaxi blob container.

Select the play button to run the following cells, or select the cell and press Shift+Enter. Repeat this step for each code cell.

Note

Wait for the completion check mark to appear before running the next cell.

Run the following cell to enable access to the NYC GreenTaxi blob container.

In KustoURI, paste the Query URI that you copied earlier instead of the placeholder text.

Change the placeholder database name to nycGreenTaxi.

Change the placeholder table name to GreenTaxiData.

Run the cell.

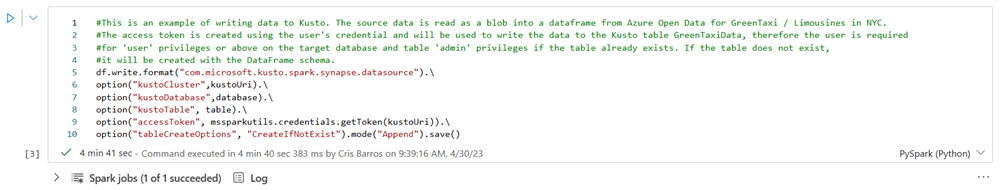
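The configuration step above can be sketched as a small helper that catches leftover placeholder text before anything is sent to the database. This is only an illustration, not the code in the sample notebook: the function name `build_kusto_config` and the example URI are hypothetical, and the keys `kustoCluster`, `kustoDatabase`, and `kustoTable` are assumed to match the option names the Kusto Spark connector expects.

```python
from urllib.parse import urlparse


def build_kusto_config(kusto_uri: str, database: str, table: str) -> dict:
    """Validate the three notebook values and return them as a dict.

    Hypothetical helper: the dict keys are assumed to be the option names
    used by the Kusto Spark connector.
    """
    values = {
        "kustoCluster": kusto_uri.strip().rstrip("/"),
        "kustoDatabase": database.strip(),
        "kustoTable": table.strip(),
    }
    for name, value in values.items():
        # Reject empty values and leftover "<placeholder>" text.
        if not value or "<" in value or ">" in value:
            raise ValueError(f"{name} still looks like a placeholder: {value!r}")
    parsed = urlparse(values["kustoCluster"])
    if parsed.scheme != "https" or not parsed.netloc:
        raise ValueError("The Query URI should be a full https:// address")
    return values


# Example with a made-up Query URI; paste the one copied from your database.
config = build_kusto_config(
    "https://example-cluster.kusto.fabric.microsoft.com",
    "nycGreenTaxi",
    "GreenTaxiData",
)
```

Checking the values up front is a design choice: a typo in the URI or an unreplaced placeholder otherwise surfaces only when the write cell runs, which can take several minutes.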

Run the next cell to write data to your database. It can take a few minutes for this step to complete.

Your database now has data loaded in a table named GreenTaxiData.
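For readers who want to see roughly what the write cell does, the following is a minimal sketch of a Spark write through the Kusto connector. It is an assumption-laden illustration rather than the notebook's exact code: `write_to_kusto` is a hypothetical helper, the format string and option names are assumed to be those of the Kusto Spark connector available in Fabric, and the access token is taken as a parameter (in a Fabric notebook it is typically obtained from the notebook utilities, for example `mssparkutils.credentials.getToken(kusto_uri)`, which is also an assumption here).

```python
# Assumed data source name of the Kusto Spark connector in Fabric.
KUSTO_FORMAT = "com.microsoft.kusto.spark.synapse.datasource"


def write_to_kusto(df, kusto_uri, database, table, access_token):
    """Append a Spark DataFrame to a KQL database table (hypothetical helper).

    `df` is any object exposing the PySpark DataFrameWriter interface
    through its `write` attribute.
    """
    (
        df.write.format(KUSTO_FORMAT)
        .option("kustoCluster", kusto_uri)
        .option("kustoDatabase", database)
        .option("kustoTable", table)
        .option("accessToken", access_token)
        # Assumed connector option: create the table if it is missing.
        .option("tableCreateOptions", "CreateIfNotExist")
        .mode("Append")
        .save()
    )
```

In the notebook, a call might look like `write_to_kusto(df, kustoUri, "nycGreenTaxi", "GreenTaxiData", accessToken)`, where `df` holds the taxi data read from the blob container.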

5- Run the notebook

Run the remaining two cells sequentially to query data from your table. The results show the top 20 highest and lowest taxi fares and distances recorded by year.
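As a rough illustration of the query step, the sketch below builds a KQL query string and reads its result back into Spark. Everything named here is an assumption rather than the notebook's actual content: the helpers `fare_summary_query` and `read_from_kusto` are hypothetical, the column names `fareAmount`, `tripDistance`, and `puYear` are assumed to exist in the GreenTaxiData table, and the format string and option names are assumed to be those of the Kusto Spark connector.

```python
# Assumed data source name of the Kusto Spark connector in Fabric.
KUSTO_FORMAT = "com.microsoft.kusto.spark.synapse.datasource"


def fare_summary_query(table, top_n=20):
    """Build a KQL query for yearly fare and distance extremes.

    The column names are assumptions about the table schema.
    """
    return (
        f"{table} "
        "| summarize max(fareAmount), min(fareAmount), "
        "max(tripDistance), min(tripDistance) by puYear "
        f"| top {top_n} by puYear desc"
    )


def read_from_kusto(spark, kusto_uri, database, query, access_token):
    """Run a KQL query and return the result as a Spark DataFrame."""
    return (
        spark.read.format(KUSTO_FORMAT)
        .option("kustoCluster", kusto_uri)
        .option("kustoDatabase", database)
        .option("kustoQuery", query)
        .option("accessToken", access_token)
        .load()
    )
```

In a notebook cell, the result could then be displayed with something like `read_from_kusto(spark, kustoUri, "nycGreenTaxi", fare_summary_query("GreenTaxiData"), accessToken).show()`. Pushing the aggregation into the KQL query means only the summarized rows travel back to Spark, instead of the full table.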

6- Clean up resources

Clean up the items created by navigating to the workspace in which they were created.

In your workspace, hover over the notebook you want to delete, then select the More menu [...] > Delete.

Select Delete. You can't recover your notebook once you delete it.