
Applies to:

SQL Server

SSIS Integration Runtime in Azure Data Factory

SQL Server Integration Services (SSIS) Feature Pack for Azure is an extension that provides the components listed on this page for SSIS to connect to Azure services, transfer data between Azure and on-premises data sources, and process data stored in Azure.

The download pages also include information about prerequisites. Make sure you install SQL Server before you install the Azure Feature Pack on a server, or the components in the Feature Pack may not be available when you deploy packages to the SSIS Catalog database, SSISDB, on the server.

Connection Managers

Tasks

Data Flow Components

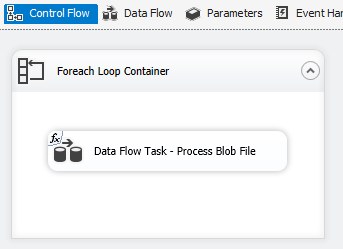

Azure Blob, Azure Data Lake Store, and Data Lake Storage Gen2 File Enumerator. See Foreach Loop Container

The TLS version used by Azure Feature Pack follows system .NET Framework settings.

To use TLS 1.2, add a REG_DWORD value named SchUseStrongCrypto with data 1 under the following two registry keys.

HKEY_LOCAL_MACHINE\SOFTWARE\WOW6432Node\Microsoft\.NETFramework\v4.0.30319

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\.NETFramework\v4.0.30319

Java is required to use ORC/Parquet file formats with Azure Data Lake Store/Flexible File connectors.
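Returning to the TLS 1.2 registry setting described above, the change could be scripted roughly as follows. This is a minimal sketch, not an official procedure: it assumes an elevated (administrator) PowerShell session on Windows and that both .NET Framework keys already exist. The variable name `$keys` is only illustrative.

```powershell
# Assumption: run from an elevated PowerShell session on Windows.
# The two .NET Framework keys named in the text (32-bit view and 64-bit view).
$keys = @(
    'HKLM:\SOFTWARE\WOW6432Node\Microsoft\.NETFramework\v4.0.30319',
    'HKLM:\SOFTWARE\Microsoft\.NETFramework\v4.0.30319'
)

foreach ($key in $keys) {
    # Create (or overwrite) the REG_DWORD value SchUseStrongCrypto with data 1.
    New-ItemProperty -Path $key -Name 'SchUseStrongCrypto' -Value 1 -PropertyType DWord -Force | Out-Null
}

# Read the value back from both keys to confirm the change.
foreach ($key in $keys) {
    Get-ItemProperty -Path $key -Name 'SchUseStrongCrypto' |
        Select-Object PSPath, SchUseStrongCrypto
}
```

Processes that are already running keep their previous settings, so restart the process that hosts the SSIS runtime after making the change.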

The architecture (32-bit or 64-bit) of the Java build should match that of the SSIS runtime you use.

The following Java builds have been tested.

Run sysdm.cpl.

Enter JAVA_HOME for the Variable name.

Select the jre subfolder. Then select OK, and the Variable value is populated automatically.

Tip

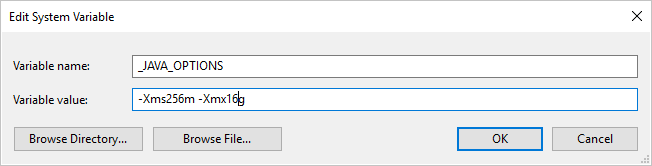

If you use the Parquet format and hit an error saying "An error occurred when invoking java, message: java.lang.OutOfMemoryError:Java heap space", you can add an environment variable _JAVA_OPTIONS to adjust the min/max heap size for the JVM.

Example: set the variable _JAVA_OPTIONS to the value -Xms256m -Xmx16g. The flag Xms specifies the initial memory allocation pool for a Java Virtual Machine (JVM), while Xmx specifies the maximum memory allocation pool. This means that the JVM starts with Xms amount of memory and can use at most Xmx amount of memory. The default values are min 64 MB and max 1 GB.
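One way to apply and check these heap settings from PowerShell is sketched below. It assumes an elevated session on Windows and that JAVA_HOME already points at the Java folder configured earlier. The variable `$javaOptions` is only illustrative, and the heap sizes are the example values from the text, not a recommendation.

```powershell
# Example heap settings from the text: 256 MB initial, 16 GB maximum.
$javaOptions = '-Xms256m -Xmx16g'

# Persist the variable machine-wide (assumption: elevated session on Windows).
[Environment]::SetEnvironmentVariable('_JAVA_OPTIONS', $javaOptions, 'Machine')

# Processes that are already running keep their old environment, so also set
# the variable in the current session before checking it.
$env:_JAVA_OPTIONS = $javaOptions

# When the JVM reads the variable it reports "Picked up _JAVA_OPTIONS: ..."
# at startup. The check is skipped if JAVA_HOME is not set.
if ($env:JAVA_HOME) {
    & (Join-Path $env:JAVA_HOME 'bin\java.exe') -version
}
```

Only processes started after the change see the new machine-wide value, so restart the process that hosts the SSIS runtime before rerunning the package.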

On an Azure-SSIS Integration Runtime, Java should be set up via the custom setup interface.

Suppose zulu8.33.0.1-jdk8.0.192-win_x64.zip is used.

The blob container could be organized as follows.

main.cmd

install_openjdk.ps1

zulu8.33.0.1-jdk8.0.192-win_x64.zip

As the entry point, main.cmd triggers execution of the PowerShell script install_openjdk.ps1 which in turn extracts zulu8.33.0.1-jdk8.0.192-win_x64.zip and sets JAVA_HOME accordingly.

main.cmd

powershell.exe -file install_openjdk.ps1

Tip

If you use the Parquet format and hit an error saying "An error occurred when invoking java, message: java.lang.OutOfMemoryError:Java heap space", you can add a command in main.cmd to adjust the min/max heap size for the JVM. Example:

setx /M _JAVA_OPTIONS "-Xms256m -Xmx16g"

The flag Xms specifies the initial memory allocation pool for a Java Virtual Machine (JVM), while Xmx specifies the maximum memory allocation pool. This means that the JVM starts with Xms amount of memory and can use at most Xmx amount of memory. The default values are min 64 MB and max 1 GB.

install_openjdk.ps1

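# Extract the OpenJDK archive to the root of drive C.
Expand-Archive zulu8.33.0.1-jdk8.0.192-win_x64.zip -DestinationPath C:\

# Point the machine-wide JAVA_HOME variable at the extracted jre subfolder.
[Environment]::SetEnvironmentVariable("JAVA_HOME", "C:\zulu8.33.0.1-jdk8.0.192-win_x64\jre", "Machine")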

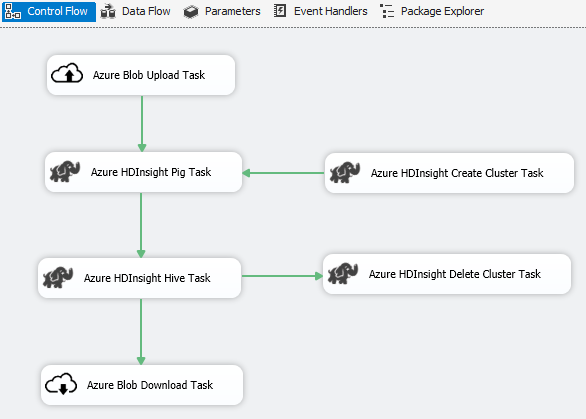

Use the Azure connectors to complete the following big data processing work:

1. Use the Azure Blob Upload Task to upload input data to Azure Blob Storage.

2. Use the Azure HDInsight Create Cluster Task to create an Azure HDInsight cluster. This step is optional if you want to use your own cluster.

3. Use the Azure HDInsight Hive Task or Azure HDInsight Pig Task to invoke a Hive or Pig job on the Azure HDInsight cluster.

4. Use the Azure HDInsight Delete Cluster Task to delete the HDInsight cluster after use if you created an on-demand HDInsight cluster in step 2.

5. Use the Azure Blob Download Task to download the Pig/Hive output data from Azure Blob Storage.

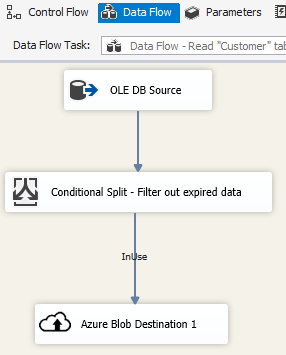

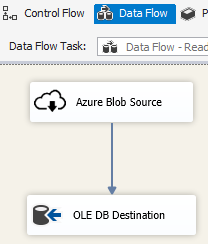

Use the Azure Blob Destination in an SSIS package to write output data to Azure Blob Storage, or use the Azure Blob Source to read data from Azure Blob Storage.

Use the Foreach Loop Container with the Azure Blob Enumerator to process data in multiple blob files.

This is a hotfix version released for SQL Server 2019 only.

Attempted to access an element as a type incompatible with the array.

Microsoft.DataTransfer.Common.Shared.HybridDeliveryException: An unknown error occurred. JNI.JavaExceptionCheckException.
