Integrations for Semantic Kernel

Semantic Kernel provides a wide range of integrations to help you build powerful AI agents. These integrations include AI services and memory connectors. In addition, Semantic Kernel integrates with other Microsoft services through plugins.

Out-of-the-box integrations

With the available AI and memory connectors, you can build AI agents from swappable components. This lets you experiment with different AI services and memory connectors to find the best combination for your use case.
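The idea of swappable components can be illustrated with a minimal Python sketch. It deliberately does not use the Semantic Kernel API: `ChatService`, `EchoChat`, `UpperChat`, `Agent`, and `compare_services` are hypothetical names invented for this illustration, and the two "services" are trivial stand-ins rather than real models. The point is only that an agent written against an interface can run unchanged against different back ends.

```python
from typing import Protocol


class ChatService(Protocol):
    """Hypothetical interface for illustration; not a Semantic Kernel type."""

    def complete(self, prompt: str) -> str: ...


class EchoChat:
    """Stand-in for one AI service: returns the prompt unchanged."""

    def complete(self, prompt: str) -> str:
        return prompt


class UpperChat:
    """Stand-in for a second AI service: returns the prompt upper-cased."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


class Agent:
    """Depends only on the interface, so the service can be swapped freely."""

    def __init__(self, chat: ChatService) -> None:
        self.chat = chat

    def ask(self, prompt: str) -> str:
        return self.chat.complete(prompt)


def compare_services(prompt: str, services: dict[str, ChatService]) -> dict[str, str]:
    """Run the same prompt through each candidate service and collect the replies."""
    return {name: Agent(service).ask(prompt) for name, service in services.items()}
```

Calling `compare_services("hi", {"echo": EchoChat(), "upper": UpperChat()})` runs the same agent code against both stand-ins, which is the kind of side-by-side experiment the connectors are meant to make easy.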

AI Services

| Service | C# | Python | Java | Notes |
|---|---|---|---|---|
| Text Generation | ✅ | ✅ | ✅ | Example: text-davinci-003 |
| Chat Completion | ✅ | ✅ | ✅ | Example: GPT-4, ChatGPT |
| Text Embeddings (Experimental) | ✅ | ✅ | ✅ | Example: text-embedding-ada-002 |
| Text to Image (Experimental) | ✅ | ❌ | ❌ | Example: DALL-E |
| Image to Text (Experimental) | ✅ | ❌ | ❌ | Example: Pix2Struct |
| Text to Audio (Experimental) | ✅ | ❌ | ❌ | Example: Text-to-speech |
| Audio to Text (Experimental) | ✅ | ❌ | ❌ | Example: Whisper |

Memory Connectors (Experimental)

Vector databases have many use cases across different domains and applications that involve natural language processing (NLP), computer vision (CV), recommendation systems (RS), and other areas that require semantic understanding and matching of data.

One use case for storing information in a vector database is to enable large language models (LLMs) to generate more relevant and coherent text based on an AI plugin.

However, large language models often face challenges such as generating inaccurate or irrelevant information, lacking factual consistency or common sense, repeating or contradicting themselves, and producing biased or offensive output. To overcome these challenges, you can use a vector database to store information about topics, keywords, facts, opinions, and sources related to your domain or genre. You can then pass information from the vector database to a large language model through your AI plugin to generate more informative and engaging content that matches your intent and style.

For example, if you want to write a blog post about the latest trends in AI, you can store the latest information about that topic in a vector database and pass it, together with your request, to an LLM so that the generated blog post draws on that information.
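The retrieval pattern described above can be sketched in a few lines of Python. This is a toy under stated assumptions, not a Semantic Kernel connector: `embed`, `cosine`, `InMemoryVectorStore`, and `build_prompt` are hypothetical names, the "embedding" is a plain word count rather than the output of an embedding model, and the store is an in-memory list rather than a real vector database. It shows only the shape of the flow: store text as vectors, find the entries closest to the request, and pass them to the model along with the request.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database connector."""

    def __init__(self) -> None:
        self._records: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        """Store the text together with its vector."""
        self._records.append((text, embed(text)))

    def search(self, query: str, top_k: int = 1) -> list[str]:
        """Return the top_k stored texts most similar to the query."""
        q = embed(query)
        ranked = sorted(self._records, key=lambda r: cosine(q, r[1]), reverse=True)
        return [text for text, _ in ranked[:top_k]]


def build_prompt(store: InMemoryVectorStore, ask: str, top_k: int = 1) -> str:
    """Prepend retrieved context to the request before it is sent to an LLM."""
    context = "\n".join(store.search(ask, top_k))
    return f"Context:\n{context}\n\nRequest: {ask}"
```

In a real application, the string returned by `build_prompt` would be sent to a chat completion service, and the store would be one of the vector database connectors rather than a Python list.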

Available connectors to vector databases

Today, Semantic Kernel offers several connectors to vector databases that you can use to store and retrieve information. These include:

| Service | C# | Python |
|---|---|---|
| Vector Database in Azure Cosmos DB for MongoDB (vCore) | | Python |
| Azure AI Search | C# | Python |
| Azure PostgreSQL Server | C# | |
| Azure SQL Database | C# | |
| Chroma | C# | Python |
| DuckDB | C# | |
| Milvus | C# | Python |
| MongoDB Atlas Vector Search | C# | Python |
| Pinecone | C# | Python |
| Postgres | C# | Python |
| Qdrant | C# | |
| Redis | C# | |
| SQLite | C# | |
| Weaviate | C# | Python |

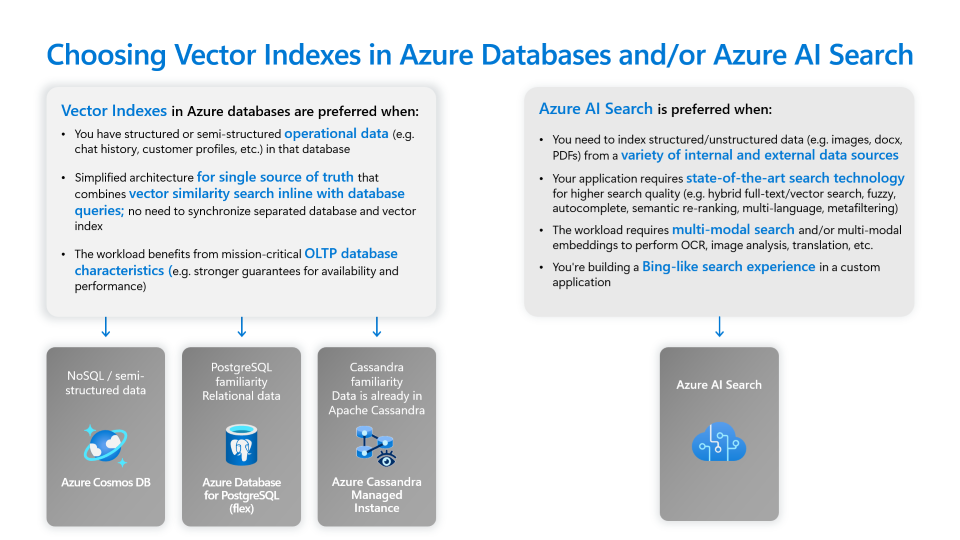

Vector database solutions

- Azure Cosmos DB for MongoDB Integrated Vector Database

- Azure SQL Database

- Azure PostgreSQL Server pgvector Extension

- Azure AI Search

- Open-source vector databases

Additional plugins

If you want to extend the functionality of your AI agent, you can use plugins to integrate with other Microsoft services. Here are some of the plugins that are available for Semantic Kernel:

| Plugin | C# | Python | Java | Description |
|---|---|---|---|---|
| Logic Apps | ✅ | ✅ | ✅ | Build workflows within Logic Apps using its available connectors and import them as plugins in Semantic Kernel. Learn more. |
| Azure Container Apps Dynamic Sessions | ✅ | ✅ | ❌ | With dynamic sessions, you can recreate the Code Interpreter experience from the Assistants API by effortlessly spinning up Python containers where AI agents can execute Python code. Learn more. |