Quickstart: Add 1:1 video calling to your app

Get started with Azure Communication Services by using the Communication Services Calling SDK to add 1:1 video calling to your app. In this quickstart, you learn how to start and answer a video call using the Azure Communication Services Calling SDK for JavaScript.

Sample Code

If you'd like to skip ahead to the end, you can download this quickstart as a sample on GitHub.

Note

Outbound calling to an Azure Communication Services user is also available through the Azure Communication Services UI Library. The UI Library lets developers add a VoIP-enabled call client to their application with only a couple of lines of code.

Prerequisites

Obtain an Azure account with an active subscription. Create an account for free.

You need Node.js 18. You can use the MSI installer to install it.

Create an active Communication Services resource. Create a Communication Services resource. You need to record your connection string for this quickstart.

Create a User Access Token to instantiate the call client. Learn how to create and manage user access tokens. You can also use the Azure CLI and run the command with your connection string to create a user and an access token.

az communication identity token issue --scope voip --connection-string "yourConnectionString"

For details, see Use Azure CLI to Create and Manage Access Tokens.
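User access tokens expire, so it can help to check a token before pasting it into the app. The following is a minimal sketch, assuming the token is a standard JWT whose payload carries a numeric `exp` claim (seconds since the Unix epoch). The helper names `getTokenExpiry` and `isTokenExpired` are hypothetical and not part of the Azure SDK, and the sketch only reads the payload; it does not verify the signature.

```javascript
// Hypothetical helpers, not part of the Azure Communication Services SDK.
// Assumption: the access token is a JWT (header.payload.signature) with an `exp` claim.

/** Decode the JWT payload and return the expiry time as a Date. */
function getTokenExpiry(token) {
  const parts = token.trim().split('.');
  if (parts.length !== 3) {
    throw new Error('Expected a JWT with three dot-separated segments');
  }
  // Node.js 18 supports the 'base64url' encoding used by JWT segments.
  const payloadJson = Buffer.from(parts[1], 'base64url').toString('utf8');
  const payload = JSON.parse(payloadJson);
  if (typeof payload.exp !== 'number') {
    throw new Error('Token payload has no numeric exp claim');
  }
  // `exp` is expressed in seconds; Date expects milliseconds.
  return new Date(payload.exp * 1000);
}

/** Return true if the token's expiry is at or before `now`. */
function isTokenExpired(token, now = new Date()) {
  return getTokenExpiry(token).getTime() <= now.getTime();
}
```

You could run this in Node.js with the token printed by the CLI command, for example `isTokenExpired(myToken)`. In a browser, `Buffer` isn't available, so the decoding step would need a different approach.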

Setting up

Create a new Node.js application

Open your terminal or command window, create a new directory for your app, and navigate to it.

mkdir calling-quickstart && cd calling-quickstart

Run npm init -y to create a package.json file with default settings.

npm init -y

Install the package

Use the npm install command to install the Azure Communication Services Calling SDK for JavaScript.

npm install @azure/communication-common --save

npm install @azure/communication-calling --save

Set up the app framework

This quickstart uses webpack to bundle the application assets. Run the following command to install the webpack, webpack-cli, webpack-dev-server, and copy-webpack-plugin npm packages and list them as development dependencies in your package.json:

npm install copy-webpack-plugin@^11.0.0 webpack@^5.88.2 webpack-cli@^5.1.4 webpack-dev-server@^4.15.1 --save-dev

Create an index.html file in the root directory of your project. This file configures a basic layout that lets the user place a 1:1 video call. Here's the code:

<!-- index.html -->

<!DOCTYPE html>

<html>

<head>

<title>Azure Communication Services - Calling Web SDK</title>

<link rel="stylesheet" type="text/css" href="styles.css"/>

</head>

<body>

<h4>Azure Communication Services - Calling Web SDK</h4>

<input id="user-access-token"

type="text"

placeholder="User access token"

style="margin-bottom:1em; width: 500px;"/>

<button id="initialize-call-agent" type="button">Initialize Call Agent</button>

<br>

<br>

<input id="callee-acs-user-id"

type="text"

placeholder="Enter callee's Azure Communication Services user identity in format: '8:acs:resourceId_userId'"

style="margin-bottom:1em; width: 500px; display: block;"/>

<button id="start-call-button" type="button" disabled="true">Start Call</button>

<button id="hangup-call-button" type="button" disabled="true">Hang up Call</button>

<button id="accept-call-button" type="button" disabled="true">Accept Call</button>

<button id="start-video-button" type="button" disabled="true">Start Video</button>

<button id="stop-video-button" type="button" disabled="true">Stop Video</button>

<br>

<br>

<div id="connectedLabel" style="color: #13bb13;" hidden>Call is connected!</div>

<br>

<div id="remoteVideosGallery" style="width: 40%;" hidden>Remote participants' video streams:</div>

<br>

<div id="localVideoContainer" style="width: 30%;" hidden>Local video stream:</div>

<!-- points to the bundle generated from index.js -->

<script src="./main.js"></script>

</body>

</html>
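The callee input above expects an identity in the format `8:acs:resourceId_userId`. As an illustration only, the following sketch checks that shape before a call is attempted. The function `parseAcsUserId` is a hypothetical helper, and the rule it applies (an `8:acs:` prefix followed by two non-empty parts separated by the first underscore) is an assumption taken from the placeholder text, not an official validation rule.

```javascript
// Hypothetical helper for this quickstart; not part of the Calling SDK.
// Assumption: identities look like '8:acs:<resourceId>_<userId>', as in the placeholder text.

/**
 * Split a raw identity string into its resource and user parts.
 * Returns null if the string does not match the expected shape.
 */
function parseAcsUserId(raw) {
  const prefix = '8:acs:';
  const value = raw.trim();
  if (!value.startsWith(prefix)) {
    return null;
  }
  const rest = value.slice(prefix.length);
  const separatorIndex = rest.indexOf('_');
  // Both parts must be non-empty.
  if (separatorIndex <= 0 || separatorIndex === rest.length - 1) {
    return null;
  }
  return {
    resourceId: rest.slice(0, separatorIndex),
    userId: rest.slice(separatorIndex + 1),
  };
}
```

A click handler could call `parseAcsUserId(calleeAcsUserId.value)` and show a message when it returns `null`, instead of passing a malformed identity to the SDK.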

The following classes and interfaces handle some of the major features of the Azure Communication Services Calling SDK:

| Name | Description |
|---|---|
| CallClient | The main entry point to the Calling SDK. |
| AzureCommunicationTokenCredential | Implements the CommunicationTokenCredential interface, which is used to instantiate callAgent. |
| CallAgent | Used to start and manage calls. |
| DeviceManager | Used to manage media devices. |
| Call | Used for representing a Call. |
| LocalVideoStream | Used for creating a local video stream for a camera device on the local system. |
| RemoteParticipant | Used for representing a remote participant in the Call. |
| RemoteVideoStream | Used for representing a remote video stream from a Remote Participant. |

Create a file in the root directory of your project called index.js to contain the application logic for this quickstart. Add the following code to index.js:

// Make sure to install the necessary dependencies

const { CallClient, VideoStreamRenderer, LocalVideoStream } = require('@azure/communication-calling');

const { AzureCommunicationTokenCredential } = require('@azure/communication-common');

const { AzureLogger, setLogLevel } = require("@azure/logger");

// Set the log level and output

setLogLevel('verbose');

AzureLogger.log = (...args) => {

console.log(...args);

};

// Calling web sdk objects

let callAgent;

let deviceManager;

let call;

let incomingCall;

let localVideoStream;

let localVideoStreamRenderer;

// UI widgets

let userAccessToken = document.getElementById('user-access-token');

let calleeAcsUserId = document.getElementById('callee-acs-user-id');

let initializeCallAgentButton = document.getElementById('initialize-call-agent');

let startCallButton = document.getElementById('start-call-button');

let hangUpCallButton = document.getElementById('hangup-call-button');

let acceptCallButton = document.getElementById('accept-call-button');

let startVideoButton = document.getElementById('start-video-button');

let stopVideoButton = document.getElementById('stop-video-button');

let connectedLabel = document.getElementById('connectedLabel');

let remoteVideosGallery = document.getElementById('remoteVideosGallery');

let localVideoContainer = document.getElementById('localVideoContainer');

/**

* Using the CallClient, initialize a CallAgent instance with an AzureCommunicationTokenCredential, which enables us to make outgoing calls and receive incoming calls.

* You can then use the CallClient.getDeviceManager() API to get the DeviceManager instance.

*/

initializeCallAgentButton.onclick = async () => {

try {

const callClient = new CallClient();

const tokenCredential = new AzureCommunicationTokenCredential(userAccessToken.value.trim());

callAgent = await callClient.createCallAgent(tokenCredential);

// Set up a camera device to use.

deviceManager = await callClient.getDeviceManager();

await deviceManager.askDevicePermission({ video: true });

await deviceManager.askDevicePermission({ audio: true });

// Listen for an incoming call to accept.

callAgent.on('incomingCall', async (args) => {

try {

incomingCall = args.incomingCall;

acceptCallButton.disabled = false;

startCallButton.disabled = true;

} catch (error) {

console.error(error);

}

});

startCallButton.disabled = false;

initializeCallAgentButton.disabled = true;

} catch(error) {

console.error(error);

}

}

/**

* Place a 1:1 outgoing video call to a user

* Add an event listener to initiate a call when the `startCallButton` is clicked:

* First you have to enumerate local cameras using the deviceManager `getCameras` API.

* In this quickstart we're using the first camera in the collection. Once the desired camera is selected, a

* LocalVideoStream instance will be constructed and passed within `videoOptions` as an item within the

* `localVideoStreams` array to the call method. Once your call connects it will automatically start sending a video stream to the other participant.

*/

startCallButton.onclick = async () => {

try {

const localVideoStream = await createLocalVideoStream();

const videoOptions = localVideoStream ? { localVideoStreams: [localVideoStream] } : undefined;

call = callAgent.startCall([{ communicationUserId: calleeAcsUserId.value.trim() }], { videoOptions });

// Subscribe to the call's properties and events.

subscribeToCall(call);

} catch (error) {

console.error(error);

}

}

/**

* Accepting an incoming call with video

* Add an event listener to accept a call when the `acceptCallButton` is clicked:

* After subscribing to the `CallAgent.on('incomingCall')` event, you can accept the incoming call.

* You can pass the local video stream which you want to use to accept the call with.

*/

acceptCallButton.onclick = async () => {

try {

const localVideoStream = await createLocalVideoStream();

const videoOptions = localVideoStream ? { localVideoStreams: [localVideoStream] } : undefined;

call = await incomingCall.accept({ videoOptions });

// Subscribe to the call's properties and events.

subscribeToCall(call);

} catch (error) {

console.error(error);

}

}

/**

* Subscribe to a call obj.

* Listen for property changes and collection updates.

*/

const subscribeToCall = (call) => {

try {

// Inspect the initial call.id value.

console.log(`Call Id: ${call.id}`);

// Subscribe to call's 'idChanged' event for value changes.

call.on('idChanged', () => {

console.log(`Call Id changed: ${call.id}`);

});

// Inspect the initial call.state value.

console.log(`Call state: ${call.state}`);

// Subscribe to call's 'stateChanged' event for value changes.

call.on('stateChanged', async () => {

console.log(`Call state changed: ${call.state}`);

if(call.state === 'Connected') {

connectedLabel.hidden = false;

acceptCallButton.disabled = true;

startCallButton.disabled = true;

hangUpCallButton.disabled = false;

startVideoButton.disabled = false;

stopVideoButton.disabled = false;

remoteVideosGallery.hidden = false;

} else if (call.state === 'Disconnected') {

connectedLabel.hidden = true;

startCallButton.disabled = false;

hangUpCallButton.disabled = true;

startVideoButton.disabled = true;

stopVideoButton.disabled = true;

console.log(`Call ended, call end reason={code=${call.callEndReason.code}, subCode=${call.callEndReason.subCode}}`);

}

});

call.on('isLocalVideoStartedChanged', () => {

console.log(`isLocalVideoStarted changed: ${call.isLocalVideoStarted}`);

});

console.log(`isLocalVideoStarted: ${call.isLocalVideoStarted}`);

call.localVideoStreams.forEach(async (lvs) => {

localVideoStream = lvs;

await displayLocalVideoStream();

});

call.on('localVideoStreamsUpdated', e => {

e.added.forEach(async (lvs) => {

localVideoStream = lvs;

await displayLocalVideoStream();

});

e.removed.forEach(lvs => {

removeLocalVideoStream();

});

});

// Inspect the call's current remote participants and subscribe to them.

call.remoteParticipants.forEach(remoteParticipant => {

subscribeToRemoteParticipant(remoteParticipant);

});

// Subscribe to the call's 'remoteParticipantsUpdated' event to be

// notified when new participants are added to the call or removed from the call.

call.on('remoteParticipantsUpdated', e => {

// Subscribe to new remote participants that are added to the call.

e.added.forEach(remoteParticipant => {

subscribeToRemoteParticipant(remoteParticipant)

});

// Unsubscribe from participants that are removed from the call

e.removed.forEach(remoteParticipant => {

console.log('Remote participant removed from the call.');

});

});

} catch (error) {

console.error(error);

}

}

/**

* Subscribe to a remote participant obj.

* Listen for property changes and collection updates.

*/

const subscribeToRemoteParticipant = (remoteParticipant) => {

try {

// Inspect the initial remoteParticipant.state value.

console.log(`Remote participant state: ${remoteParticipant.state}`);

// Subscribe to remoteParticipant's 'stateChanged' event for value changes.

remoteParticipant.on('stateChanged', () => {

console.log(`Remote participant state changed: ${remoteParticipant.state}`);

});

// Inspect the remoteParticipant's current videoStreams and subscribe to them.

remoteParticipant.videoStreams.forEach(remoteVideoStream => {

subscribeToRemoteVideoStream(remoteVideoStream)

});

// Subscribe to the remoteParticipant's 'videoStreamsUpdated' event to be

// notified when the remoteParticipant adds new videoStreams and removes video streams.

remoteParticipant.on('videoStreamsUpdated', e => {

// Subscribe to new remote participant's video streams that were added.

e.added.forEach(remoteVideoStream => {

subscribeToRemoteVideoStream(remoteVideoStream)

});

// Unsubscribe from remote participant's video streams that were removed.

e.removed.forEach(remoteVideoStream => {

console.log('Remote participant video stream was removed.');

})

});

} catch (error) {

console.error(error);

}

}

/**

* Subscribe to a remote participant's remote video stream obj.

* You have to subscribe to the 'isAvailableChanged' event to render the remoteVideoStream. If the 'isAvailable' property

* changes to 'true', a remote participant is sending a stream. Whenever availability of a remote stream changes

* you can choose to destroy the whole 'Renderer', a specific 'RendererView' or keep them, but keeping them results in a blank video frame being displayed.

*/

const subscribeToRemoteVideoStream = async (remoteVideoStream) => {

let renderer = new VideoStreamRenderer(remoteVideoStream);

let view;

let remoteVideoContainer = document.createElement('div');

remoteVideoContainer.className = 'remote-video-container';

let loadingSpinner = document.createElement('div');

loadingSpinner.className = 'loading-spinner';

remoteVideoStream.on('isReceivingChanged', () => {

try {

if (remoteVideoStream.isAvailable) {

const isReceiving = remoteVideoStream.isReceiving;

const isLoadingSpinnerActive = remoteVideoContainer.contains(loadingSpinner);

if (!isReceiving && !isLoadingSpinnerActive) {

remoteVideoContainer.appendChild(loadingSpinner);

} else if (isReceiving && isLoadingSpinnerActive) {

remoteVideoContainer.removeChild(loadingSpinner);

}

}

} catch (e) {

console.error(e);

}

});

const createView = async () => {

// Create a renderer view for the remote video stream.

view = await renderer.createView();

// Attach the renderer view to the UI.

remoteVideoContainer.appendChild(view.target);

remoteVideosGallery.appendChild(remoteVideoContainer);

}

// Remote participant has switched video on/off

remoteVideoStream.on('isAvailableChanged', async () => {

try {

if (remoteVideoStream.isAvailable) {

await createView();

} else {

view.dispose();

remoteVideosGallery.removeChild(remoteVideoContainer);

}

} catch (e) {

console.error(e);

}

});

// Remote participant has video on initially.

if (remoteVideoStream.isAvailable) {

try {

await createView();

} catch (e) {

console.error(e);

}

}

}

/**

* Start your local video stream.

* This will send your local video stream to remote participants so they can view it.

*/

startVideoButton.onclick = async () => {

try {

const localVideoStream = await createLocalVideoStream();

await call.startVideo(localVideoStream);

} catch (error) {

console.error(error);

}

}

/**

* Stop your local video stream.

* This will stop your local video stream from being sent to remote participants.

*/

stopVideoButton.onclick = async () => {

try {

await call.stopVideo(localVideoStream);

} catch (error) {

console.error(error);

}

}

/**

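* Create a LocalVideoStream from the first camera returned by deviceManager.getCameras().

* To render a LocalVideoStream (see displayLocalVideoStream below), you need to create a new instance of VideoStreamRenderer, and then

* create a new VideoStreamRendererView instance using the asynchronous createView() method.

* You may then attach view.target to any UI element.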

*/

const createLocalVideoStream = async () => {

const camera = (await deviceManager.getCameras())[0];

if (camera) {

return new LocalVideoStream(camera);

} else {

console.error(`No camera device found on the system`);

}

}

/**

* Display your local video stream preview in your UI

*/

const displayLocalVideoStream = async () => {

try {

localVideoStreamRenderer = new VideoStreamRenderer(localVideoStream);

const view = await localVideoStreamRenderer.createView();

localVideoContainer.hidden = false;

localVideoContainer.appendChild(view.target);

} catch (error) {

console.error(error);

}

}

/**

* Remove your local video stream preview from your UI

*/

const removeLocalVideoStream = async () => {

try {

localVideoStreamRenderer.dispose();

localVideoContainer.hidden = true;

} catch (error) {

console.error(error);

}

}

/**

* End current call

*/

hangUpCallButton.addEventListener("click", async () => {

// end the current call

await call.hangUp();

});
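The `stateChanged` handler in index.js toggles several buttons and labels depending on the call state. One way to make that mapping easier to read and test is to express it as a pure function. The sketch below mirrors the assignments made in the handler for the `Connected` and `Disconnected` states; the function name `uiStateFor` and the property names are hypothetical and chosen only for this illustration.

```javascript
// Hypothetical refactoring sketch; the quickstart sets these flags inline in the handler.
// Each property name is made up for illustration and maps to one UI widget in index.js.

/** Return the UI flags that the quickstart sets when the call enters `callState`. */
function uiStateFor(callState) {
  switch (callState) {
    case 'Connected':
      return {
        connectedLabelHidden: false,
        acceptCallDisabled: true,
        startCallDisabled: true,
        hangUpDisabled: false,
        startVideoDisabled: false,
        stopVideoDisabled: false,
        remoteGalleryHidden: false,
      };
    case 'Disconnected':
      return {
        connectedLabelHidden: true,
        startCallDisabled: false,
        hangUpDisabled: true,
        startVideoDisabled: true,
        stopVideoDisabled: true,
      };
    default:
      // Other states leave the UI unchanged in this quickstart.
      return {};
  }
}
```

Inside the handler, the returned flags could then be applied to the widgets, for example `hangUpCallButton.disabled = state.hangUpDisabled` when the property is present.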

Create a file in the root directory of your project called styles.css to contain the application styling for this quickstart. Add the following code to styles.css:

/**

* CSS for styling the loading spinner over the remote video stream

*/

.remote-video-container {

position: relative;

}

.loading-spinner {

border: 12px solid #f3f3f3;

border-radius: 50%;

border-top: 12px solid #ca5010;

width: 100px;

height: 100px;

-webkit-animation: spin 2s linear infinite; /* Safari */

animation: spin 2s linear infinite;

position: absolute;

margin: auto;

top: 0;

bottom: 0;

left: 0;

right: 0;

transform: translate(-50%, -50%);

}

@keyframes spin {

0% { transform: rotate(0deg); }

100% { transform: rotate(360deg); }

}

/* Safari */

@-webkit-keyframes spin {

0% { -webkit-transform: rotate(0deg); }

100% { -webkit-transform: rotate(360deg); }

}
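The loading spinner styled above is shown and hidden by the `isReceivingChanged` handler in index.js. As a rough sketch of that decision, isolated from the DOM, the following function returns what the handler would do for a given combination of flags. The name `spinnerAction` and its return values are hypothetical; only the conditions are taken from the handler.

```javascript
// Hypothetical helper that mirrors the conditions in the isReceivingChanged handler.

/**
 * Decide what to do with the loading spinner.
 * Returns 'show', 'hide', or 'none'.
 */
function spinnerAction({ isAvailable, isReceiving, spinnerShown }) {
  // The handler only reacts while the remote stream is available.
  if (!isAvailable) {
    return 'none';
  }
  // Stream is available but no frames are arriving: show the spinner once.
  if (!isReceiving && !spinnerShown) {
    return 'show';
  }
  // Frames are arriving again: remove the spinner if it is displayed.
  if (isReceiving && spinnerShown) {
    return 'hide';
  }
  return 'none';
}
```

In the handler, `'show'` corresponds to appending the spinner element to the remote video container and `'hide'` to removing it.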

Add the webpack local server code

Create a file in the root directory of your project called webpack.config.js to contain the local server logic for this quickstart. Add the following code to webpack.config.js:

const path = require('path');

const CopyPlugin = require("copy-webpack-plugin");

module.exports = {

mode: 'development',

entry: './index.js',

output: {

filename: 'main.js',

path: path.resolve(__dirname, 'dist'),

},

devServer: {

static: {

directory: path.join(__dirname, './')

},

},

plugins: [

new CopyPlugin({

patterns: [

'./index.html'

]

}),

]

};

Run the code

Use the webpack-dev-server to build and run your app. Run the following command to bundle the application host in a local webserver:

npx webpack serve --config webpack.config.js

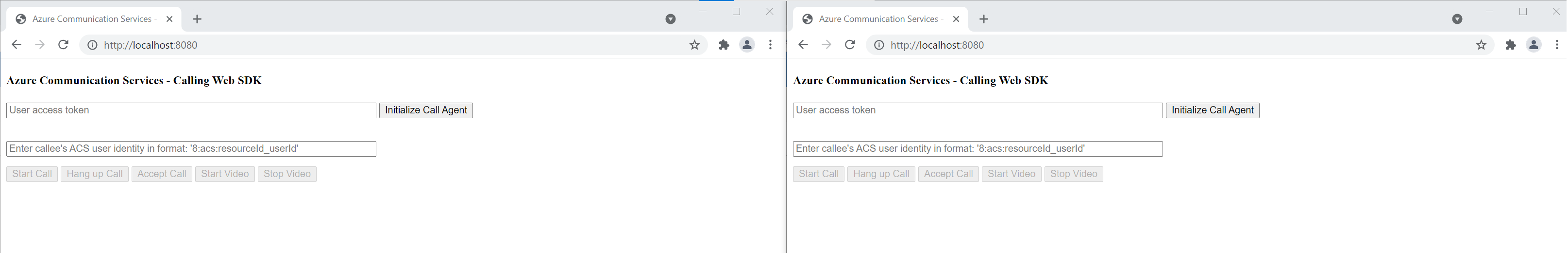

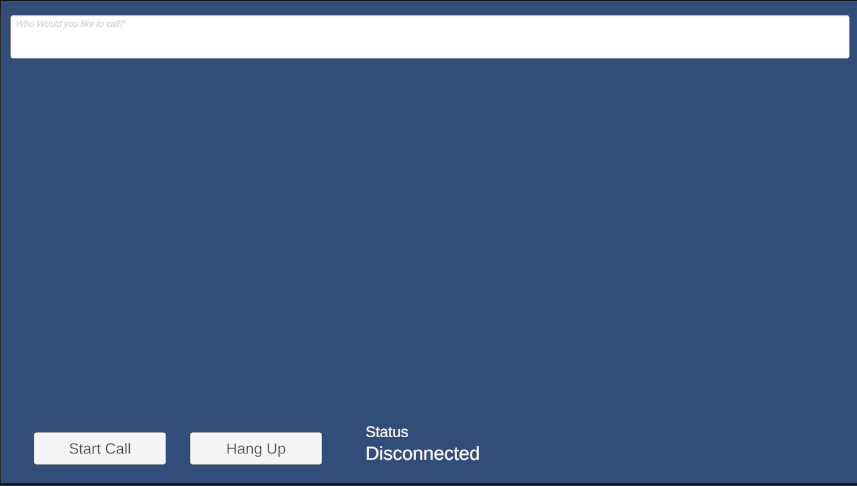

Open your browser and, in two tabs, navigate to http://localhost:8080/. You should see the following screen:

On the first tab, enter a valid user access token, and on the other tab enter another different valid user access token.

Refer to the user access token documentation if you don't already have tokens available to use.

On both tabs, click on the "Initialize Call Agent" buttons. You should see the following screen:

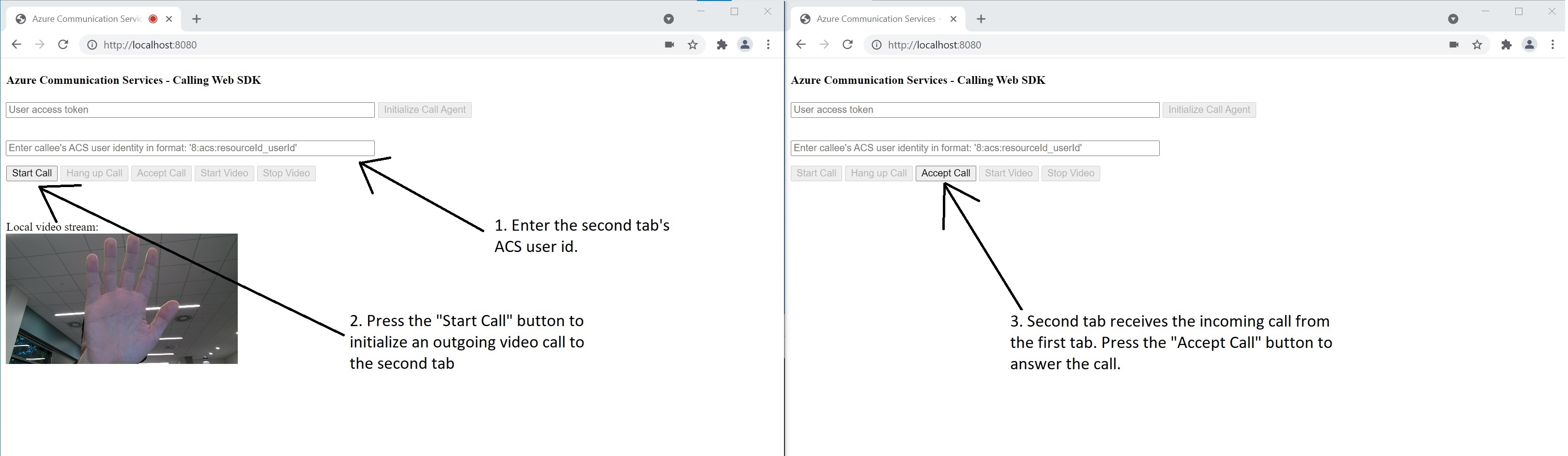

On the first tab, enter the Azure Communication Services user identity of the second tab, and click the "Start Call" button. The first tab starts the outgoing call to the second tab, and the second tab's "Accept Call" button becomes enabled:

From the second tab, click the "Accept Call" button. The call starts and connects. You should see the following screen:

Both tabs are now in a 1:1 video call. Both tabs can hear each other's audio and see each other's video stream.

Get started with Azure Communication Services by using the Communication Services calling client library to add video calling to your app. Learn how to include 1:1 video calling, and how to create or join group calls. Additionally, you can start, answer, and join a video call by using the Azure Communication Services Calling SDK for Android.

If you want to get started with sample code, you can download the sample app.

Prerequisites

An Azure account with an active subscription. Create an account for free.

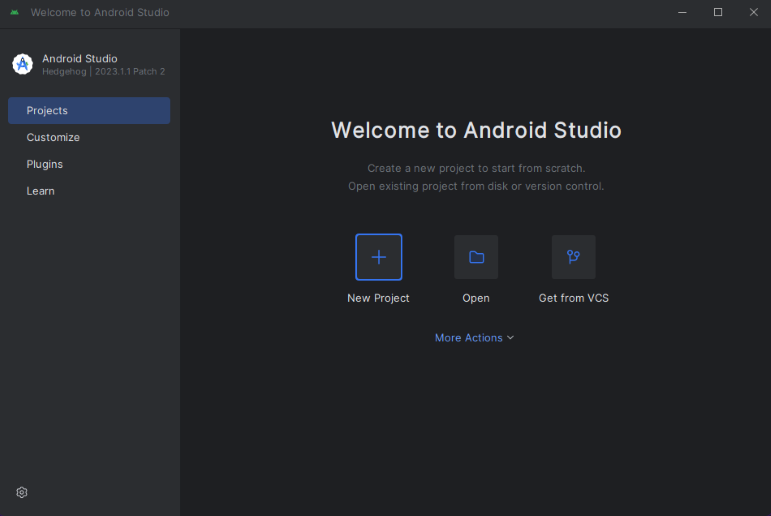

Android Studio, for creating your Android application.

A deployed Communication Services resource. Create a Communication Services resource. You need to record your connection string for this quickstart.

A User Access Token for your Azure Communication Services resource. You can also use the Azure CLI and run the command with your connection string to create a user and an access token.

az communication identity token issue --scope voip --connection-string "yourConnectionString"

For details, see Use Azure CLI to Create and Manage Access Tokens.

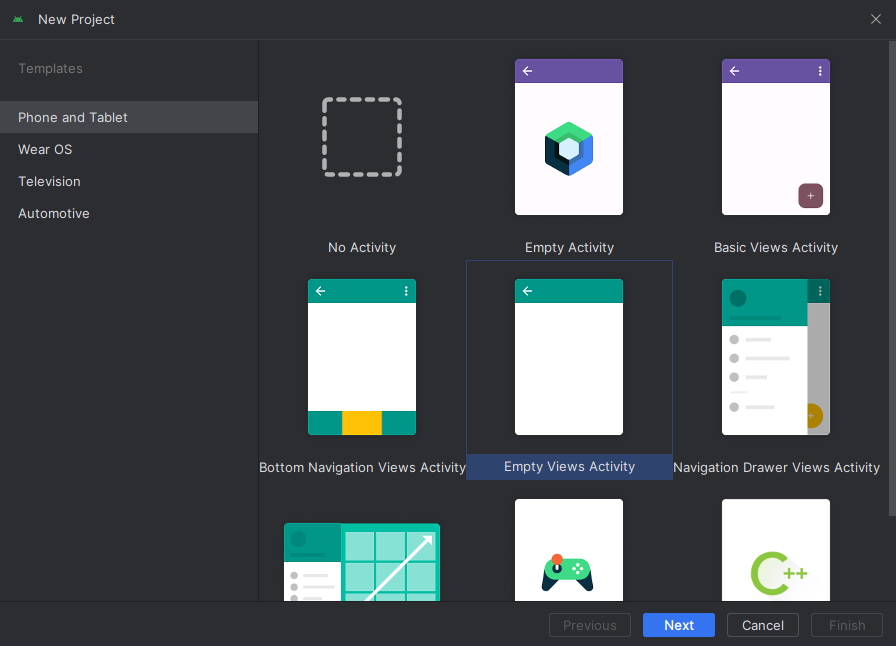

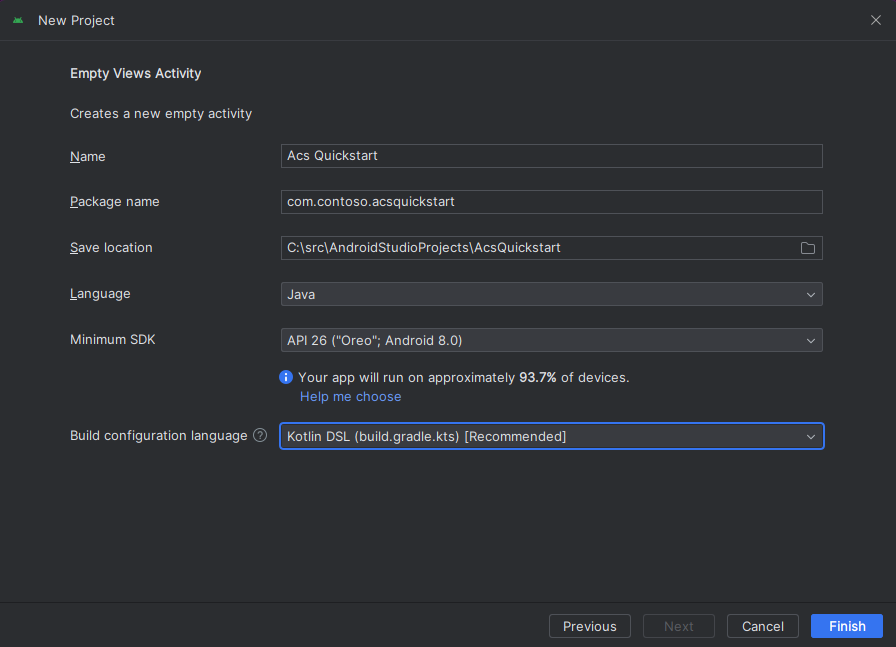

Create an Android app with an empty activity

From Android Studio, select Start a new Android Studio project.

Under Phone and Tablet, select the Empty Activity project template.

For the Minimum SDK, select API 26: Android 8.0 (Oreo), or later. See SDK support versions.

Install the package

Locate your project-level build.gradle, and add mavenCentral() to the list of repositories under buildscript and allprojects:

buildscript {

repositories {

...

mavenCentral()

...

}

}

allprojects {

repositories {

...

mavenCentral()

...

}

}

Then, in your module level build.gradle, add the following lines to the dependencies and android sections:

android {

...

packagingOptions {

pickFirst 'META-INF/*'

}

compileOptions {

sourceCompatibility JavaVersion.VERSION_1_8

targetCompatibility JavaVersion.VERSION_1_8

}

}

dependencies {

...

implementation 'com.azure.android:azure-communication-calling:2.0.0'

...

}

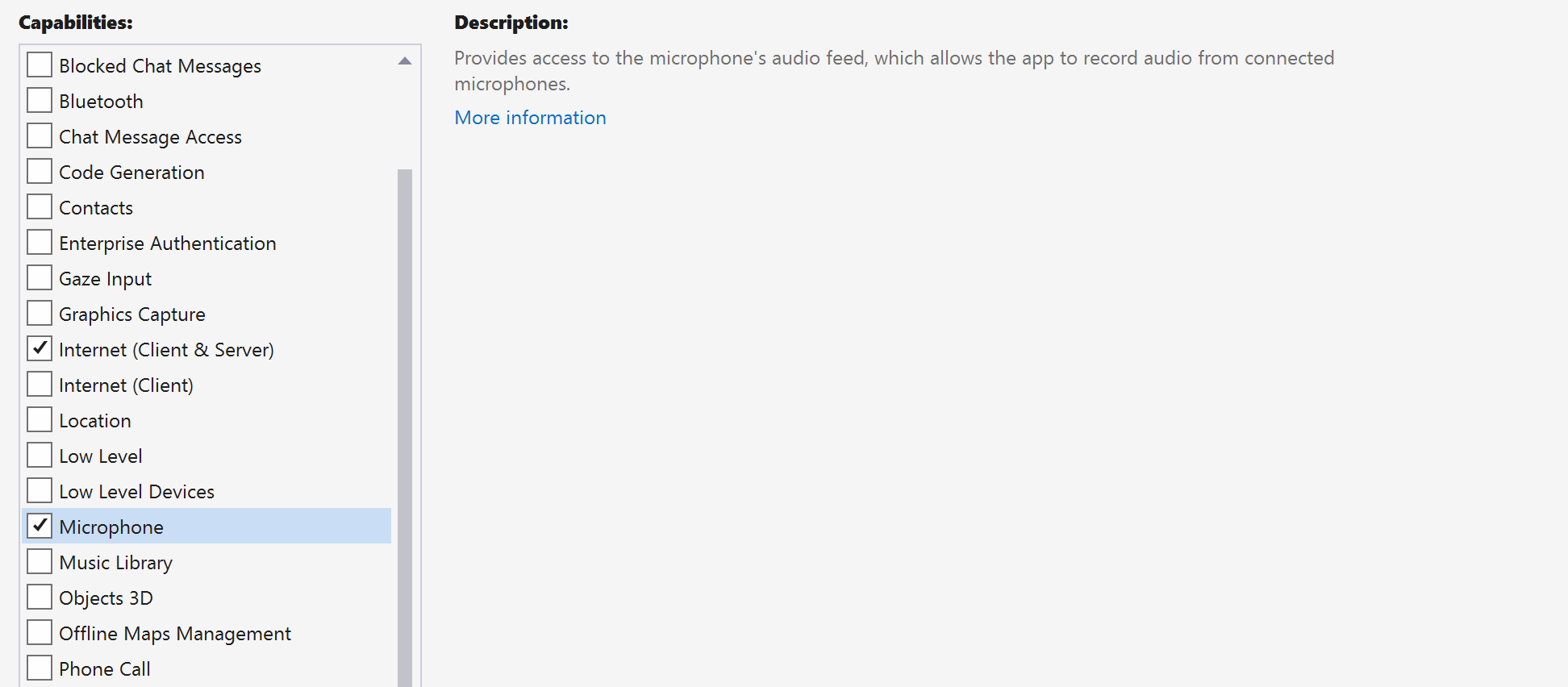

Add permissions to application manifest

To request the permissions required to make a call, you must first declare them in the application manifest (app/src/main/AndroidManifest.xml). Replace the content of the file with the following code:

<?xml version="1.0" encoding="utf-8"?>

<manifest xmlns:android="http://schemas.android.com/apk/res/android"

package="com.contoso.acsquickstart">

<uses-permission android:name="android.permission.INTERNET" />

<uses-permission android:name="android.permission.ACCESS_NETWORK_STATE" />

<uses-permission android:name="android.permission.ACCESS_WIFI_STATE" />

<uses-permission android:name="android.permission.RECORD_AUDIO" />

<uses-permission android:name="android.permission.CAMERA" />

<application

android:allowBackup="true"

android:icon="@mipmap/ic_launcher"

android:label="@string/app_name"

android:roundIcon="@mipmap/ic_launcher_round"

android:supportsRtl="true"

android:theme="@style/AppTheme">

<!--Our Calling SDK depends on the Apache HTTP SDK.

When targeting Android SDK 28+, this library needs to be explicitly referenced.

See https://developer.android.com/about/versions/pie/android-9.0-changes-28#apache-p-->

<uses-library android:name="org.apache.http.legacy" android:required="false"/>

<activity android:name=".MainActivity">

<intent-filter>

<action android:name="android.intent.action.MAIN" />

<category android:name="android.intent.category.LAUNCHER" />

</intent-filter>

</activity>

</application>

</manifest>

Set up the layout for the app

You need a text input for the callee ID or group call ID, a button for placing the call, and an extra button for hanging up the call.

You also need two buttons to turn the local video on and off, and two containers for the local and remote video streams. You can add these elements through the designer, or by editing the layout XML.

Go to app/src/main/res/layout/activity_main.xml, and replace the content of the file with the following code:

<?xml version="1.0" encoding="utf-8"?>

<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".MainActivity">

<LinearLayout

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:orientation="vertical">

<EditText

android:id="@+id/call_id"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:ems="10"

android:gravity="center"

android:hint="Callee ID"

android:inputType="textPersonName"

app:layout_constraintBottom_toTopOf="@+id/call_button"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent"

app:layout_constraintTop_toTopOf="parent"

app:layout_constraintVertical_bias="0.064" />

<LinearLayout

android:layout_width="match_parent"

android:layout_height="wrap_content">

<Button

android:id="@+id/call_button"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_marginBottom="16dp"

android:gravity="center"

android:text="Call"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent" />

<Button

android:id="@+id/show_preview"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_marginBottom="16dp"

android:gravity="center"

android:text="Show Video"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent" />

<Button

android:id="@+id/hide_preview"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_marginBottom="16dp"

android:gravity="center"

android:text="Hide Video"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent" />

<Button

android:id="@+id/hang_up"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:layout_marginBottom="16dp"

android:gravity="center"

android:text="Hang Up"

app:layout_constraintBottom_toBottomOf="parent"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent" />

</LinearLayout>

<ScrollView

android:layout_width="match_parent"

android:layout_height="wrap_content">

<GridLayout

android:id="@+id/remotevideocontainer"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:columnCount="2"

android:rowCount="2"

android:padding="10dp"></GridLayout>

</ScrollView>

</LinearLayout>

<FrameLayout

android:layout_width="match_parent"

android:layout_height="match_parent">

<LinearLayout

android:id="@+id/localvideocontainer"

android:layout_width="180dp"

android:layout_height="300dp"

android:layout_gravity="right|bottom"

android:orientation="vertical"

android:padding="10dp">

<Button

android:id="@+id/switch_source"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:gravity="center"

android:text="Switch Source"

android:visibility="invisible" />

</LinearLayout>

</FrameLayout>

</androidx.constraintlayout.widget.ConstraintLayout>

Create the main activity scaffolding and bindings

With the layout created, you can add the bindings, and the basic scaffolding of the activity. The activity handles requesting runtime permissions, creating the call agent, and placing the call when the button is pressed.

The onCreate method is overridden to invoke getAllPermissions and createAgent, and add the bindings for the call button. This event occurs only once when the activity is created. For more information about onCreate, see the guide Understand the activity lifecycle.

Go to MainActivity.java file, and replace the content with the following code:

package com.example.videocallingquickstart;

import androidx.appcompat.app.AppCompatActivity;

import androidx.core.app.ActivityCompat;

import android.Manifest;

import android.content.pm.PackageManager;

import android.media.AudioManager;

import android.os.Bundle;

import android.util.DisplayMetrics;

import android.util.Log;

import android.view.View;

import android.widget.Button;

import android.widget.EditText;

import android.widget.GridLayout;

import android.widget.Toast;

import android.widget.LinearLayout;

import android.content.Context;

import com.azure.android.communication.calling.CallState;

import com.azure.android.communication.calling.CallingCommunicationException;

import com.azure.android.communication.calling.ParticipantsUpdatedListener;

import com.azure.android.communication.calling.PropertyChangedEvent;

import com.azure.android.communication.calling.PropertyChangedListener;

import com.azure.android.communication.calling.StartCallOptions;

import com.azure.android.communication.calling.VideoDeviceInfo;

import com.azure.android.communication.common.CommunicationIdentifier;

import com.azure.android.communication.common.CommunicationTokenCredential;

import com.azure.android.communication.calling.CallAgent;

import com.azure.android.communication.calling.CallClient;

import com.azure.android.communication.calling.DeviceManager;

import com.azure.android.communication.calling.VideoOptions;

import com.azure.android.communication.calling.LocalVideoStream;

import com.azure.android.communication.calling.VideoStreamRenderer;

import com.azure.android.communication.calling.VideoStreamRendererView;

import com.azure.android.communication.calling.CreateViewOptions;

import com.azure.android.communication.calling.ScalingMode;

import com.azure.android.communication.calling.IncomingCall;

import com.azure.android.communication.calling.Call;

import com.azure.android.communication.calling.AcceptCallOptions;

import com.azure.android.communication.calling.ParticipantsUpdatedEvent;

import com.azure.android.communication.calling.RemoteParticipant;

import com.azure.android.communication.calling.RemoteVideoStream;

import com.azure.android.communication.calling.RemoteVideoStreamsEvent;

import com.azure.android.communication.calling.RendererListener;

import com.azure.android.communication.common.CommunicationUserIdentifier;

import com.azure.android.communication.common.MicrosoftTeamsUserIdentifier;

import com.azure.android.communication.common.PhoneNumberIdentifier;

import com.azure.android.communication.common.UnknownIdentifier;

import java.util.ArrayList;

import java.util.HashSet;

import java.util.List;

import java.util.HashMap;

import java.util.Map;

import java.util.concurrent.ExecutionException;

import java.util.concurrent.Executors;

public class MainActivity extends AppCompatActivity {

private CallAgent callAgent;

private VideoDeviceInfo currentCamera;

private LocalVideoStream currentVideoStream;

private DeviceManager deviceManager;

private IncomingCall incomingCall;

private Call call;

VideoStreamRenderer previewRenderer;

VideoStreamRendererView preview;

final Map<Integer, StreamData> streamData = new HashMap<>();

private boolean renderRemoteVideo = true;

private ParticipantsUpdatedListener remoteParticipantUpdatedListener;

private PropertyChangedListener onStateChangedListener;

final HashSet<String> joinedParticipants = new HashSet<>();

Button switchSourceButton;

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

getAllPermissions();

createAgent();

handleIncomingCall();

Button callButton = findViewById(R.id.call_button);

callButton.setOnClickListener(l -> startCall());

Button hangupButton = findViewById(R.id.hang_up);

hangupButton.setOnClickListener(l -> hangUp());

Button startVideo = findViewById(R.id.show_preview);

startVideo.setOnClickListener(l -> turnOnLocalVideo());

Button stopVideo = findViewById(R.id.hide_preview);

stopVideo.setOnClickListener(l -> turnOffLocalVideo());

switchSourceButton = findViewById(R.id.switch_source);

switchSourceButton.setOnClickListener(l -> switchSource());

setVolumeControlStream(AudioManager.STREAM_VOICE_CALL);

}

/**

* Request each required permission if the app doesn't already have it.

*/

private void getAllPermissions() {

// See section on requesting permissions

}

/**

* Create the call agent for placing calls

*/

private void createAgent() {

// See section on creating the call agent

}

/**

* Handle incoming calls

*/

private void handleIncomingCall() {

// See section on answering incoming call

}

/**

* Place a call to the callee id provided in `callee_id` text input.

*/

private void startCall() {

// See section on starting the call

}

/**

* End calls

*/

private void hangUp() {

// See section on ending the call

}

/**

* Mid-call operations

*/

public void turnOnLocalVideo() {

// See section on hiding and showing local video

}

public void turnOffLocalVideo() {

// See section on hiding and showing local video

}

/**

* Switch the active camera to the next available one

*/

public void switchSource() {

// See section on starting a video call

}

}

Request permissions at runtime

For Android 6.0 and later (API level 23), and targetSdkVersion 23 or later, permissions are granted at runtime instead of when the app is installed. In order to support it, getAllPermissions can be implemented to call ActivityCompat.checkSelfPermission and ActivityCompat.requestPermissions for each required permission.

/**

* Request each required permission if the app doesn't already have it.

*/

private void getAllPermissions() {

String[] requiredPermissions = new String[]{Manifest.permission.RECORD_AUDIO, Manifest.permission.CAMERA, Manifest.permission.WRITE_EXTERNAL_STORAGE, Manifest.permission.READ_PHONE_STATE};

ArrayList<String> permissionsToAskFor = new ArrayList<>();

for (String permission : requiredPermissions) {

if (ActivityCompat.checkSelfPermission(this, permission) != PackageManager.PERMISSION_GRANTED) {

permissionsToAskFor.add(permission);

}

}

if (!permissionsToAskFor.isEmpty()) {

ActivityCompat.requestPermissions(this, permissionsToAskFor.toArray(new String[0]), 1);

}

}

Note

When you're designing your app, consider when these permissions should be requested. Permissions should be requested as they are needed, not ahead of time. For more information, see the Android Permissions Guide.
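The outcome of a permission request arrives later, in the activity's onRequestPermissionsResult callback, as two parallel arrays. As a minimal sketch of how that result could be inspected, the hypothetical helper below returns the permissions that weren't granted. PermissionResults and deniedPermissions are illustrative names, not part of the quickstart or the Calling SDK. The sketch is plain Java with no Android dependency; its constant mirrors the value of PackageManager.PERMISSION_GRANTED, which is 0.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration; not part of the quickstart or the Calling SDK.
final class PermissionResults {

    // Mirrors PackageManager.PERMISSION_GRANTED (0) so the sketch runs without Android.
    static final int PERMISSION_GRANTED = 0;

    // Returns the permissions whose matching grant result is not PERMISSION_GRANTED.
    static List<String> deniedPermissions(String[] permissions, int[] grantResults) {
        List<String> denied = new ArrayList<>();
        // An interrupted request can deliver empty arrays, so bound the loop by both lengths.
        int count = Math.min(permissions.length, grantResults.length);
        for (int i = 0; i < count; i++) {
            if (grantResults[i] != PERMISSION_GRANTED) {
                denied.add(permissions[i]);
            }
        }
        return denied;
    }
}
```

In an activity, you could call a helper like this from onRequestPermissionsResult and, for example, keep the video buttons disabled while the camera permission is in the returned list.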

Object model

The following classes and interfaces handle some of the major features of the Azure Communication Services Calling SDK:

| Name | Description |
|---|---|
| CallClient | The main entry point to the Calling SDK. |
| CallAgent | Used to start and manage calls. |
| CommunicationTokenCredential | Used as the token credential to instantiate the CallAgent. |
| CommunicationIdentifier | Used to represent the different types of participants that might be part of a call. |

Create an agent from the user access token

You need a user token to create an authenticated call agent. Generally, this token is generated from a service with authentication specific to the application. For more information on user access tokens, see User access tokens.

For the quickstart, replace <USER_ACCESS_TOKEN> with a user access token generated for your Azure Communication Services resource.

/**

* Create the call agent for placing calls

*/

private void createAgent() {

Context context = this.getApplicationContext();

String userToken = "<USER_ACCESS_TOKEN>";

try {

CommunicationTokenCredential credential = new CommunicationTokenCredential(userToken);

CallClient callClient = new CallClient();

deviceManager = callClient.getDeviceManager(context).get();

callAgent = callClient.createCallAgent(getApplicationContext(), credential).get();

} catch (Exception ex) {

Toast.makeText(context, "Failed to create call agent.", Toast.LENGTH_SHORT).show();

}

}

Start a video call by using the call agent

You can place the call by using the call agent. All you need to do is provide a list of callee IDs and the call options.

To place a call with video, you have to enumerate local cameras by using the deviceManager getCameras API. After you select a camera, use it to construct a LocalVideoStream instance. Then put that instance in a LocalVideoStream array, wrap the array in VideoOptions, set the VideoOptions on StartCallOptions, and pass the options to the startCall method. When the call connects, it automatically starts sending a video stream from the selected camera to the other participant.

private void startCall() {

Context context = this.getApplicationContext();

EditText callIdView = findViewById(R.id.call_id);

String callId = callIdView.getText().toString();

ArrayList<CommunicationIdentifier> participants = new ArrayList<CommunicationIdentifier>();

List<VideoDeviceInfo> cameras = deviceManager.getCameras();

StartCallOptions options = new StartCallOptions();

if(!cameras.isEmpty()) {

currentCamera = getNextAvailableCamera(null);

currentVideoStream = new LocalVideoStream(currentCamera, context);

LocalVideoStream[] videoStreams = new LocalVideoStream[1];

videoStreams[0] = currentVideoStream;

VideoOptions videoOptions = new VideoOptions(videoStreams);

options.setVideoOptions(videoOptions);

showPreview(currentVideoStream);

}

participants.add(new CommunicationUserIdentifier(callId));

call = callAgent.startCall(

context,

participants,

options);

//Subscribe to events on updates of call state and remote participants

remoteParticipantUpdatedListener = this::handleRemoteParticipantsUpdate;

onStateChangedListener = this::handleCallOnStateChanged;

call.addOnRemoteParticipantsUpdatedListener(remoteParticipantUpdatedListener);

call.addOnStateChangedListener(onStateChangedListener);

}

In this quickstart, you rely on the function getNextAvailableCamera to pick the camera that the call uses. The function takes the current camera as input, and iterates through the list of cameras returned by deviceManager.getCameras() to return the camera that follows it, wrapping around at the end of the list. If the argument is null, the function picks the first device on the list. If there are no available cameras when you select Start Call, an audio call starts instead. But if the remote participant answers with video, you can still see the remote video stream.

private VideoDeviceInfo getNextAvailableCamera(VideoDeviceInfo camera) {

List<VideoDeviceInfo> cameras = deviceManager.getCameras();

int currentIndex = 0;

if (camera == null) {

return cameras.isEmpty() ? null : cameras.get(0);

}

for (int i = 0; i < cameras.size(); i++) {

if (camera.getId().equals(cameras.get(i).getId())) {

currentIndex = i;

break;

}

}

int newIndex = (currentIndex + 1) % cameras.size();

return cameras.get(newIndex);

}
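The wrap-around selection is easier to see outside of Android. The following is a minimal sketch of the same idea in plain Java, with camera IDs as strings standing in for VideoDeviceInfo objects. CameraCycler and nextCameraId are illustrative names, not SDK APIs. Unlike the method above, the sketch also returns null when a current camera is passed but the list is empty, which avoids a division by zero in the modulo step.

```java
import java.util.List;

// Illustrative sketch; camera IDs are plain strings instead of VideoDeviceInfo objects.
final class CameraCycler {

    // Returns the ID that follows currentId, wrapping to the start of the list.
    static String nextCameraId(List<String> cameraIds, String currentId) {
        if (cameraIds.isEmpty()) {
            return null; // No camera to pick; the caller falls back to audio only.
        }
        if (currentId == null) {
            return cameraIds.get(0); // No current camera: pick the first one.
        }
        // If the current ID isn't in the list, treat it as index 0, as the quickstart method does.
        int currentIndex = Math.max(0, cameraIds.indexOf(currentId));
        return cameraIds.get((currentIndex + 1) % cameraIds.size());
    }
}
```

With a list of "front" and "back", passing null returns "front", passing "front" returns "back", and passing "back" wraps around to "front".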

After you construct a LocalVideoStream instance, you can create a renderer to display it on the UI.

private void showPreview(LocalVideoStream stream) {

previewRenderer = new VideoStreamRenderer(stream, this);

LinearLayout layout = findViewById(R.id.localvideocontainer);

preview = previewRenderer.createView(new CreateViewOptions(ScalingMode.FIT));

preview.setTag(0);

runOnUiThread(() -> {

layout.addView(preview);

switchSourceButton.setVisibility(View.VISIBLE);

});

}

To allow the user to toggle the local video source, use switchSource. This method picks the next available camera and defines it as the local stream.

public void switchSource() {

if (currentVideoStream != null) {

try {

currentCamera = getNextAvailableCamera(currentCamera);

currentVideoStream.switchSource(currentCamera).get();

} catch (InterruptedException e) {

e.printStackTrace();

} catch (ExecutionException e) {

e.printStackTrace();

}

}

}

Accept an incoming call

You can obtain an incoming call by subscribing to addOnIncomingCallListener on callAgent.

private void handleIncomingCall() {

callAgent.addOnIncomingCallListener((incomingCall) -> {

this.incomingCall = incomingCall;

Executors.newCachedThreadPool().submit(this::answerIncomingCall);

});

}

To accept a call with the video camera on, enumerate the local cameras by using the deviceManager getCameras API. Pick a camera, and construct a LocalVideoStream instance. Set it on acceptCallOptions through VideoOptions before calling the accept method on the incoming call object.

private void answerIncomingCall() {

Context context = this.getApplicationContext();

if (incomingCall == null){

return;

}

AcceptCallOptions acceptCallOptions = new AcceptCallOptions();

List<VideoDeviceInfo> cameras = deviceManager.getCameras();

if(!cameras.isEmpty()) {

currentCamera = getNextAvailableCamera(null);

currentVideoStream = new LocalVideoStream(currentCamera, context);

LocalVideoStream[] videoStreams = new LocalVideoStream[1];

videoStreams[0] = currentVideoStream;

VideoOptions videoOptions = new VideoOptions(videoStreams);

acceptCallOptions.setVideoOptions(videoOptions);

showPreview(currentVideoStream);

}

try {

call = incomingCall.accept(context, acceptCallOptions).get();

} catch (InterruptedException | ExecutionException e) {

e.printStackTrace();

// The call wasn't accepted, so there's no call object to subscribe to.

return;

}

//Subscribe to events on updates of call state and remote participants

remoteParticipantUpdatedListener = this::handleRemoteParticipantsUpdate;

onStateChangedListener = this::handleCallOnStateChanged;

call.addOnRemoteParticipantsUpdatedListener(remoteParticipantUpdatedListener);

call.addOnStateChangedListener(onStateChangedListener);

}

Remote participant and remote video streams

All remote participants are available through the getRemoteParticipants() method on a call instance. Once the call becomes connected (CallState.CONNECTED), we can access the remote participants of the call and handle the remote video streams.

When you start a call or answer an incoming call, you need to subscribe to the addOnRemoteParticipantsUpdatedListener event to handle remote participants.

remoteParticipantUpdatedListener = this::handleRemoteParticipantsUpdate;

call.addOnRemoteParticipantsUpdatedListener(remoteParticipantUpdatedListener);

When you use event listeners that are defined within the same class, bind the listener to a variable. Pass the variable in as an argument to add and remove listener methods.

If you try to pass the listener in directly as an argument, you lose the reference to that listener. Java creates new instances of these listeners, not referencing previously created ones. You can't remove prior instances, because you don’t have a reference to them.
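The effect can be reproduced in plain Java. The following sketch uses a hypothetical ListenerRegistry as a stand-in for an SDK object with add and remove listener methods; none of these names come from the Calling SDK. It relies on the behavior of common JVMs, where each evaluation of a method reference such as this::onEvent at a different place in the code produces a distinct object.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical stand-in for an SDK object that exposes add/remove listener methods.
final class ListenerRegistry {
    private final List<Consumer<String>> listeners = new ArrayList<>();

    void addListener(Consumer<String> listener) {
        listeners.add(listener);
    }

    // Returns true only if this exact listener object was registered.
    boolean removeListener(Consumer<String> listener) {
        return listeners.remove(listener);
    }

    int size() {
        return listeners.size();
    }
}

final class ListenerDemo {
    final ListenerRegistry registry = new ListenerRegistry();
    private Consumer<String> storedListener;

    void onEvent(String event) {
        // Handle the event here.
    }

    // Passes the method reference directly: the remove call gets a different object.
    boolean addAndRemoveInline() {
        registry.addListener(this::onEvent);
        return registry.removeListener(this::onEvent);
    }

    // Binds the method reference to a field first: add and remove see the same object.
    boolean addAndRemoveStored() {
        storedListener = this::onEvent;
        registry.addListener(storedListener);
        return registry.removeListener(storedListener);
    }
}
```

In this sketch, addAndRemoveInline leaves the first listener registered because the second this::onEvent isn't the object that was added, while addAndRemoveStored removes exactly the listener it added.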

Note

When a user joins a call, they can access the current remote participants through the getRemoteParticipants() method. The addOnRemoteParticipantsUpdatedListener event will not trigger for these existing participants. This event will only trigger when a remote participant joins or leaves the call while the user is already in the call.
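One way to cover both cases is to send the participants that are already in the call and the participants reported by the event through the same handler, and to use a set to skip anyone already handled. The following plain-Java sketch illustrates that idea with participant IDs as strings. ParticipantTracker and its methods are hypothetical names for illustration; the set plays the same role as joinedParticipants in this quickstart.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Illustrative sketch; participant IDs are plain strings instead of RemoteParticipant objects.
final class ParticipantTracker {
    private final Set<String> joined = new HashSet<>();
    private final List<String> handled = new ArrayList<>();

    // Call once when the call connects, with the participants that are already in the call.
    void onCallConnected(List<String> existingParticipantIds) {
        handleAdded(existingParticipantIds);
    }

    // Call from the participants-updated listener for participants that join later.
    void onParticipantsAdded(List<String> addedParticipantIds) {
        handleAdded(addedParticipantIds);
    }

    private void handleAdded(List<String> ids) {
        for (String id : ids) {
            // Set.add returns false for an ID that was already handled, so it's skipped.
            if (joined.add(id)) {
                handled.add(id);
            }
        }
    }

    // The IDs that were handled, each exactly once, in the order they were first seen.
    List<String> handledInOrder() {
        return handled;
    }
}
```

Because both entry points share one handler, a participant that shows up in the initial list and again in a later event is set up only once.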

Remote video stream updates

For 1:1 calling, you need to handle added participants. When you remove the remote participant, the call ends. For added participants, you subscribe to addOnVideoStreamsUpdatedListener to handle video stream updates.

public void handleRemoteParticipantsUpdate(ParticipantsUpdatedEvent args) {

handleAddedParticipants(args.getAddedParticipants());

}

private void handleAddedParticipants(List<RemoteParticipant> participants) {

for (RemoteParticipant remoteParticipant : participants) {

if(!joinedParticipants.contains(getId(remoteParticipant))) {

joinedParticipants.add(getId(remoteParticipant));

if (renderRemoteVideo) {

for (RemoteVideoStream stream : remoteParticipant.getVideoStreams()) {

StreamData data = new StreamData(stream, null, null);

streamData.put(stream.getId(), data);

startRenderingVideo(data);

}

}

remoteParticipant.addOnVideoStreamsUpdatedListener(videoStreamsEventArgs -> videoStreamsUpdated(videoStreamsEventArgs));

}

}

}

private void videoStreamsUpdated(RemoteVideoStreamsEvent videoStreamsEventArgs) {

for(RemoteVideoStream stream : videoStreamsEventArgs.getAddedRemoteVideoStreams()) {

StreamData data = new StreamData(stream, null, null);

streamData.put(stream.getId(), data);

if (renderRemoteVideo) {

startRenderingVideo(data);

}

}

for(RemoteVideoStream stream : videoStreamsEventArgs.getRemovedRemoteVideoStreams()) {

stopRenderingVideo(stream);

}

}

public String getId(final RemoteParticipant remoteParticipant) {

final CommunicationIdentifier identifier = remoteParticipant.getIdentifier();

if (identifier instanceof PhoneNumberIdentifier) {

return ((PhoneNumberIdentifier) identifier).getPhoneNumber();

} else if (identifier instanceof MicrosoftTeamsUserIdentifier) {

return ((MicrosoftTeamsUserIdentifier) identifier).getUserId();

} else if (identifier instanceof CommunicationUserIdentifier) {

return ((CommunicationUserIdentifier) identifier).getId();

} else {

return ((UnknownIdentifier) identifier).getId();

}

}

Render remote videos

Create a renderer of the remote video stream, and attach it to the view to start rendering the remote view. Dispose of the view to stop rendering it.

void startRenderingVideo(StreamData data){

if (data.renderer != null) {

return;

}

GridLayout layout = ((GridLayout)findViewById(R.id.remotevideocontainer));

data.renderer = new VideoStreamRenderer(data.stream, this);

data.renderer.addRendererListener(new RendererListener() {

@Override

public void onFirstFrameRendered() {

String text = data.renderer.getSize().toString();

Log.i("MainActivity", "Video rendering at: " + text);

}

@Override

public void onRendererFailedToStart() {

String text = "Video failed to render";

Log.i("MainActivity", text);

}

});

data.rendererView = data.renderer.createView(new CreateViewOptions(ScalingMode.FIT));

data.rendererView.setTag(data.stream.getId());

runOnUiThread(() -> {

GridLayout.LayoutParams params = new GridLayout.LayoutParams(layout.getLayoutParams());

DisplayMetrics displayMetrics = new DisplayMetrics();

getWindowManager().getDefaultDisplay().getMetrics(displayMetrics);

params.height = (int)(displayMetrics.heightPixels / 2.5);

params.width = displayMetrics.widthPixels / 2;

layout.addView(data.rendererView, params);

});

}

void stopRenderingVideo(RemoteVideoStream stream) {

StreamData data = streamData.get(stream.getId());

if (data == null || data.renderer == null) {

return;

}

runOnUiThread(() -> {

GridLayout layout = findViewById(R.id.remotevideocontainer);

for(int i = 0; i < layout.getChildCount(); ++ i) {

View childView = layout.getChildAt(i);

if ((int)childView.getTag() == data.stream.getId()) {

layout.removeViewAt(i);

}

}

});

data.rendererView = null;

// Dispose renderer

data.renderer.dispose();

data.renderer = null;

}

static class StreamData {

RemoteVideoStream stream;

VideoStreamRenderer renderer;

VideoStreamRendererView rendererView;

StreamData(RemoteVideoStream stream, VideoStreamRenderer renderer, VideoStreamRendererView rendererView) {

this.stream = stream;

this.renderer = renderer;

this.rendererView = rendererView;

}

}

Call state update

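The state of a call can change from connected to disconnected. When the call is connected, you handle the remote participant, and when the call is disconnected, you dispose of previewRenderer to stop local video. The handleCallState method that the handler calls isn't shown in this section; it's assumed to process the participants that are already in the call, for example by passing call.getRemoteParticipants() to handleAddedParticipants.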

private void handleCallOnStateChanged(PropertyChangedEvent args) {

if (call.getState() == CallState.CONNECTED) {

runOnUiThread(() -> Toast.makeText(this, "Call is CONNECTED", Toast.LENGTH_SHORT).show());

handleCallState();

}

if (call.getState() == CallState.DISCONNECTED) {

runOnUiThread(() -> Toast.makeText(this, "Call is DISCONNECTED", Toast.LENGTH_SHORT).show());

if (previewRenderer != null) {

previewRenderer.dispose();

}

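// Update views on the UI thread, because this handler can run on a background thread.

runOnUiThread(() -> switchSourceButton.setVisibility(View.INVISIBLE));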

}

}

End a call

End the call by calling the hangUp() function on the call instance. Dispose of previewRenderer to stop local video.

private void hangUp() {

try {

call.hangUp().get();

switchSourceButton.setVisibility(View.INVISIBLE);

} catch (ExecutionException | InterruptedException e) {

e.printStackTrace();

}

if (previewRenderer != null) {

previewRenderer.dispose();

}

}

Hide and show local video

When the call has started, you can stop local video rendering and streaming with turnOffLocalVideo(). This method removes the view that wraps the local renderer, disposes of the renderer, and stops sending the current stream. To resume the stream and render the local preview again, use turnOnLocalVideo(). This method shows the video preview and starts streaming.

public void turnOnLocalVideo() {

List<VideoDeviceInfo> cameras = deviceManager.getCameras();

if(!cameras.isEmpty()) {

try {

currentVideoStream = new LocalVideoStream(currentCamera, this);

showPreview(currentVideoStream);

call.startVideo(this, currentVideoStream).get();

switchSourceButton.setVisibility(View.VISIBLE);

} catch (CallingCommunicationException acsException) {

acsException.printStackTrace();

} catch (ExecutionException | InterruptedException e) {

e.printStackTrace();

}

}

}

public void turnOffLocalVideo() {

try {

LinearLayout container = findViewById(R.id.localvideocontainer);

for (int i = 0; i < container.getChildCount(); ++i) {

Object tag = container.getChildAt(i).getTag();

if (tag != null && (int)tag == 0) {

container.removeViewAt(i);

}

}

switchSourceButton.setVisibility(View.INVISIBLE);

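// The preview might already be disposed, for example after the call ended.

if (previewRenderer != null) {

previewRenderer.dispose();

previewRenderer = null;

}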

call.stopVideo(this, currentVideoStream).get();

} catch (CallingCommunicationException acsException) {

acsException.printStackTrace();

} catch (ExecutionException | InterruptedException e) {

e.printStackTrace();

}

}

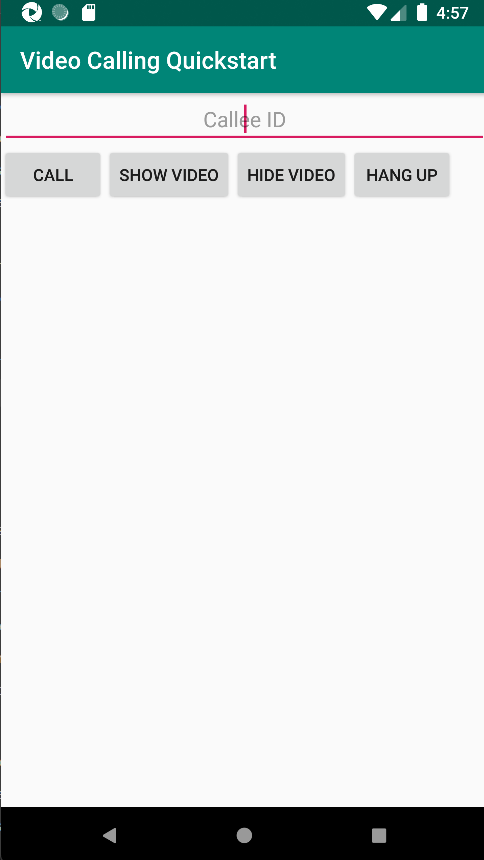

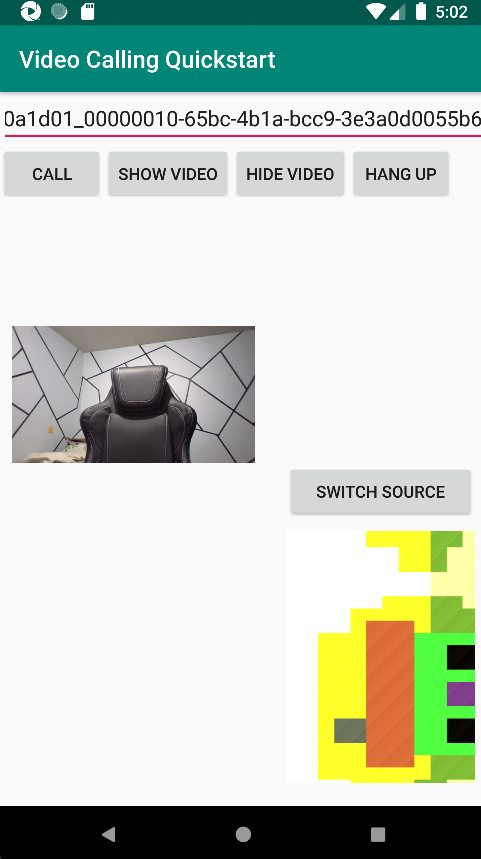

Run the code

You can now launch the app by using the Run App button on the toolbar of Android Studio.

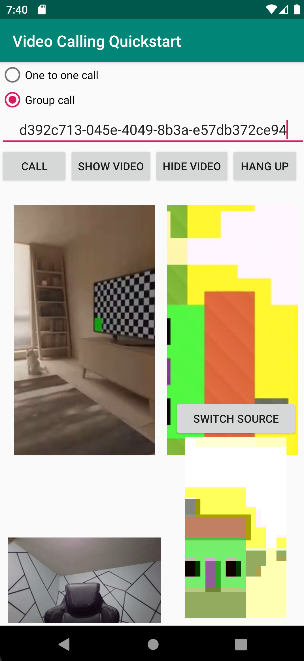

| Completed application | 1:1 call |

|---|---|

|

|

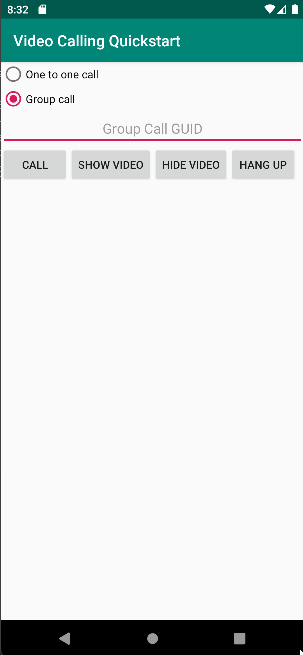

Add group call capability

Now you can update your app to let the user choose between 1:1 calls or group calls.

Update layout

Use radio buttons to select whether the SDK creates a 1:1 call or joins a group call. The radio buttons are at the top, so the first section of app/src/main/res/layout/activity_main.xml looks as follows.

<?xml version="1.0" encoding="utf-8"?>

<androidx.constraintlayout.widget.ConstraintLayout xmlns:android="http://schemas.android.com/apk/res/android"

xmlns:app="http://schemas.android.com/apk/res-auto"

xmlns:tools="http://schemas.android.com/tools"

android:layout_width="match_parent"

android:layout_height="match_parent"

tools:context=".MainActivity">

<LinearLayout

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:orientation="vertical">

<RadioGroup

android:layout_width="match_parent"

android:layout_height="wrap_content">

<RadioButton

android:id="@+id/one_to_one_call"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:text="One to one call" />

<RadioButton

android:id="@+id/group_call"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:text="Group call" />

</RadioGroup>

<EditText

android:id="@+id/call_id"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:ems="10"

android:gravity="center"

android:hint="Callee ID"

android:inputType="textPersonName"

app:layout_constraintBottom_toTopOf="@+id/call_button"

app:layout_constraintEnd_toEndOf="parent"

app:layout_constraintStart_toStartOf="parent"

app:layout_constraintTop_toTopOf="parent"

app:layout_constraintVertical_bias="0.064" />

.

.

.

</androidx.constraintlayout.widget.ConstraintLayout>

Update MainActivity.java

You can now update the elements and logic to decide when to create a 1:1 call, and when to join a group call. The first portion of code requires updates to add dependencies, items, and other configurations.

Dependencies:

import android.widget.RadioButton;

import com.azure.android.communication.calling.GroupCallLocator;

import com.azure.android.communication.calling.JoinCallOptions;

import java.util.UUID;

Global elements:

RadioButton oneToOneCall, groupCall;

Update onCreate():

@Override

protected void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_main);

getAllPermissions();

createAgent();

handleIncomingCall();

Button callButton = findViewById(R.id.call_button);

callButton.setOnClickListener(l -> startCall());

Button hangupButton = findViewById(R.id.hang_up);

hangupButton.setOnClickListener(l -> hangUp());

Button startVideo = findViewById(R.id.show_preview);

startVideo.setOnClickListener(l -> turnOnLocalVideo());

Button stopVideo = findViewById(R.id.hide_preview);

stopVideo.setOnClickListener(l -> turnOffLocalVideo());

switchSourceButton = findViewById(R.id.switch_source);

switchSourceButton.setOnClickListener(l -> switchSource());

setVolumeControlStream(AudioManager.STREAM_VOICE_CALL);

oneToOneCall = findViewById(R.id.one_to_one_call);

oneToOneCall.setOnClickListener(this::onCallTypeSelected);

oneToOneCall.setChecked(true);

groupCall = findViewById(R.id.group_call);

groupCall.setOnClickListener(this::onCallTypeSelected);

}

Update startCall():

private void startCall() {

Context context = this.getApplicationContext();

EditText callIdView = findViewById(R.id.call_id);

String callId = callIdView.getText().toString();

ArrayList<CommunicationIdentifier> participants = new ArrayList<CommunicationIdentifier>();

List<VideoDeviceInfo> cameras = deviceManager.getCameras();

if(oneToOneCall.isChecked()){

StartCallOptions options = new StartCallOptions();

if(!cameras.isEmpty()) {

currentCamera = getNextAvailableCamera(null);

currentVideoStream = new LocalVideoStream(currentCamera, context);

LocalVideoStream[] videoStreams = new LocalVideoStream[1];

videoStreams[0] = currentVideoStream;

VideoOptions videoOptions = new VideoOptions(videoStreams);

options.setVideoOptions(videoOptions);

showPreview(currentVideoStream);

}

participants.add(new CommunicationUserIdentifier(callId));

call = callAgent.startCall(

context,

participants,

options);

}

else{

JoinCallOptions options = new JoinCallOptions();

if(!cameras.isEmpty()) {

currentCamera = getNextAvailableCamera(null);

currentVideoStream = new LocalVideoStream(currentCamera, context);

LocalVideoStream[] videoStreams = new LocalVideoStream[1];

videoStreams[0] = currentVideoStream;

VideoOptions videoOptions = new VideoOptions(videoStreams);

options.setVideoOptions(videoOptions);

showPreview(currentVideoStream);

}

GroupCallLocator groupCallLocator = new GroupCallLocator(UUID.fromString(callId));

call = callAgent.join(

context,

groupCallLocator,

options);

}

remoteParticipantUpdatedListener = this::handleRemoteParticipantsUpdate;

onStateChangedListener = this::handleCallOnStateChanged;

call.addOnRemoteParticipantsUpdatedListener(remoteParticipantUpdatedListener);

call.addOnStateChangedListener(onStateChangedListener);

}
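UUID.fromString throws an IllegalArgumentException when the text can't be parsed, so input typed into the text field is worth checking before it reaches GroupCallLocator. The following is a minimal plain-Java sketch of such a check. GroupIdParser and parse are hypothetical names, not part of the quickstart or the SDK. Note that UUID.fromString accepts some shortened forms, so this guards only against input that can't be parsed at all.

```java
import java.util.Optional;
import java.util.UUID;

// Hypothetical helper for illustration; not part of the quickstart or the Calling SDK.
final class GroupIdParser {

    // Returns the parsed UUID, or an empty Optional when the input can't be parsed.
    static Optional<UUID> parse(String input) {
        if (input == null) {
            return Optional.empty();
        }
        try {
            // Trim first so stray spaces from the text field don't cause a failure.
            return Optional.of(UUID.fromString(input.trim()));
        } catch (IllegalArgumentException ex) {
            return Optional.empty();
        }
    }
}
```

In startCall(), you could call a helper like this on the text field's value and show a message instead of joining when the result is empty.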

Add onCallTypeSelected():

public void onCallTypeSelected(View view) {

boolean checked = ((RadioButton) view).isChecked();

EditText callIdView = findViewById(R.id.call_id);

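// Compare IDs with if/else: resource IDs aren't compile-time constants in recent Android Gradle Plugin versions, so they can't be used as switch case labels.

int id = view.getId();

if (id == R.id.one_to_one_call) {

if (checked){

callIdView.setHint("Callee id");

}

} else if (id == R.id.group_call) {

if (checked){

callIdView.setHint("Group Call GUID");

}

}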

}

Run the upgraded app

At this point, you can launch the app by using the Run App button on the toolbar of Android Studio.

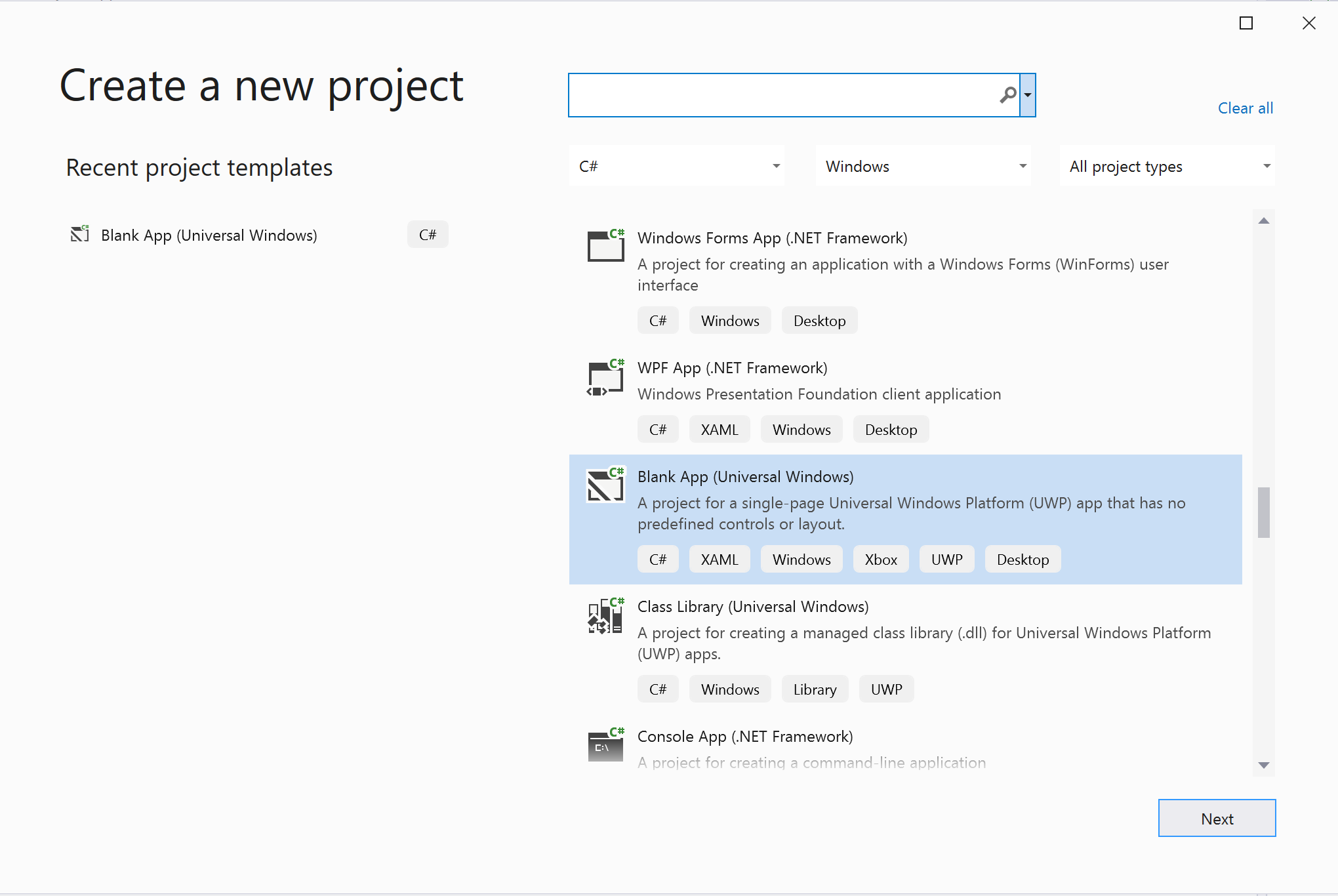

| Screen update | Group call |

|---|---|

|

|

Get started with Azure Communication Services by using the Communication Services calling SDK to add one-on-one video calling to your app. You learn how to start and answer a video call using the Azure Communication Services Calling SDK for iOS.

Sample Code

If you'd like to skip ahead to the end, you can download this quickstart as a sample on GitHub.

Prerequisites

Obtain an Azure account with an active subscription. Create an account for free.

A Mac running Xcode, along with a valid developer certificate installed into your Keychain.

Create an active Communication Services resource. Create a Communication Services resource. You need to record your connection string for this quickstart.

A User Access Token for your Azure Communication Service. You can also use the Azure CLI and run the command with your connection string to create a user and an access token.

az communication identity token issue --scope voip --connection-string "yourConnectionString"

For details, see Use Azure CLI to Create and Manage Access Tokens.

Setting up

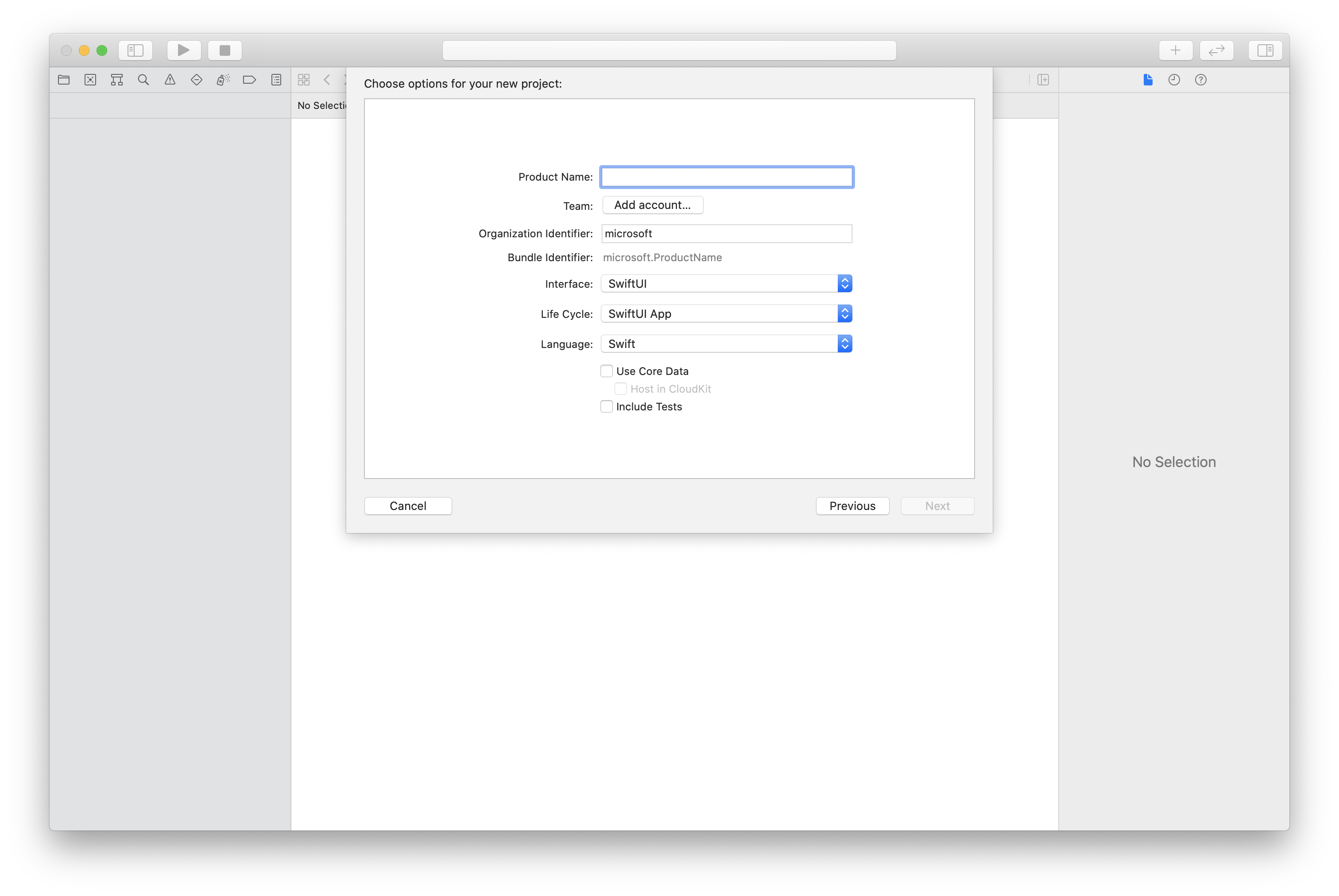

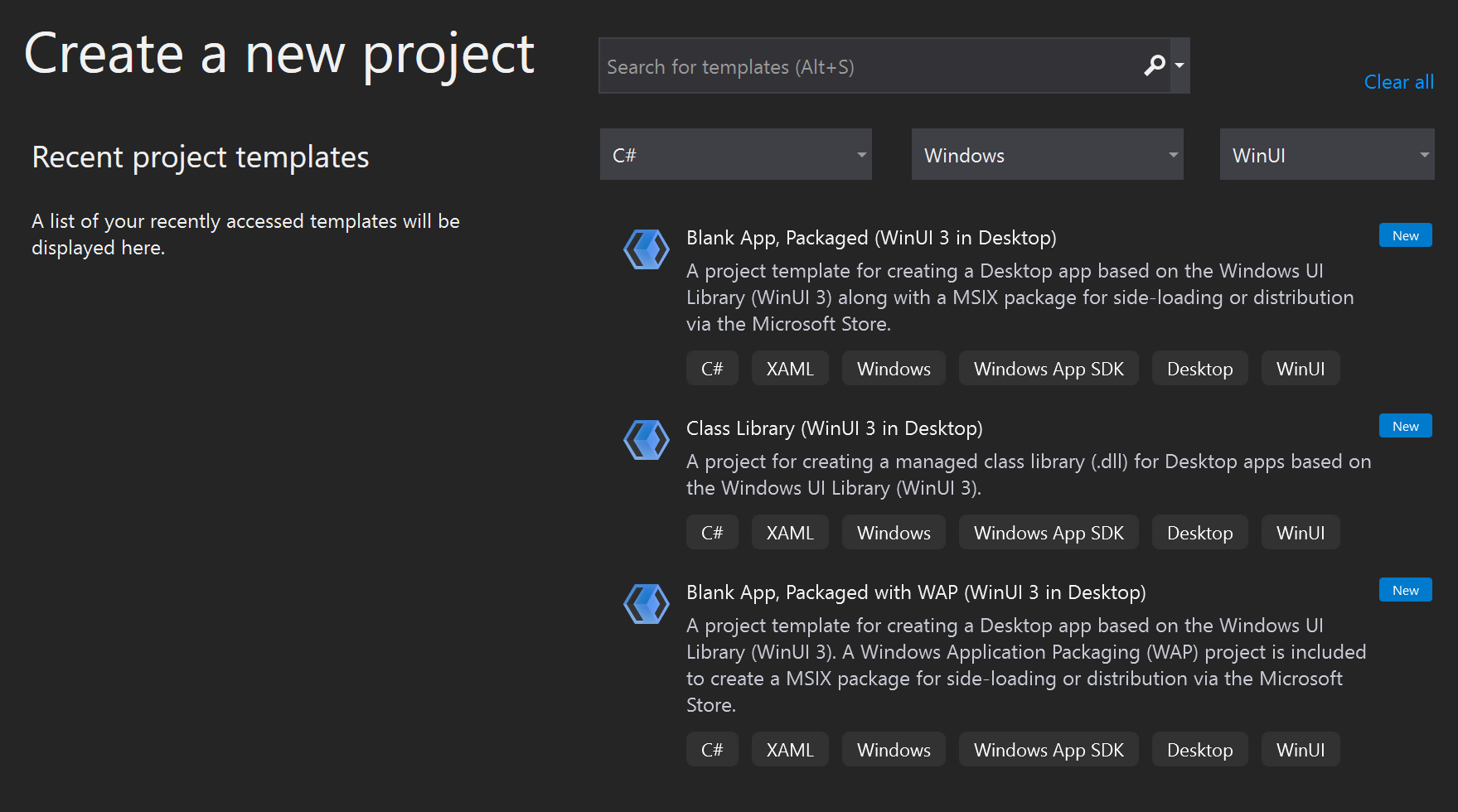

Creating the Xcode project

In Xcode, create a new iOS project and select the Single View App template. This tutorial uses the SwiftUI framework, so you should set the Language to Swift and the User Interface to SwiftUI. You don't create tests during this quickstart, so feel free to uncheck Include Tests.

Installing CocoaPods

Use this guide to install CocoaPods on your Mac.

Install the package and dependencies with CocoaPods

To create a Podfile for your application, open the terminal, navigate to the project folder, and run pod init.

Add the following code to the Podfile and save. See SDK support versions.

platform :ios, '13.0'

use_frameworks!

target 'VideoCallingQuickstart' do

pod 'AzureCommunicationCalling', '~> 1.0.0'

end

Run pod install.

Open the .xcworkspace with Xcode.

Using XCFramework directly

If you aren't using CocoaPods as a dependency manager, you can download the AzureCommunicationCalling.xcframework directly from our release page.

It's important to know that AzureCommunicationCalling has a dependency on AzureCommunicationCommon, so you need to install it in your project as well.

Note

Although AzureCommunicationCommon is a pure Swift package, you can't install it with Swift Package Manager for use with AzureCommunicationCalling. AzureCommunicationCalling is an Objective-C framework, and Swift Package Manager deliberately doesn't support Swift Objective-C interface headers, so the two can't work together when AzureCommunicationCommon is installed that way. Instead, install it through another dependency manager, or generate an xcframework from the AzureCommunicationCommon sources and import it into your project.

Request access to the microphone and camera

To access the device's microphone and camera, you need to update your app's Information Property List with an NSMicrophoneUsageDescription and NSCameraUsageDescription. You set the associated value to a string that includes the dialog the system uses to request access from the user.

Right-click the Info.plist entry of the project tree and select Open As > Source Code. Add the following lines to the top-level <dict> section, and then save the file.

<key>NSMicrophoneUsageDescription</key>

<string>Need microphone access for VOIP calling.</string>

<key>NSCameraUsageDescription</key>

<string>Need camera access for video calling</string>

Set up the app framework

Open your project's ContentView.swift file and add an import declaration to the top of the file to import the AzureCommunicationCalling library and AVFoundation. AVFoundation is used to capture audio permission from code.

import AzureCommunicationCalling

import AVFoundation

Object model

The following classes and interfaces handle some of the major features of the Azure Communication Services Calling SDK for iOS.

| Name | Description |
|---|---|
| CallClient | The CallClient is the main entry point to the Calling SDK. |
| CallAgent | The CallAgent is used to start and manage calls. |
| CommunicationTokenCredential | The CommunicationTokenCredential is used as the token credential to instantiate the CallAgent. |
| CommunicationIdentifier | The CommunicationIdentifier is used to represent the identity of the user, which can be one of the following options: CommunicationUserIdentifier, PhoneNumberIdentifier or CallingApplication. |

Create the Call Agent

Replace the implementation of the ContentView struct with some simple UI controls that enable a user to initiate and end a call. You add business logic to these controls later in this quickstart.

struct ContentView: View {

@State var callee: String = ""

@State var callClient: CallClient?

@State var callAgent: CallAgent?

@State var call: Call?

@State var deviceManager: DeviceManager?

@State var localVideoStream:[LocalVideoStream]?

@State var incomingCall: IncomingCall?

@State var sendingVideo:Bool = false

@State var errorMessage:String = "Unknown"

@State var remoteVideoStreamData:[Int32:RemoteVideoStreamData] = [:]

@State var previewRenderer:VideoStreamRenderer? = nil

@State var previewView:RendererView? = nil

@State var remoteRenderer:VideoStreamRenderer? = nil

@State var remoteViews:[RendererView] = []

@State var remoteParticipant: RemoteParticipant?

@State var remoteVideoSize:String = "Unknown"

@State var isIncomingCall:Bool = false

@State var callObserver:CallObserver?

@State var remoteParticipantObserver:RemoteParticipantObserver?

var body: some View {

NavigationView {

ZStack{

Form {

Section {

TextField("Who would you like to call?", text: $callee)

Button(action: startCall) {

Text("Start Call")

}.disabled(callAgent == nil)

Button(action: endCall) {

Text("End Call")

}.disabled(call == nil)

Button(action: toggleLocalVideo) {

HStack {

Text(sendingVideo ? "Turn Off Video" : "Turn On Video")

}

}

}

}

// Show incoming call banner

if (isIncomingCall) {

HStack() {

VStack {

Text("Incoming call")

.padding(10)

.frame(maxWidth: .infinity, alignment: .topLeading)

}

Button(action: answerIncomingCall) {

HStack {

Text("Answer")

}

.frame(width:80)

.padding(.vertical, 10)

.background(Color(.green))

}

Button(action: declineIncomingCall) {

HStack {

Text("Decline")

}

.frame(width:80)

.padding(.vertical, 10)

.background(Color(.red))

}

}

.frame(maxWidth: .infinity, alignment: .topLeading)

.padding(10)

.background(Color.gray)

}

ZStack{

VStack{

ForEach(remoteViews, id:\.self) { renderer in

ZStack{

VStack{

RemoteVideoView(view: renderer)

.frame(maxWidth: .infinity, maxHeight: .infinity)

.background(Color(.lightGray))

}

}

Button(action: endCall) {

Text("End Call")

}.disabled(call == nil)

Button(action: toggleLocalVideo) {

HStack {

Text(sendingVideo ? "Turn Off Video" : "Turn On Video")

}

}

}

}.frame(maxWidth: .infinity, maxHeight: .infinity, alignment: .topLeading)

VStack{

if(sendingVideo)

{

VStack{

PreviewVideoStream(view: previewView!)

.frame(width: 135, height: 240)

.background(Color(.lightGray))

}

}

}.frame(maxWidth:.infinity, maxHeight:.infinity,alignment: .bottomTrailing)

}

}

.navigationBarTitle("Video Calling Quickstart")

}.onAppear{

// Authenticate the client

// Initialize the CallAgent and access Device Manager

// Ask for permissions

}

}

}

//Functions and Observers

struct PreviewVideoStream: UIViewRepresentable {

let view:RendererView

func makeUIView(context: Context) -> UIView {

return view

}

func updateUIView(_ uiView: UIView, context: Context) {}

}

struct RemoteVideoView: UIViewRepresentable {

let view:RendererView

func makeUIView(context: Context) -> UIView {

return view

}

func updateUIView(_ uiView: UIView, context: Context) {}

}

struct ContentView_Previews: PreviewProvider {

static var previews: some View {

ContentView()

}

}

Authenticate the client

To initialize a CallAgent instance, you need a User Access Token, which enables it to make and receive calls. Refer to the user access token documentation if you don't have a token available.

Once you have a token, add the following code to the onAppear callback in ContentView.swift. You need to replace <USER ACCESS TOKEN> with a valid user access token for your resource:

var userCredential: CommunicationTokenCredential?

do {

userCredential = try CommunicationTokenCredential(token: "<USER ACCESS TOKEN>")

} catch {

print("ERROR: It was not possible to create user credential.")

return

}

Initialize the CallAgent and access Device Manager

To create a CallAgent instance from a CallClient, use the callClient.createCallAgent method that asynchronously returns a CallAgent object once it's initialized. DeviceManager lets you enumerate local devices that can be used in a call to transmit audio/video streams. It also allows you to request permission from a user to access microphone/camera.

self.callClient = CallClient()

self.callClient?.createCallAgent(userCredential: userCredential!) { (agent, error) in

if error != nil {

print("ERROR: It was not possible to create a call agent.")

return

}

else {

self.callAgent = agent

print("Call agent successfully created.")

self.callAgent!.delegate = incomingCallHandler

self.callClient?.getDeviceManager { (deviceManager, error) in

if (error == nil) {

print("Got device manager instance")

self.deviceManager = deviceManager

} else {

print("Failed to get device manager instance")

}

}

}

}
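
The nesting above means the device manager is requested only after the call agent is created successfully. The following is a minimal sketch of that sequencing with stand-in types. FakeClient, SetupError, and setUp are hypothetical names used for illustration and are not part of the Calling SDK, and the completion handlers here run synchronously, unlike the SDK's.

```swift
// Hypothetical stand-ins that mimic the (value, error) completion shape used above.
struct SetupError: Error {}

final class FakeClient {
    var failAgent = false

    func createAgent(completion: (String?, Error?) -> Void) {
        if failAgent {
            completion(nil, SetupError())
        } else {
            completion("agent", nil)
        }
    }

    func getDeviceManager(completion: (String?, Error?) -> Void) {
        completion("deviceManager", nil)
    }
}

// Runs the two setup steps in order and records what happened.
func setUp(client: FakeClient) -> [String] {
    var log: [String] = []
    client.createAgent { agent, error in
        guard error == nil, agent != nil else {
            log.append("agent failed")
            return // The device manager is never requested on this path.
        }
        log.append("agent ready")
        client.getDeviceManager { manager, error in
            if error == nil, manager != nil {
                log.append("device manager ready")
            } else {
                log.append("device manager failed")
            }
        }
    }
    return log
}
```

One consequence of this shape is that a failed agent creation (for example, from an invalid token) also leaves deviceManager unset, so code that depends on it needs to handle the nil case.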

Ask for permissions

Add the following code to the onAppear callback to ask for audio and video permissions.

AVAudioSession.sharedInstance().requestRecordPermission { (granted) in

if granted {

AVCaptureDevice.requestAccess(for: .video) { (videoGranted) in

/* NO OPERATION */

}

}

}

Configure audio session

You use an AVAudioSession object to configure your app’s audio session. Here is an example of enabling Bluetooth audio devices for your app:

func configureAudioSession() -> Error? {

// Retrieve the audio session.

let audioSession: AVAudioSession = AVAudioSession.sharedInstance()

// Set options to allow Bluetooth audio devices.

let options: AVAudioSession.CategoryOptions = .allowBluetooth

var configError: Error?

do {

// Set the audio session category.

try audioSession.setCategory(.playAndRecord, options: options)

print("configureAudioSession successfully")

} catch {

print("configureAudioSession failed")

configError = error

}

return configError

}

Display local video

Before starting a call, you can manage settings related to video. This quickstart shows how to toggle local video before or during a call.

First, access the local cameras with deviceManager. Once the desired camera is selected, construct a LocalVideoStream, create a VideoStreamRenderer, and attach its view to previewView. During the call, use startVideo or stopVideo to start or stop sending the LocalVideoStream to remote participants. The same function also applies when handling incoming calls.

func toggleLocalVideo() {

// toggling video before call starts

if (call == nil)

{

if(!sendingVideo)

{

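// Reuse the CallClient created in onAppear instead of replacing it.

if (self.callClient == nil) {

self.callClient = CallClient()

}

// getDeviceManager completes asynchronously. If the device manager isn't

// available yet, the guard below returns and the user can toggle again.

self.callClient?.getDeviceManager { (deviceManager, error) in

if (error == nil) {

print("Got device manager instance")

self.deviceManager = deviceManager

} else {

print("Failed to get device manager instance")

}

}

guard let deviceManager = deviceManager else {

return

}

// cameras can be empty (for example, on a simulator), so avoid force-unwrapping.

guard let camera = deviceManager.cameras.first else {

print("No camera available")

return

}

let scalingMode = ScalingMode.fit

if (self.localVideoStream == nil) {

self.localVideoStream = [LocalVideoStream]()

}

localVideoStream!.append(LocalVideoStream(camera: camera))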

previewRenderer = try! VideoStreamRenderer(localVideoStream: localVideoStream!.first!)

previewView = try! previewRenderer!.createView(withOptions: CreateViewOptions(scalingMode:scalingMode))

self.sendingVideo = true

}

else{

self.sendingVideo = false

self.previewView = nil

self.previewRenderer!.dispose()

self.previewRenderer = nil

}

}

// toggle local video during the call

else{

if (sendingVideo) {

call!.stopVideo(stream: localVideoStream!.first!) { (error) in

if (error != nil) {

print("cannot stop video")

}

else {

self.sendingVideo = false

self.previewView = nil

self.previewRenderer!.dispose()

self.previewRenderer = nil

}

}

}

else {

guard let deviceManager = deviceManager else {

return

}

// cameras can be empty (for example, on a simulator), so avoid force-unwrapping.

guard let camera = deviceManager.cameras.first else {

print("No camera available")

return

}

let scalingMode = ScalingMode.fit

if (self.localVideoStream == nil) {

self.localVideoStream = [LocalVideoStream]()

}

localVideoStream!.append(LocalVideoStream(camera: camera))

previewRenderer = try! VideoStreamRenderer(localVideoStream: localVideoStream!.first!)

previewView = try! previewRenderer!.createView(withOptions: CreateViewOptions(scalingMode:scalingMode))

call!.startVideo(stream:(localVideoStream?.first)!) { (error) in

if (error != nil) {

print("cannot start video")

}

else {

self.sendingVideo = true

}

}

}

}

}
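
The branching in toggleLocalVideo depends on two facts: whether a call exists, and whether video is currently being sent. The following sketch restates that decision as a small lookup, assuming nothing beyond the code above. VideoToggleAction and toggleAction are hypothetical names used for illustration and are not part of the Calling SDK.

```swift
// Hypothetical summary of the four branches in toggleLocalVideo().
enum VideoToggleAction: Equatable {
    case startPreview        // Before a call: render the local camera only.
    case stopPreview         // Before a call: dispose of the preview renderer.
    case startPreviewAndSend // During a call: render locally, then call startVideo.
    case stopSending         // During a call: call stopVideo, then dispose of the preview.
}

func toggleAction(hasActiveCall: Bool, sendingVideo: Bool) -> VideoToggleAction {
    switch (hasActiveCall, sendingVideo) {
    case (false, false): return .startPreview
    case (false, true):  return .stopPreview
    case (true, false):  return .startPreviewAndSend
    case (true, true):   return .stopSending
    }
}
```

Note that during a call, sendingVideo changes only inside the startVideo or stopVideo completion handler, so a failed request leaves the flag as it was.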

Place an outgoing call

The startCall method is set as the action performed when the Start Call button is tapped. In this quickstart, outgoing calls are audio only by default. To start a call with video, set VideoOptions with the LocalVideoStream and pass it in startCallOptions to set the initial options for the call.

func startCall() {

let startCallOptions = StartCallOptions()

if(sendingVideo)

{

if (self.localVideoStream == nil) {

self.localVideoStream = [LocalVideoStream]()

}

let videoOptions = VideoOptions(localVideoStreams: localVideoStream!)

startCallOptions.videoOptions = videoOptions

}

let callees:[CommunicationIdentifier] = [CommunicationUserIdentifier(self.callee)]

self.callAgent?.startCall(participants: callees, options: startCallOptions) { (call, error) in

setCallAndObserver(call: call, error: error)

}

}

CallObserver and RemoteParticipantObserver are used to manage mid-call events and remote participants. We set the observers in the setCallAndObserver function.

func setCallAndObserver(call: Call!, error: Error?) {

if error == nil {

self.call = call

self.callObserver = CallObserver(self)

self.call!.delegate = self.callObserver

self.remoteParticipantObserver = RemoteParticipantObserver(self)

} else {

print("Failed to get call object")

}

}

Answer an incoming call

To answer an incoming call, implement an IncomingCallHandler that displays the incoming call banner so the user can answer or decline the call. Put the following implementation in IncomingCallHandler.swift.

final class IncomingCallHandler: NSObject, CallAgentDelegate, IncomingCallDelegate {

public var contentView: ContentView?

private var incomingCall: IncomingCall?

private static var instance: IncomingCallHandler?

static func getOrCreateInstance() -> IncomingCallHandler {

if let c = instance {

return c

}

instance = IncomingCallHandler()

return instance!

}

private override init() {}

public func callAgent(_ callAgent: CallAgent, didReceiveIncomingCall incomingCall: IncomingCall) {

self.incomingCall = incomingCall

self.incomingCall?.delegate = self

contentView?.showIncomingCallBanner(self.incomingCall!)

}

public func callAgent(_ callAgent: CallAgent, didUpdateCalls args: CallsUpdatedEventArgs) {

if let removedCall = args.removedCalls.first {

contentView?.callRemoved(removedCall)

self.incomingCall = nil

}

}

}
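
The handler above keeps a single shared instance through getOrCreateInstance. As a point of comparison, the following sketch shows the same single-instance idea written with a static let property, which Swift initializes lazily and only once, even when accessed from multiple threads. BannerHandler and its members are hypothetical stand-ins and are not part of the Calling SDK.

```swift
// Hypothetical stand-in that illustrates the single-instance pattern only.
final class BannerHandler {
    // Created on first access; later accesses return the same object.
    static let shared = BannerHandler()

    // Identifier of the call currently awaiting an answer, if any.
    private(set) var pendingCallId: String?

    // A private initializer prevents callers from creating further instances.
    private init() {}

    func didReceive(callId: String) {
        pendingCallId = callId
    }

    func didRemoveCall() {
        pendingCallId = nil
    }
}
```

Either form gives every caller the same handler, which is what lets the onAppear callback and the CallAgent delegate share one incoming call state.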

We need to create an instance of IncomingCallHandler by adding the following code to the onAppear callback in ContentView.swift:

let incomingCallHandler = IncomingCallHandler.getOrCreateInstance()

incomingCallHandler.contentView = self

Set a delegate on the CallAgent after the CallAgent is successfully created:

self.callAgent!.delegate = incomingCallHandler

Once there's an incoming call, the IncomingCallHandler calls the showIncomingCallBanner function to display the answer and decline buttons.

func showIncomingCallBanner(_ incomingCall: IncomingCall?) {

isIncomingCall = true

self.incomingCall = incomingCall

}

The actions attached to the answer and decline buttons are implemented in the following code. To answer the call with video, turn on the local video and set the localVideoStream in AcceptCallOptions.

func answerIncomingCall() {

isIncomingCall = false

let options = AcceptCallOptions()

if (self.incomingCall != nil) {

guard let deviceManager = deviceManager else {

return

}

if (self.localVideoStream == nil) {

self.localVideoStream = [LocalVideoStream]()

}

if(sendingVideo)