Caution

This article references CentOS, a Linux distribution that has reached end-of-support status. Consider your use and plan accordingly. For more information, see the CentOS End Of Life guidance.

This tutorial describes how to connect to Azure Data Box Blob storage via REST APIs over http or https. It also describes the steps required to copy data to Data Box Blob storage once you're connected and to prepare the Data Box to ship.

This tutorial covers the following topics:

- Prerequisites

- Connect to Data Box Blob storage via http or https

- Copy data to Data Box

Prerequisites

Before you begin, make sure that:

- You've completed the Tutorial: Set up Azure Data Box.

- You've received your Data Box and the order status in the portal is Delivered.

- You review the system requirements for Data Box Blob storage and are familiar with supported versions of APIs, SDKs, and tools.

- You have a host computer that has the data that you want to copy over to Data Box. Your host computer must:

  - Run a supported operating system.

  - Be connected to a high-speed network. We strongly recommend that you have at least one 10-GbE connection. If a 10-GbE connection isn't available, you can use a 1-GbE data link, but copy speeds are reduced.

- Download AzCopy V10 on your host computer. AzCopy is used to copy data to Azure Data Box Blob storage from your host computer.

Before you begin, make sure that:

- You've completed the Tutorial: Set up Azure Data Box.

- You've received your Data Box and the order status in the portal is Delivered.

- You review the system requirements for Data Box Blob storage and are familiar with supported versions of APIs, SDKs, and tools.

- You have a host computer that has the data that you want to copy over to Data Box. Your host computer must:

  - Run a supported operating system.

  - Be connected to a high-speed network. We strongly recommend that you have at least one 100-GbE connection. If a 100-GbE connection isn't available, you can use a 10-GbE or 1-GbE data link, but copy speeds are reduced.

- Download AzCopy V10 on your host computer. AzCopy is used to copy data to Azure Data Box Blob storage from your host computer.
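To get a rough sense of how link speed affects copy time, the following sketch computes a theoretical lower bound for a transfer. It assumes the link is fully used and ignores protocol overhead, disk throughput, and small-file effects, so real copies take longer. The function name and the 50-TB example size are illustrative and not taken from the Data Box documentation.

```python
def min_transfer_hours(data_tb: float, link_gbps: float) -> float:
    """Lower bound on copy time in hours for data_tb terabytes over a link_gbps link."""
    if data_tb < 0 or link_gbps <= 0:
        raise ValueError("data size must be non-negative and link speed positive")
    bits = data_tb * 1e12 * 8           # decimal terabytes to bits
    seconds = bits / (link_gbps * 1e9)  # gigabits per second to bits per second
    return seconds / 3600


if __name__ == "__main__":
    # Compare the link speeds mentioned in the prerequisites for a 50-TB copy.
    for gbps in (1, 10, 100):
        hours = min_transfer_hours(50, gbps)
        print(f"{gbps:>3} GbE: at least {hours:.1f} hours for 50 TB")
```

Treat the result as a planning floor only; measured throughput on your own network is the better guide.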

Connect via http or https

You can connect to Data Box Blob storage over http or https.

- Https is the secure and recommended way to connect to Data Box Blob storage.

- Http is used when connecting over trusted networks.

The steps to connect are different when you connect to Data Box Blob storage over http or https.

Connect via http

Connection to Data Box Blob storage REST APIs over http requires the following steps:

- Add the device IP and blob service endpoint to the remote host

- Configure partner software and verify the connection

Each of these steps is described in the following sections.

Add device IP address and blob service endpoint

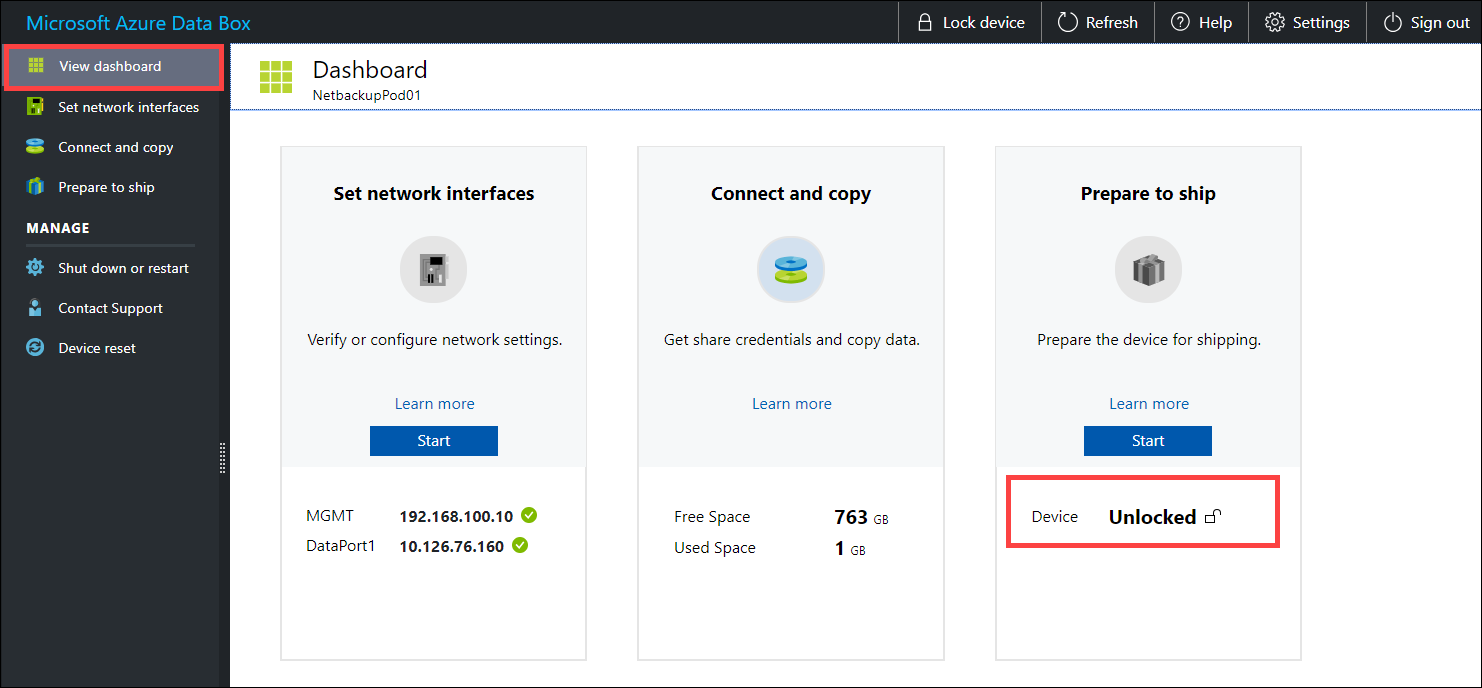

Sign into the Data Box device. Ensure it is unlocked.

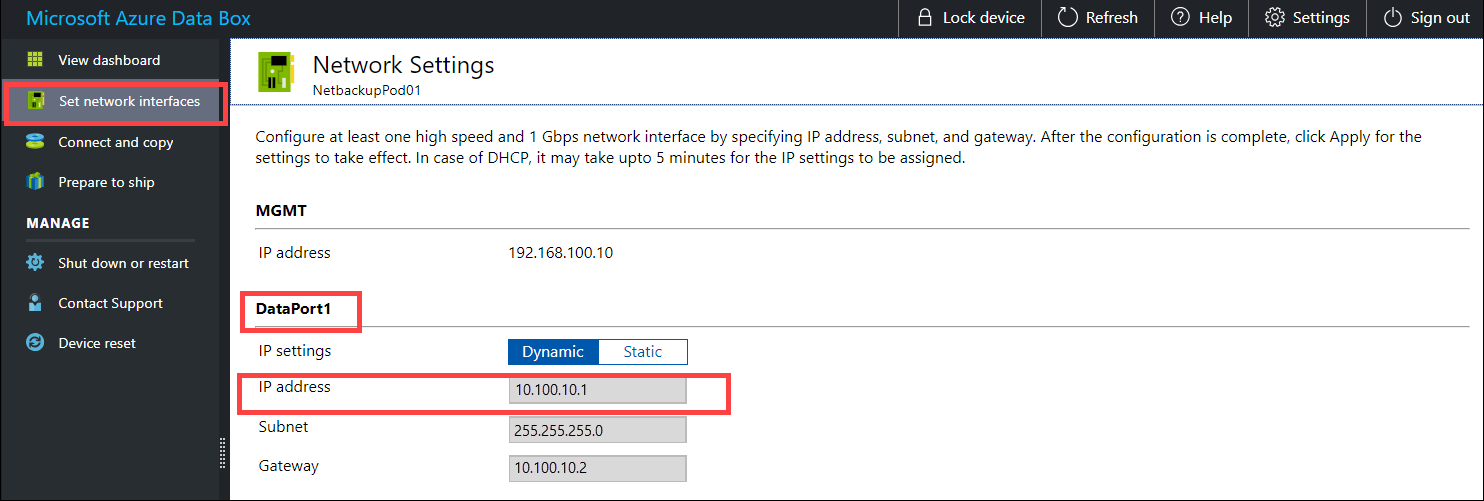

Go to Set network interfaces. Make a note of the device IP address for the network interface used to connect to the client.

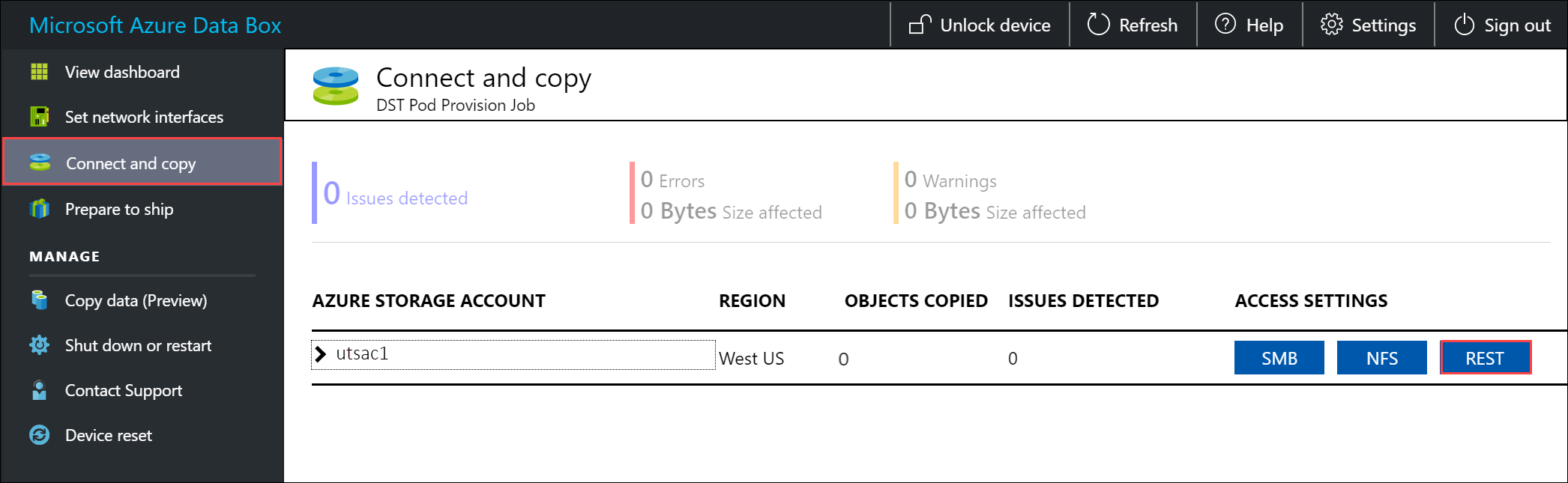

Go to Connect and copy and select REST.

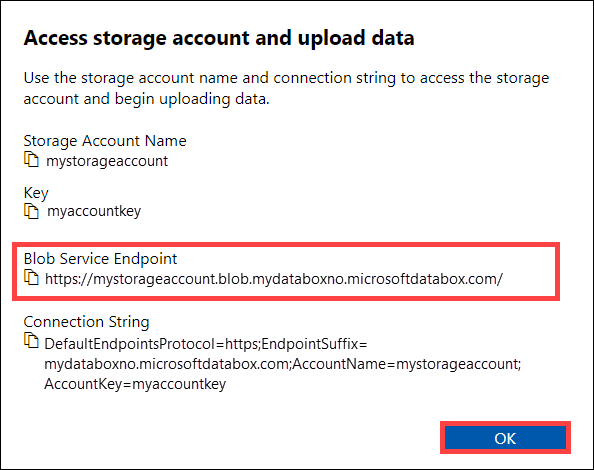

From the Access Storage account and upload data dialog, copy the Blob Service Endpoint.

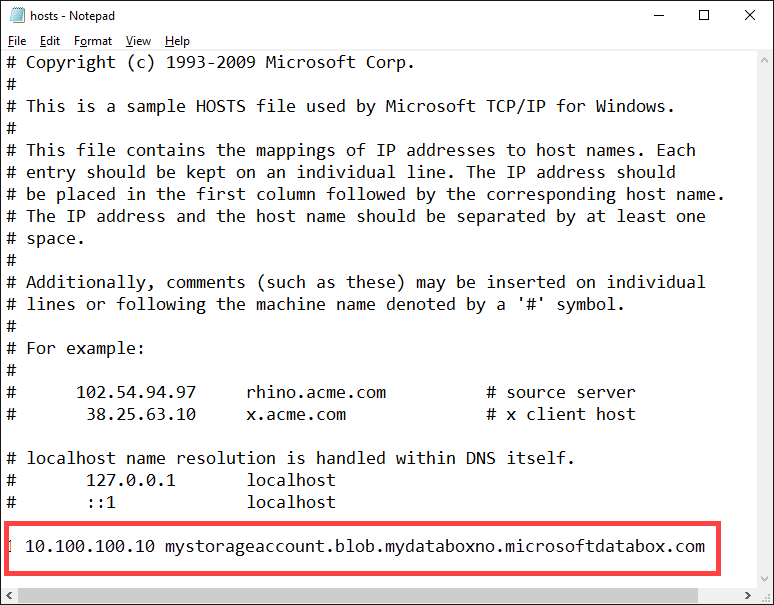

Start Notepad as an administrator, and then open the hosts file located at C:\Windows\System32\Drivers\etc.

Add the following entry to your hosts file:

<device IP address> <Blob service endpoint>

For reference, use the following image. Save the hosts file.
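As a minimal sketch of the hosts entry described above, the following helper validates the device IP address and formats the line. The function name is hypothetical, and stripping a leading scheme or trailing path from the endpoint is an assumption made here because a hosts file maps addresses to bare host names.

```python
import ipaddress


def hosts_entry(device_ip: str, blob_endpoint: str) -> str:
    """Return one hosts-file line mapping the device IP to the endpoint host name."""
    # Raises ValueError if the text is not a valid IPv4 or IPv6 address.
    ip = ipaddress.ip_address(device_ip.strip())

    # Assumption: the endpoint may have been copied as a URL, so drop
    # any scheme and path and keep only the host name.
    host = blob_endpoint.strip()
    if "://" in host:
        host = host.split("://", 1)[1]
    host = host.split("/", 1)[0]
    if not host:
        raise ValueError("blob service endpoint is empty")

    return f"{ip} {host}"


if __name__ == "__main__":
    # Placeholder values; substitute the ones shown in your local web UI.
    print(hosts_entry("10.0.0.5", "https://myaccount.blob.SERIAL.microsoftdatabox.com/"))
```

Printing the line rather than writing it keeps the sketch safe to run; editing the hosts file itself still requires administrator rights.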

Verify connection and configure partner software

Configure the partner software to connect to the client. The partner software typically needs the following information (details may vary), which you gathered from the Connect and copy page of the local web UI in the previous step:

- Storage account name

- Access key

- Blob service endpoint
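Some tools accept these three values as a single Azure Storage connection string with an explicit blob endpoint. The sketch below assembles one; whether your partner software accepts a connection string at all is an assumption, and the function name is hypothetical.

```python
def build_connection_string(account_name: str, access_key: str,
                            blob_endpoint: str, use_http: bool = False) -> str:
    """Combine account name, access key, and blob endpoint into a connection string."""
    protocol = "http" if use_http else "https"

    # Accept the endpoint with or without a scheme and normalize it.
    host = blob_endpoint.strip().split("://", 1)[-1].rstrip("/")
    if not host:
        raise ValueError("blob service endpoint is empty")

    parts = [
        f"DefaultEndpointsProtocol={protocol}",
        f"AccountName={account_name}",
        f"AccountKey={access_key}",
        f"BlobEndpoint={protocol}://{host}",
    ]
    return ";".join(parts)


if __name__ == "__main__":
    # Placeholder values only; never commit a real access key to source control.
    print(build_connection_string("myaccount", "<key>",
                                  "myaccount.blob.SERIAL.microsoftdatabox.com"))
```

The `use_http` flag mirrors the choice between the http and https connection paths described in this tutorial.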

To verify that the connection is successfully established, use Storage Explorer to attach to an external storage account. If you don't have Storage Explorer, download and install it first.

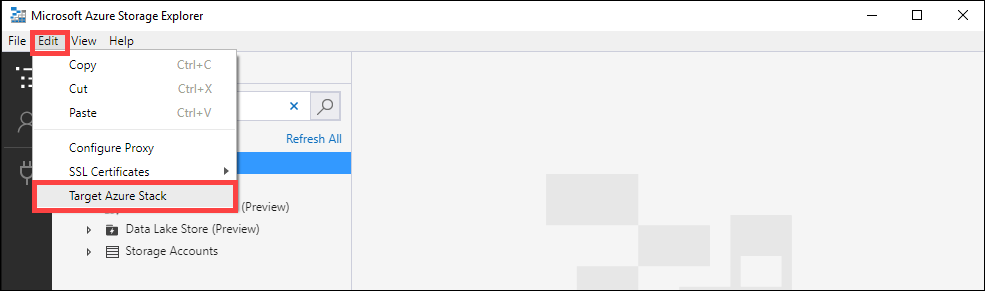

The first time you use Storage Explorer, you need to perform the following steps:

From the top command bar, go to Edit > Target Azure Stack.

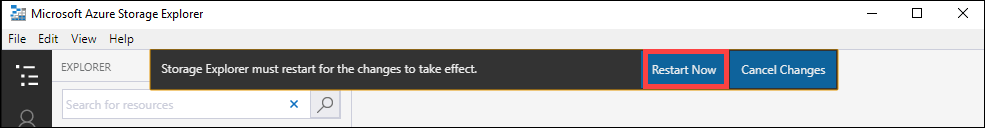

Restart the Storage Explorer for the changes to take effect.

Follow these steps to connect to the storage account and verify the connection.

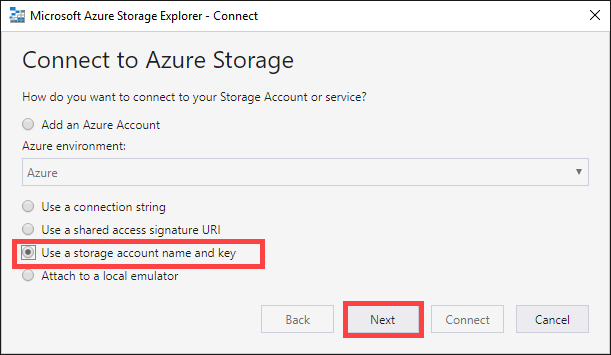

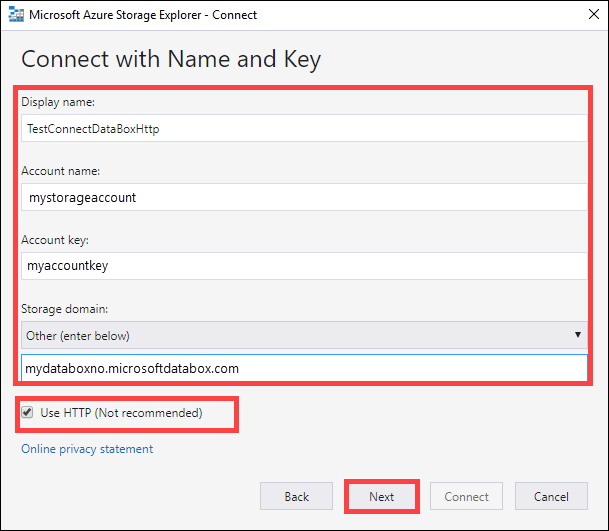

In Storage Explorer, open the Connect to Azure Storage dialog and select Use a storage account name and key.

Paste your Account name and Account key (the key 1 value from the Connect and copy page in the local web UI). For Storage endpoints domain, select Other (enter below), and then provide the blob service endpoint as shown below. Select the Use HTTP option only if you're transferring over http. If you're using https, leave the option unselected. Select Next.

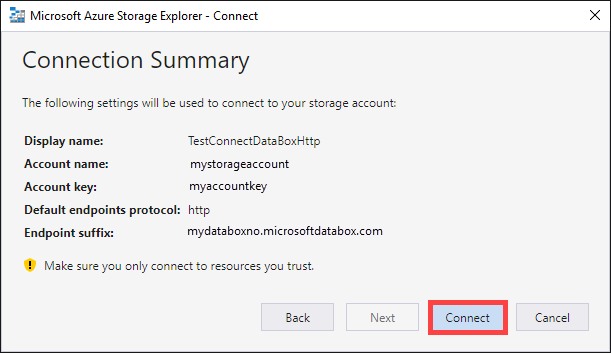

In the Connection Summary dialog, review the provided information. Select Connect.

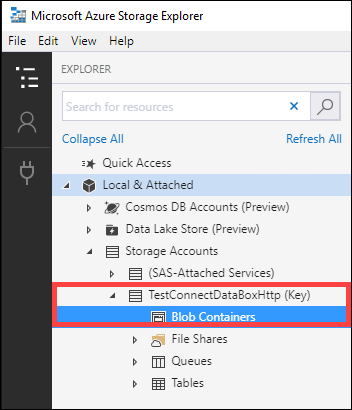

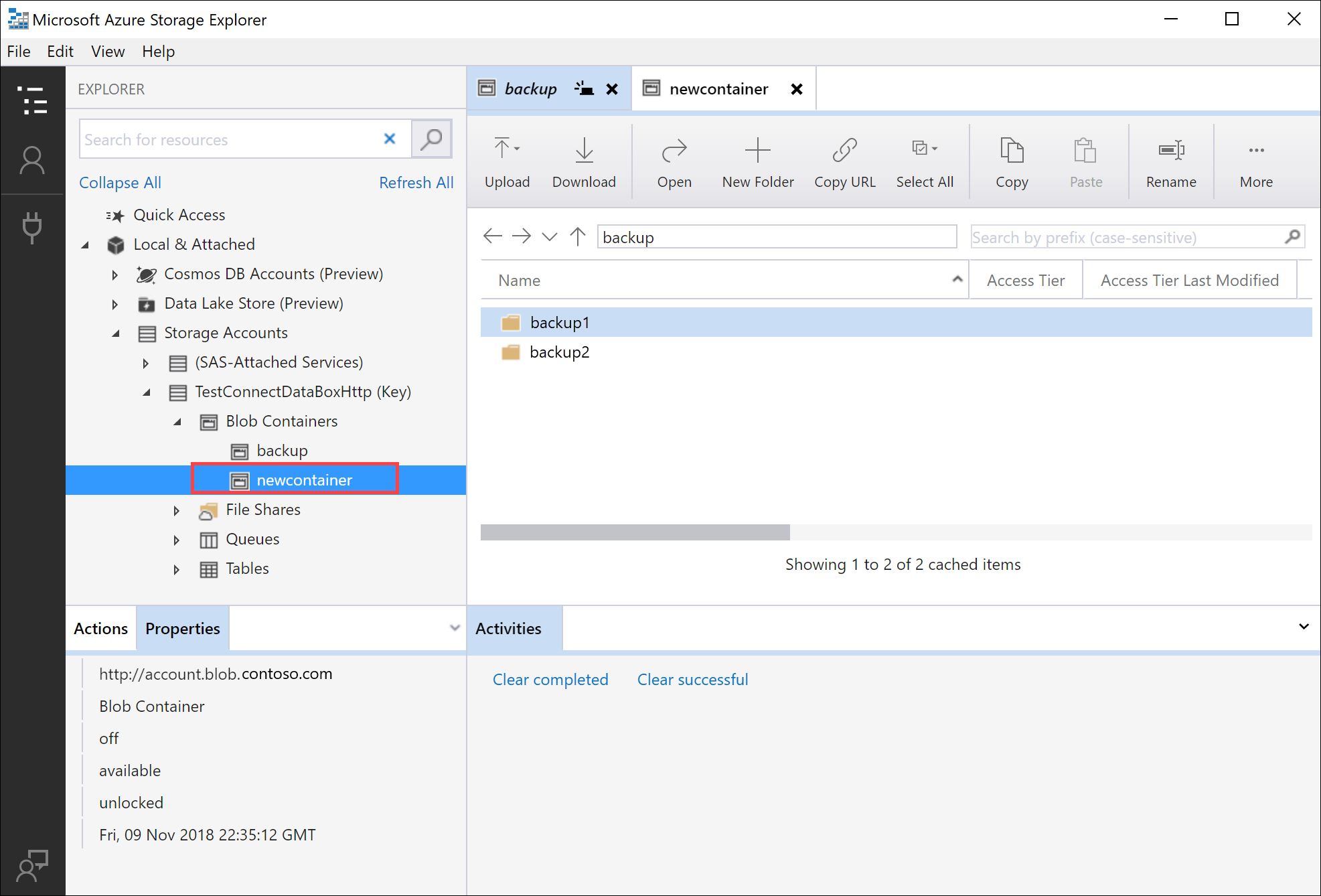

The account that you successfully added is displayed in the left pane of Storage Explorer with (External, Other) appended to its name. Click Blob Containers to view the container.

Connect via https

Connection to Azure Blob storage REST APIs over https requires the following steps:

- Download the certificate from Azure portal. This certificate is used for connecting to the web UI and Azure Blob storage REST APIs.

- Import the certificate on the client or remote host.

- Add the device IP and blob service endpoint to the client or remote host.

- Configure partner software and verify the connection.

Each of these steps is described in the following sections.

Download certificate

Use the Azure portal to download the certificate.

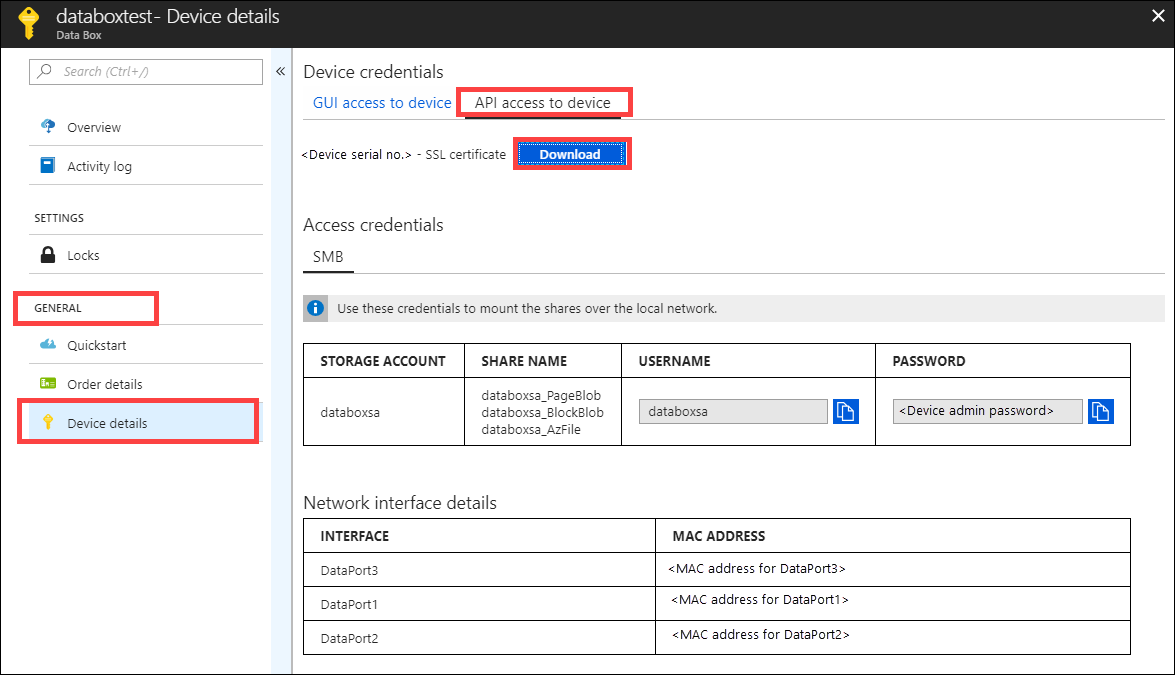

Sign into the Azure portal.

Go to your Data Box order and navigate to General > Device details.

Under Device credentials, go to API access to device. Select Download. This action downloads a <your order name>.cer certificate file. Save this file and install it on the client or host computer you use to connect to the device.

Import certificate

Accessing Data Box Blob storage over HTTPS requires a TLS/SSL certificate for the device. The way in which this certificate is made available to the client application varies from application to application and across operating systems and distributions. Some applications can access the certificate after importing it into the system's certificate store, while other applications don't make use of that mechanism.

Specific information for some applications is mentioned in this section. For more information on other applications, see the documentation for the application and the operating system used.

Follow these steps to import the .cer file into the root store of a Windows or Linux client. On a Windows system, you can use Windows PowerShell or the Windows Server UI to import and install the certificate on your system.

Use Windows PowerShell

Start a Windows PowerShell session as an administrator.

At the command prompt, type:

Import-Certificate -FilePath C:\temp\localuihttps.cer -CertStoreLocation Cert:\LocalMachine\Root

Use Windows Server UI

Right-click the

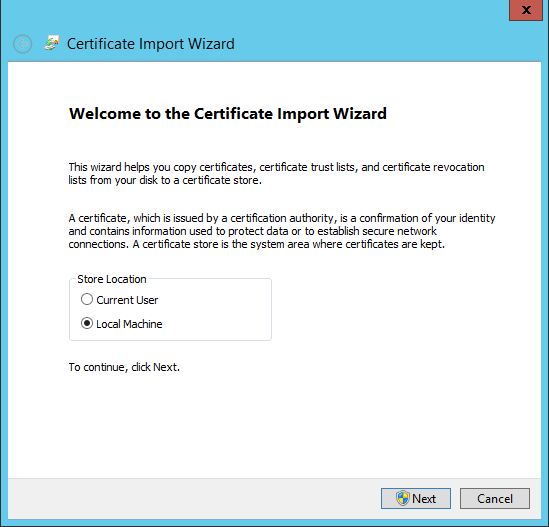

.cerfile and select Install certificate. This action starts the Certificate Import Wizard.For Store location, select Local Machine, and then select Next.

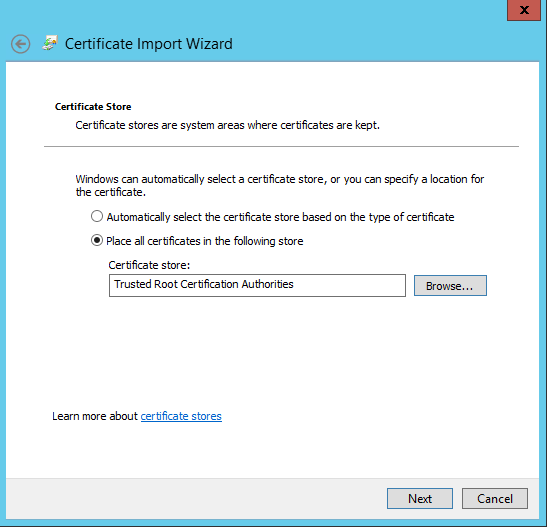

Select Place all certificates in the following store, and then select Browse. Navigate to the root store of your remote host, and then select Next.

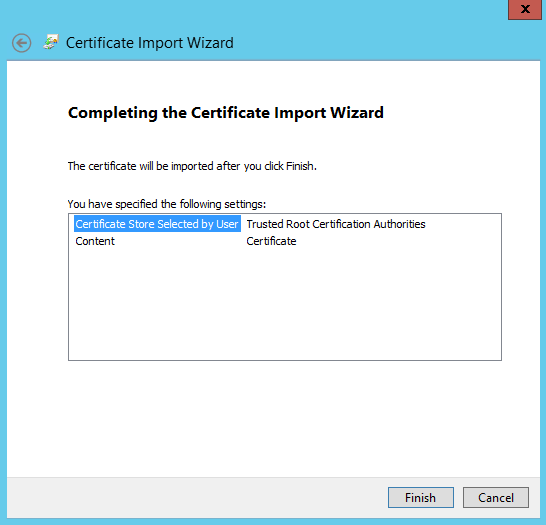

Select Finish. A message that tells you that the import was successful appears.

Use a Linux system

The method to import a certificate varies by distribution.

Several, such as Ubuntu and Debian, use the update-ca-certificates command.

- Rename the Base64-encoded certificate file to have a .crt extension and copy it into the /usr/local/share/ca-certificates directory.

- Run the command update-ca-certificates.

Recent versions of RHEL, Fedora, and CentOS use the update-ca-trust command.

- Copy the certificate file into the /etc/pki/ca-trust/source/anchors directory.

- Run update-ca-trust.

Consult the documentation specific to your distribution for details.
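The Ubuntu and Debian steps above call for a Base64-encoded certificate file. As a hedged sketch, the helper below returns the certificate as Base64 (PEM) text, converting from binary DER only when no PEM header is found. Whether your downloaded .cer file needs conversion is an assumption to verify; the function name is hypothetical, and the conversion uses the standard library `ssl.DER_cert_to_PEM_cert` call, which re-encodes the bytes without validating the certificate.

```python
import ssl

PEM_HEADER = b"-----BEGIN CERTIFICATE-----"


def ensure_pem(cert_bytes: bytes) -> str:
    """Return certificate content as PEM text, converting from DER if needed."""
    if PEM_HEADER in cert_bytes:
        # Already Base64-encoded with PEM markers; return it unchanged as text.
        return cert_bytes.decode("ascii")
    # Otherwise treat the content as binary DER and wrap it in PEM markers.
    return ssl.DER_cert_to_PEM_cert(cert_bytes)


if __name__ == "__main__":
    import sys

    # Usage: python ensure_pem.py <input.cer> <output.crt>
    with open(sys.argv[1], "rb") as src:
        pem_text = ensure_pem(src.read())
    with open(sys.argv[2], "w", encoding="ascii") as dst:
        dst.write(pem_text)
```

The output file can then be copied into the distribution's certificate directory as described above.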

Add device IP address and blob service endpoint

Follow the same steps that you used to add the device IP address and blob service endpoint when connecting over http.

Configure partner software and verify connection

Follow the steps to Configure partner software that you used while connecting over http. The only difference is that you should leave the Use http option unchecked.

Copy data to Data Box

After one or more Data Box shares are connected, the next step is to copy data. Before you initiate data copy operations, consider the following limitations:

- While copying data, ensure that the data size conforms to the size limits described in the Azure storage and Data Box limits.

- Simultaneous uploads by Data Box and another non-Data Box application could potentially result in upload job failures and data corruption.

Important

Make sure that you maintain a copy of the source data until you can confirm that your data has been copied into Azure Storage.

In this tutorial, AzCopy is used to copy data to Data Box Blob storage. If you prefer a GUI-based tool, you can also use Azure Storage Explorer or other partner software to copy the data.

The copy procedure has the following steps:

- Create a container

- Upload contents of a folder to Data Box Blob storage

- Upload modified files to Data Box Blob storage

Each of these steps is described in detail in the following sections.

Create a container

The first step is to create a container, because blobs are always uploaded into a container. Containers organize groups of blobs like you organize files in folders on your computer. Follow these steps to create a blob container.

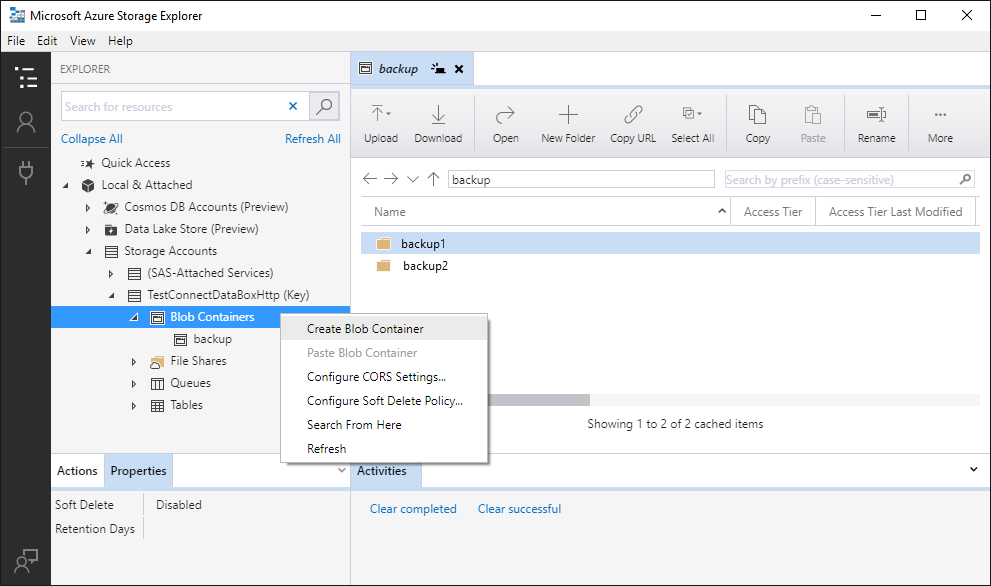

Open Storage Explorer.

In the left pane, expand the storage account within which you wish to create the blob container.

Right-click Blob Containers, and from the context menu, select Create Blob Container.

A text box appears below the Blob Containers folder. Enter the name for your blob container. See the Create the container and set permissions for information on rules and restrictions on naming blob containers.

Press Enter when done to create the blob container, or Esc to cancel. After successful creation, the blob container is displayed under the selected storage account's Blob Containers folder.
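Before typing a name, it can help to check it against the Azure Blob container naming rules: 3 to 63 characters, lowercase letters, digits, and hyphens only, starting and ending with a letter or digit, with no consecutive hyphens. The validator below is a minimal sketch of those rules with a hypothetical function name; it doesn't cover special system containers such as `$root`.

```python
import re

# One letter or digit, then any number of (optional hyphen + letter or digit).
# This rejects leading, trailing, and consecutive hyphens.
_CONTAINER_NAME = re.compile(r"[a-z0-9](?:-?[a-z0-9])*")


def is_valid_container_name(name: str) -> bool:
    """Check a proposed blob container name against the basic naming rules."""
    if not 3 <= len(name) <= 63:
        return False
    return _CONTAINER_NAME.fullmatch(name) is not None


if __name__ == "__main__":
    for candidate in ("my-container", "My-Container", "ab", "data--2024"):
        print(candidate, is_valid_container_name(candidate))
```

The linked naming article remains the authoritative source if the rules and this sketch ever disagree.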

Upload the contents of a folder to Data Box Blob storage

Use AzCopy to upload all files within a folder to Blob storage on Windows or Linux. To upload all blobs in a folder, enter the following AzCopy command:

Linux

azcopy \
    --source /mnt/myfolder \
    --destination https://data-box-storage-account-name.blob.device-serial-no.microsoftdatabox.com/container-name/ \
    --dest-key <key> \
    --recursive

Windows

AzCopy /Source:C:\myfolder /Dest:https://data-box-storage-account-name.blob.device-serial-no.microsoftdatabox.com/container-name/ /DestKey:<key> /S

Replace <key> with your account key. You can retrieve your account key within the Azure portal by navigating to your storage account. Select Settings > Access keys, choose a key, then copy and paste the value into the AzCopy command.

If the specified destination container doesn't exist, AzCopy creates it and uploads the file into it. Update the source path to your data directory, and replace data-box-storage-account-name in the destination URL with the name of the storage account associated with your Data Box.

To upload the contents of the specified directory to Blob storage recursively, specify the --recursive option for Linux or the /S option for Windows. When you run AzCopy with one of these options, all subfolders and their files are uploaded as well.
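If you script the upload, the destination URL and argument list can be assembled programmatically. The sketch below follows the URL pattern and the Linux flags shown in the command above; the function names are hypothetical, and it hasn't been verified here that every installed AzCopy version accepts these flags.

```python
from typing import List


def destination_url(account_name: str, device_serial: str, container: str) -> str:
    """Build the Data Box blob destination URL in the pattern used by this tutorial."""
    return (f"https://{account_name}.blob.{device_serial}"
            f".microsoftdatabox.com/{container}/")


def azcopy_upload_args(source_dir: str, account_name: str, device_serial: str,
                       container: str, key: str, recursive: bool = True) -> List[str]:
    """Return the argument list for the Linux-style AzCopy command shown above."""
    args = [
        "azcopy",
        "--source", source_dir,
        "--destination", destination_url(account_name, device_serial, container),
        "--dest-key", key,
    ]
    if recursive:
        # Include subfolders and their files, as described in this section.
        args.append("--recursive")
    return args


if __name__ == "__main__":
    # Placeholder values; the command is printed, not executed.
    print(" ".join(azcopy_upload_args("/mnt/myfolder", "myaccount", "SERIAL",
                                      "container-name", "<key>")))
```

Passing the list to `subprocess.run(args, check=True)` avoids shell quoting problems with paths that contain spaces.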

Upload modified files to Data Box Blob storage

You can also use AzCopy to upload files based on their last-modified time. To upload only updated or new files, add the --exclude-older parameter for Linux or the /XO parameter for Windows to the AzCopy command.

If you only want to copy the resources within your local source that don't exist within the destination, specify both the --exclude-older and --exclude-newer parameters for Linux, or the /XO and /XN parameters for Windows in the AzCopy command. AzCopy uploads updated data only, as determined by its time stamp.

Linux

azcopy \
    --source /mnt/myfolder \
    --destination https://data-box-storage-account-name.blob.device-serial-no.microsoftdatabox.com/container-name/ \
    --dest-key <key> \
    --recursive \
    --exclude-older

Windows

AzCopy /Source:C:\myfolder /Dest:https://data-box-storage-account-name.blob.device-serial-no.microsoftdatabox.com/container-name/ /DestKey:<key> /S /XO
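The selection behavior described in this section can be illustrated with a small model. This is a sketch of the time-stamp comparison only, not AzCopy's implementation: file names map to last-modified times, and treating equal time stamps as excluded by either option is an assumption. The function and parameter names are hypothetical.

```python
from typing import Dict, List


def select_uploads(source: Dict[str, float], destination: Dict[str, float],
                   exclude_older: bool = True, exclude_newer: bool = False) -> List[str]:
    """Return the source names that would be uploaded under the given options."""
    selected = []
    for name, src_time in sorted(source.items()):
        dst_time = destination.get(name)
        if dst_time is None:
            # Not present at the destination: always a candidate for upload.
            selected.append(name)
            continue
        if exclude_older and src_time <= dst_time:
            continue  # source is older than (or the same age as) the destination
        if exclude_newer and src_time >= dst_time:
            continue  # source is newer than (or the same age as) the destination
        selected.append(name)
    return selected


if __name__ == "__main__":
    src = {"a.txt": 10, "b.txt": 5, "c.txt": 7, "d.txt": 3}
    dst = {"a.txt": 8, "b.txt": 9, "c.txt": 7}
    print(select_uploads(src, dst))                      # updated or new files
    print(select_uploads(src, dst, exclude_newer=True))  # only files missing at destination
```

With both options set, only files that don't yet exist at the destination remain, which matches the second scenario described above.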

If there are any errors during the connect or copy operation, see Troubleshoot issues with Data Box Blob storage.

The next step is to prepare your device to ship.

Next steps

In this tutorial, you learned about Azure Data Box topics such as:

- Prerequisites for copying data to Azure Data Box Blob storage using REST APIs

- Connecting to Data Box Blob storage via http or https

- Copying data to Data Box

Advance to the next tutorial to learn how to ship your Data Box back to Microsoft.