Tutorial: Custom .NET deserializers for Azure Stream Analytics in Visual Studio Code (Preview)

Important

Custom .NET deserializers for Azure Stream Analytics will be retired on September 30, 2024. After that date, you can no longer use this feature.

Azure Stream Analytics has built-in support for three data formats: JSON, CSV, and Avro. With custom .NET deserializers, you can process data in other formats for cloud jobs, such as Protocol Buffers, Bond, and other user-defined formats. This tutorial demonstrates how to create, test, and debug a custom .NET deserializer for an Azure Stream Analytics job using Visual Studio Code.

You'll learn how to:

- Create a custom deserializer for Protocol Buffers.

- Create an Azure Stream Analytics job in Visual Studio Code.

- Configure your Stream Analytics job to use the custom deserializer.

- Run your Stream Analytics job locally to test and debug the custom deserializer.

Prerequisites

Install the .NET Core SDK and restart Visual Studio Code.

Use the Visual Studio Code quickstart for Azure Stream Analytics to learn how to create a Stream Analytics job.

Create a custom deserializer

Open a terminal in Visual Studio Code and run the following command to create a .NET class library called ProtobufDeserializer for your custom deserializer.

    dotnet new classlib -o ProtobufDeserializer

Go to the ProtobufDeserializer project directory and install the Microsoft.Azure.StreamAnalytics and Google.Protobuf NuGet packages.

    cd ProtobufDeserializer
    dotnet add package Microsoft.Azure.StreamAnalytics
    dotnet add package Google.Protobuf

Add the MessageBodyProto class and the MessageBodyDeserializer class to your project.

Build the ProtobufDeserializer project, for example by running dotnet build in the project directory.
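The MessageBodyDeserializer class isn't reproduced in this tutorial. Conceptually, a custom deserializer receives the raw input as a stream and turns it into a sequence of events. With Google.Protobuf, a common approach is to call MessageParser.ParseDelimitedFrom(Stream) in a loop. That method expects every message to be preceded by its length, encoded as a base-128 varint.

The following Python sketch shows only that framing logic, under two assumptions:

- The input uses length-delimited messages.
- The function names read_varint and deserialize are hypothetical. This is not the tutorial's C# code.

A real deserializer would parse each payload into a MessageBodyProto object instead of returning raw bytes.

```python
import io
from typing import BinaryIO, Iterator, Optional


def read_varint(stream: BinaryIO) -> Optional[int]:
    """Read one base-128 varint (the length prefix).

    Returns None if the stream ends cleanly before a prefix starts.
    """
    result = 0
    shift = 0
    while True:
        chunk = stream.read(1)
        if not chunk:
            if shift == 0:
                return None  # clean end of stream: no more messages
            raise EOFError("stream ended inside a length prefix")
        byte = chunk[0]
        result |= (byte & 0x7F) << shift  # low 7 bits carry the value
        if not byte & 0x80:               # high bit clear: last byte of the varint
            return result
        shift += 7


def deserialize(stream: BinaryIO) -> Iterator[bytes]:
    """Yield the payload of each length-delimited message, one event at a time.

    Hypothetical stand-in for the C# deserialization loop; a real deserializer
    would parse each payload into a MessageBodyProto instead of yielding bytes.
    """
    while True:
        size = read_varint(stream)
        if size is None:
            return
        payload = stream.read(size)
        if len(payload) != size:
            raise EOFError(f"expected {size} bytes, got {len(payload)}")
        yield payload


if __name__ == "__main__":
    # Two framed messages: length 3 + b"abc", then length 2 + b"hi".
    framed = bytes([3]) + b"abc" + bytes([2]) + b"hi"
    print(list(deserialize(io.BytesIO(framed))))  # [b'abc', b'hi']
```

Because deserialize is a generator, events are produced as the stream is read rather than after the whole input is buffered. A C# implementation would typically get the same effect by returning its IEnumerable with yield return. A truncated final message raises an error instead of being silently dropped, which makes malformed input easier to spot while debugging.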

Add an Azure Stream Analytics project

Open Visual Studio Code and press Ctrl+Shift+P to open the command palette. Then enter ASA and select ASA: Create New Project. Name the project ProtobufCloudDeserializer.

Configure a Stream Analytics job

Double-click JobConfig.json. Use the default configurations, except for the following settings:

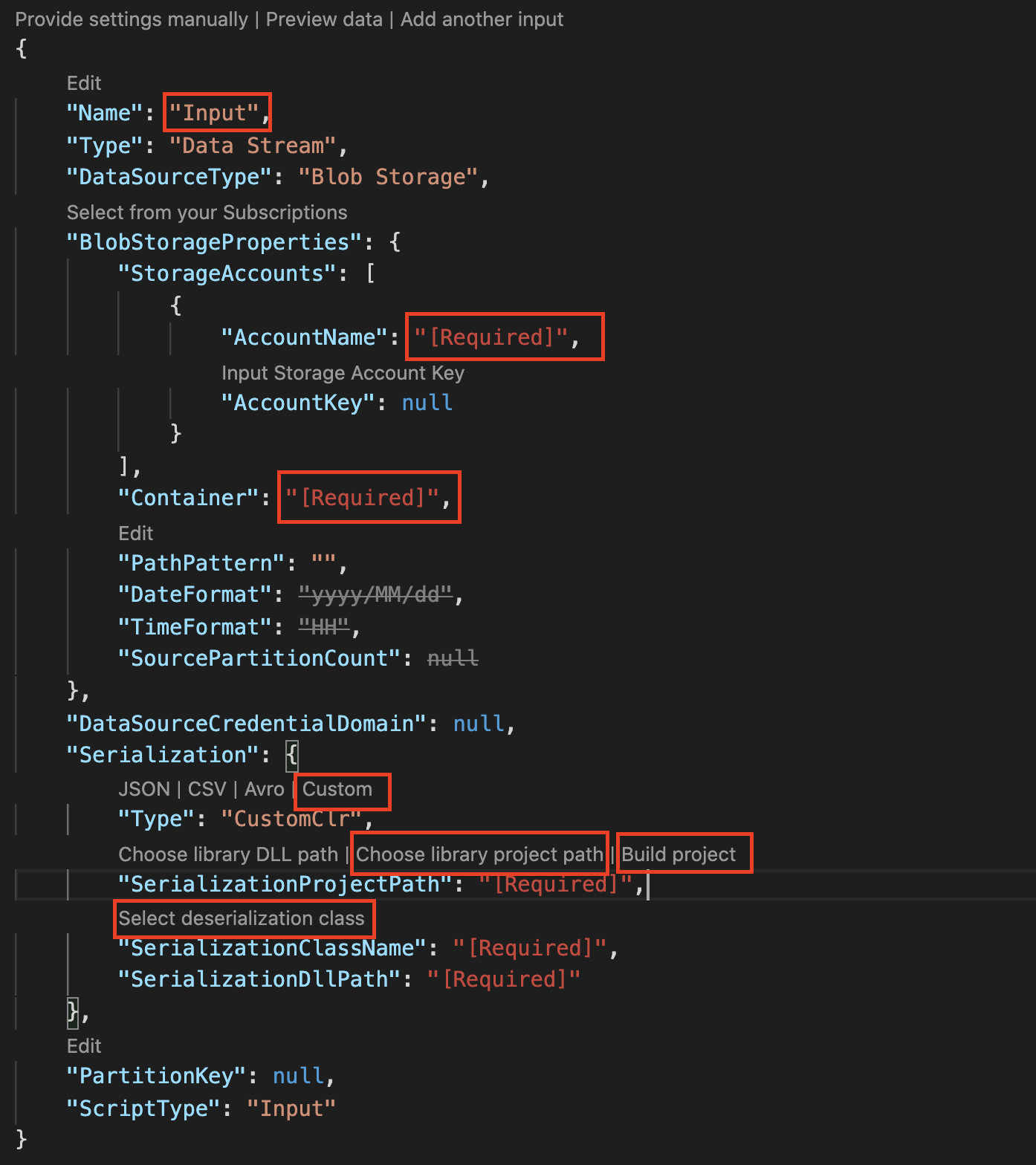

| Setting | Suggested Value |
| --- | --- |
| Global Storage Settings Resource | Choose data source from current account |
| Global Storage Settings Subscription | < your subscription > |
| Global Storage Settings Storage Account | < your storage account > |
| CustomCodeStorage Settings Storage Account | < your storage account > |
| CustomCodeStorage Settings Container | < your storage container > |

Under the Inputs folder, open input.json. Select Add live input and add an input from Azure Data Lake Storage Gen2/Blob storage, and then choose Select from your Azure subscription. Use the default configurations, except for the following settings:

| Setting | Suggested Value |
| --- | --- |
| Name | Input |
| Subscription | < your subscription > |
| Storage Account | < your storage account > |
| Container | < your storage container > |
| Serialization Type | Choose Custom |
| SerializationProjectPath | Select Choose library project path from CodeLens, and then select the ProtobufDeserializer library project created in the previous section. Select build project to build the project. |
| SerializationClassName | Select select deserialization class from CodeLens to auto-populate the class name and DLL path. |
| Class Name | MessageBodyProto.MessageBodyDeserializer |

Add the following query to the ProtobufCloudDeserializer.asaql file.

    SELECT * FROM Input

Download the sample protobuf input file. In the Inputs folder, right-click input.json and select Add local input. Then, double-click local_input1.json and use the default configurations, except for the following settings.

| Setting | Suggested Value |
| --- | --- |
| Select local file path | Select CodeLens to select < the file path of the downloaded sample protobuf input file > |
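If you want more local test data than the downloaded sample provides, you can generate a file that uses the same framing. The following Python sketch hand-encodes the protobuf wire format without the protobuf package. It rests on these assumptions:

- The deserializer expects length-delimited messages.
- The schema is hypothetical and may not match MessageBodyProto: field 1 is a double temperature, and field 2 is a string timeCreated.

Replace encode_reading with encoding that matches your own .proto definition, or produce the messages with your protobuf library instead.

```python
import io
import struct
from typing import BinaryIO, Iterable


def write_varint(value: int, out: BinaryIO) -> None:
    """Write a non-negative int as a protobuf base-128 varint."""
    if value < 0:
        raise ValueError("varint length prefixes must be non-negative")
    while True:
        low = value & 0x7F
        value >>= 7
        if value:
            out.write(bytes([low | 0x80]))  # high bit set: more bytes follow
        else:
            out.write(bytes([low]))         # final byte
            return


def encode_reading(temperature: float, time_created: str) -> bytes:
    """Encode one message using a HYPOTHETICAL schema:
    field 1 = double temperature, field 2 = string timeCreated.
    """
    buf = io.BytesIO()
    buf.write(bytes([(1 << 3) | 1]))           # key: field 1, wire type 1 (64-bit)
    buf.write(struct.pack("<d", temperature))  # doubles are little-endian on the wire
    text = time_created.encode("utf-8")
    buf.write(bytes([(2 << 3) | 2]))           # key: field 2, wire type 2 (length-delimited)
    write_varint(len(text), buf)
    buf.write(text)
    return buf.getvalue()


def write_delimited(messages: Iterable[bytes], out: BinaryIO) -> None:
    """Prefix each message with its varint length, like Google.Protobuf's WriteDelimitedTo."""
    for message in messages:
        write_varint(len(message), out)
        out.write(message)


if __name__ == "__main__":
    readings = [
        encode_reading(21.5, "2024-01-01T00:00:00Z"),
        encode_reading(22.0, "2024-01-01T00:00:05Z"),
    ]
    buffer = io.BytesIO()
    write_delimited(readings, buffer)
    print(len(buffer.getvalue()))  # 64: two 1-byte prefixes + two 31-byte messages
```

To produce a file that you can select as local input, pass a file opened with open(path, "wb") instead of the in-memory buffer. The hand-written keys work only for field numbers below 16, which fit in a single byte.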

Execute the Stream Analytics job

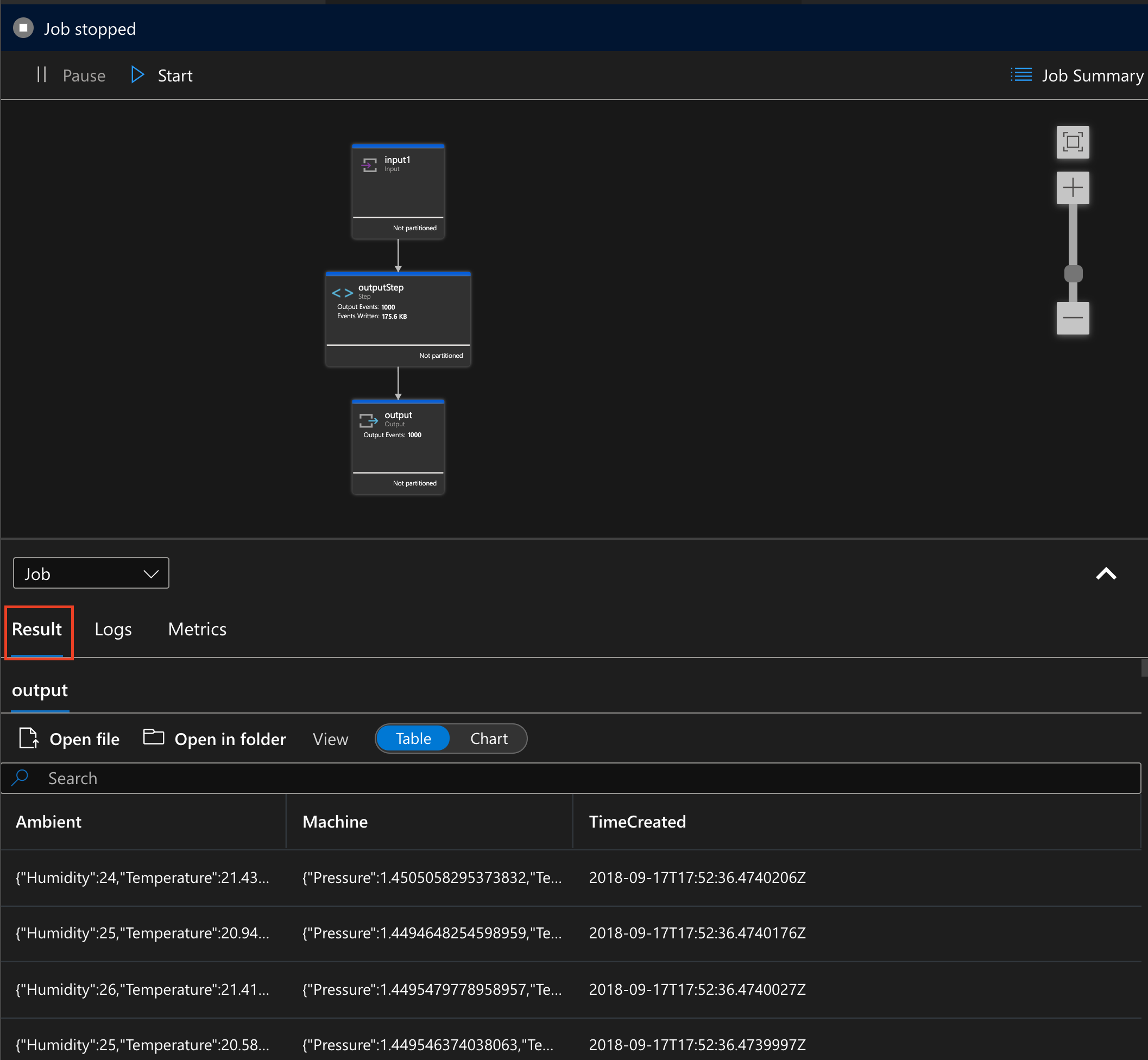

Open ProtobufCloudDeserializer.asaql, select Run Locally from CodeLens, and then choose Use Local Input from the dropdown list.

You can view the output on the Results tab of the job diagram. You can also select steps in the job diagram to view intermediate results. For more information, see Debug locally using job diagram.

You've successfully implemented a custom deserializer for your Stream Analytics job! In this tutorial, you tested the custom deserializer locally with local input data. You can also test it with live input data. To run the job in the cloud, configure the input and output. Then submit the job to Azure from Visual Studio Code so that it runs in the cloud with your custom deserializer.

Debug your deserializer

You can debug your .NET deserializer locally the same way you debug standard .NET code.

Add breakpoints to your .NET deserializer code.

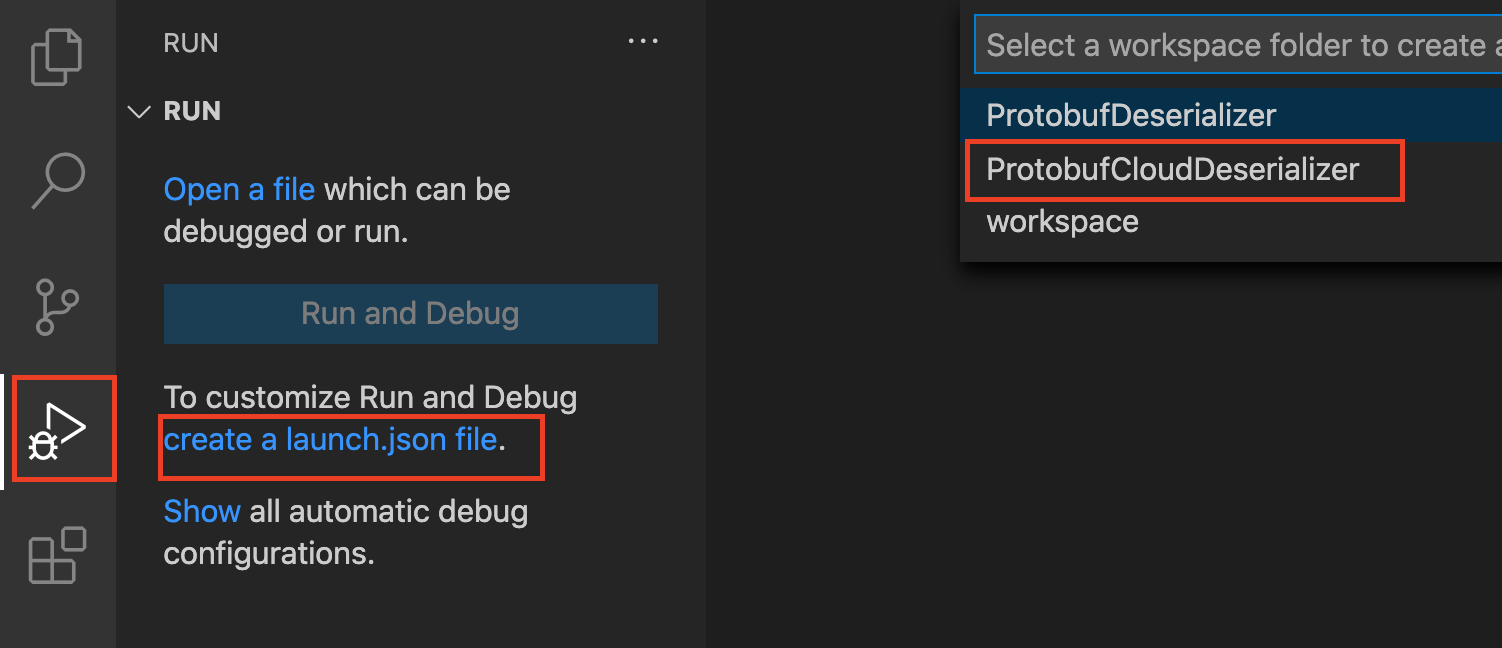

Select Run on the Visual Studio Code Activity Bar, and then select create a launch.json file.

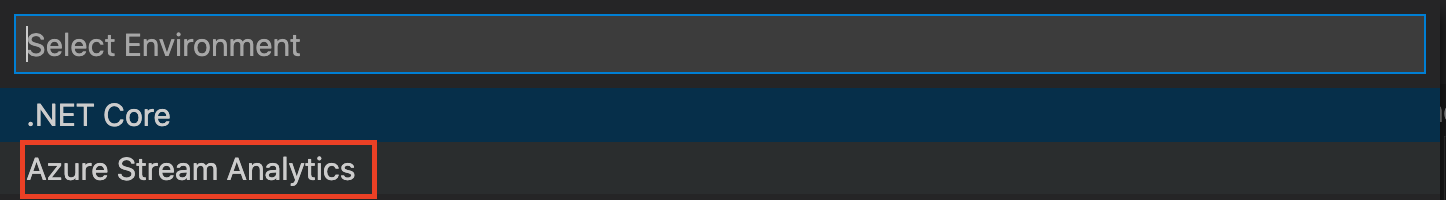

Choose ProtobufCloudDeserializer and then Azure Stream Analytics from the dropdown list.

Edit the launch.json file to replace <ASAScript>.asaql with ProtobufCloudDeserializer.asaql.

Press F5 to start debugging. Execution stops at your breakpoints. Debugging works with both local input and live input data.

Clean up resources

When they're no longer needed, delete the resource group, the streaming job, and all related resources. Deleting the job stops billing for the streaming units that the job consumes. If you plan to use the job in the future, you can stop it now and restart it later. If you aren't going to use this job again, delete all resources created by this tutorial by using the following steps:

From the left-hand menu in the Azure portal, select Resource groups, and then select the name of the resource group you created.

On your resource group page, select Delete, type the name of the resource group in the text box, and then select Delete.

Next steps

In this tutorial, you learned how to implement a custom .NET deserializer for Protocol Buffers input serialization. To learn more about creating custom deserializers, continue to the following article: