Troubleshoot healthcare data solutions in Microsoft Fabric (preview)

[This article is prerelease documentation and is subject to change.]

This article provides information about some issues or errors you might see when using healthcare data solutions in Microsoft Fabric (preview) and how to resolve them. The article also includes some application monitoring guidance.

If your issue persists after following the guidance in this article, use Create a support ticket to submit an incident ticket.

Troubleshoot deployment anomalies

The following intermittent issues have been observed while deploying healthcare data solutions (preview) to the Fabric workspace. Each entry includes a workaround in case you encounter the issue.

Solution creation fails.

Error: Creation of the healthcare solution stays in progress for more than 5 minutes, fails, or both.

Cause: This error occurs if another healthcare solution with the same name exists or was recently deleted.

Resolution: If you recently deleted a solution, wait for 30 to 60 minutes before attempting another deployment.

Capability deployment fails.

Error: Capabilities within healthcare data solutions (preview) fail to deploy.

Resolution: Verify whether the capability is listed under the Manage deployed capabilities section.

- If the capability isn't listed in the table, retry deploying the capability by selecting the capability tile and then selecting the Deploy to workspace button.

- If the capability is listed in the table with the status value Failed, create a new healthcare data solutions (preview) environment and redeploy the capability in the new environment.

Troubleshoot unidentified tables

When delta tables are created in the lakehouse for the first time, they might temporarily show up as "unidentified" or empty in the Lakehouse Explorer view. However, they should appear correctly under the tables folder after a few minutes.

Rerun data pipeline

To rerun the sample data end-to-end, you can follow these steps:

1. Run a Spark SQL statement from a notebook, such as the following example, to delete all the tables from a lakehouse:

    lakehouse_name = "<lakehouse_name>"
    tables = spark.sql(f"SHOW TABLES IN {lakehouse_name}")
    for row in tables.collect():
        spark.sql(f"DROP TABLE {lakehouse_name}.{row[1]}")

2. Use OneLake file explorer to connect to OneLake in your Windows File Explorer.

3. Navigate to your workspace folder in Windows File Explorer. Under <solution_name>.HealthDataManager/DMHCheckpoint, delete all the corresponding folders in <lakehouse_id>/<table_name>. Alternatively, you can also use Microsoft Spark Utilities (MSSparkUtils) for Fabric to delete the folder.

4. Rerun all the notebooks, beginning with the ingestion notebooks in the bronze lakehouse.
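The checkpoint cleanup described above can also be scripted from a notebook. The following is a minimal sketch, not the documented procedure. The helper names `checkpoint_path` and `delete_checkpoints` are hypothetical, and the OneLake URI layout is an assumption that the `abfss://` path mirrors the folder structure shown in OneLake file explorer. Print and verify each path before deleting anything.

```python
ONELAKE_HOST = "onelake.dfs.fabric.microsoft.com"  # assumed OneLake endpoint


def checkpoint_path(workspace: str, solution_name: str,
                    lakehouse_id: str, table_name: str) -> str:
    """Build the (assumed) OneLake URI of one table's checkpoint folder."""
    return (
        f"abfss://{workspace}@{ONELAKE_HOST}/"
        f"{solution_name}.HealthDataManager/DMHCheckpoint/"
        f"{lakehouse_id}/{table_name}"
    )


def delete_checkpoints(paths, remove):
    """Call remove(path) for each path and return the paths handled."""
    handled = []
    for path in paths:
        remove(path)
        handled.append(path)
    return handled


# Placeholder values only; substitute your own names and IDs.
example = checkpoint_path("<workspace_name>", "<solution_name>",
                          "<lakehouse_id>", "<table_name>")
print(example)
```

In a Fabric notebook, one option is to pass `lambda p: mssparkutils.fs.rm(p, True)` as `remove`, which deletes each folder recursively.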

Monitor Apache Spark applications with Azure Log Analytics

The Apache Spark application logs are sent to an Azure Log Analytics workspace instance that can be queried. Here's a sample Kusto query to filter the logs specific to healthcare data solutions (preview):

    AppTraces
    | where Properties['LoggerName'] contains "Healthcaredatasolutions"
        or Properties['LoggerName'] contains "DMF"
        or Properties['LoggerName'] contains "RMT"
    | limit 1000

The notebook's console logs also record the RunId for each execution. You can use this value to retrieve the logs for a specific run, as shown in the following sample query:

    AppTraces
    | where Properties['RunId'] == "<RunId>"
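If you look up runs often, you could generate the RunId query from Python instead of editing it by hand. The sketch below is illustrative only: `run_id_query` is a hypothetical helper, and the `limit` clause is an addition that isn't part of the sample query above.

```python
def run_id_query(run_id: str, limit: int = 1000) -> str:
    """Return a Kusto query that selects AppTraces rows for one RunId."""
    # Escape backslashes and double quotes so the value stays inside the
    # Kusto string literal.
    safe = run_id.replace("\\", "\\\\").replace('"', '\\"')
    return (
        "AppTraces\n"
        f"| where Properties['RunId'] == \"{safe}\"\n"
        f"| limit {int(limit)}"  # assumed cap, not in the original sample
    )


print(run_id_query("<RunId>"))
```

You can paste the resulting text into the Log Analytics query editor, or pass it to a client library such as `azure-monitor-query` if you have one installed.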

For general monitoring information, review the guidance in Use the Fabric Monitoring hub.

Resolve authorization errors with the FHIR export notebook

When the FHIR export notebook healthcare#_msft_fhir_export_service is running, the request might fail with an HTTP 401: Unauthorized error if the required permissions aren't assigned to the Azure function app or to the FHIR server.

Ensure you assign the FHIR Data Exporter role to the function app on the FHIR service, and the Storage Blob Data Contributor role to the FHIR service on the configured export storage account.

For more information, review the post-deployment steps in Deploy Azure Marketplace offer.

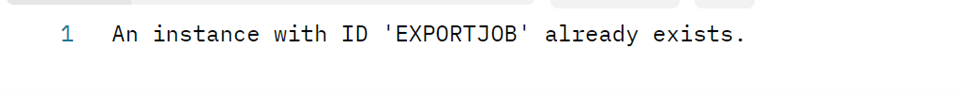

Resolve conflict errors with the FHIR export notebook

When the FHIR export notebook healthcare#_msft_fhir_export_service is running, the request might fail with an HTTP 409: Conflict error.

The Azure function app is configured to run only one instance of export at any point. An HTTP 409 error indicates that there's another instance of an export operation that's currently running. You should wait until the instance completes, and then trigger another export.
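The wait-and-retry approach described above can be sketched as follows. This is a minimal illustration under stated assumptions: `trigger` is a hypothetical callable that starts the export and returns the HTTP status code, and the attempt count and wait time are arbitrary defaults, not values from the product.

```python
import time


def export_with_retry(trigger, max_attempts=5, wait_seconds=60.0,
                      sleep=time.sleep):
    """Call trigger() until it returns a status other than 409.

    trigger is a hypothetical callable that starts the export and returns
    the HTTP status code of the response.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    status = None
    for attempt in range(1, max_attempts + 1):
        status = trigger()
        if status != 409:
            # Any non-conflict status (success or another error) ends the loop.
            return status
        if attempt < max_attempts:
            # Another export is still running; wait before trying again.
            sleep(wait_seconds)
    return status
```

The function returns the last status code it saw, so a return value of 409 means the other export instance was still running after the final attempt.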

Monitor function app logs with Log Analytics

You can monitor the export function app service's logs in the Log Analytics workspace deployed to your Azure resource group. Here's a sample Kusto query to view the function app traces:

    AppTraces
    | where AppRoleName startswith "msft-func-datamanager-export"

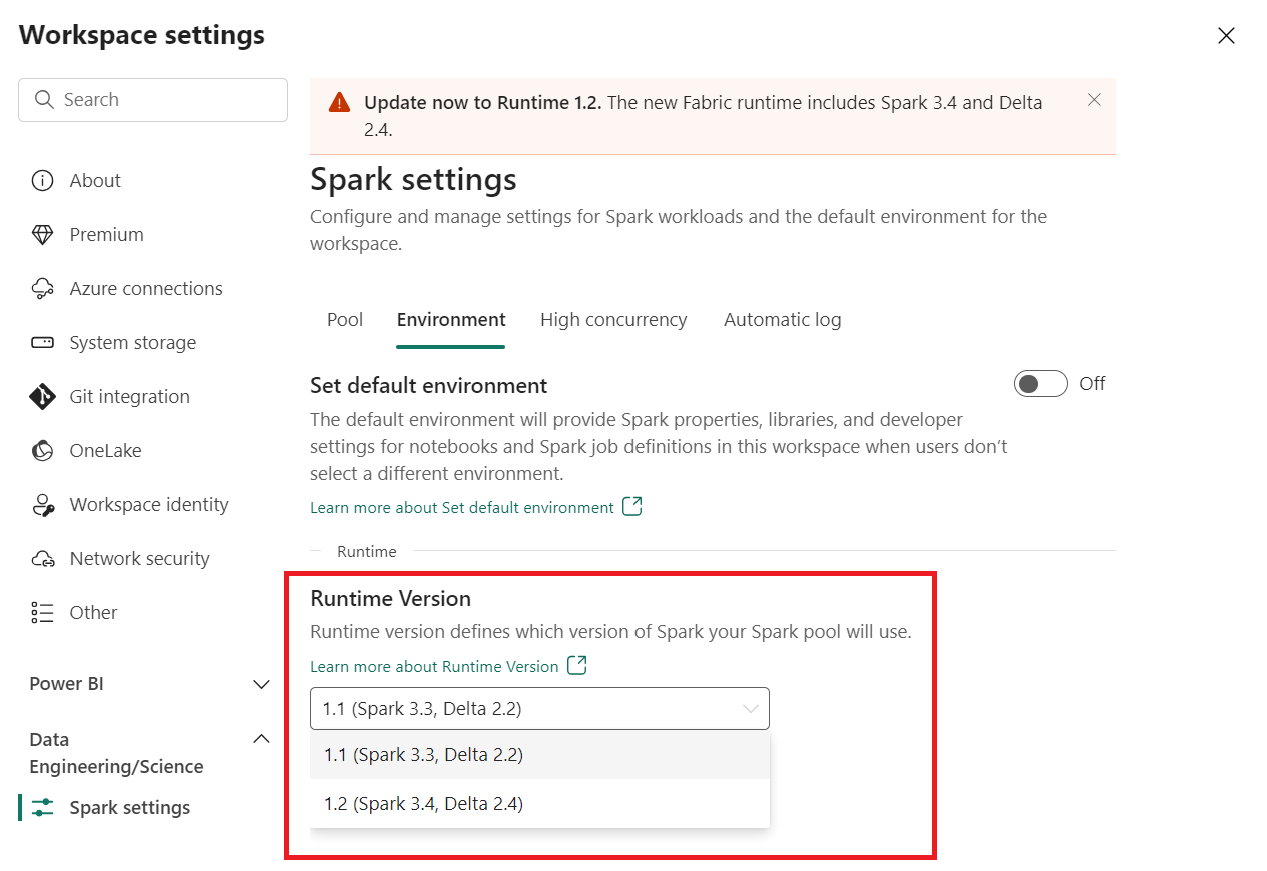

Reset Spark runtime version in the Fabric workspace

By default, all new Fabric workspaces use the latest Fabric runtime version, which is now Runtime 1.2. However, healthcare data solutions (preview) currently support only Runtime 1.1.

After you deploy healthcare data solutions (preview) to your workspace, update the default Fabric runtime version to Runtime 1.1 (Apache Spark 3.3.1 and Delta Lake 2.2.0) before you run any of the pipelines or notebooks. Otherwise, your pipeline or notebook executions can fail.

For more information, see Support for multiple runtimes in Fabric Runtime.
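To catch a runtime mismatch early, you could add a guard at the top of a notebook. The following is a rough sketch that assumes the Spark version string starts with `major.minor` (for example, `3.3.1`). The helper name `is_supported_spark` is hypothetical.

```python
SUPPORTED_SPARK = (3, 3)  # Runtime 1.1 uses Apache Spark 3.3.1


def is_supported_spark(version: str) -> bool:
    """Return True when the major.minor Spark version is 3.3."""
    parts = version.split(".")
    try:
        major_minor = (int(parts[0]), int(parts[1]))
    except (IndexError, ValueError):
        # Unparseable version strings are treated as unsupported.
        return False
    return major_minor == SUPPORTED_SPARK


print(is_supported_spark("3.3.1"))
```

In a notebook, you might call `is_supported_spark(spark.version)` and stop the run with a clear message when it returns `False`.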

Refresh the Fabric UI and OneLake file explorer

The Fabric UI or the OneLake file explorer doesn't always refresh its content after each notebook execution. If you don't see the expected result in the UI after running an execution step (such as creating a new folder or lakehouse, or ingesting new data into a table), try refreshing the artifact (table, lakehouse, or folder). This refresh often resolves the discrepancy, so try it before you investigate further.