Hyper-V 2012 R2 Network Architectures (Part 8 of 7) – Bonus

Hi again. This post was not planned, but today an MVP (Hans Vredevoort, https://www.hyper-v.nu/) explained to me an additional Backend architecture option that is also recommended. He drew a similar diagram to represent this option, so let’s figure out what is different.

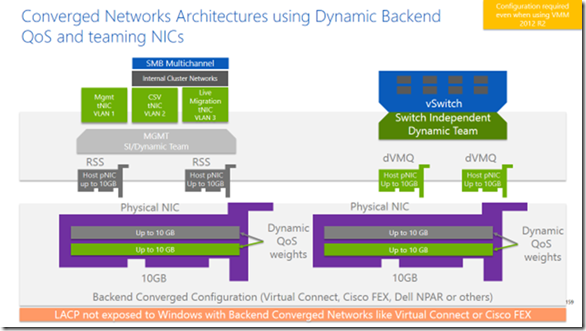

This configuration differs slightly from the Dynamic Backend QoS in part 5 because it simplifies the Backend configuration and presents only 4 multiplexed adapters to Windows. Once this is done, you just need to create a TEAM with two of these adapters for all the networks required on the parent partition, such as Mgmt, CSV and Live Migration.

On top of the TEAM, you have to create a tNIC (Teaming NIC) for each traffic type with the corresponding VLAN. This isolates the traffic types from one another and gives the OS control over them, while letting you create more tNICs dynamically without rebooting the Hyper-V host (you may need additional tNICs for SMB, for example).
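As a rough illustration of the team and tNIC steps, here is a minimal PowerShell sketch. The adapter names (`Mux1`, `Mux2`), the team name, the tNIC names and the VLAN IDs are all hypothetical placeholders, and the teaming mode and load-balancing algorithm are assumptions rather than something the diagram prescribes.

```powershell
# Assumption: "Mux1" and "Mux2" are two of the four multiplexed adapters
# presented by the backend. All names and VLAN IDs are placeholders.
New-NetLbfoTeam -Name "ParentTeam" -TeamMembers "Mux1","Mux2" `
    -TeamingMode SwitchIndependent -LoadBalancingAlgorithm Dynamic `
    -Confirm:$false

# One tNIC per parent-partition traffic type, each tagged with its own VLAN.
Add-NetLbfoTeamNic -Team "ParentTeam" -VlanID 10 -Name "Mgmt" -Confirm:$false
Add-NetLbfoTeamNic -Team "ParentTeam" -VlanID 20 -Name "CSV" -Confirm:$false
Add-NetLbfoTeamNic -Team "ParentTeam" -VlanID 30 -Name "LiveMigration" -Confirm:$false

# A further tNIC (for SMB, say) can be added later without a reboot.
Add-NetLbfoTeamNic -Team "ParentTeam" -VlanID 40 -Name "SMB" -Confirm:$false
```

Note that `New-NetLbfoTeam` also creates a primary team interface named after the team; the VLAN-tagged tNICs above are added alongside it.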

Everything can be managed either with PowerShell or with the NIC Teaming console (lbfoadmin.exe), and of course RSS will give you the best possible performance from the adapters presented by the backend.
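For the PowerShell route, the following sketch shows how the team and the RSS state could be inspected. The team name `ParentTeam` and the adapter names `Mux1` and `Mux2` are hypothetical; substitute whatever names exist on your host.

```powershell
# Assumption: a team called "ParentTeam" built on adapters "Mux1" and "Mux2".
Get-NetLbfoTeam -Name "ParentTeam"          # team mode, algorithm and status
Get-NetLbfoTeamMember -Team "ParentTeam"    # physical/multiplexed members
Get-NetLbfoTeamNic -Team "ParentTeam"       # tNICs and their VLAN IDs

# Check whether RSS is enabled on the team members, and enable it if needed.
Get-NetAdapterRss -Name "Mux1","Mux2"
Enable-NetAdapterRss -Name "Mux1","Mux2"
```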

The TEAM and vSwitch configuration on the right side of the diagram is identical to the Dynamic Backend QoS discussed in part 5, so the VM/tenant traffic is completely isolated from Mgmt, CSV and Live Migration traffic.
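The VM side could be sketched along these lines. The adapter names (`Mux3`, `Mux4`), the team name and the switch name are hypothetical, and the teaming settings are assumptions; the key point is that the vSwitch is not shared with the management OS.

```powershell
# Assumption: "Mux3" and "Mux4" are the two remaining multiplexed adapters.
New-NetLbfoTeam -Name "VMTeam" -TeamMembers "Mux3","Mux4" `
    -TeamingMode SwitchIndependent -LoadBalancingAlgorithm Dynamic `
    -Confirm:$false

# Bind the vSwitch to the team interface and do not create a vNIC for the
# parent partition, so tenant traffic stays separate from Mgmt, CSV and
# Live Migration.
New-VMSwitch -Name "TenantSwitch" -NetAdapterName "VMTeam" -AllowManagementOS $false
```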

One caveat of this configuration is the QoS for each parent partition traffic type. How can I prioritize Mgmt over CSV, or vice versa? I would answer this question with another question: do you really need to prioritize the traffic of your tNICs when there is already a QoS policy at the backend that guarantees at least the amount of bandwidth that you define?

It is true that in the part 5 architecture each parent partition traffic type has its own QoS in the backend, but it is also true that the sum of those reservations can be equivalent to what you would reserve when using the tNICs approach.

Many thanks to Hans Vredevoort for the architecture reference.

Comments

- Anonymous

February 27, 2014

Hi Virtualization gurus, Since 6 months now, I’ve been working on the internal readiness about Hyper

- Anonymous

February 28, 2014

First, Great article! Thanks for taking the time to share your deep knowledge and experience.

I have a few questions; I hope you have some time to spare.

What's better for VM traffic using CNAs: Host Dynamic NIC Teaming with dVMQ, or SR-IOV with VM NIC Teaming?

Regarding the bonus, part 8: for the HP FlexFabric uplinks, would it be better to have LACP or A/S SUS? Will LACP cause MAC flapping?

Also regarding the bonus, part 8: why a 10GB network? Where is FCoE? My opinion is to mix part 6 (4GB FCoE + 6GB LAN) with part 8 (the tNIC part). What do you think?

Kindest Regards,

Juan Quesada

- Anonymous

March 04, 2014

As an IT guy I have the strong belief that engineers understand graphics and charts much better than

- Anonymous

March 20, 2014

Hello Cristian! Great series! What about another scenario: the same as this one, but without the backend converged configuration, with QoS configured only on the server itself? Maybe useful if you have only 1G adapters?

New-NetQosPolicy -Name "Live Migration policy" -LiveMigration -MinBandwidthWeightAction 50

New-NetQosPolicy -Name "Cluster policy" -IPProtocolMatchCondition UDP -IPDstPortMatchCondition 3343 -MinBandwidthWeightAction 40

New-NetQosPolicy -Name "Management policy" -DestinationAddress x.x.x.x/24 -MinBandwidthWeightAction 10

- Anonymous

January 13, 2015

I did this configuration and everything worked great except for one thing: one of the uplink LAN switches failed, and the mgmt team went down with it. Only 1 of the 2 adapters went down, but the team went down because of NIC teaming convergence (I have configured an SI Dynamic team with all adapters active). Because the mgmt team was down for about 2 minutes, cluster communication was lost, taking down several VMs (about 25% of a 200-VM, 4-node cluster).

How can I overcome this? I'm thinking of having 3 teams: one for VMs (Active/Active), one for the domain network (Active/Passive) and one for LM and CSV (Passive/Active). That way, if one switch fails, at least one network will not enter convergence, since it already has a passive lane that always maintains a live cluster heartbeat. Has anyone encountered this? Solved it?

- Anonymous

April 16, 2015

Can you provide PowerShell for this example?