Troubleshooting Test Data with String Decoder

I value static test data that is derived from historical failure indicators or that is representative of typical end users. But a problem with static test data is that it covers only a limited subset of all possible data, and it becomes stale, providing little new information after multiple iterations of the test. So, I am a proponent of using random data in well-designed tests. Of course, recklessly generating random data is just plain dumb and can result in numerous false positives. But when the data set is well defined and decomposed into equivalence class subsets, it is possible to generate random data that is representative of all possible data elements: probabilistic stochastic test data!
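As a rough illustration of the equivalence class idea, here is a minimal Python sketch (not Babel's actual implementation) that first picks a class at random and then picks a code point within that class. The class names and ranges in `EQUIVALENCE_CLASSES` and the function `random_test_string` are hypothetical; a real test would derive its classes from the specification of the control or API under test.

```python
import random

# Hypothetical equivalence classes: each maps to a list of inclusive code point ranges.
# These ranges are illustrative only and deliberately avoid the surrogate block U+D800..U+DFFF.
EQUIVALENCE_CLASSES = {
    "ascii_printable": [(0x0020, 0x007E)],
    "cjk_unified": [(0x4E00, 0x9FFF)],       # CJK Unified Ideographs block
    "hangul_syllables": [(0xAC00, 0xD7A3)],  # precomposed Hangul syllables
    "cjk_ext_b": [(0x20000, 0x2A6DF)],       # supplementary plane: surrogate pairs in UTF-16
}


def random_test_string(length: int, rng: random.Random) -> str:
    """Build a string of `length` code points, choosing a class uniformly for each one."""
    chars = []
    for _ in range(length):
        cls = rng.choice(list(EQUIVALENCE_CLASSES))    # pick an equivalence class
        lo, hi = rng.choice(EQUIVALENCE_CLASSES[cls])  # pick one of its ranges
        chars.append(chr(rng.randint(lo, hi)))         # pick a code point in that range
    return "".join(chars)
```

Seeding a `random.Random` instance and logging the seed means any string that exposes an anomaly can be regenerated exactly, which matters when the next step is troubleshooting that string.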

Last week I released an update to Babel, a test tool for generating random strings of Unicode characters. Babel is useful for comprehensive positive or negative testing of textboxes and other edit controls, as well as API parameters that take string arguments. Using probabilistic stochastic test data significantly increases the breadth of data coverage during a test cycle, which increases the probability of exposing anomalies in string parsing and other string manipulation algorithms. But when a test uses characters from across the Unicode spectrum, an anomaly is usually caused by a specific character code point (or the two UTF-16 code units of a surrogate pair character), or by a combination of characters.
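For characters outside the Basic Multilingual Plane, UTF-16 stores one code point as two 16-bit code units: a high surrogate followed by a low surrogate. The sketch below shows the standard conversion arithmetic; `to_surrogate_pair` and `from_surrogate_pair` are hypothetical helper names used only for illustration.

```python
from typing import Tuple


def to_surrogate_pair(cp: int) -> Tuple[int, int]:
    """Split a supplementary code point (U+10000..U+10FFFF) into UTF-16 code units."""
    if not 0x10000 <= cp <= 0x10FFFF:
        raise ValueError(f"U+{cp:04X} does not need a surrogate pair")
    offset = cp - 0x10000             # a 20-bit value
    high = 0xD800 + (offset >> 10)    # top 10 bits -> high (lead) surrogate
    low = 0xDC00 + (offset & 0x3FF)   # bottom 10 bits -> low (trail) surrogate
    return high, low


def from_surrogate_pair(high: int, low: int) -> int:
    """Recombine a high/low surrogate pair into the original code point."""
    if not (0xD800 <= high <= 0xDBFF and 0xDC00 <= low <= 0xDFFF):
        raise ValueError("not a valid high/low surrogate pair")
    return 0x10000 + ((high - 0xD800) << 10) + (low - 0xDC00)
```

For example, `to_surrogate_pair(0x1F600)` returns `(0xD83D, 0xDE00)`, the same two code units Python produces for `"\U0001F600".encode("utf-16-be")`.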

Of course, telling a developer that a string composed of the characters ꁲᱚRבּ䍳܁쭤ኳ causes an unexpected error would most likely be met with that classic deer-in-the-headlights look, followed by some muttering such as "That's not a real string" and "Nobody would ever enter such a string." Developers often shun random strings as test data, and managers might claim they are not representative of 'real' customer scenarios. So the professional tester knows that, instead of simply arguing in favor of random string testing, we must troubleshoot the string to identify the specific character code point or code point combination causing the error. While a 'real' customer is unlikely to enter a string of random characters from multiple language scripts, the problem is likely caused by a single character (or sometimes a combination of code points), and there is some probability that a customer somewhere in the world will enter that problematic character! So, as professionals, we must find that specific problematic character.
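One way to do that troubleshooting mechanically is to shrink the failing string one character at a time, keeping each removal whenever the failure still reproduces. This is a simple greedy reduction, not String Decoder's method; the function `minimize_failing_string` is hypothetical, and its `fails` predicate is a hypothetical stand-in for whatever re-runs the test against a candidate string and reports whether the anomaly occurs.

```python
from typing import Callable


def minimize_failing_string(s: str, fails: Callable[[str], bool]) -> str:
    """Greedily drop one code point at a time while the failure still reproduces.

    Iterating over a Python 3 str visits whole code points, so a surrogate pair
    character is never split into its two UTF-16 code units.
    """
    if not fails(s):
        raise ValueError("the input string does not reproduce the failure")
    chars = list(s)
    changed = True
    while changed:  # repeat passes until no single removal keeps the failure
        changed = False
        i = 0
        while i < len(chars):
            candidate = chars[:i] + chars[i + 1:]
            if fails("".join(candidate)):
                chars = candidate  # character i is not needed to trigger the failure
                changed = True
            else:
                i += 1  # character i is needed; keep it and move on
    return "".join(chars)
```

The result is 1-minimal: removing any single remaining character makes the failure disappear, so a one-character result points at a single code point and a multi-character result points at a combination. The greedy loop can call the predicate O(n²) times in the worst case; for long strings, Zeller's delta debugging (ddmin) removes larger chunks first and usually needs fewer test runs.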

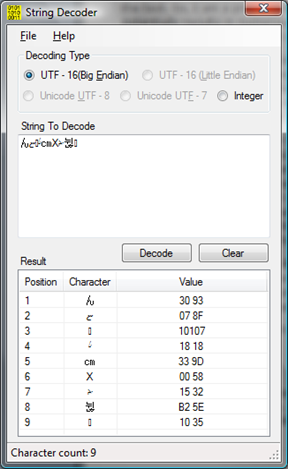

To help professional testers decode each character in a string to its code point value, I recently completed a new tool called String Decoder. This test tool is an updated version of my old Str2Val tool (which had some serious problems converting strings with surrogate pair characters). String Decoder decodes Unicode characters (including surrogate pairs) to their hexadecimal UTF-16 (Big or Little Endian), UTF-8, or UTF-7 encoding values, or to an integer value (UTF-32).
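The per-character decoding described above can be approximated with Python's built-in codecs. This is a sketch under that assumption, not the tool's source; the function `decode_string`, the `Row` alias, and the dictionary keys are hypothetical.

```python
from typing import Dict, List, Union

Row = Dict[str, Union[str, int]]


def decode_string(s: str) -> List[Row]:
    """Return one row of encoding values for each code point in s."""
    rows: List[Row] = []
    for ch in s:  # Python 3 iterates by code point, so a surrogate pair character stays whole
        rows.append({
            "char": ch,
            "utf16be": ch.encode("utf-16-be").hex().upper(),  # e.g. D83DDE00 for U+1F600
            "utf16le": ch.encode("utf-16-le").hex().upper(),
            "utf8": ch.encode("utf-8").hex().upper(),
            "utf7": ch.encode("utf-7").decode("ascii"),  # UTF-7 output is plain ASCII text
            "utf32": ord(ch),  # integer code point value
        })
    return rows
```

For example, `for row in decode_string("んޏ᠘㎝Xᔲ뉞ဵ"): print(row["char"], row["utf16be"])` prints each character of the sample string with its UTF-16 Big Endian value. A supplementary character produces a single row whose UTF-16 value is four bytes (both surrogates) long.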

For example, the characters in the string んޏ᠘㎝Xᔲ뉞ဵ have the UTF-16 Big Endian encoding values displayed in the Results list in the image above.

Once the specific character code point or combination is identified, the tester can tell the developer exactly which Unicode character or integer value is causing the anomaly. For example, it is much better to state that the Unicode value U+13BD is causing unexpected behavior than to try to explain how to input the Cherokee letter MU, or to say "just enter this character Ꮍ."
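When writing up the bug, Python's standard `unicodedata` module can supply the official character name alongside the U+ notation, which removes any ambiguity about which character is meant. The helper name `describe_code_point` is hypothetical.

```python
import unicodedata


def describe_code_point(ch: str) -> str:
    """Format a single character as 'U+XXXX NAME' for a bug report."""
    cp = ord(ch)  # raises TypeError unless ch is exactly one code point
    name = unicodedata.name(ch, "<unnamed>")  # control characters have no name
    return f"U+{cp:04X} {name}"
```

For example, `describe_code_point("Ꮍ")` returns `"U+13BD CHEROKEE LETTER MU"`.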

String Decoder can also be used to compare different Unicode Transformation Format (UTF) encodings, or to convert between the Unicode hex value and the 32-bit integer value of a character.
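Converting between a U+ hex value and a 32-bit integer value, and comparing how many bytes each UTF needs for a character, takes only a few lines. The helpers below (`hex_to_int`, `int_to_hex`, `encoding_sizes`) are hypothetical illustrations, not part of String Decoder.

```python
from typing import Dict

MAX_CODE_POINT = 0x10FFFF  # the top of the Unicode code space


def hex_to_int(value: str) -> int:
    """Convert 'U+13BD', '0x13BD', or '13BD' to its integer code point."""
    text = value.strip().upper()
    for prefix in ("U+", "0X"):
        if text.startswith(prefix):
            text = text[len(prefix):]
            break
    cp = int(text, 16)
    if not 0 <= cp <= MAX_CODE_POINT:
        raise ValueError(f"{value!r} is outside the Unicode code space")
    return cp


def int_to_hex(cp: int) -> str:
    """Convert an integer code point to U+ notation (at least four hex digits)."""
    if not 0 <= cp <= MAX_CODE_POINT:
        raise ValueError(f"{cp} is outside the Unicode code space")
    return f"U+{cp:04X}"


def encoding_sizes(ch: str) -> Dict[str, int]:
    """Number of bytes one character occupies in several UTF encodings."""
    return {enc: len(ch.encode(enc)) for enc in ("utf-8", "utf-16-be", "utf-32-be")}
```

For example, `hex_to_int("U+13BD")` returns `5053`, and `encoding_sizes("Ꮍ")` shows the character takes 3 bytes in UTF-8, 2 in UTF-16, and 4 in UTF-32.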

Let me know what you think!