Get in the game…literally!

You’re the hero, blasting your way through a hostile battlefield, dispatching villains right and left. You feel the power as you control your well-armed, sculpted character through the game. But there is always the nagging feeling: that avatar doesn’t really look like me. Wouldn’t it be great if you could create a fully animated 3D game character that was a recognizable version of yourself?

Well, with the Kinect for Windows v2 sensor and Fuse from Mixamo, you can do just that—no prior knowledge of 3D modelling required. In almost no time, you’ll have a fully armed, animated version of you, ready to insert into selected games and game engines.

[View:https://www.youtube.com/watch?v=Q_T8_yMR0zg]

The magic begins with the Kinect for Windows v2 sensor. You simply pose in front of it while its 1080p camera captures six images of you: four of your body in static poses and two of your face. With enhanced depth sensing (up to three times greater than the original Kinect for Windows sensor) and improved facial and body tracking, the v2 sensor captures your body in incredible 3D detail. It tracks 25 joint positions and, with a mesh of 2,000 points, a wealth of facial detail.

You begin creating your personal 3D avatar by posing in front of the Kinect for Windows v2 sensor.

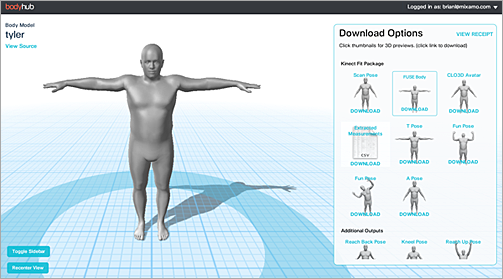

Once you have captured your images with the Kinect sensor, you simply upload them to Body Snap or a similar scanning program, which renders them as a 3D model. The model is then prepared for download as an .obj file that meets Fuse’s import requirements. This step takes place in Body Hub, which, like Body Snap, is a product of Body Labs.

In Body Hub, your 3D model is prepared for download as an .obj file.

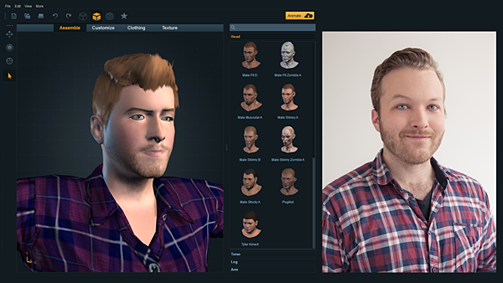
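If you want to sanity-check the downloaded .obj file before importing it into Fuse, a few lines of Python will do. The sketch below is only an illustration and is not part of Body Hub or Fuse: it relies on the fact that Wavefront OBJ is a plain-text format in which `v` lines hold vertex positions and `f` lines hold faces. The function name `summarize_obj` and the one-triangle sample are made up for this example.

```python
def summarize_obj(text):
    """Count vertices and faces in Wavefront OBJ text and find the bounding box."""
    vertices = []
    face_count = 0
    for line in text.splitlines():
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue  # skip blank lines and comments
        if parts[0] == "v":
            # Vertex position: "v x y z"
            vertices.append(tuple(float(c) for c in parts[1:4]))
        elif parts[0] == "f":
            # Face: "f i j k ..." (indices into the vertex list)
            face_count += 1
    if vertices:
        mins = tuple(min(v[i] for v in vertices) for i in range(3))
        maxs = tuple(max(v[i] for v in vertices) for i in range(3))
    else:
        mins = maxs = None
    return {"vertices": len(vertices), "faces": face_count, "min": mins, "max": maxs}


# Hypothetical stand-in for a real scan: a single triangle.
SAMPLE_OBJ = """\
# sample mesh
v 0.0 0.0 0.0
v 1.0 0.0 0.0
v 0.0 2.0 0.0
f 1 2 3
"""

summary = summarize_obj(SAMPLE_OBJ)
print(summary)
```

For a real scan, you would read the file's contents with `open(path).read()` and pass them in. A vertex or face count of zero would suggest the export did not complete.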

Next, you upload the 3D model to Fuse, where you can take advantage of more than 280 “blendshapes” that you can push and pull, sculpting your 3D avatar as much as you want. You can also change your hairstyle and your coloring, as well as choose from a large assortment of clothing.

With your model imported to Fuse, you can customize its shape, hair style, and coloring.

The customization process also gives you an extensive array of wardrobe choices.

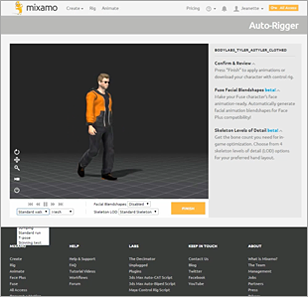

Once you’ve customized your newly scanned image, you export it to Mixamo, where it gets automatically rigged and animated. The process is so simple that it seems almost unreal. Rigging prepares your static 3D model for animation by inserting a 3D skeleton and binding it to the skin of your avatar. Normally, you would need to be a highly skilled technical director to accomplish this, but with Mixamo, any gamer can rig a character. Now you’re ready to save and export your animated self into Garry’s Mod and Team Fortress 2, which are just the first two games that have community-made workflows for Fuse-created characters. Support for exporting directly from Fuse to other popular "modding" games is on the Fuse development roadmap.
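To get a feel for what "binding a skeleton to the skin" means, here is a toy sketch of linear blend skinning, a common technique in which each skin vertex follows a weighted mix of nearby bones. This is not Mixamo's auto-rigger, whose internals are not described here. The two-bone arm, the bone names, and the weights are all invented for illustration, and the example works in 2D and ignores the parent-child bone hierarchy a real rig would have.

```python
import math


def rotate_about(point, pivot, angle):
    """Rotate a 2D point around a pivot by the given angle in radians."""
    x, y = point[0] - pivot[0], point[1] - pivot[1]
    c, s = math.cos(angle), math.sin(angle)
    return (pivot[0] + c * x - s * y, pivot[1] + s * x + c * y)


def skin_vertex(vertex, weights, bone_pivots, bone_angles):
    """Linear blend skinning: weighted average of the vertex as moved by each bone."""
    x = y = 0.0
    for bone, weight in weights.items():
        px, py = rotate_about(vertex, bone_pivots[bone], bone_angles[bone])
        x += weight * px
        y += weight * py
    return (x, y)


# Hypothetical arm: the upper arm stays still, the forearm bends 90 degrees at the elbow.
bone_pivots = {"upper_arm": (0.0, 0.0), "forearm": (1.0, 0.0)}
bone_angles = {"upper_arm": 0.0, "forearm": math.pi / 2}

# A wrist vertex is bound entirely to the forearm.
wrist = skin_vertex((2.0, 0.0), {"forearm": 1.0}, bone_pivots, bone_angles)

# A vertex near the elbow is shared equally by both bones, so it moves only part of the way.
near_elbow = skin_vertex(
    (1.2, 0.0), {"upper_arm": 0.5, "forearm": 0.5}, bone_pivots, bone_angles
)
print(wrist, near_elbow)
```

The weights are what rigging has to get right: a vertex weighted to the wrong bone will stretch or collapse when the character moves, which is why doing this by hand normally takes a specialist.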

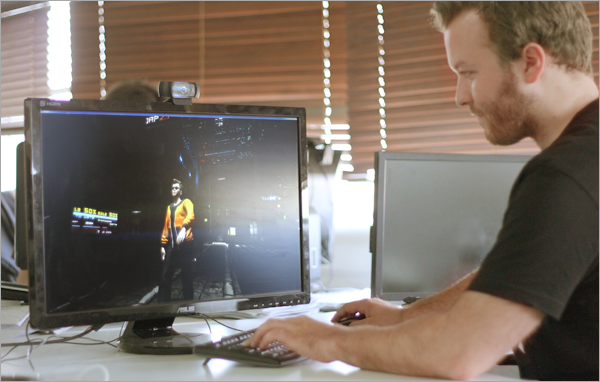

On the left is a customized 3D avatar created from the scans of the gamer on the right.

The beauty of this system is not only its simplicity, but also its speed and relatively low cost. Within just minutes, you can create a fully rigged and animated 3D character. The Kinect for Windows v2 sensor costs just US$199 in the Microsoft Store, and Body Snap from Body Labs is free to download. Fuse can be purchased through Steam for $99, and includes two free auto-rigs per week.

In Mixamo, your avatar really comes to life, as auto-rigging makes it fully animated.

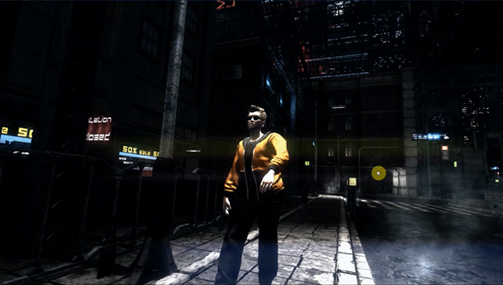

The speed and low cost of this system make it appealing to professional game developers and designers, too, especially since workflows exist for Unity, UDK, Unreal Engine, Source Engine, and Source Filmmaker.

Rigged and ready for action, your personal 3D avatar can be added to games and game engines, as in this shot from a game being developed with Unity.

The folks at Mixamo are committed to making character creation as easy and accessible as possible. “Mixamo’s mission is to make 3D content creation accessible for everyone, and this is another step in that direction,” says Stefano Corazza, CEO of Mixamo. “Kinect for Windows v2 and Fuse make it easier than ever for gamers and game developers to put their likeness into a game. In minutes, the 3D version of you can be running around in a 3D scene.”

And here's the payoff—the gamer plays the 3D avatar of himself. Now that’s putting yourself in the action!

The expertise and equipment required for 3D modeling have long thwarted players and developers who want to add more characters to games, but Kinect for Windows v2 plus Fuse is poised to break down this barrier. Soon, you’ll be able to thrill to an animated version of yourself fulfilling your gaming desires, be it holding off alien hordes or building a virtual community. It’s just one more example of how Kinect for Windows technology and partnerships are enhancing entertainment and creativity.

Kinect for Windows Team

Key links

- Learn more about Kinect for Windows

- Purchase the Kinect for Windows v2 sensor

- See other Kinect for Windows applications in action

- Mixamo

- Follow us on Facebook and Twitter

Comments

Anonymous

August 08, 2014

He is soo dreamy!

Anonymous

August 10, 2014

Woah--nice! Is there an SDK I can use to integrate this into a game?

Anonymous

August 13, 2014

Hello Jenny, Please reach out to Mixamo directly about their Fuse product. Thank you! https://www.mixamo.com/

Anonymous

August 23, 2014

My research group at USC Institute for Creative Technologies (a number of months ago) has put together a pipeline to use the Kinect v1 sensor to capture an avatar and automatically use it in a video game. You can see a video of this here: www.youtube.com/watch The autorigging and animation capabilities are in SmartBody, our open source (LGPL) software: http://smartbody.ict.usc.edu This will also let you save out the model in COLLADA format, which is compatible with most game engines. We leverage the superscan capability of the v1 sensor, which used the v1's motor. Unfortunately, since the v2 doesn't have a motor, we can't simply port this capability to the new sensor, although we are looking for other ways to achieve the same effect with v2. If you can create a scan using your own methods (perhaps using Kinect Fusion with the v2 sensor) you can still have SmartBody autorig and skin and animate the model. Regards, Ari Shapiro Research Scientist Institute for Creative Technologies University of Southern California shapiro@ict.usc.edu

Anonymous

September 10, 2014

I have literally wanted to do this forever... I am a modeler and aspiring game dev.... I literally can't use my xbox's kinect for this? I will gladly buy an adapter cord... C'mon Microsoft....

Anonymous

September 10, 2014

Wow! How I wish I can get step by step tutorials for this.

Anonymous

October 17, 2014

Just to point out a few differences with the smartbody script, Mixamo auto-rigger www.mixamo.com/auto-rigger is fully automated (0 clicks), pose-invariant, works with any/multiple meshes and is provided as a cloud-service. You can upload OBJ or FBX and download FBX or Collada. The rigged characters coming out of Fuse also have facial blendshapes. Free control scripts are available for Maya and 3dsMax to animate the downloaded rig. Mixamo has workflows with every 3D software, for more information see www.mixamo.com/workflows Thanks. Stefano Corazza, PhD contact@mixamo.com