Supported data types

Note

The Time Series Insights service will be retired on 7 July 2024. Consider migrating existing environments to alternative solutions as soon as possible. For more information on the deprecation and migration, visit our documentation.

The following table lists the data types supported by Azure Time Series Insights Gen2:

| Data type | Description | Example | Time Series Expression syntax | Property column name in Parquet |
|---|---|---|---|---|
| bool | A data type with one of two states: true or false. | "isQuestionable": true | $event.isQuestionable.Bool or $event['isQuestionable'].Bool | isQuestionable_bool |
| datetime | Represents an instant in time, typically expressed as a date and time of day, in ISO 8601 format. Datetime properties are always stored in UTC. Time zone offsets, if correctly formatted, are applied and the value is then stored in UTC. See this section for more information on the environment timestamp property and datetime offsets. | "eventProcessedLocalTime": "2020-03-20T09:03:32.8301668Z" | If "eventProcessedLocalTime" is the event source timestamp: $event.$ts. If it's another JSON property: $event.eventProcessedLocalTime.DateTime or $event['eventProcessedLocalTime'].DateTime | eventProcessedLocalTime_datetime |
| double | A double-precision 64-bit floating-point number. | "value": 31.0482941 | $event.value.Double or $event['value'].Double | value_double |
| long | A signed 64-bit integer. | "value": 31 | $event.value.Long or $event['value'].Long | value_long |
| string | Text values, which must be valid UTF-8. Null and empty strings are treated the same. | "site": "DIM_MLGGG" | $event.site.String or $event['site'].String | site_string |
| dynamic | A complex (non-primitive) type consisting of either an array or a property bag (dictionary). Currently, only stringified JSON arrays of primitives, or arrays of objects that don't contain the TS ID or timestamp property(ies), are stored as dynamic. Read this article to understand how objects are flattened and how arrays may be unrolled. Payload properties stored as this type are accessible only by selecting Explore Events in the Time Series Insights Explorer to view raw events, or through the GetEvents Query API for client-side parsing. | "values": "[197, 194, 189, 188]" | Referencing dynamic types in a Time Series Expression is not yet supported. | values_dynamic |
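
The naming conventions in the table can be sketched in a few lines of Python. This is an illustration only, not part of any Time Series Insights SDK: the helper names (`tsx_reference`, `parquet_column`, `to_utc_iso`) are hypothetical. It builds the two TSX reference forms and the `<property>_<type>` Parquet column name shown in each row, and shows how a datetime with an offset maps to a UTC instant. It does not cover the `$event.$ts` form used for the event source timestamp, and treating a value without an offset as UTC is an assumption.

```python
from datetime import datetime, timezone
from typing import Tuple

# Type names used in Time Series Expression (TSX) references, per the table.
TSX_TYPE_NAMES = {
    "bool": "Bool",
    "datetime": "DateTime",
    "double": "Double",
    "long": "Long",
    "string": "String",
}

# All stored types, including dynamic, which TSX cannot reference yet.
STORED_TYPES = set(TSX_TYPE_NAMES) | {"dynamic"}


def tsx_reference(prop: str, data_type: str) -> Tuple[str, str]:
    """Return the dot form and the bracket form of a TSX property reference."""
    if data_type == "dynamic":
        raise ValueError("dynamic properties cannot be referenced in TSX yet")
    type_name = TSX_TYPE_NAMES[data_type]
    return f"$event.{prop}.{type_name}", f"$event['{prop}'].{type_name}"


def parquet_column(prop: str, data_type: str) -> str:
    """Build the Parquet column name: <property>_<data type>."""
    if data_type not in STORED_TYPES:
        raise ValueError(f"unsupported data type: {data_type}")
    return f"{prop}_{data_type}"


def to_utc_iso(value: str) -> str:
    """Apply an ISO 8601 offset and express the same instant in UTC.

    Assumption: a value without an offset is treated as already in UTC.
    """
    dt = datetime.fromisoformat(value)
    if dt.tzinfo is None:
        dt = dt.replace(tzinfo=timezone.utc)
    return dt.astimezone(timezone.utc).isoformat().replace("+00:00", "Z")


print(tsx_reference("site", "string"))  # ('$event.site.String', "$event['site'].String")
print(parquet_column("values", "dynamic"))  # values_dynamic
print(to_utc_iso("2020-03-20T10:03:32+01:00"))  # 2020-03-20T09:03:32Z
```
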

Note

64-bit integer values are supported, but the largest number that the Azure Time Series Insights Explorer can safely express is 9,007,199,254,740,991 (2^53-1) because of JavaScript limitations. If your data model contains numbers larger than this, you can reduce their size by creating a Time Series Model variable that converts the value.
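
The limit comes from JavaScript numbers being IEEE 754 doubles, which represent every integer exactly only up to 2^53-1. Python floats use the same format, so the effect can be reproduced locally. The sketch below is a hypothetical client-side check, not part of the Explorer; it only tests the range and does not perform the Time Series Model conversion.

```python
# JavaScript's Number.MAX_SAFE_INTEGER: beyond this, not every integer
# has an exact IEEE 754 double representation.
MAX_SAFE_INTEGER = 2**53 - 1  # 9,007,199,254,740,991


def is_safe_for_explorer(value: int) -> bool:
    """True if a long value survives conversion to a JavaScript number unchanged."""
    return -MAX_SAFE_INTEGER <= value <= MAX_SAFE_INTEGER


# Python floats are IEEE 754 doubles, like JavaScript numbers, so the same
# rounding is visible here: 2**53 + 1 rounds to 2**53.
print(float(2**53 + 1) == float(2**53))  # True
print(is_safe_for_explorer(9_007_199_254_740_991))  # True
print(is_safe_for_explorer(9_007_199_254_740_992))  # False
```
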

Note

String type is not nullable:

- A Time Series Expression (TSX) in a Time Series Query that compares a string value against NULL behaves the same way as one that compares it against an empty string (''): $event.siteid.String = NULL is equivalent to $event.siteid.String = ''.
- The API may return NULL values even if the original events contained empty strings.
- Do not depend on NULL values in String columns for comparisons or evaluations; treat them the same way as empty strings.
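
On the client side, following this advice means normalizing NULL to an empty string before comparing values read from a String column. A minimal sketch, assuming query results surface NULL as Python `None`; both helper names are hypothetical.

```python
from typing import Optional


def normalize_tsi_string(value: Optional[str]) -> str:
    """Map NULL (None) to '' so both are handled as the same value."""
    return "" if value is None else value


def tsi_strings_equal(a: Optional[str], b: Optional[str]) -> bool:
    """Compare two String-column values, treating NULL and '' as equal."""
    return normalize_tsi_string(a) == normalize_tsi_string(b)


# A site ID returned as NULL matches an event that originally sent "".
print(tsi_strings_equal(None, ""))  # True
print(tsi_strings_equal(None, "DIM_MLGGG"))  # False
```
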

Sending mixed data types

Your Azure Time Series Insights Gen2 environment is strongly typed. If devices or tags send data of different types for the same device property, the values are stored in two separate columns, and you should use the coalesce() function when defining your Time Series Model variable expressions in API calls.

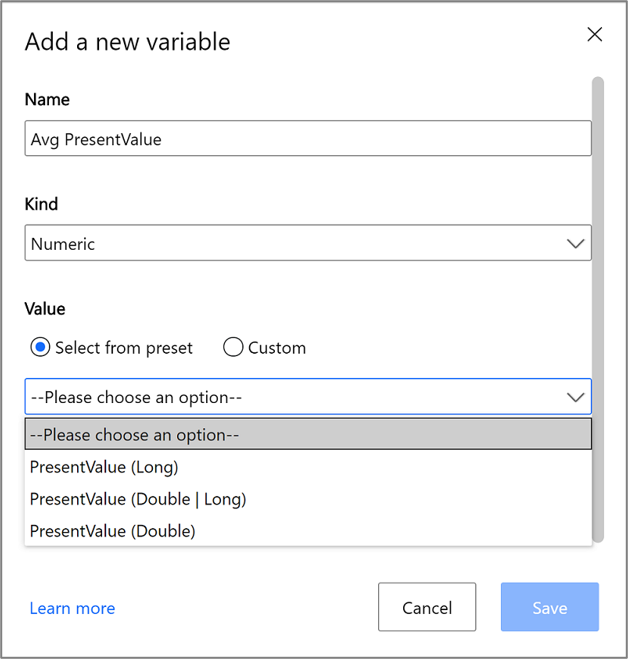

The Azure Time Series Insights Explorer can automatically coalesce the separate columns of the same device property. In the example shown, the sensor sends a PresentValue property that can be either a Long or a Double. To query all stored values of the PresentValue property regardless of data type, choose PresentValue (Double | Long), and the columns are coalesced for you.
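
Coalescing takes, for each event, the first column that holds a value. The sketch below applies that logic client side to hypothetical rows whose keys follow the `<property>_<type>` Parquet naming convention (PresentValue_double, PresentValue_long); the row shape is an assumption, not an API response format. In a Time Series Model variable the equivalent expression would typically look like coalesce($event.PresentValue.Double, toDouble($event.PresentValue.Long)), assuming TSX's toDouble() conversion function.

```python
from typing import Any, Mapping, Optional


def coalesce(*values: Any) -> Any:
    """Return the first argument that is not None, or None if all are."""
    for value in values:
        if value is not None:
            return value
    return None


def present_value(row: Mapping[str, Any]) -> Optional[float]:
    """Merge the double and long columns of PresentValue into one number."""
    long_value = row.get("PresentValue_long")
    return coalesce(
        row.get("PresentValue_double"),
        # Convert the long to a double so every result has the same type.
        float(long_value) if long_value is not None else None,
    )


rows = [
    {"PresentValue_double": 21.5, "PresentValue_long": None},
    {"PresentValue_double": None, "PresentValue_long": 22},
]
print([present_value(r) for r in rows])  # [21.5, 22.0]
```

Converting the long to a double mirrors the Explorer's single PresentValue (Double | Long) series: every coalesced value comes back with one numeric type.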

Objects and arrays

You may send complex types such as objects and arrays as part of your event payload. Nested objects are flattened, and arrays are either stored as dynamic or flattened to produce multiple events, depending on your environment configuration and JSON shape. To learn more, read about the JSON flattening and escaping rules.
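
As a rough picture of object flattening, the sketch below joins nested property names into single columns and keeps arrays as stringified JSON, matching the dynamic example in the table. Two simplifications are assumptions to check against the flattening rules: the underscore delimiter, and storing every array as dynamic (the real pipeline may instead unroll some arrays into multiple events). The function name is hypothetical.

```python
import json
from typing import Any, Dict


def flatten_event(event: Dict[str, Any], delimiter: str = "_") -> Dict[str, Any]:
    """Flatten nested objects; keep arrays as stringified JSON (dynamic).

    Assumptions: '_' joins nested names, and no array is ever unrolled.
    """
    flat: Dict[str, Any] = {}

    def walk(prefix: str, value: Any) -> None:
        if isinstance(value, dict):
            for key, child in value.items():
                walk(f"{prefix}{delimiter}{key}" if prefix else key, child)
        elif isinstance(value, list):
            flat[prefix] = json.dumps(value)  # stored as a dynamic string
        else:
            flat[prefix] = value  # primitive: bool, number, or string

    walk("", event)
    return flat


payload = {
    "id": "dev1",
    "data": {"temp": 21.5, "flow": {"rate": 3}},
    "values": [197, 194, 189, 188],
}
print(flatten_event(payload))
# {'id': 'dev1', 'data_temp': 21.5, 'data_flow_rate': 3, 'values': '[197, 194, 189, 188]'}
```
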

Next steps

Read the JSON flattening and escaping rules to understand how events will be stored.

Understand your environment's throughput limitations.

Learn about event sources to ingest streaming data.