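Azure OpenAI GPT-4o-mini fine-tuning tutorial

This tutorial walks you through fine-tuning a gpt-4o-mini-2024-07-18 model.

In this tutorial you learn how to:

- Create sample fine-tuning datasets.

- Create environment variables for your resource endpoint and API key.

- Prepare your sample training and validation datasets for fine-tuning.

- Upload your training file and validation file for fine-tuning.

- Create a fine-tuning job for gpt-4o-mini-2024-07-18.

- Deploy a custom fine-tuned model.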

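Prerequisites

- An Azure subscription - Create one for free.

- Python 3.8 or a later version

- The following Python libraries: json, requests, os, tiktoken, time, openai, numpy.

- Jupyter Notebooks

- An Azure OpenAI resource in a region where gpt-4o-mini-2024-07-18 fine-tuning is available. If you don't have a resource, the process of creating one is documented in our resource deployment guide.

- Fine-tuning access requires the Cognitive Services OpenAI Contributor role.

- If you don't already have access to view quota and deploy models in the Azure AI Foundry portal, you need additional permissions.

Important

We recommend reviewing the pricing information for fine-tuning to familiarize yourself with the associated costs. Testing this tutorial resulted in 48,000 tokens being billed (4,800 training tokens * 10 epochs of training). Training costs are in addition to the costs associated with fine-tuning inference and the hourly hosting costs of having a fine-tuned model deployed. Once you have completed the tutorial, you should delete your fine-tuned model deployment, otherwise you continue to incur the hourly hosting cost.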
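The billed-token figure quoted above is training tokens multiplied by the number of epochs. The following is a minimal sketch of that arithmetic; the function name `billed_training_tokens` is a hypothetical helper introduced for illustration, and no price per token is assumed here because pricing varies by region and over time.

```python
def billed_training_tokens(tokens_per_epoch: int, n_epochs: int) -> int:
    """Return the number of training tokens billed for a fine-tuning run."""
    if tokens_per_epoch < 0 or n_epochs < 0:
        raise ValueError("token and epoch counts must be non-negative")
    return tokens_per_epoch * n_epochs


# Figures quoted in this tutorial: 4,800 training tokens over 10 epochs.
tutorial_tokens = billed_training_tokens(4_800, 10)
print(tutorial_tokens)  # 48000
```

To estimate a training cost, multiply the result by the per-token training price listed on the pricing page for your region. Hosting and inference costs are separate.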

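Set up

Python libraries

This tutorial provides examples of some of the latest OpenAI features, including seed/events/checkpoints. To take advantage of these features, you might need to run pip install openai --upgrade to upgrade to the latest release.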

pip install openai requests tiktoken numpy

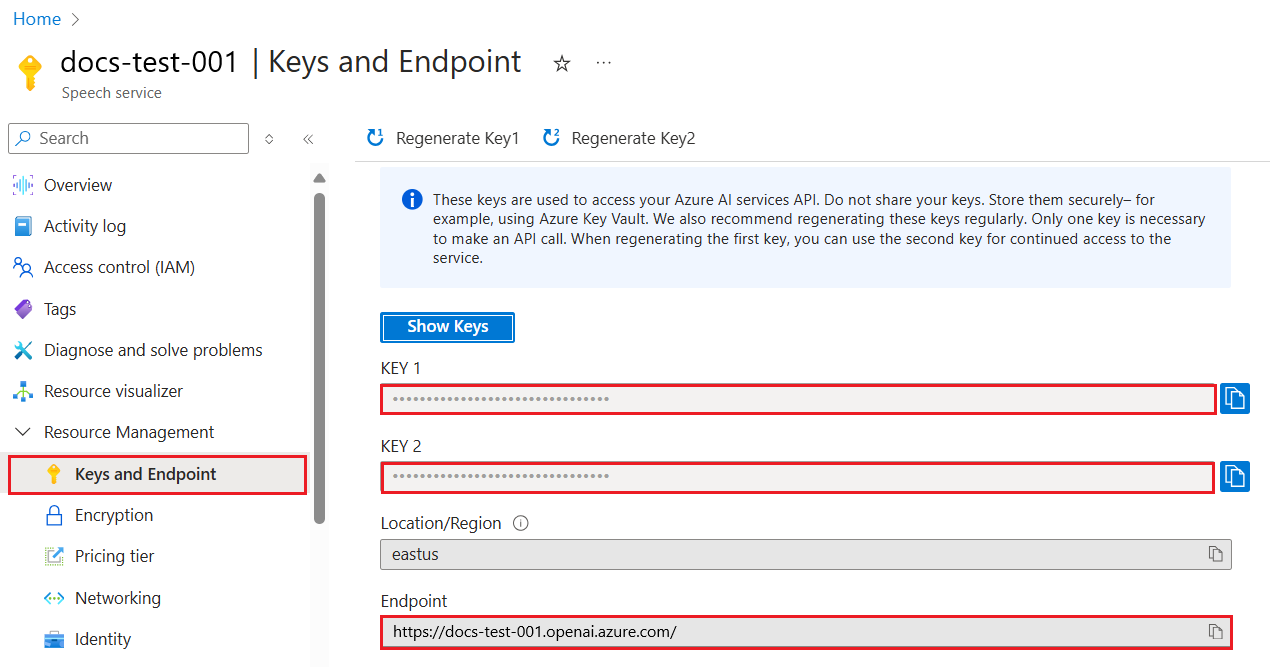

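Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | The service endpoint can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the endpoint via the Deployments page in the Azure AI Foundry portal. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key, because you need both to authenticate your API calls. You can use either KEY1 or KEY2. Always having two keys lets you securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

Important

If you use an API key, store it securely somewhere else, such as in Azure Key Vault. Don't include the API key directly in your code, and never post it publicly.

For more information about AI services security, see Authenticate requests to Azure AI services.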

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"
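Before running the rest of the notebook, it can help to confirm that both variables are visible to Python. The following is a minimal sketch; `load_azure_openai_settings` is a hypothetical helper name, not part of the openai library. It reads the two variable names used in this tutorial and fails early with a clear message if either is missing.

```python
import os


def load_azure_openai_settings(env=os.environ):
    """Read the endpoint and key from environment variables.

    Raises RuntimeError naming every variable that is missing or empty.
    """
    required = ("AZURE_OPENAI_ENDPOINT", "AZURE_OPENAI_API_KEY")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in required}


# Usage (uncomment in your notebook):
# settings = load_azure_openai_settings()
# print("Endpoint:", settings["AZURE_OPENAI_ENDPOINT"])
```

Values written with setx are only picked up by processes started afterwards, so restart your terminal or Jupyter server if the check reports a missing variable.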

Create a sample dataset

Fine-tuning gpt-4o-mini-2024-07-18 requires a specially formatted JSONL training file. OpenAI provides the following example in their documentation:

{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the capital of France?"}, {"role": "assistant", "content": "Paris, as if everyone doesn't know that already."}]}

{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who wrote 'Romeo and Juliet'?"}, {"role": "assistant", "content": "Oh, just some guy named William Shakespeare. Ever heard of him?"}]}

{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "How far is the Moon from Earth?"}, {"role": "assistant", "content": "Around 384,400 kilometers. Give or take a few, like that really matters."}]}

For this example, we modify it slightly by renaming the chatbot from Marv to Clippy:

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the capital of France?"}, {"role": "assistant", "content": "Paris, as if everyone doesn't know that already."}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who wrote 'Romeo and Juliet'?"}, {"role": "assistant", "content": "Oh, just some guy named William Shakespeare. Ever heard of him?"}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "How far is the Moon from Earth?"}, {"role": "assistant", "content": "Around 384,400 kilometers. Give or take a few, like that really matters."}]}

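While these three examples are helpful to give you the general format, you need more examples if you want to steer your custom fine-tuned model to respond in a similar way. Generally, you want at least 50 high-quality examples to start out. However, it's entirely possible to have a use case that requires 1,000 high-quality training examples to be successful.

In general, doubling the dataset size can lead to a linear increase in model quality. But keep in mind that low-quality examples can negatively affect performance. If you train the model on a large amount of internal data without first pruning the dataset for only the highest quality examples, you could end up with a model that performs much worse than expected.

You need to create two files: training_set.jsonl and validation_set.jsonl.

Create the files in the same directory in which you're running the Jupyter Notebook, and copy the contents of the following code blocks to the corresponding files: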

training_set.jsonl

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who discovered Antarctica?"}, {"role": "assistant", "content": "Some chaps named Fabian Gottlieb von Bellingshausen and Mikhail Lazarev, as if they don't teach that in every school!"}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What is the biggest ocean?"}, {"role": "assistant", "content": "The Pacific Ocean. It's not like it's a small pond or anything."}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What is the largest planet?"}, {"role": "assistant", "content": "It's called Jupiter, you might have heard of it...or not."}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who painted the Mona Lisa?"}, {"role": "assistant", "content": "Oh, some obscure artist named Leonardo da Vinci. Not that he is famous or anything."}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the boiling point of water?"}, {"role": "assistant", "content": "Exciting fact of the day: it's 100 degrees Celsius. Life-changing, isn't it?"}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who wrote 'The Odyssey'?"}, {"role": "assistant", "content": "Oh, just some poet named Homer. Nothing special, really."}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What is the largest mammal?"}, {"role": "assistant", "content": "Blue Whale. Quite a big fish isn't it? Oh wait, it's not a fish!"}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the highest mountain peak?"}, {"role": "assistant", "content": "Everest. Brace yourself, it's not a walk in the park."}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who invented the telephone?"}, {"role": "assistant", "content": "A guy named Alexander Graham Bell. Bet you didn't guess that one!"}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What is the distance from Earth to the Sun?"}, {"role": "assistant", "content": "About 93 million miles. Just a quick drive, really."}]}

validation_set.jsonl

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the capital of Australia?"}, {"role": "assistant", "content": "It's Canberra, not Sydney. Shocking, I know!"}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who wrote 'The Great Gatsby'?"}, {"role": "assistant", "content": "Some guy named F. Scott Fitzgerald. Ever heard of him?"}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the deepest part of the ocean?"}, {"role": "assistant", "content": "Mariana Trench, makes your swimming pool seem a bit shallow, doesn't it?"}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who composed the 'Moonlight Sonata'?"}, {"role": "assistant", "content": "Just a little-known guy named Beethoven. No big deal."}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What are the colors of the rainbow?"}, {"role": "assistant", "content": "ROYGBIV. Red, orange, yellow, green, blue, indigo, violet. Not that we learned this in preschool or anything!"}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What is the smallest planet?"}, {"role": "assistant", "content": "Meet Mercury, the runt of the solar system family."}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who wrote 'Pride and Prejudice'?"}, {"role": "assistant", "content": "Do the words Jane Austen ring a bell? No? Well, she wrote it."}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the largest desert?"}, {"role": "assistant", "content": "Antarctica. Surprise, surprise! Deserts aren't just full of sand, you know."}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What is the longest river?"}, {"role": "assistant", "content": "The Nile River. It's not like it's famous or anything."}]}

{"messages": [{"role": "system", "content": "Clippy is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the capital of Germany?"}, {"role": "assistant", "content": "Berlin. Shocking news, right?"}]}

Now you need to run some preliminary checks on your training and validation files.

# Run preliminary checks

import json

# Load the training set
with open('training_set.jsonl', 'r', encoding='utf-8') as f:
    training_dataset = [json.loads(line) for line in f]

# Training dataset stats
print("Number of examples in training set:", len(training_dataset))
print("First example in training set:")
for message in training_dataset[0]["messages"]:
    print(message)

# Load the validation set
with open('validation_set.jsonl', 'r', encoding='utf-8') as f:
    validation_dataset = [json.loads(line) for line in f]

# Validation dataset stats
print("\nNumber of examples in validation set:", len(validation_dataset))
print("First example in validation set:")
for message in validation_dataset[0]["messages"]:
    print(message)

Output:

Number of examples in training set: 10

First example in training set:

{'role': 'system', 'content': 'Clippy is a factual chatbot that is also sarcastic.'}

{'role': 'user', 'content': 'Who discovered Antarctica?'}

{'role': 'assistant', 'content': "Some chaps named Fabian Gottlieb von Bellingshausen and Mikhail Lazarev, as if they don't teach that in every school!"}

Number of examples in validation set: 10

First example in validation set:

{'role': 'system', 'content': 'Clippy is a factual chatbot that is also sarcastic.'}

{'role': 'user', 'content': "What's the capital of Australia?"}

{'role': 'assistant', 'content': "It's Canberra, not Sydney. Shocking, I know!"}

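In this case we only have 10 training and 10 validation examples, so while this demonstrates the basic mechanics of fine-tuning a model, it's unlikely to be enough examples to produce a consistently noticeable effect.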
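Beyond counting examples, you may also want to confirm that every line follows the chat format shown in the sample files. The sketch below is one way to do that with only the standard library. The helper name `find_format_errors` and the set of allowed roles are assumptions based on the roles used in this tutorial; the service may accept other roles or fields that this check would flag.

```python
import json

# Roles that appear in this tutorial's sample data (an assumption, not an exhaustive list).
ALLOWED_ROLES = {"system", "user", "assistant"}


def find_format_errors(jsonl_lines):
    """Return a list of (line_number, problem) tuples for chat-format issues."""
    problems = []
    for line_number, line in enumerate(jsonl_lines, start=1):
        try:
            example = json.loads(line)
        except json.JSONDecodeError:
            problems.append((line_number, "not valid JSON"))
            continue
        messages = example.get("messages") if isinstance(example, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append((line_number, "missing or empty 'messages' list"))
            continue
        for message in messages:
            if not isinstance(message, dict):
                problems.append((line_number, "message is not an object"))
            elif message.get("role") not in ALLOWED_ROLES:
                problems.append((line_number, "unexpected role"))
            elif not isinstance(message.get("content"), str):
                problems.append((line_number, "content is not a string"))
        # Each training example needs a response for the model to learn from.
        if not any(isinstance(m, dict) and m.get("role") == "assistant" for m in messages):
            problems.append((line_number, "no assistant message"))
    return problems


# Usage (uncomment in your notebook):
# with open("training_set.jsonl", encoding="utf-8") as f:
#     print(find_format_errors(f))
```

An empty list means no issues were found by these particular checks; it does not guarantee that the service will accept the file.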

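Now you can use the tiktoken library to validate the token counts. Token counts from this method don't give you the exact token counts used for fine-tuning, but they should provide a good estimate.

Note

Individual examples need to remain under the gpt-4o-mini-2024-07-18 model's current training example context length of 64,536 tokens. The model's input token limit remains 128,000 tokens.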
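Once you have an estimated token count for each example, a simple comparison against the limit in the note above identifies examples that are too long. This is a minimal sketch: `find_oversized_examples` is a hypothetical helper, the limit is the figure quoted in this tutorial, and treating a count exactly equal to the limit as too long is a conservative assumption.

```python
# Training example context length quoted in this tutorial for gpt-4o-mini-2024-07-18.
TRAINING_EXAMPLE_TOKEN_LIMIT = 64_536


def find_oversized_examples(token_counts, limit=TRAINING_EXAMPLE_TOKEN_LIMIT):
    """Return the zero-based indices of examples that do not stay under the limit."""
    return [index for index, count in enumerate(token_counts) if count >= limit]


# The sample examples in this tutorial are only a few dozen tokens each.
print(find_oversized_examples([46, 59, 48]))  # []
```

Because tiktoken counts are estimates, it is reasonable to leave some headroom rather than aim for examples that sit right at the limit.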

# Validate token counts

import json

import numpy as np
import tiktoken

encoding = tiktoken.get_encoding("o200k_base")  # default encoding for gpt-4o models. This requires the latest version of tiktoken to be installed.

def num_tokens_from_messages(messages, tokens_per_message=3, tokens_per_name=1):
    # Estimate the total tokens in one example, including per-message overhead.
    num_tokens = 0
    for message in messages:
        num_tokens += tokens_per_message
        for key, value in message.items():
            num_tokens += len(encoding.encode(value))
            if key == "name":
                num_tokens += tokens_per_name
    num_tokens += 3
    return num_tokens

def num_assistant_tokens_from_messages(messages):
    # Count only the tokens in assistant replies.
    num_tokens = 0
    for message in messages:
        if message["role"] == "assistant":
            num_tokens += len(encoding.encode(message["content"]))
    return num_tokens

def print_distribution(values, name):
    print(f"\n#### Distribution of {name}:")
    print(f"min / max: {min(values)}, {max(values)}")
    print(f"mean / median: {np.mean(values)}, {np.median(values)}")
    # The quantiles are 0.1 and 0.9, so the label is p10 / p90.
    print(f"p10 / p90: {np.quantile(values, 0.1)}, {np.quantile(values, 0.9)}")

files = ['training_set.jsonl', 'validation_set.jsonl']

for file in files:
    print(f"Processing file: {file}")
    with open(file, 'r', encoding='utf-8') as f:
        dataset = [json.loads(line) for line in f]

    total_tokens = []
    assistant_tokens = []

    for ex in dataset:
        messages = ex.get("messages", {})
        total_tokens.append(num_tokens_from_messages(messages))
        assistant_tokens.append(num_assistant_tokens_from_messages(messages))

    print_distribution(total_tokens, "total tokens")
    print_distribution(assistant_tokens, "assistant tokens")
    print('*' * 50)

Output:

Processing file: training_set.jsonl

#### Distribution of total tokens:
min / max: 46, 59
mean / median: 49.8, 48.5
p10 / p90: 46.0, 53.599999999999994

#### Distribution of assistant tokens:
min / max: 13, 28
mean / median: 16.5, 14.0
p10 / p90: 13.0, 19.9
**************************************************
Processing file: validation_set.jsonl

#### Distribution of total tokens:
min / max: 41, 64
mean / median: 48.9, 47.0
p10 / p90: 43.7, 54.099999999999994

#### Distribution of assistant tokens:
min / max: 8, 29
mean / median: 15.0, 12.5
p10 / p90: 10.7, 19.999999999999996
**************************************************

Upload fine-tuning files

# Upload fine-tuning files

import os
from openai import AzureOpenAI

client = AzureOpenAI(
    azure_endpoint = os.getenv("AZURE_OPENAI_ENDPOINT"),
    api_key = os.getenv("AZURE_OPENAI_API_KEY"),
    api_version = "2024-08-01-preview"  # This API version or later is required to access seed/events/checkpoint features
)

training_file_name = 'training_set.jsonl'
validation_file_name = 'validation_set.jsonl'

# Upload the training and validation dataset files to Azure OpenAI with the SDK.
training_response = client.files.create(
    file = open(training_file_name, "rb"), purpose="fine-tune"
)
training_file_id = training_response.id

validation_response = client.files.create(
    file = open(validation_file_name, "rb"), purpose="fine-tune"
)
validation_file_id = validation_response.id

print("Training file ID:", training_file_id)
print("Validation file ID:", validation_file_id)

Output:

Training file ID: file-0e3aa3f2e81e49a5b8b96166ea214626

Validation file ID: file-8556c3bb41b7416bb7519b47fcd1dd6b

Begin fine-tuning

Now that the fine-tuning files have been successfully uploaded, you can submit your fine-tuning training job.

In this example we also pass the seed parameter. The seed controls the reproducibility of the job. Passing in the same seed and job parameters should produce the same results, but they can differ in rare cases. If a seed isn't specified, one is generated for you.

# Submit fine-tuning training job

response = client.fine_tuning.jobs.create(
    training_file = training_file_id,
    validation_file = validation_file_id,
    model = "gpt-4o-mini-2024-07-18",  # Enter base model name. Note that in Azure OpenAI the model name contains dashes and cannot contain dot/period characters.
    seed = 105  # seed parameter controls reproducibility of the fine-tuning job. If no seed is specified one will be generated automatically.
)

job_id = response.id

# You can use the job ID to monitor the status of the fine-tuning job.
# The fine-tuning job will take some time to start and complete.

print("Job ID:", response.id)
print("Status:", response.status)
print(response.model_dump_json(indent=2))

Python 1.x output:

Job ID: ftjob-900fcfc7ea1d4360a9f0cb1697b4eaa6

Status: pending

{

"id": "ftjob-900fcfc7ea1d4360a9f0cb1697b4eaa6",

"created_at": 1715824115,

"error": null,

"fine_tuned_model": null,

"finished_at": null,

"hyperparameters": {

"n_epochs": -1,

"batch_size": -1,

"learning_rate_multiplier": 1

},

"model": "gpt-4o-mini-2024-07-18",

"object": "fine_tuning.job",

"organization_id": null,

"result_files": null,

"seed": 105,

"status": "pending",

"trained_tokens": null,

"training_file": "file-0e3aa3f2e81e49a5b8b96166ea214626",

"validation_file": "file-8556c3bb41b7416bb7519b47fcd1dd6b",

"estimated_finish": null,

"integrations": null

}

Track training job status

If you would like to poll the training job status until it's complete, you can run:

# Track training status

from IPython.display import clear_output
import time

start_time = time.time()

# Get the status of our fine-tuning job.
response = client.fine_tuning.jobs.retrieve(job_id)

status = response.status

# If the job isn't done yet, poll it every 10 seconds.
while status not in ["succeeded", "failed"]:
    time.sleep(10)

    response = client.fine_tuning.jobs.retrieve(job_id)
    print(response.model_dump_json(indent=2))
    print("Elapsed time: {} minutes {} seconds".format(int((time.time() - start_time) // 60), int((time.time() - start_time) % 60)))
    status = response.status
    print(f'Status: {status}')
    clear_output(wait=True)

print(f'Fine-tuning job {job_id} finished with status: {status}')

# List all fine-tuning jobs for this resource.
print('Checking other fine-tune jobs for this resource.')
response = client.fine_tuning.jobs.list()
print(f'Found {len(response.data)} fine-tune jobs.')

Python 1.x output:

Job ID: ftjob-900fcfc7ea1d4360a9f0cb1697b4eaa6

Status: pending

{

"id": "ftjob-900fcfc7ea1d4360a9f0cb1697b4eaa6",

"created_at": 1715824115,

"error": null,

"fine_tuned_model": null,

"finished_at": null,

"hyperparameters": {

"n_epochs": -1,

"batch_size": -1,

"learning_rate_multiplier": 1

},

"model": "gpt-4o-mini-2024-07-18",

"object": "fine_tuning.job",

"organization_id": null,

"result_files": null,

"seed": 105,

"status": "pending",

"trained_tokens": null,

"training_file": "file-0e3aa3f2e81e49a5b8b96166ea214626",

"validation_file": "file-8556c3bb41b7416bb7519b47fcd1dd6b",

"estimated_finish": null,

"integrations": null

}

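It isn't unusual for training to take more than an hour to complete. Once training is complete, the output message changes to something like: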

Fine-tuning job ftjob-900fcfc7ea1d4360a9f0cb1697b4eaa6 finished with status: succeeded

Checking other fine-tune jobs for this resource.

Found 4 fine-tune jobs.
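Because training can run for an hour or more, an unattended notebook may benefit from a polling loop that gives up after a deadline instead of waiting indefinitely. The following is a minimal sketch of that idea. `wait_for_job` and its parameters are hypothetical names, the three-hour default timeout is an arbitrary assumption, and the terminal statuses are the two used by the loop in this tutorial.

```python
import time

# Statuses that this tutorial's polling loop treats as final.
TERMINAL_STATUSES = {"succeeded", "failed"}


def wait_for_job(get_status, poll_seconds=10, timeout_seconds=3 * 60 * 60,
                 sleep=time.sleep, clock=time.monotonic):
    """Poll get_status() until it returns a terminal status or the timeout elapses.

    get_status is any zero-argument callable that returns the job status string.
    sleep and clock are injectable so the function can be tested without waiting.
    """
    deadline = clock() + timeout_seconds
    while True:
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        if clock() >= deadline:
            raise TimeoutError(f"job still '{status}' after {timeout_seconds} seconds")
        sleep(poll_seconds)


# Usage with the client from this tutorial (uncomment in your notebook):
# final = wait_for_job(lambda: client.fine_tuning.jobs.retrieve(job_id).status)
```

Passing the status lookup as a callable keeps the loop independent of the SDK, so the same function works with any way of retrieving the job status.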

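List fine-tuning events

API version: 2024-08-01-preview or later is required for this command.

While not necessary to complete fine-tuning, it can be helpful to examine the individual fine-tuning events that were generated during training. The full training results can also be examined after training is complete in the training results file.

response = client.fine_tuning.jobs.list_events(fine_tuning_job_id=job_id, limit=10)
print(response.model_dump_json(indent=2))

Python 1.x output: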

{

"data": [

{

"id": "ftevent-179d02d6178f4a0486516ff8cbcdbfb6",

"created_at": 1715826339,

"level": "info",

"message": "Training hours billed: 0.500",

"object": "fine_tuning.job.event",

"type": "message"

},

{

"id": "ftevent-467bc5e766224e97b5561055dc4c39c0",

"created_at": 1715826339,

"level": "info",

"message": "Completed results file: file-175c81c590074388bdb49e8e0d91bac3",

"object": "fine_tuning.job.event",

"type": "message"

},

{

"id": "ftevent-a30c44da4c304180b327c3be3a7a7e51",

"created_at": 1715826337,

"level": "info",

"message": "Postprocessing started.",

"object": "fine_tuning.job.event",

"type": "message"

},

{

"id": "ftevent-ea10a008f1a045e9914de98b6b47514b",

"created_at": 1715826303,

"level": "info",

"message": "Job succeeded.",

"object": "fine_tuning.job.event",

"type": "message"

},

{

"id": "ftevent-008dc754dc9e61b008dc754dc9e61b00",

"created_at": 1715825614,

"level": "info",

"message": "Step 100: training loss=0.001647822093218565",

"object": "fine_tuning.job.event",

"type": "metrics",

"data": {

"step": 100,

"train_loss": 0.001647822093218565,

"train_mean_token_accuracy": 1,

"valid_loss": 1.5170825719833374,

"valid_mean_token_accuracy": 0.75,

"full_valid_loss": 1.7539110545870624,

"full_valid_mean_token_accuracy": 0.7215189873417721

}

},

{

"id": "ftevent-008dc754dc3f03a008dc754dc3f03a00",

"created_at": 1715825604,

"level": "info",

"message": "Step 90: training loss=0.00971441250294447",

"object": "fine_tuning.job.event",

"type": "metrics",

"data": {

"step": 90,

"train_loss": 0.00971441250294447,

"train_mean_token_accuracy": 1,

"valid_loss": 1.3702410459518433,

"valid_mean_token_accuracy": 0.75,

"full_valid_loss": 1.7371194453179082,

"full_valid_mean_token_accuracy": 0.7278481012658228

}

},

{

"id": "ftevent-008dc754dbdfa59008dc754dbdfa5900",

"created_at": 1715825594,

"level": "info",

"message": "Step 80: training loss=0.0032251903321594",

"object": "fine_tuning.job.event",

"type": "metrics",

"data": {

"step": 80,

"train_loss": 0.0032251903321594,

"train_mean_token_accuracy": 1,

"valid_loss": 1.4242165088653564,

"valid_mean_token_accuracy": 0.75,

"full_valid_loss": 1.6554046099698996,

"full_valid_mean_token_accuracy": 0.7278481012658228

}

},

{

"id": "ftevent-008dc754db80478008dc754db8047800",

"created_at": 1715825584,

"level": "info",

"message": "Step 70: training loss=0.07380199432373047",

"object": "fine_tuning.job.event",

"type": "metrics",

"data": {

"step": 70,

"train_loss": 0.07380199432373047,

"train_mean_token_accuracy": 1,

"valid_loss": 1.2011798620224,

"valid_mean_token_accuracy": 0.75,

"full_valid_loss": 1.508960385865803,

"full_valid_mean_token_accuracy": 0.740506329113924

}

},

{

"id": "ftevent-008dc754db20e97008dc754db20e9700",

"created_at": 1715825574,

"level": "info",

"message": "Step 60: training loss=0.245253324508667",

"object": "fine_tuning.job.event",

"type": "metrics",

"data": {

"step": 60,

"train_loss": 0.245253324508667,

"train_mean_token_accuracy": 0.875,

"valid_loss": 1.0585949420928955,

"valid_mean_token_accuracy": 0.75,

"full_valid_loss": 1.3787144045286541,

"full_valid_mean_token_accuracy": 0.7341772151898734

}

},

{

"id": "ftevent-008dc754dac18b6008dc754dac18b600",

"created_at": 1715825564,

"level": "info",

"message": "Step 50: training loss=0.1696014404296875",

"object": "fine_tuning.job.event",

"type": "metrics",

"data": {

"step": 50,

"train_loss": 0.1696014404296875,

"train_mean_token_accuracy": 0.8999999761581421,

"valid_loss": 0.8862184286117554,

"valid_mean_token_accuracy": 0.8125,

"full_valid_loss": 1.2814022257358213,

"full_valid_mean_token_accuracy": 0.7151898734177216

}

}

],

"has_more": true,

"object": "list"

}
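The event list mixes status messages with metrics, so it can be easier to read once the metrics are pulled into a small table. The sketch below assumes the events are available as plain dictionaries shaped like the JSON output above (for example via `response.model_dump()["data"]`, which is an assumption about how you convert the SDK response). `summarize_metric_events` is a hypothetical helper.

```python
def summarize_metric_events(events):
    """Return (step, train_loss, valid_loss) tuples, sorted by step.

    Only events of type "metrics" that carry a "data" payload are included.
    """
    rows = []
    for event in events:
        data = event.get("data")
        if event.get("type") == "metrics" and data:
            rows.append((data["step"], data["train_loss"], data["valid_loss"]))
    return sorted(rows)


# Two metric events and one message event, trimmed from the output above.
sample_events = [
    {"type": "message", "message": "Job succeeded."},
    {"type": "metrics", "data": {"step": 100, "train_loss": 0.001647822093218565, "valid_loss": 1.5170825719833374}},
    {"type": "metrics", "data": {"step": 50, "train_loss": 0.1696014404296875, "valid_loss": 0.8862184286117554}},
]

for step, train_loss, valid_loss in summarize_metric_events(sample_events):
    print(f"step {step}: train_loss={train_loss:.4f} valid_loss={valid_loss:.4f}")
```

In the sample output, training loss falls between step 50 and step 100 while validation loss rises, which is the kind of pattern that suggests overfitting on a dataset this small.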

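List checkpoints

API version: 2024-08-01-preview or later is required for this command.

When each training epoch completes, a checkpoint is generated. A checkpoint is a fully functional version of a model that can be both deployed and used as the target model for subsequent fine-tuning jobs. Checkpoints can be particularly useful because they can provide a snapshot of your model before overfitting has occurred. When a fine-tuning job completes, you have the three most recent versions of the model available to deploy. The final epoch is represented by your fine-tuned model, and the previous two epochs are available as checkpoints.

response = client.fine_tuning.jobs.checkpoints.list(job_id)
print(response.model_dump_json(indent=2))

Python 1.x output: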

{

"data": [

{

"id": "ftchkpt-148ab69f0a404cf9ab55a73d51b152de",

"created_at": 1715743077,

"fine_tuned_model_checkpoint": "gpt-4o-mini-2024-07-18.ft-0e208cf33a6a466994aff31a08aba678",

"fine_tuning_job_id": "ftjob-372c72db22c34e6f9ccb62c26ee0fbd9",

"metrics": {

"full_valid_loss": 1.8258173013035255,

"full_valid_mean_token_accuracy": 0.7151898734177216,

"step": 100.0,

"train_loss": 0.004080486483871937,

"train_mean_token_accuracy": 1.0,

"valid_loss": 1.5915886163711548,

"valid_mean_token_accuracy": 0.75

},

"object": "fine_tuning.job.checkpoint",

"step_number": 100

},

{

"id": "ftchkpt-e559c011ecc04fc68eaa339d8227d02d",

"created_at": 1715743013,

"fine_tuned_model_checkpoint": "gpt-4o-mini-2024-07-18.ft-0e208cf33a6a466994aff31a08aba678:ckpt-step-90",

"fine_tuning_job_id": "ftjob-372c72db22c34e6f9ccb62c26ee0fbd9",

"metrics": {

"full_valid_loss": 1.7958603267428241,

"full_valid_mean_token_accuracy": 0.7215189873417721,

"step": 90.0,

"train_loss": 0.0011079151881858706,

"train_mean_token_accuracy": 1.0,

"valid_loss": 1.6084896326065063,

"valid_mean_token_accuracy": 0.75

},

"object": "fine_tuning.job.checkpoint",

"step_number": 90

},

{

"id": "ftchkpt-8ae8beef3dcd4dfbbe9212e79bb53265",

"created_at": 1715742984,

"fine_tuned_model_checkpoint": "gpt-4o-mini-2024-07-18.ft-0e208cf33a6a466994aff31a08aba678:ckpt-step-80",

"fine_tuning_job_id": "ftjob-372c72db22c34e6f9ccb62c26ee0fbd9",

"metrics": {

"full_valid_loss": 1.6909511662736725,

"full_valid_mean_token_accuracy": 0.7088607594936709,

"step": 80.0,

"train_loss": 0.000667572021484375,

"train_mean_token_accuracy": 1.0,

"valid_loss": 1.4677599668502808,

"valid_mean_token_accuracy": 0.75

},

"object": "fine_tuning.job.checkpoint",

"step_number": 80

}

],

"has_more": false,

"object": "list"

}
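Since checkpoints exist to let you step back from an overfitted final model, one simple way to choose among them is to pick the one with the lowest validation loss. The following sketch does that over plain dictionaries shaped like the output above. `best_checkpoint` is a hypothetical helper, and using `full_valid_loss` as the selection metric is an assumption; you may prefer a different metric or your own evaluation.

```python
def best_checkpoint(checkpoints, metric="full_valid_loss"):
    """Return the checkpoint dict with the lowest value of the chosen metric."""
    if not checkpoints:
        raise ValueError("no checkpoints to choose from")
    return min(checkpoints, key=lambda checkpoint: checkpoint["metrics"][metric])


# Trimmed copies of the three checkpoints listed above.
checkpoints = [
    {"step_number": 100, "metrics": {"full_valid_loss": 1.8258173013035255}},
    {"step_number": 90, "metrics": {"full_valid_loss": 1.7958603267428241}},
    {"step_number": 80, "metrics": {"full_valid_loss": 1.6909511662736725}},
]

print(best_checkpoint(checkpoints)["step_number"])  # 80
```

With the sample values, the earliest of the three checkpoints has the lowest full validation loss, so it would be the candidate to deploy under this selection rule.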

Final training run results

To get the final results, run the following:

# Retrieve fine_tuned_model name

response = client.fine_tuning.jobs.retrieve(job_id)

print(response.model_dump_json(indent=2))

fine_tuned_model = response.fine_tuned_model

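Deploy fine-tuned model

Unlike the previous Python SDK commands in this tutorial, model deployment must be done using the REST API since the introduction of the quota feature. This requires separate authorization, a different API path, and a different API version.

Alternatively, you can deploy your fine-tuned model using any of the other common deployment methods, such as the Azure AI Foundry portal or Azure CLI.

| variable | Definition |
|---|---|
| token | There are multiple ways to generate an authorization token. The easiest method for initial testing is to launch the Cloud Shell from the Azure portal. Then run az account get-access-token. You can use this token as your temporary authorization token for API testing. We recommend storing this in a new environment variable. |
| subscription | The subscription ID for the associated Azure OpenAI resource. |
| resource_group | The resource group name for your Azure OpenAI resource. |
| resource_name | The Azure OpenAI resource name. |
| model_deployment_name | The custom name for your new fine-tuned model deployment. This is the name that is referenced in your code when making chat completion calls. |
| fine_tuned_model | Retrieve this value from your fine-tuning job results in the previous step. It looks like gpt-4o-mini-2024-07-18.ft-0e208cf33a6a466994aff31a08aba678. You need to add that value to the deploy_data json. |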

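Important

After you deploy a customized model, if at any time the deployment remains inactive for more than fifteen (15) days, the deployment is deleted. The deployment of a customized model is inactive if the model was deployed more than fifteen (15) days ago and no completions or chat completions calls were made to it during a continuous 15-day period.

The deletion of an inactive deployment doesn't delete or affect the underlying customized model, and the customized model can be redeployed at any time. As described in Azure OpenAI Service pricing, each customized (fine-tuned) model that's deployed incurs an hourly hosting cost regardless of whether completions or chat completions calls are being made to the model. To learn more about planning and managing costs with Azure OpenAI, refer to the guidance in Plan to manage costs for Azure OpenAI Service.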
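The inactivity rule above combines two conditions, which can be expressed as a small date calculation. The sketch below is a simplified reading of the rule: it only looks at the most recent 15 days rather than any continuous 15-day period, and the exact boundary handling is an assumption. `is_inactive` is a hypothetical helper, not a service API.

```python
from datetime import datetime, timedelta, timezone

INACTIVITY_WINDOW = timedelta(days=15)


def is_inactive(deployed_at, last_call_at, now):
    """Return True if a deployment looks inactive under the 15-day rule.

    deployed_at: when the deployment was created.
    last_call_at: time of the most recent completion call, or None if never called.
    now: the current time (passed in so the function is easy to test).
    """
    deployed_long_enough = now - deployed_at > INACTIVITY_WINDOW
    if last_call_at is None:
        return deployed_long_enough
    return deployed_long_enough and now - last_call_at >= INACTIVITY_WINDOW


now = datetime(2024, 9, 1, tzinfo=timezone.utc)
print(is_inactive(now - timedelta(days=20), now - timedelta(days=2), now))  # False
print(is_inactive(now - timedelta(days=20), None, now))  # True
```

A check like this could be used to remind yourself to either call or delete a test deployment before the service removes it.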

# Deploy fine-tuned model

import json
import os

import requests

token = os.getenv("TEMP_AUTH_TOKEN")
subscription = "<YOUR_SUBSCRIPTION_ID>"
resource_group = "<YOUR_RESOURCE_GROUP_NAME>"
resource_name = "<YOUR_AZURE_OPENAI_RESOURCE_NAME>"
model_deployment_name = "gpt-4o-mini-2024-07-18-ft"  # Custom deployment name you chose for your fine-tuning model

deploy_params = {'api-version': "2023-05-01"}
deploy_headers = {'Authorization': 'Bearer {}'.format(token), 'Content-Type': 'application/json'}

deploy_data = {
    "sku": {"name": "standard", "capacity": 1},
    "properties": {
        "model": {
            "format": "OpenAI",
            "name": "<YOUR_FINE_TUNED_MODEL>",  # retrieve this value from the previous call, it will look like gpt-4o-mini-2024-07-18.ft-0e208cf33a6a466994aff31a08aba678
            "version": "1"
        }
    }
}
deploy_data = json.dumps(deploy_data)

request_url = f'https://management.azure.com/subscriptions/{subscription}/resourceGroups/{resource_group}/providers/Microsoft.CognitiveServices/accounts/{resource_name}/deployments/{model_deployment_name}'

print('Creating a new deployment...')

r = requests.put(request_url, params=deploy_params, headers=deploy_headers, data=deploy_data)

print(r)
print(r.reason)
print(r.json())

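You can check on your deployment progress in the Azure AI Foundry portal.

It isn't uncommon for this process to take some time to complete when deploying fine-tuned models.

Use a deployed customized model

After your fine-tuned model is deployed, you can use it like any other deployed model, either in the Chat playground of the Azure AI Foundry portal or via the chat completion API. For example, you can send a chat completion call to your deployed model, as shown in the following Python example. You can continue to use the same parameters with your customized model, such as temperature and max_tokens, as you can with other deployed models.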

# Use the deployed customized model

import os
from openai import AzureOpenAI

client = AzureOpenAI(
    azure_endpoint = os.getenv("AZURE_OPENAI_ENDPOINT"),
    api_key = os.getenv("AZURE_OPENAI_API_KEY"),
    api_version = "2024-06-01"
)

response = client.chat.completions.create(
    model = "gpt-4o-mini-2024-07-18-ft",  # model = "Custom deployment name you chose for your fine-tuning model"
    messages = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Does Azure OpenAI support customer managed keys?"},
        {"role": "assistant", "content": "Yes, customer managed keys are supported by Azure OpenAI."},
        {"role": "user", "content": "Do other Azure AI services support this too?"}
    ]
)

print(response.choices[0].message.content)

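Delete deployment

Unlike other types of Azure OpenAI models, fine-tuned/customized models have an hourly hosting cost associated with them once they are deployed. We strongly recommend that you delete the model deployment once you're done with this tutorial and have tested a few chat completion calls against your fine-tuned model.

Deleting the deployment doesn't affect the model itself, so you can redeploy the fine-tuned model that you trained for this tutorial at any time.

You can delete the deployment in the Azure AI Foundry portal, via the REST API, with Azure CLI, or with other supported deployment methods.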
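As an illustration of the REST option, the sketch below builds a DELETE request against the same management path that the deployment step uses. It is a sketch under stated assumptions: `build_delete_request` is a hypothetical helper, and it assumes the api-version used for creating the deployment (2023-05-01) also applies to deletion. The request is only constructed, not sent, so you can inspect it first.

```python
import urllib.parse
import urllib.request


def build_delete_request(token, subscription, resource_group, resource_name,
                         deployment_name, api_version="2023-05-01"):
    """Build (but do not send) a DELETE request for a model deployment."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{subscription}"
        f"/resourceGroups/{resource_group}"
        "/providers/Microsoft.CognitiveServices"
        f"/accounts/{resource_name}"
        f"/deployments/{deployment_name}"
        "?" + urllib.parse.urlencode({"api-version": api_version})
    )
    headers = {"Authorization": f"Bearer {token}"}
    return urllib.request.Request(url, headers=headers, method="DELETE")


# Usage (uncomment to actually delete the deployment):
# import os
# request = build_delete_request(os.getenv("TEMP_AUTH_TOKEN"), "<YOUR_SUBSCRIPTION_ID>",
#                                "<YOUR_RESOURCE_GROUP_NAME>", "<YOUR_AZURE_OPENAI_RESOURCE_NAME>",
#                                "gpt-4o-mini-2024-07-18-ft")
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```

Afterwards, confirm in the Azure AI Foundry portal that the deployment is gone, because the hourly hosting cost continues for as long as the deployment exists.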

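Troubleshooting

How do I enable fine-tuning? Create a custom model is greyed out.

To successfully access fine-tuning, you need the Cognitive Services OpenAI Contributor role assigned. Even someone with high-level Service Administrator permissions still needs this role explicitly assigned to their account in order to access fine-tuning. For more information, review the role-based access control guidance.

Next steps

- Learn more about fine-tuning in Azure OpenAI.

- Learn more about the underlying models that power Azure OpenAI.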