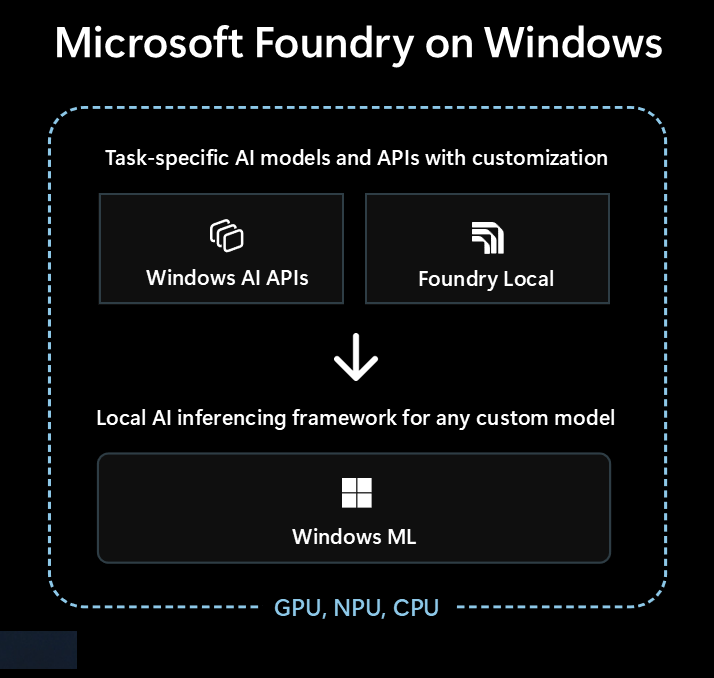

Microsoft Foundry on Windows is the premier solution for developers who want to integrate local AI capabilities into their Windows apps.

Microsoft Foundry on Windows provides developers with:

- Ready-to-use AI models and APIs via Windows AI APIs and Foundry Local

- An AI inferencing framework to run any model locally via Windows ML

Whether you're new to AI or an experienced machine learning (ML) expert, Microsoft Foundry on Windows has something for you.

Ready-to-use AI models and APIs

Your app can start using the following local AI models and APIs in less than an hour. Microsoft handles the distribution and runtime of the model files, and the models are shared across apps. Using these models and APIs takes only a handful of lines of code and requires no ML expertise.

| Model type or API | What it is | Options and supported devices |
|---|---|---|
| Large language models (LLMs) | Text generation models | Phi Silica via AI APIs (supports fine-tuning) or 20+ OSS LLM models via Foundry Local. See local LLMs to learn more. |
| Image description | Get a natural-language text description of an image | Image description via AI APIs (Copilot+ PCs) |
| Image foreground extractor | Segment the foreground of an image | Image foreground extraction via AI APIs (Copilot+ PCs) |
| Image generation | Generate images from text | Image generation via AI APIs (Copilot+ PCs) |
| Image object erase | Erase objects from images | Image object erase via AI APIs (Copilot+ PCs) |
| Image object extractor | Segment specific objects in an image | Image object extractor via AI APIs (Copilot+ PCs) |
| Image super resolution | Increase the resolution of images | Image super resolution via AI APIs (Copilot+ PCs) |
| Semantic search | Semantically search text and images | App content search via AI APIs (Copilot+ PCs) |
| Speech recognition | Convert speech to text | Whisper via Foundry Local, or speech recognition via the Windows SDK. See Speech recognition to learn more. |
| Text recognition (OCR) | Recognize text in images | OCR via AI APIs (Copilot+ PCs) |
| Video super resolution (VSR) | Increase the resolution of videos | Video super resolution via AI APIs (Copilot+ PCs) |
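To make the "handful of lines of code" claim concrete for the LLM row, here is a minimal sketch of preparing a chat request for a locally hosted model. It assumes the local service exposes an OpenAI-style chat completions route over HTTP, which this page does not state. The base URL, the `/v1/chat/completions` path, and the model name are all placeholders for illustration. The request is only built, not sent.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for a local endpoint."""
    payload = {
        "model": model,  # hypothetical model name supplied by the caller
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        # Assumed OpenAI-style route; check your local service's documentation.
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder address and model name, for illustration only.
    req = build_chat_request(
        "http://localhost:5273",
        "hypothetical-model",
        "Summarize this paragraph in one sentence.",
    )
    print(req.full_url)
    print(req.data.decode("utf-8"))
```

Passing the resulting object to `urllib.request.urlopen` would send it. The actual port and model identifier depend on how the local service is configured.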

Using other models with Windows ML

You can use a wide variety of models from Hugging Face or other sources, or even train your own models, and run them locally on PCs with Windows 10 and above using Windows ML (model compatibility and performance vary with device hardware).

To learn more, see find or train models for use with Windows ML.

Which option to start with

Follow this decision tree to select the best approach for your application and scenario:

1. Check whether the built-in Windows AI APIs cover your scenario and whether you are targeting Copilot+ PCs. This is the fastest path to market, with minimal development effort.

2. If Windows AI APIs don't have what you need, or you need to support Windows 10 and above, consider Foundry Local for LLM or voice-to-text scenarios.

3. If you need custom models, want to use existing models from Hugging Face or other sources, or have specific model requirements that the options above don't cover, Windows ML gives you the flexibility to find or train your own models (and supports Windows 10 and above).

Your app can also use a combination of all three of these technologies.
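The decision tree above can be sketched as a small function. This is an illustrative simplification, not an official selection tool: the `AppNeeds` type and its field names are hypothetical, and real projects often combine more than one technology.

```python
from dataclasses import dataclass


@dataclass
class AppNeeds:
    """Hypothetical summary of an app's requirements."""
    covered_by_windows_ai_apis: bool  # a built-in AI API matches the scenario
    targets_copilot_plus_pcs: bool    # the app only needs to run on Copilot+ PCs
    is_llm_or_speech_to_text: bool    # the scenario is an LLM or voice-to-text task


def recommend(needs: AppNeeds) -> str:
    """Walk the decision tree in the same order as the text."""
    # Step 1: built-in APIs cover the scenario and the target is Copilot+ PCs.
    if needs.covered_by_windows_ai_apis and needs.targets_copilot_plus_pcs:
        return "Windows AI APIs"
    # Step 2: broader device support, but still an LLM or voice-to-text task.
    if needs.is_llm_or_speech_to_text:
        return "Foundry Local"
    # Step 3: custom or externally sourced models.
    return "Windows ML"


if __name__ == "__main__":
    print(recommend(AppNeeds(True, True, False)))
    print(recommend(AppNeeds(True, False, True)))
    print(recommend(AppNeeds(False, False, False)))
```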

Technologies available for local AI

The following technologies are available in Microsoft Foundry on Windows:

| | Windows AI APIs | Foundry Local | Windows ML |
|---|---|---|---|
| What it is | Ready-to-use AI models and APIs across a variety of task types, optimized for Copilot+ PCs | Ready-to-use LLMs and voice-to-text models | ONNX Runtime framework for running models you find or train |
| Supported devices | Copilot+ PCs | PCs with Windows 10 and above, and cross-platform (performance varies with available hardware; not all models are available) | PCs with Windows 10 and above, and cross-platform via open-source ONNX Runtime (performance varies with available hardware) |
| Model types and APIs available | LLM, image description, image foreground extractor, image generation, image object erase, image object extractor, image super resolution, semantic search, text recognition (OCR), video super resolution | LLMs (multiple), speech to text. Browse the 20+ available models. | Find or train your own models |
| Model distribution | Hosted by Microsoft, acquired at runtime, and shared across apps | Hosted by Microsoft, acquired at runtime, and shared across apps | Distribution handled by your app (app libraries can share models across apps) |
| Learn more | Read the AI APIs docs | Read the Foundry Local docs | Read the Windows ML docs |

AI Toolkit for Visual Studio Code is a VS Code extension that lets you download and run AI models locally, including access to hardware acceleration for better performance and scale through DirectML. AI Toolkit can also help you with:

- Testing models in an intuitive playground or in your application with a REST API.

- Fine-tuning your AI model, locally or in the cloud (on a virtual machine), to create new skills, improve the reliability of responses, and set the tone and format of the response.

- Fine-tuning popular small language models (SLMs), such as Phi-3 and Mistral.

- Deploying your AI feature either to the cloud or with an application that runs on a device.

- Using hardware acceleration through DirectML for better performance with AI features. DirectML is a low-level API that lets Windows device hardware accelerate ML models using the device GPU or NPU. Pairing DirectML with ONNX Runtime is typically the most straightforward way for developers to bring hardware-accelerated AI to their users at scale. Learn more: DirectML Overview.

- Quantizing and validating a model for use on an NPU by using the model conversion capabilities.
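As an illustration of pairing DirectML with ONNX Runtime, the sketch below prefers the DirectML execution provider when it is available and falls back to the CPU otherwise. It assumes the `onnxruntime` Python package built with DirectML support is installed. `pick_providers` and `create_session` are hypothetical helper names, and the model path is supplied by the caller.

```python
from typing import List, Sequence

# Preference order: DirectML (GPU/NPU acceleration) first, then the CPU fallback.
PREFERRED_PROVIDERS = ("DmlExecutionProvider", "CPUExecutionProvider")


def pick_providers(available: Sequence[str]) -> List[str]:
    """Return the preferred providers that are actually available, in order."""
    chosen = [p for p in PREFERRED_PROVIDERS if p in available]
    # If nothing matched, fall back to the CPU provider name.
    return chosen or ["CPUExecutionProvider"]


def create_session(model_path: str):
    """Create an ONNX Runtime session using the best available provider."""
    # Imported lazily so the helper above works without onnxruntime installed.
    import onnxruntime as ort

    providers = pick_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)


if __name__ == "__main__":
    print(pick_providers(["DmlExecutionProvider", "CPUExecutionProvider"]))
    print(pick_providers(["CPUExecutionProvider"]))
```

Listing the CPU provider last keeps the app working on devices where DirectML acceleration is not available, at the cost of slower inference.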

Ideas for using local AI

Here are a few ways Windows apps can use local AI to enhance their functionality and user experience:

- Apps can use generative AI LLM models to understand complex topics in order to summarize, rewrite, report on, or expand them.

- Apps can use LLM models to transform free-form content into a structured format that your app can understand.

- Apps can use semantic search models that let users search for content by meaning and quickly find related content.

- Apps can use natural language processing models to reason over complex natural-language requests, then plan and execute actions that accomplish what the user asked for.

- Apps can use image manipulation models to intelligently modify images, erase or add subjects, upscale, or generate new content.

- Apps can use predictive diagnostic models to identify and predict issues, and then either guide the user or act on their behalf.
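The second idea in the list, turning free-form content into a structured format, can be sketched without committing to any particular model API. The example below only builds a prompt and parses a reply. The function names, the prompt wording, and the sample reply are hypothetical, and the call to the model itself is left out.

```python
import json
from typing import Dict, List, Optional


def build_extraction_prompt(text: str, fields: List[str]) -> str:
    """Ask the model for a JSON object containing exactly the given keys."""
    keys = ", ".join(f'"{f}"' for f in fields)
    return (
        "Extract the following fields from the text and reply with a single "
        f"JSON object using exactly these keys: {keys}. "
        "Use null for anything not mentioned.\n\nText:\n" + text
    )


def parse_reply(reply: str, fields: List[str]) -> Optional[Dict[str, object]]:
    """Pull the JSON object out of a model reply; return None if it is unusable."""
    # Models often wrap JSON in extra prose, so take the outermost braces.
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        data = json.loads(reply[start:end + 1])
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    # Keep only the requested keys; missing ones become None.
    return {f: data.get(f) for f in fields}


if __name__ == "__main__":
    fields = ["name", "city"]
    print(build_extraction_prompt("Ada moved to London last year.", fields))
    # A made-up reply standing in for real model output.
    print(parse_reply('Sure! {"name": "Ada", "city": "London"}', fields))
```

Validating the reply before using it matters because a language model is not guaranteed to return well-formed JSON.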

Using cloud AI models

If local AI features aren't the right path for you, cloud AI models and resources may be the solution.

Using Responsible AI practices

Whenever you incorporate AI features into your Windows app, we strongly recommend following the guidance on developing responsible generative AI applications and features on Windows.