AI app templates

This section of the documentation introduces you to the AI app templates and related articles that use these templates to demonstrate how to perform key developer tasks. AI app templates provide you with well-maintained, easy-to-deploy reference implementations that help ensure a high-quality starting point for your AI apps.

There are two categories of AI app templates: building blocks and end-to-end solutions. The following sections introduce some of the key templates in each category for .NET, Python, Java, and JavaScript. To browse a more comprehensive list that includes these and other templates, see the AI App Template gallery.

Building blocks

Building blocks are smaller-scale samples that focus on specific scenarios and tasks. Most building blocks demonstrate functionality that builds on the end-to-end solution for a chat app that uses your own data.

.NET

| Building block | Description |
|---|---|
| Load balance with Azure Container Apps | Learn how to add load balancing to your application to extend the chat app beyond the Azure OpenAI token and model quota limits. This approach uses Azure Container Apps to create three Azure OpenAI endpoints, as well as a primary container to direct incoming traffic to one of the three endpoints. |
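To illustrate the idea behind the load-balancing building blocks, here is a minimal Python sketch of a front end that spreads requests across three backend endpoints in round-robin order and skips any endpoint that is temporarily throttled (for example, after an HTTP 429 response). The `Backend` and `RoundRobinBalancer` classes and the endpoint URLs are hypothetical; the templates themselves implement routing in the primary container or in API Management, not with this code.

```python
from __future__ import annotations

import itertools
import time
from dataclasses import dataclass


@dataclass
class Backend:
    """A hypothetical Azure OpenAI endpoint tracked by the load balancer."""
    url: str
    throttled_until: float = 0.0  # epoch seconds; 0 means available

    def is_available(self, now: float) -> bool:
        return now >= self.throttled_until


class RoundRobinBalancer:
    """Cycles through backends, skipping any that are currently throttled."""

    def __init__(self, backends: list[Backend]) -> None:
        self._backends = backends
        self._cycle = itertools.cycle(backends)

    def pick(self, now: float | None = None) -> Backend:
        now = time.time() if now is None else now
        # Try each backend at most once per call.
        for _ in range(len(self._backends)):
            backend = next(self._cycle)
            if backend.is_available(now):
                return backend
        raise RuntimeError("All backends are throttled; retry later.")

    def mark_throttled(self, backend: Backend, retry_after_s: float, now: float | None = None) -> None:
        # Call this when a backend answers HTTP 429 with a Retry-After value.
        now = time.time() if now is None else now
        backend.throttled_until = now + retry_after_s


if __name__ == "__main__":
    backends = [Backend(f"https://openai-{i}.example.com") for i in range(1, 4)]
    lb = RoundRobinBalancer(backends)
    print([lb.pick(now=0).url for _ in range(4)])
```

Round-robin is the simplest policy; skipping throttled backends is what lets the combined system absorb more traffic than one endpoint's quota allows.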

Python

| Building block | Description |
|---|---|
| Configure document security for the chat app | When you build a chat application using the RAG pattern with your own data, make sure that each user receives an answer based on their permissions. An authorized user should have access to answers contained within the documents of the chat app. An unauthorized user shouldn't have access to answers from secured documents they don't have authorization to see. |
| Evaluate chat app answers | Learn how to evaluate a chat app's answers against a set of correct or ideal answers (known as ground truth). Whenever you change your chat application in a way that affects the answers, run an evaluation to compare the changes. This demo application offers tools you can use today to make it easier to run evaluations. |
| Load balance with Azure Container Apps | Learn how to add load balancing to your application to extend the chat app beyond the Azure OpenAI token and model quota limits. This approach uses Azure Container Apps to create three Azure OpenAI endpoints, as well as a primary container to direct incoming traffic to one of the three endpoints. |
| Load balance with API Management | Learn how to add load balancing to your application to extend the chat app beyond the Azure OpenAI token and model quota limits. This approach uses Azure API Management to create three Azure OpenAI endpoints, as well as a primary container to direct incoming traffic to one of the three endpoints. |
| Load test the Python chat app with Locust | Learn how to load test a Python chat application that uses the RAG pattern with Locust, a popular open-source load testing tool. The primary objective of load testing is to ensure that the expected load on your chat application doesn't exceed the current Azure OpenAI tokens-per-minute (TPM) quota. By simulating user behavior under heavy load, you can identify potential bottlenecks and scalability issues in your application. |
| Secure your AI App with keyless authentication | Learn how to secure your Python Azure OpenAI chat application with keyless authentication. Application requests to most Azure services should be authenticated with keyless (passwordless) connections. Keyless authentication offers improved management and security benefits over account keys because there's no key (or connection string) to store. |
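For the keyless authentication building block, the following Python sketch shows the general shape of a keyless Azure OpenAI client: instead of an API key, it obtains Microsoft Entra ID tokens through `DefaultAzureCredential` from the `azure-identity` package and passes a token provider to the `AzureOpenAI` client from the `openai` package. The helper name `create_keyless_client`, the endpoint you pass in, and the default `api_version` value are assumptions to adapt to your own resource; the imports sit inside the function so the module loads even where those packages aren't installed.

```python
# Scope used to request Microsoft Entra ID tokens for Azure OpenAI.
COGNITIVE_SERVICES_SCOPE = "https://cognitiveservices.azure.com/.default"


def create_keyless_client(endpoint: str, api_version: str = "2024-06-01"):
    """Return an AzureOpenAI client that authenticates with Entra ID tokens, not an API key.

    Requires the `azure-identity` and `openai` packages and a signed-in identity
    (for example, `az login` locally or a managed identity in Azure) that holds a
    role such as Cognitive Services OpenAI User on the Azure OpenAI resource.
    """
    # Imported lazily so this module can be imported without the Azure SDKs installed.
    from azure.identity import DefaultAzureCredential, get_bearer_token_provider
    from openai import AzureOpenAI

    token_provider = get_bearer_token_provider(
        DefaultAzureCredential(), COGNITIVE_SERVICES_SCOPE
    )
    # No api_key argument: the client calls token_provider() for a bearer token.
    return AzureOpenAI(
        azure_endpoint=endpoint,
        azure_ad_token_provider=token_provider,
        api_version=api_version,
    )
```

Usage would look like `client = create_keyless_client("https://<your-resource>.openai.azure.com")`. Because the credential refreshes tokens on demand, there is no secret to rotate or store in configuration.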

Java

| Building block | Description |
|---|---|
| Load balance with Azure Container Apps | Learn how to add load balancing to your application to extend the chat app beyond the Azure OpenAI token and model quota limits. This approach uses Azure Container Apps to create three Azure OpenAI endpoints, as well as a primary container to direct incoming traffic to one of the three endpoints. |
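Load balancing and load testing both come down to simple capacity arithmetic: expected requests per minute multiplied by tokens per request must stay under the combined tokens-per-minute (TPM) quota of the endpoints you route to. This Python sketch performs that check. `LoadProfile` and both functions are hypothetical helpers, and every number in the example is a made-up input, not an actual Azure quota or measurement.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class LoadProfile:
    """Hypothetical expected load on the chat app."""
    concurrent_users: int
    requests_per_user_per_minute: float
    tokens_per_request: int  # prompt + completion tokens, averaged


def required_tpm(profile: LoadProfile) -> float:
    """Tokens per minute the app consumes under this load."""
    return (
        profile.concurrent_users
        * profile.requests_per_user_per_minute
        * profile.tokens_per_request
    )


def endpoints_needed(profile: LoadProfile, tpm_quota_per_endpoint: int) -> int:
    """Smallest number of equally sized endpoints whose combined quota covers the load."""
    return max(1, math.ceil(required_tpm(profile) / tpm_quota_per_endpoint))


if __name__ == "__main__":
    profile = LoadProfile(concurrent_users=50, requests_per_user_per_minute=2, tokens_per_request=1500)
    print(required_tpm(profile))  # 50 users x 2 requests/min x 1,500 tokens
    print(endpoints_needed(profile, 60_000))  # endpoints at a hypothetical 60,000 TPM each
```

This average-based estimate ignores bursts and per-endpoint request-rate limits, which is why a load test that simulates real user behavior is still worth running.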

JavaScript

| Building block | Description |
|---|---|
| Evaluate chat app answers | Learn how to evaluate a chat app's answers against a set of correct or ideal answers (known as ground truth). Whenever you change your chat application in a way that affects the answers, run an evaluation to compare the changes. This demo application offers tools you can use today to make it easier to run evaluations. |
| Load balance with Azure Container Apps | Learn how to add load balancing to your application to extend the chat app beyond the Azure OpenAI token and model quota limits. This approach uses Azure Container Apps to create three Azure OpenAI endpoints, as well as a primary container to direct incoming traffic to one of the three endpoints. |
| Load balance with API Management | Learn how to add load balancing to your application to extend the chat app beyond the Azure OpenAI token and model quota limits. This approach uses Azure API Management to create three Azure OpenAI endpoints, as well as a primary container to direct incoming traffic to one of the three endpoints. |
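The evaluation building block compares a chat app's answers to ground truth, and its tooling relies mainly on AI-assisted (GPT-based) metrics. As a simpler stand-in that shows the same compare-before-and-after workflow, this Python sketch scores answers with token-overlap F1, a purely lexical metric. Treat it as an illustration of the evaluation loop, not as the template's method; `token_f1` and `mean_score` are hypothetical helpers.

```python
from __future__ import annotations

import re
from collections import Counter


def _tokens(text: str) -> list[str]:
    # Lowercase and keep alphanumeric word tokens only.
    return re.findall(r"[a-z0-9]+", text.lower())


def token_f1(answer: str, ground_truth: str) -> float:
    """Harmonic mean of token precision and recall against the ground truth."""
    pred, gold = _tokens(answer), _tokens(ground_truth)
    if not pred or not gold:
        return float(pred == gold)  # 1.0 only if both are empty
    overlap = sum((Counter(pred) & Counter(gold)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


def mean_score(answers: list[str], ground_truths: list[str]) -> float:
    """Average F1 over a test set; compare this number before and after a change."""
    if not answers or len(answers) != len(ground_truths):
        raise ValueError("answers and ground_truths must be non-empty and the same length")
    scores = [token_f1(a, g) for a, g in zip(answers, ground_truths)]
    return sum(scores) / len(scores)
```

Run `mean_score` on the same question set before and after a prompt or retrieval change; a drop in the average flags a regression worth inspecting.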

End-to-end solutions

End-to-end solutions are comprehensive reference samples, including documentation, source code, and deployment, that you can take and extend for your own purposes.

.NET

Chat with your data using Azure OpenAI and Azure AI Search with .NET

This template is a complete end-to-end solution demonstrating the Retrieval-Augmented Generation (RAG) pattern running in Azure. It uses Azure AI Search for retrieval and Azure OpenAI large language models to power ChatGPT-style and Q&A experiences.

To get started with this template, see Get started with the chat using your own data sample for .NET. To access the source code and read in-depth details about the template, see the azure-search-openai-demo-csharp GitHub repo.

This template demonstrates the use of these features.

| Azure hosting solution | Technologies | AI models |
|---|---|---|
| Azure Container Apps, Azure Functions | Azure OpenAI, Azure Computer Vision, Azure Form Recognizer, Azure AI Search, Azure Storage | GPT 3.5 Turbo, GPT 4.0 |

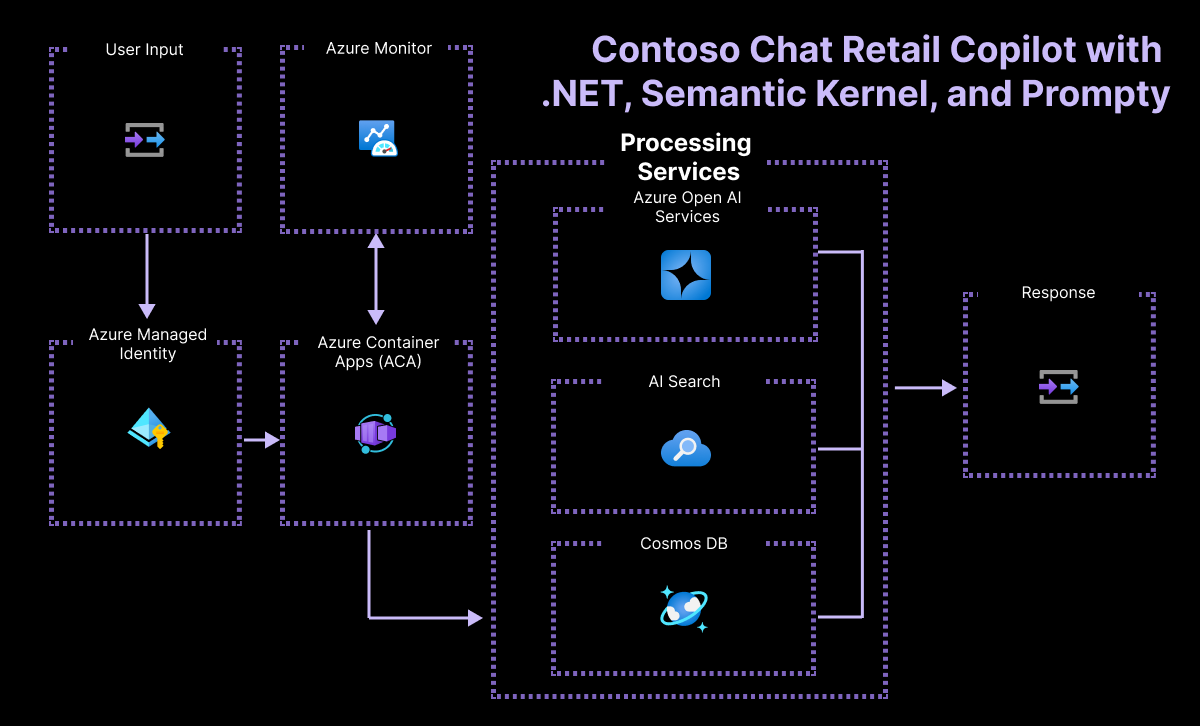

Contoso chat retail Copilot with .NET and Semantic Kernel

This template implements Contoso Outdoors, a conceptual store specializing in outdoor gear for hiking and camping enthusiasts. This virtual store enhances customer engagement and sales support through an intelligent chat agent. This agent is powered by the Retrieval Augmented Generation (RAG) pattern within the Microsoft Azure AI Stack, enriched with Semantic Kernel and Prompty support.

To access the source code and read in-depth details about the template, see the contoso-chat-csharp-prompty GitHub repo.

This template demonstrates the use of these features.

| Azure hosting solution | Technologies | AI models |
|---|---|---|
| Azure Container Apps | Azure OpenAI, Microsoft Entra ID, Azure Managed Identity, Azure Monitor, Azure AI Search, Azure AI Foundry, Azure SQL, Azure Storage | GPT 3.5 Turbo, GPT 4.0 |

Process automation with speech to text and summarization with .NET and GPT 3.5 Turbo

This template is a process automation solution that receives issues reported by field and shop floor workers at Contoso Manufacturing, a company that makes car batteries. Workers share the issues either live through microphone input or as prerecorded audio files. The solution converts the audio input from speech to text and then uses an LLM and Prompty or Promptflow to summarize the issue and return the results in a format specified by the solution.
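The solution returns its summary "in a format specified by the solution". One common way to enforce such a format, sketched here in Python, is to ask the LLM for JSON and validate it before accepting it. The `IssueReport` fields (`title`, `summary`, `location`, `severity`) and the allowed severity values are hypothetical, not the template's actual schema.

```python
from __future__ import annotations

import json
from dataclasses import dataclass

ALLOWED_SEVERITIES = {"low", "medium", "high"}  # hypothetical values
REQUIRED_FIELDS = ("title", "summary", "location", "severity")


@dataclass
class IssueReport:
    """Hypothetical structured result of summarizing a worker's transcribed report."""
    title: str
    summary: str
    location: str
    severity: str


def parse_issue_report(llm_output: str) -> IssueReport:
    """Validate the LLM's JSON output; raise ValueError if it doesn't match the schema."""
    try:
        data = json.loads(llm_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"LLM output is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object")
    missing = [name for name in REQUIRED_FIELDS if not data.get(name)]
    if missing:
        raise ValueError(f"Missing fields: {', '.join(missing)}")
    severity = str(data["severity"]).lower()
    if severity not in ALLOWED_SEVERITIES:
        raise ValueError(f"Unexpected severity: {data['severity']!r}")
    return IssueReport(
        title=str(data["title"]),
        summary=str(data["summary"]),
        location=str(data["location"]),
        severity=severity,
    )
```

When validation fails, an application can retry the LLM call with the error message appended to the prompt instead of passing malformed output downstream.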

To access the source code and read in-depth details about the template, see the summarization-openai-csharp-prompty GitHub repo.

This template demonstrates the use of these features.

| Azure hosting solution | Technologies | AI models |
|---|---|---|
| Azure Container Apps | Speech to Text, Summarization, Azure OpenAI | GPT 3.5 Turbo |

Python

Chat with your data using Azure OpenAI and Azure AI Search with Python

This template is a complete end-to-end solution demonstrating the Retrieval-Augmented Generation (RAG) pattern running in Azure. It uses Azure AI Search for retrieval and Azure OpenAI large language models to power ChatGPT-style and Question and Answer (Q&A) experiences.
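To make the Retrieval-Augmented Generation (RAG) pattern concrete, here is a minimal Python sketch of its two steps: retrieve the passages most relevant to a question, then build chat messages that ground the model's answer in those passages with citations. A toy keyword-overlap retriever stands in for Azure AI Search, and the actual Azure OpenAI chat call is left out; the `Passage` type, helper names, and prompt wording are hypothetical.

```python
from __future__ import annotations

import re
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # for example, a file name used as the citation
    text: str


def _terms(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: list[Passage], top_k: int = 2) -> list[Passage]:
    """Toy retriever: rank passages by how many question terms they share."""
    q = _terms(question)
    scored = [(len(q & _terms(p.text)), p) for p in passages]
    scored = [item for item in scored if item[0] > 0]  # drop passages with no overlap
    scored.sort(key=lambda item: item[0], reverse=True)
    return [p for _, p in scored[:top_k]]


def build_messages(question: str, sources: list[Passage]) -> list[dict[str, str]]:
    """Chat messages that ground the answer in the retrieved sources."""
    context = "\n".join(f"[{p.source}]: {p.text}" for p in sources)
    system = (
        "Answer using only the sources below. Cite each fact as [source]. "
        "If the sources don't contain the answer, say you don't know.\n\n"
        f"Sources:\n{context}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]
```

In the template itself, retrieval is an Azure AI Search query (which can combine keyword and vector search), and the resulting messages are sent to an Azure OpenAI chat model.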

To get started with this template, see Get started with the chat using your own data sample for Python. To access the source code and read in-depth details about the template, see the azure-search-openai-demo GitHub repo.

This template demonstrates the use of these features.

| Azure hosting solution | Technologies | AI models |
|---|---|---|
| Azure Container Apps | Azure OpenAI, Azure AI Search, Azure Blob Storage, Azure Monitor, Azure Document Intelligence | GPT 3.5 Turbo, GPT 4, GPT 4o, GPT 4o-mini |

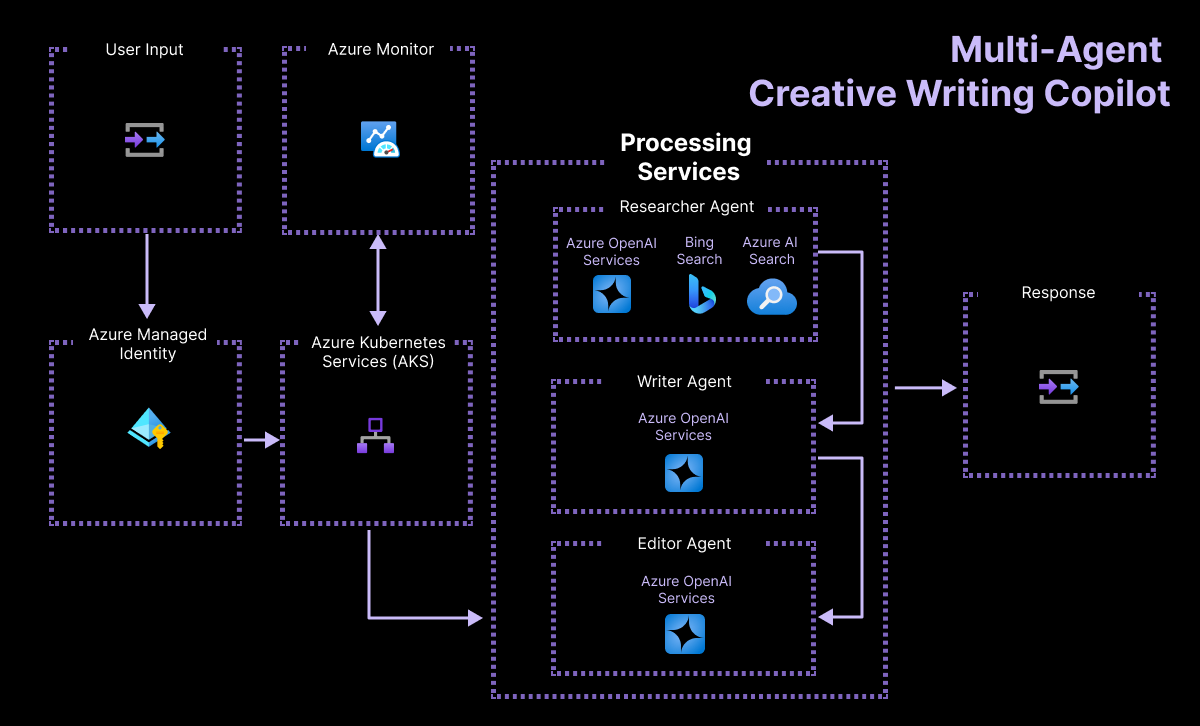

Multi-Modal Creative Writing Copilot with DALL-E

This template is a creative writing multi-agent solution to help users write articles. It demonstrates how to create and work with AI agents driven by Azure OpenAI.

It includes:

- A Flask app that takes an article and instruction from a user.

- A research agent that uses the Bing Search API to research the article.

- A product agent that uses Azure AI Search to do a semantic similarity search for related products from a vector store.

- A writer agent to combine the research and product information into a helpful article.

- An editor agent to refine the article presented to the user.
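The list above describes a sequential hand-off between agents. A minimal Python sketch of that orchestration follows, with each agent reduced to a plain function. In the real template each step calls Azure OpenAI (and Bing Search or Azure AI Search), so the function bodies here are hypothetical placeholders that only show how data flows from research and product lookup to the writer and then the editor.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ArticleRequest:
    topic: str
    instructions: str


@dataclass
class Draft:
    text: str
    notes: list[str] = field(default_factory=list)


# Hypothetical stand-ins for LLM-backed agents.
def research_agent(req: ArticleRequest) -> list[str]:
    return [f"Finding about {req.topic}"]  # real agent: Bing Search + LLM


def product_agent(req: ArticleRequest) -> list[str]:
    return [f"Product related to {req.topic}"]  # real agent: Azure AI Search vector query


def writer_agent(req: ArticleRequest, research: list[str], products: list[str]) -> Draft:
    body = " ".join(research + products)
    return Draft(text=f"{req.topic}: {body}")


def editor_agent(draft: Draft, instructions: str) -> Draft:
    # A real editor agent would ask the LLM to revise; this stub only records the review.
    return Draft(text=draft.text, notes=draft.notes + [f"Reviewed against: {instructions}"])


def create_article(req: ArticleRequest) -> Draft:
    """Run the agents in order: research and product lookup, then write, then edit."""
    research = research_agent(req)
    products = product_agent(req)
    draft = writer_agent(req, research, products)
    return editor_agent(draft, req.instructions)
```

Keeping each agent behind a small function boundary makes it easy to test the pipeline with stubs like these before wiring in real model calls.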

To access the source code and read in-depth details about the template, see the agent-openai-python-prompty GitHub repo.

This template demonstrates the use of these features.

| Azure hosting solution | Technologies | AI models |
|---|---|---|
| Azure Container Registry, Azure Kubernetes | Azure OpenAI, Bing Search, Azure Managed Identity, Azure Monitor, Azure AI Search, Azure AI Foundry | GPT 3.5 Turbo, GPT 4.0, DALL-E |

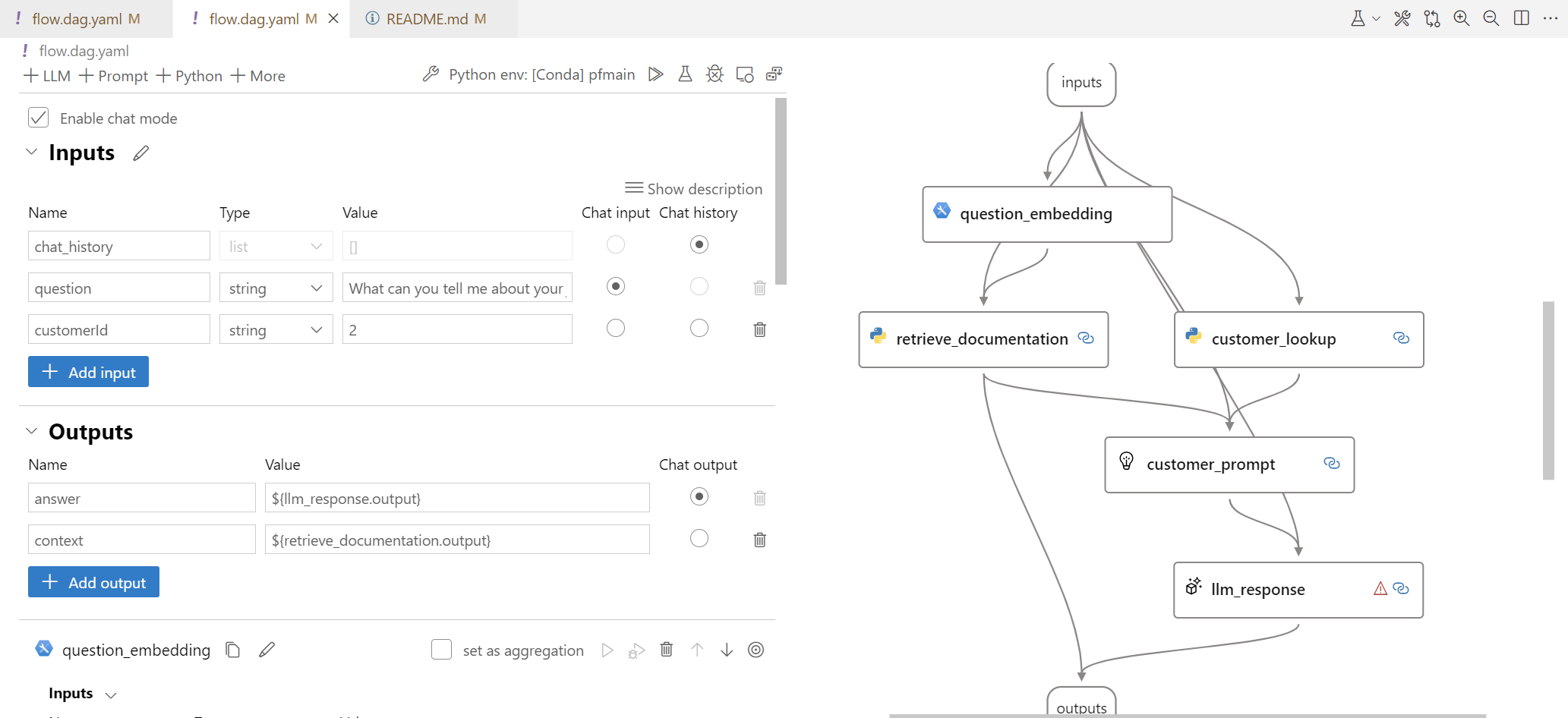

Contoso Chat Retail Copilot with Azure AI Foundry

This template implements Contoso Chat, a retail copilot solution for Contoso Outdoor that uses a retrieval-augmented generation (RAG) design pattern to ground chatbot responses in the retailer's product and customer data. Customers can ask questions on the website in natural language and get relevant responses, with potential recommendations based on their purchase history. The solution applies responsible AI practices to help ensure response quality and safety.

This template illustrates the end-to-end workflow (GenAIOps) for building a RAG-based copilot code-first with Azure AI and Prompty. By exploring and deploying this sample, you learn how to:

- Ideate and iterate rapidly on app prototypes using Prompty

- Deploy and use Azure OpenAI models for chat, embeddings, and evaluation

- Use Azure AI Search (indexes) and Azure Cosmos DB (databases) for your data

- Evaluate chat responses for quality using AI-assisted evaluation flows

- Host the application as a FastAPI endpoint deployed to Azure Container Apps

- Provision and deploy the solution using the Azure Developer CLI

- Support Responsible AI practices with content safety and assessments

To access the source code and read in-depth details about the template, see the contoso-chat GitHub repo.

This template demonstrates the use of these features.

| Azure hosting solution | Technologies | AI models |
|---|---|---|
| Azure Container Apps | Azure OpenAI, Azure AI Search, Azure AI Foundry, Prompty, Azure Cosmos DB | GPT 3.5 Turbo, GPT 4.0, Managed Integration Runtime (MIR) |

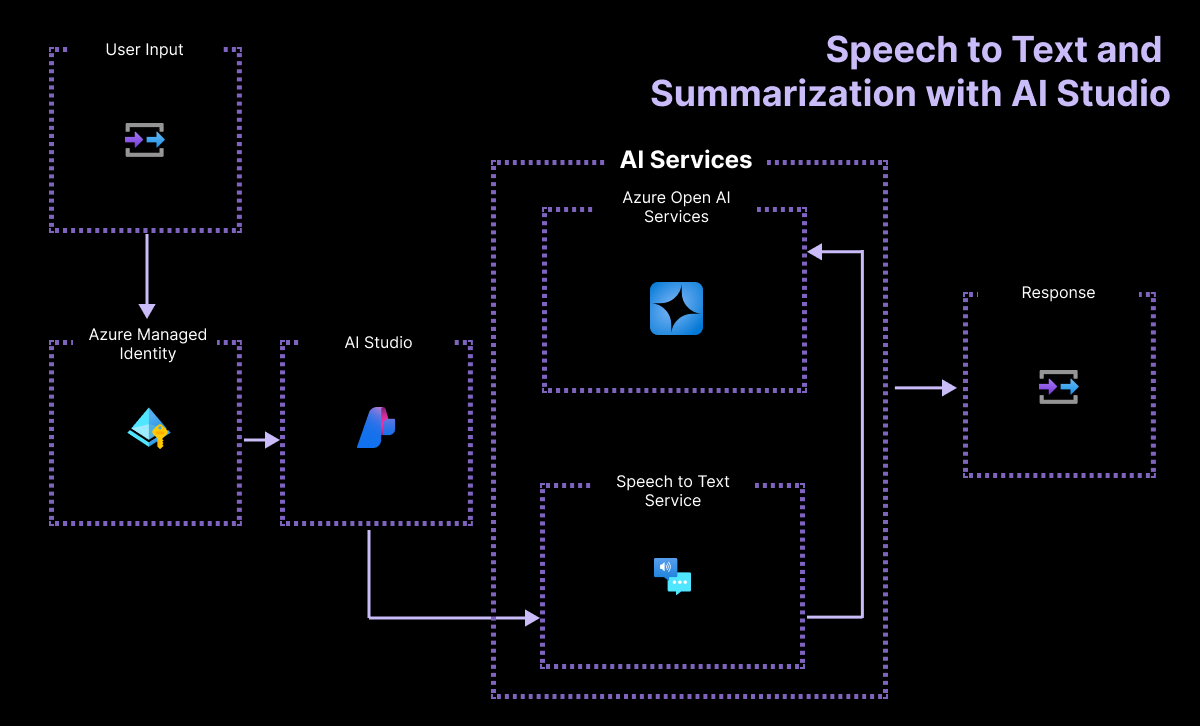

Process automation with speech to text and summarization with Azure AI Foundry

This template creates a web-based app that allows workers at a company called Contoso Manufacturing to report issues via text or speech. Audio input is transcribed to text and then summarized to highlight important information, and the report is sent to the appropriate department.

To access the source code and read in-depth details about the template, see the summarization-openai-python-promptflow GitHub repo.

This template demonstrates the use of these features.

| Azure hosting solution | Technologies | AI models |
|---|---|---|
| Azure Container Apps | Azure AI Foundry, Speech to Text Service, Prompty, Managed Integration Runtime (MIR) | GPT 3.5 Turbo |

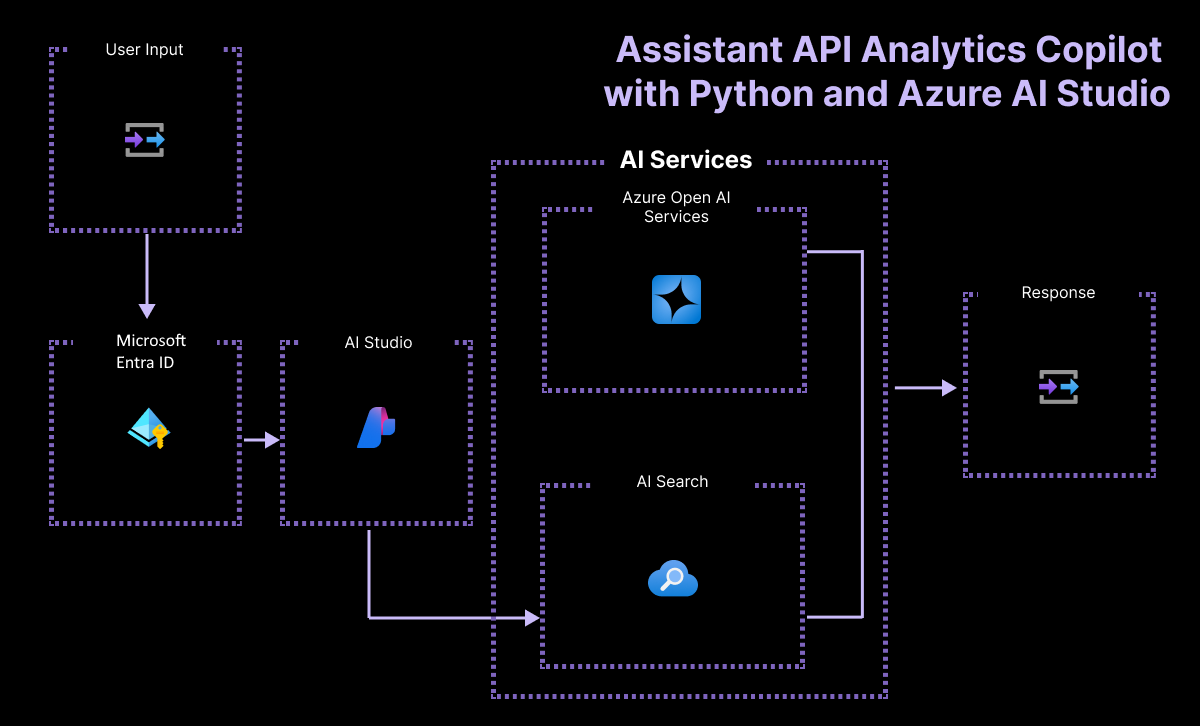

Assistant API Analytics Copilot with Python and Azure AI Foundry

This template is an Assistant API-based copilot that lets you chat with tabular data and perform analytics in natural language.

To access the source code and read in-depth details about the template, see the assistant-data-openai-python-promptflow GitHub repo.

This template demonstrates the use of these features.

| Azure hosting solution | Technologies | AI models |
|---|---|---|
| Machine Learning service | Azure AI Search, Azure AI Foundry, Managed Integration Runtime (MIR), Azure OpenAI | GPT 3.5 Turbo, GPT 4 |

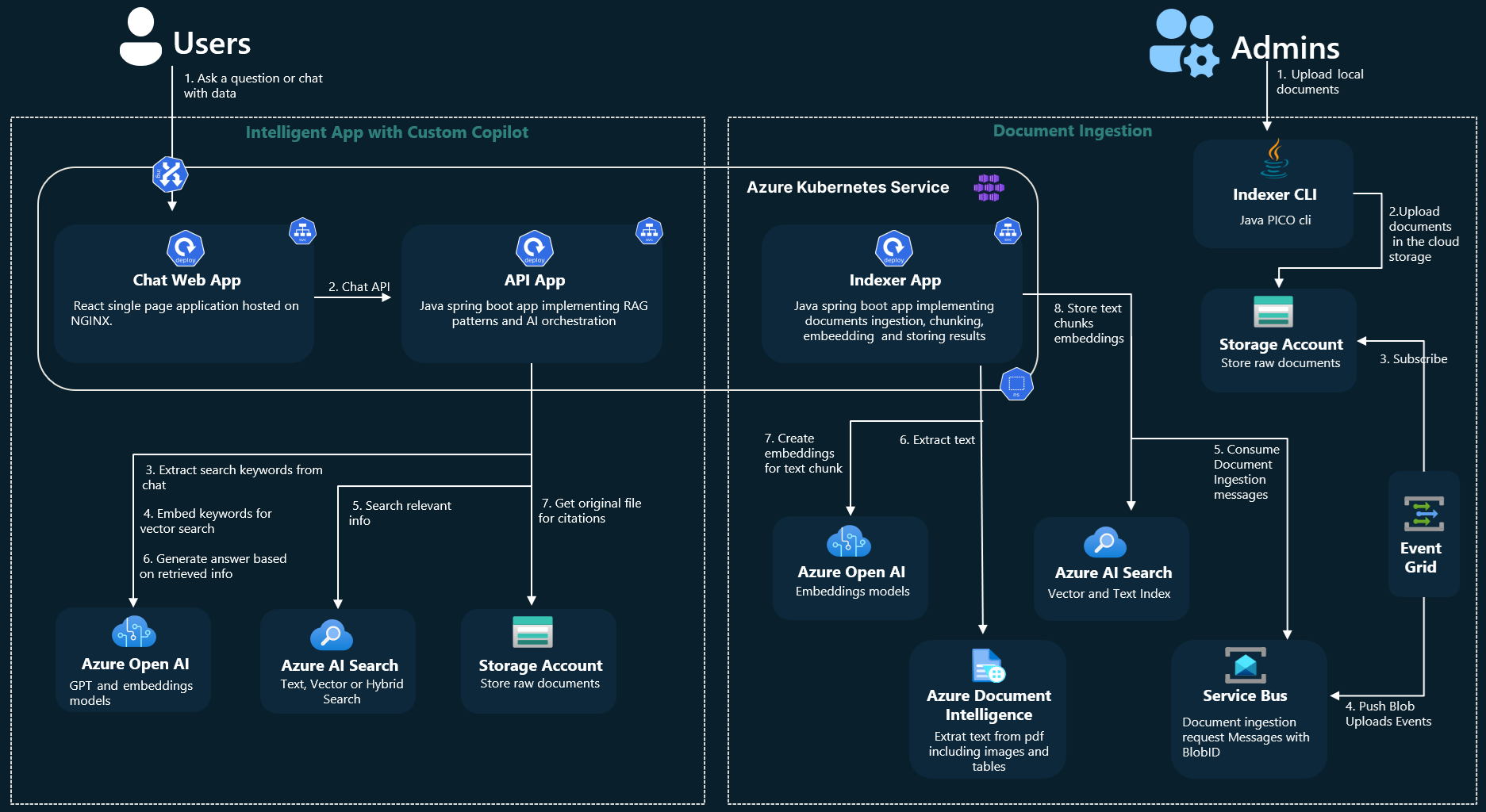

Java

Chat with your data using Azure OpenAI and Azure AI Search with Java

This template is a complete end-to-end solution that demonstrates the Retrieval-Augmented Generation (RAG) pattern running in Azure. It uses Azure AI Search for retrieval and Azure OpenAI large language models to power ChatGPT-style and Q&A experiences.

To get started with this template, see Get started with the chat using your own data sample for Java. To access the source code and read in-depth details about the template, see the azure-search-openai-demo-java GitHub repo.

This template demonstrates the use of these features.

| Azure hosting solution | Technologies | AI models |
|---|---|---|
| Azure App Service, Azure Container Apps, Azure Kubernetes Service | Azure OpenAI, Azure AI Search, Azure Document Intelligence, Azure Storage, Azure App Insights, Azure Service Bus, Azure Event Grid | gpt-35-turbo |

Multi Agents Banking Assistant with Java and Semantic Kernel

This project is designed as a Proof of Concept (PoC) to explore generative AI in the context of multi-agent architectures. Using Java and the Microsoft Semantic Kernel AI orchestration framework, we aim to build a chat web app that demonstrates the feasibility and reliability of using generative AI agents to transform the user experience from web clicks to natural language conversations, while maximizing reuse of the existing workload data and APIs.

The core use case revolves around a banking personal assistant designed to revolutionize the way users interact with their bank account information, transaction history, and payment functionalities. Utilizing the power of generative AI within a multi-agent architecture, this assistant aims to provide a seamless, conversational interface through which users can effortlessly access and manage their financial data.

Sample invoices are included in the data folder to make it easy to explore the payments feature. The payment agent, equipped with optical character recognition (OCR) tools (Azure Document Intelligence), leads the conversation with the user to extract the invoice data and initiate the payment process. Other fake account data, such as transactions, payment methods, and account balance, is also available for the user to query. All data and services are exposed as external REST APIs and consumed by the agents to provide the user with the requested information.
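The payment agent keeps the conversation going until it has everything needed to pay an invoice. The following Python sketch isolates that slot-filling step: it merges fields extracted by OCR with fields the user supplied, and reports which required fields are still missing so the agent knows what to ask next. The field names and helper functions are hypothetical, and the actual template is written in Java with Semantic Kernel; this only illustrates the logic.

```python
from __future__ import annotations

REQUIRED_FIELDS = ("payee", "amount", "due_date", "payment_method")  # hypothetical


def merge_invoice_fields(ocr_fields: dict[str, str], user_fields: dict[str, str]) -> dict[str, str]:
    """Combine OCR output with user answers; non-empty user answers win on conflicts."""
    merged = {k: v for k, v in ocr_fields.items() if v}
    merged.update({k: v for k, v in user_fields.items() if v})
    return merged


def missing_fields(fields: dict[str, str]) -> list[str]:
    """Required fields that are still empty, in a stable order."""
    return [name for name in REQUIRED_FIELDS if not fields.get(name)]


def next_prompt(fields: dict[str, str]) -> str:
    """What the agent says next: ask for missing data or confirm the payment."""
    missing = missing_fields(fields)
    if missing:
        return "Please provide: " + ", ".join(missing)
    return (
        f"Pay {fields['amount']} to {fields['payee']} by {fields['due_date']} "
        f"using {fields['payment_method']}?"
    )
```

Asking the user to confirm before initiating payment keeps a human in the loop for the one step that moves money.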

To access the source code and read in-depth details about the template, see the agent-openai-java-banking-assistant GitHub repo.

This template demonstrates the use of these features.

| Azure hosting solution | Technologies | AI models |
|---|---|---|
| Azure Container Apps | Azure OpenAI, Azure Document Intelligence, Azure Storage, Azure Monitor | gpt-4o, gpt-4o-mini |

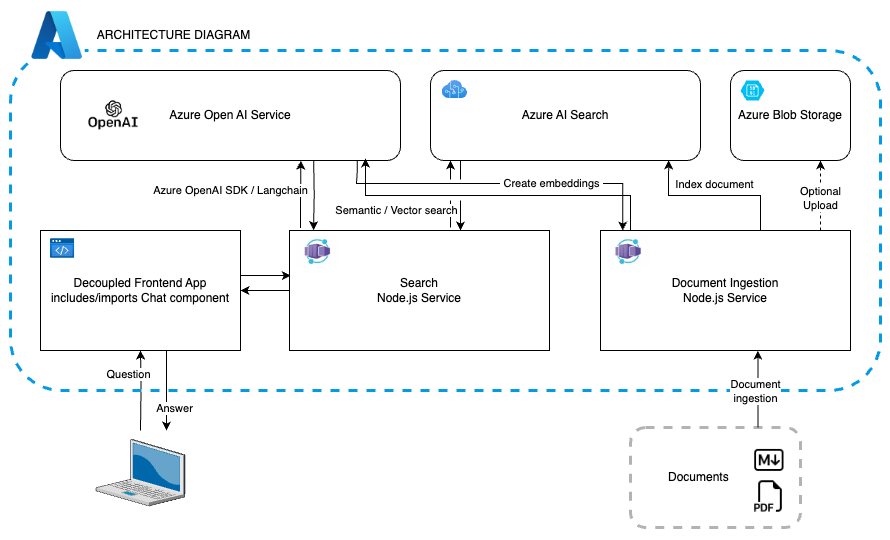

JavaScript

Chat with your data using Azure OpenAI and Azure AI Search with JavaScript

This template is a complete end-to-end solution demonstrating the Retrieval-Augmented Generation (RAG) pattern running in Azure. It uses Azure AI Search for retrieval and Azure OpenAI large language models to power ChatGPT-style and Q&A experiences.

To get started with this template, see Get started with the chat using your own data sample for JavaScript. To access the source code and read in-depth details about the template, see the azure-search-openai-javascript GitHub repo.

This template demonstrates the use of these features.

| Azure hosting solution | Technologies | AI models |
|---|---|---|
| Azure Container Apps, Azure Static Web Apps | Azure OpenAI, Azure AI Search, Azure Storage, Azure Monitor | text-embedding-ada-002 |

Azure OpenAI chat frontend

This template is a minimal OpenAI chat web component that can be hooked to any backend implementation as a client.

To access the source code and read in-depth details about the template, see the azure-openai-chat-frontend GitHub repo.

This template demonstrates the use of these features.

| Azure hosting solution | Technologies | AI models |
|---|---|---|
| Azure Static Web Apps | Azure AI Search, Azure OpenAI | GPT 3.5 Turbo, GPT4 |

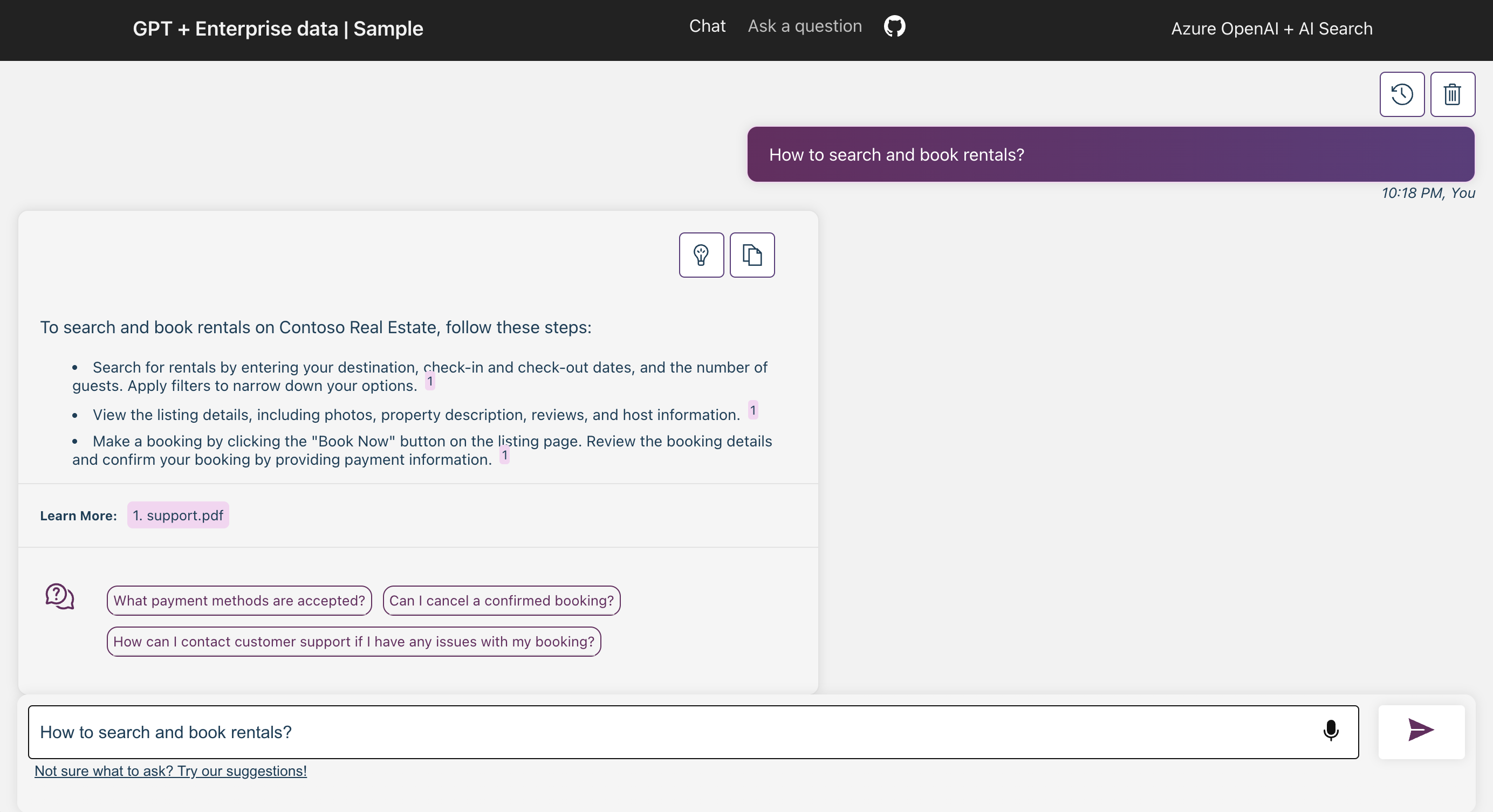

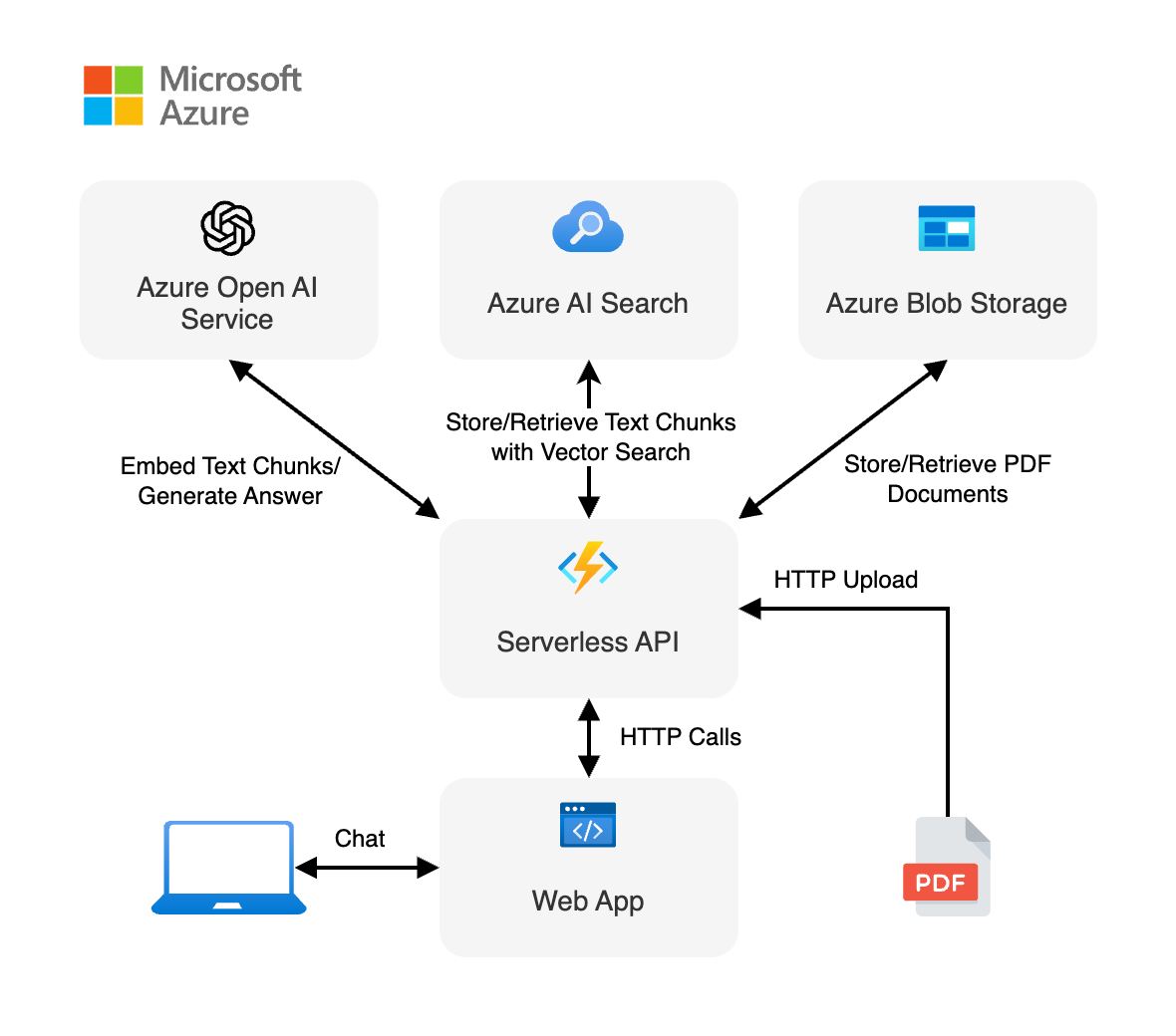

Serverless AI chat with RAG using LangChain.js

This template is a serverless AI chatbot with Retrieval-Augmented Generation (RAG) using LangChain.js and Azure that uses a set of enterprise documents to generate responses to user queries. It uses a fictitious company called Contoso Real Estate, and the experience allows its customers to ask support questions about the usage of its products. The sample data includes a set of documents that describe its terms of service, privacy policy, and support guide.

To learn how to deploy and run this template, see Get started with Serverless AI Chat with RAG using LangChain.js. To access the source code and read in-depth details about the template, see the serverless-chat-langchainjs GitHub repo.

This template demonstrates the use of these features.

| Azure hosting solution | Technologies | AI models |
|---|---|---|
| Azure Static Web Apps, Azure Functions | Azure AI Search, Azure OpenAI, Azure Cosmos DB, Azure Storage, Azure Managed Identity | GPT4, Mistral, Ollama |