Complying with enterprise data governance policies

Enterprises define data governance policies for how data is managed and can be accessed. AI engineering teams need to respect and support data security and compliance policies when working with enterprise data.

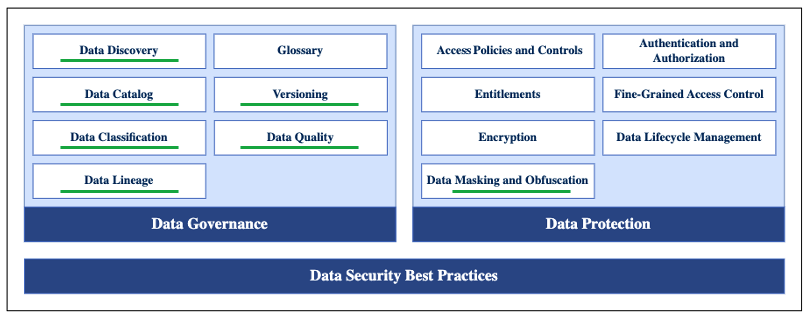

This content discusses the data governance capabilities underlined in the diagram below. For more information about data governance, see Data in the Microsoft Solutions Playbook.

Data catalog

A data catalog is an in-depth inventory of all data assets in an organization. Its main purpose is to make it easy for users to find the right data for analytical or business purpose.

These data assets can include, amongst others:

- Structured data

- Unstructured data such as documents, images, audio, and video

- Reports and query results

- Data visualizations and dashboards

- ML models

- Features extracted from ML models

- Connections between databases

Microsoft provides the following data catalog services:

- Azure Data Catalog helps your organization find, understand, and consume data sources.

- Azure Purview Data Catalog finds trusted data sources by browsing and searching your data assets. The data catalog aligns your assets with friendly business terms and data classification to identify data sources.

ℹ️ See Data: data catalog for more information nuanced to a Data Engineer/Governance role.

Data Catalog from an MLOps perspective

AI practitioners need to easily find, access, and search organizational data that can be valuable for solving problems with machine learning. Considerations such as data privacy and access need to be enforced as well.

The following are levels of data catalogs that relate to ML practitioners beyond a standard data catalog, namely:

Finding pre-processed datasets

The bulk of development time in an ML project typically is spent finding, pre-processing and preparing data. Thus, significant savings can result by ensuring that ML practitioners are able to easily discover existing datasets.

The data versioning guide provides more detailed information on how versioning may be achieved.

Finding pre-trained ML models

Similarly, being able to find a model that has already been trained can avoid the costs associated with duplicated effort. A model registry allows an organization to store and version models and also helps to organize and keep track of trained models.

For more information, see Azure Machine Learning Model Registry.

Finding pre-computed/pre-extracted ML features

Being able to store and re-use pre-computed or extracted features from a trained ML model saves an organization both development time and compute costs. It is important for organizations dealing with a large number of machine learning models to have an effective way to discover and select these features. These needs are typically now met with what is known as a Feature Store.

A Feature Store is a data system designed to manage raw data transformations into features - data inputs for a model. Feature Stores often include metadata management tools to register, share, and track features as well.

The feature store adoption guide dives deeper into whether a Feature Store may be right for your project.

Finding previous experiments

Tracking experiments in ML is essential to ensure repeatability and transparency. It allows for a clear record of work already done, making it easier to understand and replicate experiments. Keeping a complete audit record of experiments allows organizations to avoid duplicating efforts and incurring unnecessary costs. Even if not all models make it to production, the recorded experiments enable the organization to resume the work in the future.

For more information, see AI experimentation for more detailed guidance.

Some useful resources for data catalogs:

- Medical Imaging Server for DICOM: Catalog and discover medical imagery for a holistic view of patient data

Data Classification

Data Classification is the process of defining and categorizing data and other critical business information. Data Classification typically relates to the sensitivity of the data and who can access it. Data classification enables administrators to identify sensitive data and determine how it should be accessed and shared. Data Classification forms an essential first step toward data regulation compliancy.

ℹ️ Refer to the Data: data classification section for more information nuanced to a Data Engineer/Governance role. ℹ️ For information on creating a Data Classification Framework, refer to the Create a well-designed data classification framework article on Microsoft Learn.

Data Classification from an MLOps perspective

Data classification within ML relates to using ML algorithms and models to tag data by some means of an inferred relationship. At this foundational data stage, this classification may relate to identifying PII and other sensitive data, or as part of the Data Discovery process, logically grouping or clustering data that has not been explored or understood.

Data lineage and versioning

Data lineage is the process of tracking the flow of data over time from its provenance, transformations applied, final output and consumption within a Data Pipeline.

Understanding this lineage is crucial for many reasons, for example, to facilitate troubleshooting, to validate accuracy, and for consistency.

ℹ️ Refer to the Data: Data Lineage section for more information nuanced to a Data Engineer/Governance role.

Data lineage and versioning from an MLOps perspective

Data lineage in machine learning requires tracking the data's origin and all transformations applied to it. This includes tracking changes made by both existing data pipeline transformations as well as any additional transformations made to support the machine learning work.

See Versioning and Tracking ML Datasets for more information on implementing this in Azure Machine Learning through its dataset registration and versioning capabilities. The data versioning guide in this playbook provides more general guidance on the patterns and concepts used.

By registering a dataset, you have a clear and complete record of the specific data used to train an ML model. This is a vital component of MLOps because it enables reproducibility and comprehensive interpretation of the model’s predictions. Data versions are useful to tag the addition of new training data or the application of data engineering/feature transform techniques. Note that in Azure ML pipelines, it's possible to register and version both the input and output datasets passed through the pipeline steps. This is crucial for interpretability and reproducibility across a machine learning workflow, as emphasized above.

Data Quality

Data Quality may be defined as its suitability to solve the underlying business problem. In general, the following characteristics may be applied to determine the quality of data, amongst others, namely:

- Validity: The data is stored in the same format.

- Completeness: All data attributes are present and consistently collected and stored.

- Consistency: The same rules are applied consistently to all of the data.

- Accuracy: The data represents exactly what it should.

- Timeliness: The data is relevant to the time frame, which needs to be addressed within the business context.

ℹ️ Refer to the Data: Data Quality section for more information nuanced to a Data Engineer/Governance role.

Great Expectations is a useful tool for assessing data quality.

Data quality from an MLOps perspective

For an ML practitioner, data quality can include characteristics such as:

- Its distribution, outliers, and completeness.

- Its representativeness to solve a specific business problem using ML.

- Whether it contains PII data and whether redaction or obfuscation is required.

Refer to Exploratory data analysis for information.