Hello @Sameer,

The issue could be caused by the first stage of the staged copy (source -> staging blob), which uses CSV format. The CSV serializer fails to escape the escape character contained in the data, and this eventually makes the data invalid.

You can try the following workaround on your end.

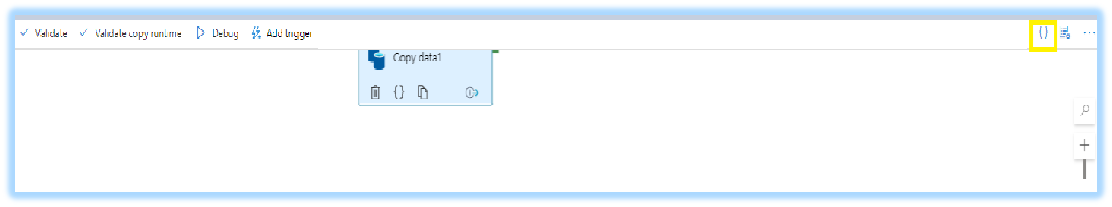

Manually edit the JSON payload of your pipeline: go to properties -> activities -> {your copy activity} -> "typeProperties" and add the flag `"escapeQuoteEscaping": true`.
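For reference, here is a trimmed sketch of where the flag sits in the pipeline JSON. The names `MyPipeline` and `MyCopyActivity` are hypothetical placeholders, and the other keys your copy activity already has (`source`, `sink`, `stagingSettings`, and so on) are omitted for brevity; they should stay exactly as they are. Only the `escapeQuoteEscaping` line is new.

```json
{
    "name": "MyPipeline",
    "properties": {
        "activities": [
            {
                "name": "MyCopyActivity",
                "type": "Copy",
                "typeProperties": {
                    "enableStaging": true,
                    "escapeQuoteEscaping": true
                }
            }
        ]
    }
}
```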

Please note: if you are using a self-hosted integration runtime (SHIR), make sure its version is 5.5.7762.1 or later to get this fix.

Hope this helps. Please let us know if you have any further queries.
