Modern Datacenter Architecture Patterns-Global Load Balanced Web Tier

The Global Load Balanced Web Tier design pattern details the Azure features and services required to deliver web tier services that can provide predictable performance and high availability across geographic boundaries.


Prepared by:

Avery Spates – Microsoft

Joel Yoker – Microsoft

Tom Shinder – Microsoft

David Ziembicki – Microsoft

Cloud Platform Integration Framework Overview and Patterns:

Cloud Platform Integration Framework – Overview and Architecture

Modern Datacenter Architecture Patterns-Multi-Site Data Tier

Modern Datacenter Architecture Patterns - Offsite Batch Processing Tier

Modern Datacenter Architecture Patterns-Global Load Balanced Web Tier

1 Overview

The Global Load Balanced Web Tier design pattern details the Azure features and services required to deliver web tier services that can provide predictable performance and high availability across geographic boundaries. A full list of Microsoft Azure regions and the services available within each is provided within the Microsoft Azure documentation site.


For the purposes of this design pattern a web tier is defined as a tier of service providing traditional HTTP/HTTPS content or application services in either an isolated manner or as part of a multi-tiered web application. Within this pattern, load balancing of the web tier is provided both locally within the region and across regions. From a compute perspective, these services can be provided through Microsoft Azure virtual machines, web sites or a combination of both. Virtual machines providing web services can host content using any supported Microsoft Windows or Linux distribution guest operating system within Microsoft Azure.

1.1 Pattern Requirements and Service Description

The Global Load Balanced Web Tier pattern is designed to help meet the need for security, reliability, and presentation layer services within a standalone configuration or as part of a multi-tiered web application. It is designed for reliability, availability, and performance with a 99.9 percent scheduled uptime service level target (SLT). The 99.9 percent target is apportioned across Microsoft Azure architectural constructs so that the planned and unplanned failures expected within a year (across Azure regions, within an Azure datacenter, and across Azure virtual machines) can be absorbed while still meeting the SLT. Being a foundational architectural pattern, it is expected that the web tier outlined in this document will be composed with other tiers (such as a data tier) to support a larger solution architecture or design pattern.
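To make the 99.9 percent target concrete, the short sketch below converts an uptime percentage into an annual and monthly downtime budget. It is plain arithmetic under the assumption of a 365-day year, and the function name is hypothetical rather than part of any Azure tool:

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored for simplicity


def downtime_budget_hours(slt_percent: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Return the maximum downtime (in hours) over a period that still meets the SLT."""
    if not 0 < slt_percent <= 100:
        raise ValueError("slt_percent must be in the range (0, 100]")
    return period_hours * (1 - slt_percent / 100)


if __name__ == "__main__":
    for slt in (99.9, 99.95, 99.99):
        yearly = downtime_budget_hours(slt)
        monthly_minutes = downtime_budget_hours(slt, HOURS_PER_YEAR / 12) * 60
        print(f"{slt}% -> {yearly:.2f} hours/year, {monthly_minutes:.1f} minutes/month")
```

At 99.9 percent this works out to roughly 8.76 hours per year (about 43.8 minutes per month), which is the budget that planned and unplanned failures must fit within.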

A foundational set of design principles has been employed to make sure that the Global Load Balanced Web Tier architectural pattern is optimized to deliver a robust set of capabilities and functionalities with the highest possible uptime. The following design principles are the basis for this architectural pattern.

- High availability: The Global Load Balanced Web Tier pattern is designed to deliver 99.9 percent scheduled uptime using standard Microsoft Azure services. Leveraging Azure availability sets within a given Azure region along with hosting the web tier across Azure regions helps make sure that the platform is able to deliver this SLT. The ability to meet this SLT is also determined by a customer’s internal operational processes, tools and the reliability of the web application itself.

- Service continuity: Best practices from Microsoft Azure architectures are incorporated into the pattern to handle unscheduled service outages.

- Quality: The Global Load Balanced Web Tier is designed based on lessons learned from internal Microsoft deployments and Microsoft Azure recommended practices, helping ensure the highest quality and reliability of the design.

- Management: Microsoft System Center and Azure management capabilities will provide the necessary platform for the management of the Global Load Balanced Web Tier as defined within the Cloud Platform Integration Framework.

- Administration: Using Microsoft Windows PowerShell™, the Microsoft Azure Portal and Visual Studio, administrators can perform any tasks associated with Microsoft Azure and the web application tier hosted within the service.

2 Architecture Pattern

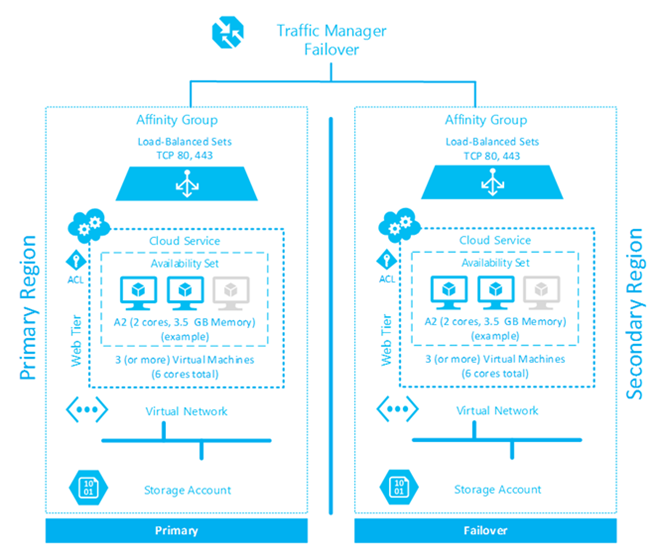

This document describes a pattern for providing access to web services or web server content over multiple geographies for the purposes of availability and redundancy. Critical services are illustrated without attention to web platform constraints or development methodology within the web service itself. There are two variations of this pattern: one which hosts the web content or services on virtual machines (using Azure-supported operating systems and families) and one which uses Azure Websites. The diagram above is a simple illustration of the relevant services and how they are used as part of this pattern, using the example of virtual machines.

Each of the main service areas is outlined in more detail in the following sections.

2.1 Pattern Dependencies

The primary dependency of the Global Load Balanced Web Tier architectural pattern is the additional service tiers typically found within a multi-tier web application. These typically include a database tier, but they may also include other business logic components which provide additional capabilities to the web application. For these services, it is critical to align with the Azure region and, more specifically, with the affinity groups which contain the Azure services used in this pattern. Where possible, placing other service tiers within the same Azure affinity group helps make sure resources associated with this architecture are co-located within a given Azure datacenter. This has the added benefit of increasing performance between Azure services and minimizing costs associated with egress (outbound from the Azure datacenter) traffic.

2.2 Azure Services

The Global Load Balanced Web Tier architectural pattern consists of the following Azure services:

- Affinity Groups

- Cloud Services

- Availability Sets

- Endpoint Load-Balanced Sets

- Virtual Networks

- Virtual Machines or Web Sites

- Storage Account

- Traffic Manager

This pattern consists of two variations with core web services or content being hosted either within Azure web roles or within virtual machines (running as web servers) located in two separate Azure regions. From a compute perspective a minimum of two Azure compute resources (web roles or virtual machines) must be deployed to support local availability of the web tier.

Azure affinity groups are established in each selected Azure region to help make sure that resources associated with the web tier (Azure compute and storage resources) are co-located within a given Azure datacenter. A single cloud service per region will be required to host the resources residing within this tier. Co-location has the added benefit of improving performance between interconnected components found within this tier. Across regions, the web content must be synchronized either through internal or external mechanisms. Local availability of the web tier is addressed through a combination of Azure availability sets and load-balanced endpoints.

From a network perspective, the Azure web sites or virtual machines hosted in each cloud service must expose their web service endpoints through load-balanced sets spanning the systems within each availability set. When an endpoint is configured as a load-balanced set, the Azure load balancer distributes traffic arriving on the port defined within the endpoint across the web servers in the cloud service.
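The distribution behavior of a load-balanced set can be illustrated with a small simulation. This is a hedged sketch, assuming the balancer hashes each connection's 5-tuple (source and destination IP and port, plus protocol) to choose among instances that pass their health probe, which is a common load-balancer technique; it is not Azure's actual implementation, and the instance names are hypothetical:

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """A client connection identified by its 5-tuple."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str = "tcp"


def pick_backend(flow: Flow, backends: list[str], healthy: set[str]) -> str:
    """Map a flow to one healthy instance in the load-balanced set by hashing its 5-tuple."""
    candidates = sorted(b for b in backends if b in healthy)  # stable order keeps the mapping deterministic
    if not candidates:
        raise RuntimeError("no healthy instances in the load-balanced set")
    key = f"{flow.src_ip}|{flow.src_port}|{flow.dst_ip}|{flow.dst_port}|{flow.protocol}"
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return candidates[int.from_bytes(digest[:8], "big") % len(candidates)]


if __name__ == "__main__":
    web_tier = ["web-vm-01", "web-vm-02"]  # hypothetical instances in one availability set
    flow = Flow("198.51.100.20", 50123, "203.0.113.10", 80)
    print(pick_backend(flow, web_tier, healthy={"web-vm-01", "web-vm-02"}))
    # If web-vm-01 fails its health probe, every flow lands on the remaining instance.
    print(pick_backend(flow, web_tier, healthy={"web-vm-02"}))
```

Hashing the full 5-tuple keeps a given connection on one instance while spreading different connections across the set.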

It is recommended that endpoints (including load-balanced sets) be configured only for the services which should be exposed to other tiers, including external access. Additionally, access control lists (ACLs) can be configured on each defined endpoint to provide security for ports required for administration or communications between services in other tiers.

Each region must have a virtual network defined to support communication between resources within the tier and between other tiers which may be found in a multi-tier web application (such as a database or other component found within the Solution). The virtual network must include adequate address space to support the compute instances and have DNS servers defined to support DNS queries across service tiers.
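One way to check that a subnet has adequate address space is to compare its usable addresses against the planned instance count plus headroom for scale-out. This sketch uses Python's standard `ipaddress` module. The default of five reserved addresses per subnet reflects current Azure virtual network documentation and may differ for older deployment models; the 50 percent headroom and the CIDR ranges are illustrative assumptions:

```python
import ipaddress
import math


def usable_addresses(cidr: str, reserved_per_subnet: int = 5) -> int:
    """Addresses left for instances after the platform's reserved addresses are removed."""
    network = ipaddress.ip_network(cidr)  # raises ValueError for malformed CIDRs or host bits set
    return max(network.num_addresses - reserved_per_subnet, 0)


def subnet_fits(cidr: str, instances: int, headroom: float = 0.5, reserved_per_subnet: int = 5) -> bool:
    """True if the subnet can hold the planned instances plus a fractional growth headroom."""
    needed = math.ceil(instances * (1 + headroom))
    return usable_addresses(cidr, reserved_per_subnet) >= needed


if __name__ == "__main__":
    for cidr in ("10.1.0.0/29", "10.1.0.0/28", "10.1.0.0/24"):
        print(cidr, usable_addresses(cidr), subnet_fits(cidr, instances=6))
```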


Azure Traffic Manager also supports Azure Websites custom domains, which provide the flexibility to present a more recognizable name to users. To support availability between regions, an Azure traffic manager instance must be configured across the cloud services defined above, using the failover load-balancing method.
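The failover method's selection logic can be sketched as follows: endpoints are tried in priority order, and the first one reported healthy is returned. This is an illustrative model only, assuming a simple boolean health flag per endpoint; the endpoint names are hypothetical, and the case where every endpoint is unhealthy is not modeled on Traffic Manager's actual behavior:

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Endpoint:
    name: str       # hypothetical cloud service DNS name in one region
    priority: int   # lower value = preferred (primary region first)
    healthy: bool   # result of the most recent health check


def resolve_failover(endpoints: List[Endpoint]) -> Optional[str]:
    """Return the highest-priority healthy endpoint, or None if none are healthy."""
    for endpoint in sorted(endpoints, key=lambda e: e.priority):
        if endpoint.healthy:
            return endpoint.name
    return None


if __name__ == "__main__":
    profile = [
        Endpoint("webtier-primary.cloudapp.net", priority=1, healthy=True),
        Endpoint("webtier-secondary.cloudapp.net", priority=2, healthy=True),
    ]
    print(resolve_failover(profile))  # the primary, while it is healthy
    profile[0].healthy = False
    print(resolve_failover(profile))  # the secondary, after the primary fails its health check
```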

Note that when using web roles, Azure Traffic Manager requires the web role “Web Hosting Plan Mode” to be set to Standard mode. This allows Azure Traffic Manager to register and recognize the site.

Storage components include one or more storage accounts per region. Each storage account should be configured to be located in the affinity group defined within each region (as opposed to a specific region) to help make sure the storage services will be in the same datacenter as the Azure compute services outlined above. Each storage account should be configured to use geo-redundant storage to support availability.

While the primary location can be selected, the secondary location is determined by default by the Azure region paired with the primary. Details on location pairings can be found in the article Windows Azure Storage Redundancy Options and Read Access Geo Redundant Storage. Within each storage account, a container should be created to contain the virtual hard disks associated with the virtual machines outlined above.

Optional services for the pattern include Application Insights within the web application, Autoscale configurations, alert rules to monitor virtual machine instances, and endpoint monitoring of specified URLs from selected locations. Storage accounts can additionally be configured to monitor transaction statistics and to log read, write and/or delete requests for a specified number of days.

2.3 Pattern Considerations

Considerations for the Global Load Balanced Web Tier architectural pattern include securing exposed endpoints and the subscription compute limits within a given cloud service. For each component of Microsoft Azure, a series of subscription and service limits are defined by the service. These limits are subject to change and are published on the Azure Subscription and Service Limits, Quotas, and Constraints page.

Microsoft Azure limits fall into two categories: default limits and maximum limits. Default limits apply to every Azure subscription and can be increased through a request to Microsoft support, whereas maximum limits define the upper boundary of a given service or capability within Azure. Increases can be requested from Microsoft support through the Azure portal, as outlined in Understanding Azure Limits and Increases. However, a request cannot exceed the maximum limits outlined for each Azure service.

The primary constraint that typically is encountered with web farms is the number of cores per subscription and within each cloud service. The default number of cores (currently 20) can quickly be exceeded by web servers which either need to scale up or scale out to meet performance needs within this tier.

For example, if the web tier is hosted in Azure virtual machines and requires at least four cores per instance to support the desired performance of the web application, the Azure virtual machine size required will be A3 or higher. Using this example, the cloud service hosting the web tier can only scale to support five A3 virtual machines, which may not be sufficient to support the inbound traffic and performance requirements of the application. This also can impact Azure autoscale configurations by not providing enough resources to support load scenarios.
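The arithmetic in this example can be captured in a small helper for quota planning. The function names are hypothetical; the 20-core default and the four cores of an A3 instance are the figures quoted in this section, and current limits should be confirmed on the limits page before relying on them:

```python
def max_instances(core_quota: int, cores_per_instance: int, cores_in_use: int = 0) -> int:
    """How many more instances of a given size fit under the core quota."""
    if cores_per_instance <= 0:
        raise ValueError("cores_per_instance must be positive")
    return max(core_quota - cores_in_use, 0) // cores_per_instance


def cores_to_request(target_instances: int, cores_per_instance: int) -> int:
    """Total cores the quota must allow for a target instance count."""
    return target_instances * cores_per_instance


if __name__ == "__main__":
    DEFAULT_CORE_QUOTA = 20  # default quoted in this document; subject to change
    A3_CORES = 4
    print(max_instances(DEFAULT_CORE_QUOTA, A3_CORES))  # 5 A3 instances
    print(cores_to_request(8, A3_CORES))                # 32 cores for 8 A3 instances
```

If both regional deployments share one subscription, they draw on the same subscription-level core count, which tightens the limit further.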

For these reasons, it is recommended that the compute scale requirements of the entire tier be evaluated when implementing this pattern, and that a request to raise the number of cores available to the configured cloud service and subscription be submitted before any production implementation.

Another consideration includes the number of reserved IP addresses which can be used within a subscription (currently five), which may require expansion if dynamic addressing of compute resources is not a preferred configuration within the web application.

3 Interfaces and End Points

As outlined earlier, the Azure web roles or virtual machines hosted in each cloud service will typically expose web endpoints (HTTP and HTTPS) to support a presentation layer or expose web services within a given web application. It is recommended that endpoints (including load-balanced sets) be configured only for the services which should be exposed to other tiers, including external access.

For public-facing web applications, these services are typically granted broad access; however, in hybrid enterprise scenarios, access to these applications may be restricted to the internal organization only. In addition, endpoints for remote management services may be required. When virtual machines are created using the portal, Azure defines administrative endpoints for remote management using PowerShell and Remote Desktop Protocol by default.

In addition, this tier may require other TCP/IP ports to support bi-directional communication with other tiers of the solution, which is typical for multi-tier applications. In nearly all cases these endpoints, along with other administrative ports, should be restricted to specific subnets within Azure. To support this, access control lists (ACLs) should be configured on each defined endpoint to secure the ports required for administration or for communication with services in other tiers.
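The effect of endpoint ACLs can be illustrated with a small evaluator. This is a hedged sketch of the rule semantics as commonly described for Azure endpoint ACLs, which should be confirmed against current Azure documentation: rules are evaluated in ascending order and the first match wins; with no rules, all traffic is permitted; once any permit rule exists, unmatched traffic is denied. The subnets shown are illustrative:

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class AclRule:
    order: int          # lower numbers are evaluated first
    action: str         # "permit" or "deny"
    remote_subnet: str  # CIDR of the remote clients the rule applies to


def is_allowed(source_ip: str, rules: list[AclRule]) -> bool:
    """Decide whether traffic from source_ip may reach the endpoint."""
    address = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.order):
        if address in ipaddress.ip_network(rule.remote_subnet):
            return rule.action == "permit"  # first matching rule wins
    # No rule matched: an ACL that contains permit rules acts as an allow-list.
    return not any(rule.action == "permit" for rule in rules)


if __name__ == "__main__":
    # Hypothetical: only the application tier's subnet may reach a management port.
    management_acl = [AclRule(100, "permit", "10.0.2.0/24")]
    print(is_allowed("10.0.2.15", management_acl))     # inside the permitted subnet
    print(is_allowed("203.0.113.50", management_acl))  # no rule matches, so it is denied
```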

4 Availability and Resiliency

Microsoft provides clearly defined Service Level Agreements (SLAs) for each service provided within Azure. Each architectural pattern consists of one or more Azure services, and details about each individual Azure service can be found on the Microsoft Azure Service Level Agreement website. For the Global Load Balanced Web Tier architectural pattern, the required Azure services carry the following SLAs:

| Azure Service | Service Level Agreement |
| --- | --- |
| Cloud Service | 99.95% |
| Virtual Network Gateway | 99.9% |
| Virtual Machines (deployed in the same availability set) | 99.95% |
| Geo Redundant Storage (GRS) | 99.9% |
| Traffic Manager | 99.99% |

The composite SLA of the Global Load Balanced Web Tier architectural pattern is 99.9% when geo-redundant storage (GRS) is used. The key factor affecting availability is the redundancy of architectural components within and across Azure regions.
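One common way to reason about how the individual SLAs in the table combine is to multiply availabilities for components that must all be up (serial) and to multiply unavailabilities for redundant deployments (parallel). The sketch below applies that technique to the table's figures. It assumes independent failures and instant failover, and its grouping of services into a per-region chain is an illustrative assumption. It is not how Microsoft calculates or guarantees an SLA: correlated failures, failover detection time and content synchronization all make the idealized result an optimistic upper bound rather than a replacement for the pattern's stated figure.

```python
from math import prod


def serial(*availabilities: float) -> float:
    """Availability when every component must be up (independent failures assumed)."""
    return prod(availabilities)


def parallel(*availabilities: float) -> float:
    """Availability when at least one redundant copy must be up."""
    return 1 - prod(1 - a for a in availabilities)


# SLA figures from the table above, expressed as fractions.
CLOUD_SERVICE = 0.9995
VNET_GATEWAY = 0.999
VMS_IN_AVAILABILITY_SET = 0.9995
GRS = 0.999
TRAFFIC_MANAGER = 0.9999

# Assumed model: each region is a serial chain; two regions run in parallel behind Traffic Manager.
one_region = serial(CLOUD_SERVICE, VNET_GATEWAY, VMS_IN_AVAILABILITY_SET, GRS)
two_regions = serial(TRAFFIC_MANAGER, parallel(one_region, one_region))

if __name__ == "__main__":
    print(f"single region: {one_region:.5%}")
    print(f"two regions behind Traffic Manager: {two_regions:.5%}")
```

Under these assumptions a single region comes out at about 99.70 percent, below the 99.9 percent target, which illustrates why the pattern relies on a second region rather than on any single component's SLA.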

As described above, a minimum of two Azure compute resources (web roles or virtual machines) must be deployed to support local availability of the web tier. It is important that the number of compute resources include reserve capacity beyond what is needed to meet performance requirements alone.

Microsoft Azure supports availability within a region through fault domains and update domains (called upgrade domains for web roles). A fault domain is a physical availability construct: a single failure can take down all services within a given fault domain, and the instances in an availability set (or in a web role) are spread across two fault domains. An update domain is a logical availability construct which provides resiliency against planned outages (updates) to the underlying fabric on which the services reside; five update domains are used by default. While fault domains cannot be configured directly, update domains can be defined through Azure availability sets or through Web Role service definition attributes.
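The sketch below shows why spreading instances across fault and update domains matters. It assumes a simple round-robin placement across two fault domains and five update domains (Azure performs the actual placement itself), then counts how many instances survive the loss of the most heavily populated domain. The function names are hypothetical:

```python
from collections import Counter
from typing import List, Tuple

FAULT, UPDATE = 0, 1  # index into each (fault_domain, update_domain) tuple


def place(instance_count: int, fault_domains: int = 2, update_domains: int = 5) -> List[Tuple[int, int]]:
    """Round-robin assignment of instances to (fault domain, update domain) pairs."""
    return [(i % fault_domains, i % update_domains) for i in range(instance_count)]


def worst_case_survivors(placement: List[Tuple[int, int]], axis: int) -> int:
    """Instances still running after the most-populated domain on the given axis goes down."""
    if not placement:
        return 0
    counts = Counter(slot[axis] for slot in placement)
    return len(placement) - max(counts.values())


if __name__ == "__main__":
    for n in (1, 2, 4):
        layout = place(n)
        print(n, "instances:",
              worst_case_survivors(layout, FAULT), "survive a fault-domain failure,",
              worst_case_survivors(layout, UPDATE), "survive an update-domain update")
```

A single instance has no survivor in either case, which is the single virtual machine anti-pattern described later in this document.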

To support planned or unplanned downtime events, availability sets help make sure that at least one virtual machine in the set remains available and that the SLAs outlined above are met. As discussed previously, endpoints within the cloud service are configured as a load-balanced set to distribute traffic across the systems in this tier within a given region. Across regions, traffic manager is configured to use the failover load-balancing method, with the endpoint for the service in the primary Azure region placed first in the endpoint order.

5 Scale and Performance

A key consideration for the performance and scale of the Global Load Balanced Web Tier architectural pattern is the number of web role or virtual machine instances running within each cloud service at each datacenter. During testing, the solution should be load tested to determine the number of instances needed to meet both the minimum and the peak performance requirements.

Consider that within a datacenter, a fault domain could fail, reducing the capacity of this design pattern by half. If the pattern uses virtual machines, the number of compute instances required to deliver predictable minimum capacity during the failure of a fault domain is twice the minimum number of virtual machines required for performance. To address this while containing costs, one possible approach is to keep additional virtual machines deployed in reserve in an offline state, with either autoscale or automation rules enabled to bring them online when the web tier needs capacity.
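The twofold sizing rule can be derived rather than memorized: with N instances spread evenly across F fault domains, the largest fault domain holds ceil(N / F) of them, so the deployment size is the smallest N whose survivors still meet the performance minimum. The helper below is a hypothetical planning aid under that even-spread assumption; with two fault domains it reproduces the twofold rule:

```python
import math


def instances_to_deploy(min_for_performance: int, fault_domains: int = 2) -> int:
    """Smallest instance count that still meets min_for_performance after losing one fault domain."""
    if min_for_performance <= 0:
        return 0
    if fault_domains < 2:
        raise ValueError("at least two fault domains are needed to survive a fault-domain failure")
    n = min_for_performance
    # Evenly spread, the largest fault domain holds ceil(n / fault_domains) instances.
    while n - math.ceil(n / fault_domains) < min_for_performance:
        n += 1
    return n


if __name__ == "__main__":
    for minimum in (1, 2, 3, 5):
        print(minimum, "->", instances_to_deploy(minimum))
```

If the reserve instances are kept offline as suggested above, only the running subset accrues hourly compute charges until autoscale or automation brings the rest online.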

Additionally, depending on the audience for the application or service, Azure traffic manager can be configured to optimize for performance instead of failover. As discussed earlier, the suggested traffic manager load-balancing method is failover. With the failover method, requests are sent to a primary endpoint, and in the event of its failure they are redirected to a secondary endpoint.

The design pattern illustrated above focuses on the uptime of the service and uses the failover method to provide availability across Azure regions. From a performance and scale perspective, the traffic manager component of the pattern could instead be configured with the performance load-balancing method, which directs requests to the endpoint (hosted in a given Azure region) with the lowest latency.
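The performance method's selection can be sketched as choosing, among healthy endpoints, the one with the lowest latency for the requesting user. This is illustrative only: it assumes a latency table for the user's location is already available (Traffic Manager maintains its own latency measurements, which this sketch does not reproduce), and the endpoint names and millisecond values are hypothetical:

```python
from typing import Dict, Optional, Set


def resolve_performance(latency_ms: Dict[str, float], healthy: Set[str]) -> Optional[str]:
    """Return the healthy endpoint with the lowest latency, or None if none is healthy."""
    candidates = {endpoint: ms for endpoint, ms in latency_ms.items() if endpoint in healthy}
    if not candidates:
        return None
    return min(candidates, key=candidates.get)


if __name__ == "__main__":
    # Hypothetical latencies from one user population to each regional deployment.
    latency_from_europe = {"webtier-west-europe": 18, "webtier-us-east": 95}
    print(resolve_performance(latency_from_europe, {"webtier-west-europe", "webtier-us-east"}))
    # If the nearer region is unhealthy, users are sent to the next-lowest-latency region.
    print(resolve_performance(latency_from_europe, {"webtier-us-east"}))
```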

6 Cost

An important consideration when deploying any solution within Microsoft Azure is the cost of ownership. Costs related to on-premises cloud environments typically consist of up-front investments in compute, storage and network resources, while costs related to public cloud environments such as Azure are based on the granular consumption of the services and resources found within them. Costs can be broken down into two main categories:

- Cost factors

- Cost drivers

Cost factors consist of the specific Microsoft Azure services which have a unit consumption cost and are required to compose a given architectural pattern. Cost drivers are a series of configuration decisions for these services within a given architectural pattern that can increase or decrease costs.

Microsoft Azure costs are divided by the specific service or capability hosted within Azure and continually updated to keep pace with the market demand. Costs for each service are published publicly on the Microsoft Azure pricing calculator. It is recommended that costs be reviewed regularly during the design, implementation and operation of this and other architectural patterns.

6.1 Cost Factors

Cost factors within the Global Load Balanced Web Tier architectural pattern include the choices between Azure compute resources and the use of Azure traffic manager.

When using Azure virtual machines, the two factors which affect cost are the size of the virtual machine and the storage of the virtual machine data. Microsoft Azure provides a predefined set of virtual machine sizes offering an array of CPU and memory configurations. Virtual machines are measured (and therefore charged) by the hour of use, and de-allocated virtual machines (those which are turned off) carry no compute charge. The storage accounts used to host the virtual machine hard disks carry a charge per gigabyte (GB) regardless of whether the associated virtual machines are running. Because the Global Load Balanced Web Tier architectural pattern specifies geo-redundant storage (GRS), this cost is higher than that of the base configuration, locally redundant storage (LRS).
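The two virtual machine cost factors can be combined in a simple monthly estimate: compute is charged only for running hours, while storage is charged for provisioned capacity whether or not the virtual machine is running. The rates and disk size in this sketch are hypothetical placeholders, not Azure prices; use the Microsoft Azure pricing calculator for real figures:

```python
def monthly_vm_cost(instances: int, running_hours: float, hourly_rate: float,
                    disk_gb_per_instance: float, storage_rate_per_gb_month: float) -> float:
    """Estimated monthly cost for a set of identical virtual machines."""
    compute = instances * running_hours * hourly_rate                        # de-allocated hours cost nothing
    storage = instances * disk_gb_per_instance * storage_rate_per_gb_month  # billed even when de-allocated
    return compute + storage


if __name__ == "__main__":
    HOURLY_RATE = 0.50      # hypothetical placeholder price per VM hour
    GRS_RATE_PER_GB = 0.10  # hypothetical placeholder GRS price per GB-month
    DISK_GB = 40            # hypothetical disk size per VM
    running = monthly_vm_cost(4, 730, HOURLY_RATE, DISK_GB, GRS_RATE_PER_GB)
    deallocated = monthly_vm_cost(4, 0, HOURLY_RATE, DISK_GB, GRS_RATE_PER_GB)
    print(f"running all month: {running:.2f}; de-allocated all month: {deallocated:.2f}")
```

The de-allocated case shows that reserve instances still carry a storage charge even when they accrue no compute charge.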

The Global Load Balanced Web Tier architectural pattern also specifies the use of traffic manager to support failover between endpoints across Azure regions. Costs for traffic manager are calculated by the number of queries (per million) and the health checks which are measured by the number of service endpoints checked per month.
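Traffic manager charges can be estimated from these two metrics. This sketch assumes query pricing per million queries with a separate rate above the first billion queries in a month, and a per-endpoint monthly health-check rate that differs for endpoints inside and outside Azure, as summarized in the Cost Drivers table. All rates are hypothetical placeholders:

```python
TIER_LIMIT = 1_000_000_000  # first billion DNS queries in a month


def query_cost(queries: int, rate_first_tier: float, rate_above_tier: float) -> float:
    """DNS query cost, with both rates expressed per million queries."""
    first = min(queries, TIER_LIMIT)
    above = max(queries - TIER_LIMIT, 0)
    return first / 1_000_000 * rate_first_tier + above / 1_000_000 * rate_above_tier


def health_check_cost(azure_endpoints: int, external_endpoints: int,
                      azure_rate: float, external_rate: float) -> float:
    """Monthly health-check cost; endpoints inside and outside Azure are priced separately."""
    return azure_endpoints * azure_rate + external_endpoints * external_rate


if __name__ == "__main__":
    # Hypothetical placeholder rates, not Azure prices.
    dns = query_cost(1_500_000_000, rate_first_tier=0.50, rate_above_tier=0.40)
    checks = health_check_cost(azure_endpoints=2, external_endpoints=0,
                               azure_rate=0.35, external_rate=0.50)
    print(f"queries: {dns:.2f}, health checks: {checks:.2f}")
```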

Additional cost factors consist of the inclusion of optional services such as the use of Application Insights for monitoring the web application hosted within the pattern.

Finally, while inbound (ingress) network traffic carries no charge, outbound (egress) traffic from an Azure virtual network does.

6.2 Cost Drivers

As stated earlier, cost drivers are the configurable options of the Azure services required to implement an architectural pattern, and they can affect the overall cost of the Solution. These configuration choices can have either a positive or negative impact on the cost of ownership of a given Solution within Azure; however, they may also affect the overall performance and availability of the Solution, depending on the selections made by the organization. Cost drivers can be categorized by their level of impact (high, medium and low). Cost drivers for the Global Load Balanced Web Tier architectural pattern are summarized in the table below.

| Impact Level | Cost Driver | Description |
| --- | --- | --- |
| HIGH | State of Azure virtual machine instances | Azure compute costs are driven by the number of running instances and the size of the virtual machine. Unlike running virtual machines, those in an offline state are de-allocated and do not incur hourly compute charges. |
| HIGH | Number of Azure virtual machine instances | Azure compute costs are also driven by the number of running virtual machines in a cloud service. To support local availability, the architectural pattern uses multiple virtual machines in each Azure region using availability sets. Azure autoscale can be used to keep fewer instances running and to address scale requirements on demand when defined performance thresholds are exceeded. |
| MEDIUM | Size (and type) of Azure virtual machine instances | Azure compute costs also depend on the virtual machine instance size (and type). Instances range from low CPU and memory configurations to CPU- and memory-intensive sizes. Higher memory and CPU core allocations carry higher hourly operating costs. Options include using a smaller number of large instances or a larger number of small instances to address performance requirements. |
| LOW | Virtual machine hard disk size | Azure storage carries a cost per GB, and provisioned virtual machines (online and offline) are stored within the defined storage account in the Azure subscription. While still a cost consideration, virtual machine hard disk sizes are expected to be small and are not expected to have a significant impact on the overall cost of solutions using this pattern. |
| LOW | Number of traffic manager DNS queries | One price metric for Azure traffic manager is the number of DNS queries it must load balance. Costs are based on the number of DNS queries balanced per month, with one rate for the first billion queries and a different rate for queries above this amount in a given month. This cost is the same across all Azure traffic manager load-balancing methods. |
| LOW | Number of traffic manager health checks | Azure traffic manager also monitors the health of each service endpoint and performs redirection in the event of failure. The cost of this service depends on whether the endpoint defined within traffic manager is internal or external to Azure. Like DNS queries, this cost is the same across all Azure traffic manager load-balancing methods. |
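The autoscale behavior described for the HIGH-impact drivers can be sketched as a threshold rule that keeps the instance count between a floor and a ceiling. The CPU thresholds, step size and bounds are hypothetical, and Azure autoscale has its own rule configuration that this does not reproduce. Keeping the floor at two or more instances preserves the local availability requirement described earlier in this pattern:

```python
def autoscale_decision(current: int, avg_cpu_percent: float, minimum: int, maximum: int,
                       scale_out_above: float = 75.0, scale_in_below: float = 25.0,
                       step: int = 1) -> int:
    """Return the new instance count for one evaluation interval."""
    if minimum < 2:
        raise ValueError("keep at least two instances per region for local availability")
    if avg_cpu_percent > scale_out_above:
        target = current + step  # sustained load: bring a reserve instance online
    elif avg_cpu_percent < scale_in_below:
        target = current - step  # idle capacity: take an instance offline to save compute cost
    else:
        target = current
    return max(minimum, min(maximum, target))


if __name__ == "__main__":
    count = 2
    for cpu in (82.0, 88.0, 60.0, 15.0, 12.0):  # hypothetical average CPU readings
        count = autoscale_decision(count, cpu, minimum=2, maximum=6)
        print(f"cpu={cpu}% -> {count} running instances")
```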

7 Operations

The Cloud Platform Integration Framework (CPIF) extends the operational and management functions of Microsoft Azure, System Center and Windows Server to support managed cloud workloads. As outlined in CPIF, Microsoft Azure architectural patterns support deployment, business continuity and disaster recovery, monitoring and maintenance as part of the operations of the Global Load Balanced Web Tier architectural pattern.


Deployment of this pattern can be achieved through the standard Azure Management Portal, Visual Studio and Azure PowerShell. For deployment using PowerShell, several examples are provided at the Azure Script Center. Relevant examples include the Deploy a Windows Azure Virtual Machine with Two Data Disks sample and the Deploy Windows Azure VMs to an Availability Set and Load Balanced on an Endpoint sample.

Additionally, the Global Load Balanced Web Tier architectural pattern can be created using the Azure Resource Group capability which is in preview. Templates can be used in conjunction with Azure resource groups to deploy the necessary components for this pattern, such as the sample for updating PowerShell deployment scripts to use Azure Resource Manager template.

Monitoring of this pattern and associated resources can be achieved in two ways using System Center and Microsoft Azure. When using virtual machines (IaaS), the application can be monitored using System Center 2012 R2 Operations Manager. Like on-premises resources, Operations Manager provides management and monitoring capabilities for virtual machines running within Azure, monitoring critical services using standard management packs.

In addition, Operations Manager can provide application telemetry for websites hosted within virtual machines using Application Performance Monitoring (APM). Operations Manager APM supports the monitoring of .NET and Java applications hosted on Windows Server using Internet Information Services (IIS). By defining thresholds, APM provides Operations Manager with performance and reliability data to support detailed analysis of application failures.

For applications hosted natively within Azure Websites and Web Roles, Azure Application Insights can be leveraged to provide performance information about the web application and, like Operations Manager APM, provide notification of performance issues or application exceptions when they occur.

Maintenance of the Global Load Balanced Web Tier architectural pattern falls into two categories:

- Maintenance of the web application

- Maintenance of the web platform

For the update and maintenance of the web application, an integrated development environment (IDE) product and associated source control repository can be leveraged. Some IDE products such as Visual Studio and Visual Studio Online can natively integrate with Microsoft Azure subscriptions. Details about this integration can be found at the following link.

Platform updates depend on whether the Solution is deployed within Azure virtual machines or web roles. When using Azure virtual machines, the organization is responsible for updating the platform. This can be accomplished through tools such as Windows Server Update Services or System Center Configuration Manager Software Updates. When the web application is deployed using Microsoft Azure web roles, updates are managed by Microsoft Azure. While this simplifies the platform maintenance cycle, it can put availability at risk if services are not deployed with these updates in mind.

As discussed previously, availability sets and web role configurations help make sure the application spans update and fault domains, allowing the hosted web application to be resilient to outages caused by Azure platform updates. Like Azure web roles, Solutions deployed in Azure virtual machines must account for updates to the Azure fabric and require the same level of availability planning outlined earlier in this pattern guide.

From a business continuity and disaster recovery perspective, the availability constructs outlined earlier help make sure that solutions deployed using the Global Load Balanced Web Tier architectural pattern support continuity of operations when one or more services fail, both within an Azure region and across Azure regions. Backup of the tier must still be maintained by the organization using native Azure capabilities, Microsoft products or third-party solutions. The use of geo-redundant storage as specified within this pattern supports the ability to restore data across regions in the event of a local failure.

8 Architecture Anti-Patterns

As with any architectural design there are both recommended practices and approaches which are not desirable. Anti-patterns for the Global Load Balanced Web Tier include:

- Single Region Deployment

- Single Virtual Machine Deployment (per Region)

Within the Global Load Balanced Web Tier architectural pattern there are multiple decisions which could impact the availability of the entire application infrastructure or solution which depend on this tier. In many cases, a web tier serves as a presentation layer for other tiers of an application, including search, business logic components and data tiers.

For these reasons, it is important to avoid decisions which impact the availability of this tier. While scalability and performance are important for any web application, these configuration choices, unlike availability constructs, can be reviewed and revisited over time with few configuration changes and minimal impact to overall cost.

Deployment to a single Azure region potentially exposes the application to the failure or inaccessibility of a given Azure region. While the loss of an entire region is an unlikely event, in order to withstand failures of this magnitude the pattern should include deployment to a second Azure region as outlined in the sections above.

In addition, deploying a single virtual machine into each Azure region may appear on the surface to reduce costs; however, it does not protect against planned and unplanned downtime within a given Azure datacenter or region. Events such as regular updates and intermittent failures would not be mitigated by Azure fault and update domains, forcing consumers of the service to be redirected to another region, which could affect end-user performance depending on their latency to the region designated for failover.


While impact to the end-user experience is never considered an acceptable outcome in normal operations, during times of service outage having diminished capacity can sometimes be acceptable given the rarity of this type of catastrophic event. However, when availability is only planned between regions, not within a given region (as would be the case in a single virtual machine deployment), the frequency of this type of outage would be higher due to expected and planned maintenance cycles of the Azure fabric. For these reasons the architectural anti-patterns for this tier will typically focus on those decisions which impact the availability of the deployed Solution.