Azure resource logs provide insight into operations that are performed in an Azure resource. The content of resource logs is different for each resource type. They can include information about the operations performed on the resource, the status of those operations, and other details that help you understand the health and performance of the resource.

Collecting resource logs

Resource logs aren't collected by default. To collect them, you must create a diagnostic setting for each Azure resource. See Diagnostic settings in Azure Monitor for details. The sections below describe the destinations that resource logs can be sent to.
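A diagnostic setting is an Azure Resource Manager resource attached to the monitored resource. The following Python sketch shows roughly what creating one involves. It builds, but does not send, the REST request for a setting that routes logs to a Log Analytics workspace. The API version `2021-05-01-preview`, the `allLogs` category group, the setting name, and the resource and workspace IDs are assumptions or placeholders. Sending the request requires an authenticated HTTP client, which is omitted here.

```python
import json

# Assumed API version for Microsoft.Insights/diagnosticSettings; check the
# REST reference for the version you actually target.
API_VERSION = "2021-05-01-preview"


def build_diagnostic_setting_request(resource_id: str, setting_name: str,
                                     workspace_id: str,
                                     category_groups: list[str]) -> tuple[str, dict]:
    """Return (url, body) for a PUT that creates or updates a diagnostic setting.

    resource_id and workspace_id are full Azure resource IDs (placeholders below).
    """
    url = (
        "https://management.azure.com"
        f"{resource_id}/providers/Microsoft.Insights/diagnosticSettings/"
        f"{setting_name}?api-version={API_VERSION}"
    )
    body = {
        "properties": {
            # Destination: a Log Analytics workspace.
            "workspaceId": workspace_id,
            # One entry per enabled category group; a "category" key can be
            # used instead to enable a single log category.
            "logs": [{"categoryGroup": g, "enabled": True} for g in category_groups],
        }
    }
    return url, body


if __name__ == "__main__":
    url, body = build_diagnostic_setting_request(
        "/subscriptions/<sub-id>/resourceGroups/<rg>/providers/Microsoft.KeyVault/vaults/<vault>",
        "send-to-workspace",  # hypothetical setting name
        "/subscriptions/<sub-id>/resourceGroups/<rg>/providers/Microsoft.OperationalInsights/workspaces/<ws>",
        ["allLogs"],
    )
    print("PUT", url)
    print(json.dumps(body, indent=2))
```

Because the setting belongs to a single resource, the same request has to be repeated, with a different resource ID, for every resource whose logs you want to collect.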

Note

Resource logs aren't completely lossless. They're based on a store-and-forward architecture designed to affordably move petabytes of data per day at scale. This architecture includes built-in redundancy and retries across the platform but doesn't provide transactional guarantees. Whenever a persistent source of data loss is identified, its resolution and future prevention are prioritized. Small data losses may still occur due to temporary, non-repeating service issues distributed across Azure.

Destinations

When you create a diagnostic setting, you can choose to send resource logs to one or more of the following destinations. The destinations you choose are based on your needs for analysis, retention, and integration with other systems.

The following sections describe details of resource logs for each destination.

Log Analytics workspace

Send the resource logs to a Log Analytics workspace for the following functionality:

- Correlate resource logs with other log data using log queries.

- Create log alerts from resource log entries.

- Access resource log data with Power BI.

Collection mode

The tables in the Log Analytics workspace used by resource logs depend on the resource type and the type of collection the resource is using. There are two types of collection modes for resource logs:

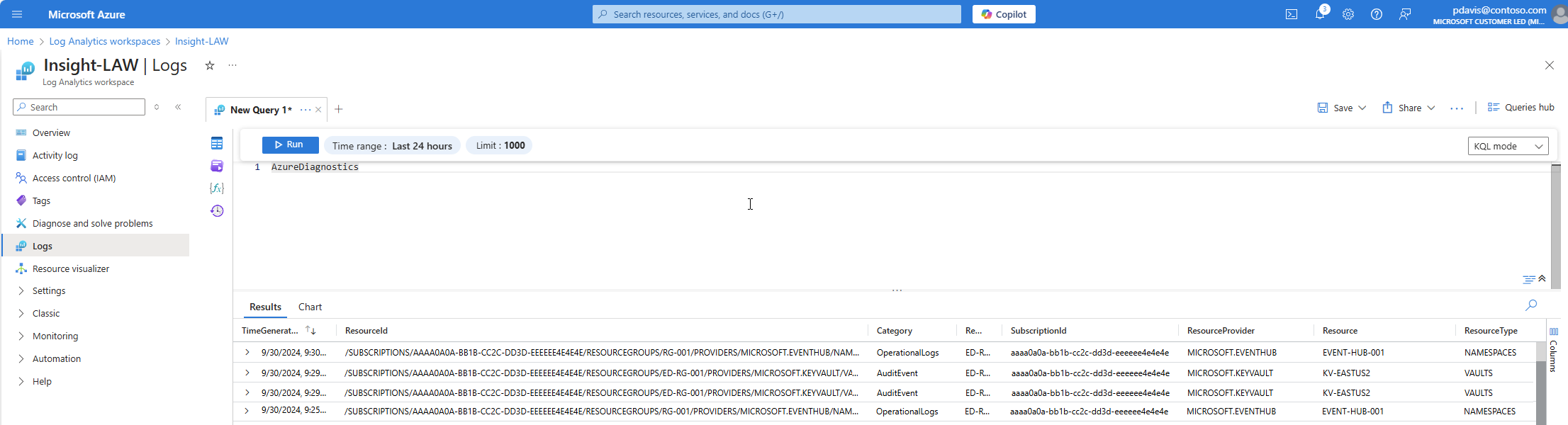

- Azure diagnostics: All data is written to the AzureDiagnostics table.

- Resource-specific: Data is written to individual tables for each category of the resource.
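To make the difference between the two modes concrete, this simplified Python model picks the workspace table a log record lands in. It is only an illustration. The category-to-table mapping is a hypothetical input supplied by the caller, because real resource-specific table names are defined by each service.

```python
from dataclasses import dataclass, field

AZURE_DIAGNOSTICS_TABLE = "AzureDiagnostics"


@dataclass
class LogRecord:
    resource_id: str
    category: str
    properties: dict = field(default_factory=dict)


def target_table(record: LogRecord, resource_specific: bool,
                 category_tables: dict[str, str]) -> str:
    """Return the table a record is written to under the given collection mode.

    category_tables is a hypothetical mapping from log category to its
    dedicated table; real table names come from each service.
    """
    if not resource_specific:
        # Azure diagnostics mode: every category shares one table.
        return AZURE_DIAGNOSTICS_TABLE
    # Resource-specific mode: each category has its own table.
    return category_tables[record.category]


if __name__ == "__main__":
    rec = LogRecord("/subscriptions/<sub-id>/...", "ExampleCategory")
    tables = {"ExampleCategory": "ExampleCategoryTable"}  # hypothetical names
    print(target_table(rec, resource_specific=False, category_tables=tables))
    print(target_table(rec, resource_specific=True, category_tables=tables))
```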

Resource-specific

For logs using resource-specific mode, individual tables in the selected workspace are created for each log category selected in the diagnostic setting. Resource-specific logs have the following advantages over Azure diagnostics logs:

- Makes it easier to work with the data in log queries.

- Provides better discoverability of schemas and their structure.

- Improves performance by reducing both ingestion latency and query times.

- Provides the ability to grant Azure role-based access control rights on a specific table.

For a description of resource-specific logs and tables, see Supported Resource log categories for Azure Monitor.

Azure diagnostics mode

In Azure diagnostics mode, all data from any diagnostic setting is collected in the AzureDiagnostics table. This legacy method is used today by a minority of Azure services. Because multiple resource types send data to the same table, its schema is the superset of the schemas of all the different data types being collected. For details on the structure of this table and how it works with this potentially large number of columns, see AzureDiagnostics reference.

The AzureDiagnostics table contains the resourceId of the resource that generated the log, the category of the log, and the time the log was generated, as well as resource-specific properties.
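The following Python sketch illustrates why a shared table becomes wide and sparse. It merges records from two hypothetical resource types into one superset table. The shared columns are modeled as ResourceId, Category, and TimeGenerated. The other column names are invented for the example. This is a simplified model, not how Azure Monitor actually stores the table.

```python
def build_superset_table(records: list[dict]) -> tuple[list[str], list[list]]:
    """Flatten records from different resource types into one wide table.

    Every record carries the shared columns; each resource type adds its own.
    A column that a record doesn't have is left as None (empty).
    """
    shared = ["ResourceId", "Category", "TimeGenerated"]
    extra: list[str] = []
    for rec in records:
        for key in rec:
            # Collect every resource-specific column, in first-seen order.
            if key not in shared and key not in extra:
                extra.append(key)
    columns = shared + extra
    rows = [[rec.get(col) for col in columns] for rec in records]
    return columns, rows


if __name__ == "__main__":
    cols, rows = build_superset_table([
        {"ResourceId": "/res/a", "Category": "CatA", "TimeGenerated": "t1", "foo_s": "x"},
        {"ResourceId": "/res/b", "Category": "CatB", "TimeGenerated": "t2", "bar_d": 2.0},
    ])
    print(cols)
    for row in rows:
        print(row)
```

Each additional resource type adds its own columns, and those columns are empty for every other type. This is the effect the AzureDiagnostics reference describes.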

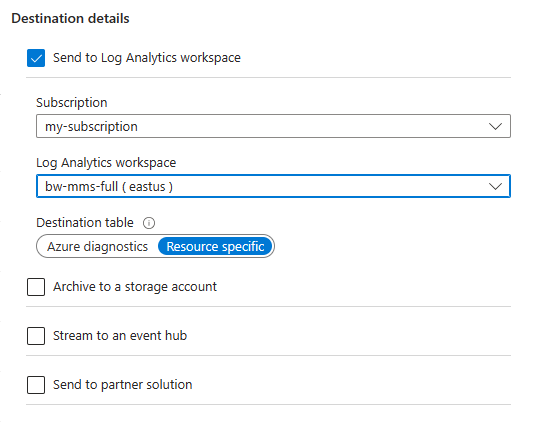

Select the collection mode

Most Azure resources write data to the workspace in either Azure diagnostics or resource-specific mode without giving you a choice. For more information, see Common and service-specific schemas for Azure resource logs.

All Azure services will eventually use the resource-specific mode. As part of this transition, some resources allow you to select a mode in the diagnostic setting. Specify resource-specific mode for any new diagnostic settings because this mode makes the data easier to manage. It also might help you avoid complex migrations later.

Note

For an example that sets the collection mode by using an Azure Resource Manager template, see Resource Manager template samples for diagnostic settings in Azure Monitor.
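In the diagnostic setting itself, the collection mode corresponds to the `logAnalyticsDestinationType` property. As we understand the REST reference, `"Dedicated"` selects resource-specific tables, and leaving the property unset keeps the default Azure diagnostics mode. The following Python sketch sets that property on an existing request body. It applies only to resources that let you choose a mode. The function name is hypothetical.

```python
import copy


def with_collection_mode(body: dict, resource_specific: bool) -> dict:
    """Return a copy of a diagnostic-setting body with the collection mode set.

    "Dedicated" selects resource-specific tables; removing the property falls
    back to the default Azure diagnostics mode (AzureDiagnostics table).
    """
    updated = copy.deepcopy(body)  # leave the caller's body untouched
    props = updated.setdefault("properties", {})
    if resource_specific:
        props["logAnalyticsDestinationType"] = "Dedicated"
    else:
        props.pop("logAnalyticsDestinationType", None)
    return updated


if __name__ == "__main__":
    body = {"properties": {"workspaceId": "<workspace-resource-id>",
                           "logs": [{"categoryGroup": "allLogs", "enabled": True}]}}
    print(with_collection_mode(body, resource_specific=True))
```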

You can change an existing diagnostic setting to resource-specific mode. In this case, data that was already collected remains in the AzureDiagnostics table until it's removed according to the retention setting for the workspace. New data is collected in the dedicated table. Use the Kusto Query Language (KQL) union operator to query data across both tables.
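As an illustration, the following Python helper builds a KQL query that covers both the older AzureDiagnostics rows for one category and the new dedicated table. The category, table name, and lookback window are placeholders. The helper is a sketch, not an official utility. The resulting string could be run in Log Analytics, or it could be passed to a client such as `LogsQueryClient.query_workspace` from the `azure-monitor-query` package.

```python
def build_union_query(category: str, dedicated_table: str, lookback: str = "7d") -> str:
    """Build a KQL query spanning legacy AzureDiagnostics rows and a dedicated table.

    category and dedicated_table are placeholders; substitute the log category
    and resource-specific table used by your service.
    """
    return (
        # withsource adds a column that records which table each row came from.
        "union withsource=SourceTable\n"
        f'    (AzureDiagnostics | where Category == "{category}"),\n'
        f"    {dedicated_table}\n"
        f"| where TimeGenerated > ago({lookback})\n"
        "| sort by TimeGenerated desc"
    )


if __name__ == "__main__":
    print(build_union_query("ExampleCategory", "ExampleCategoryTable"))
```

The two tables have different columns. By default, union returns the combined set of columns, so you might want to add a `project` step that keeps only the columns you need.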

Continue to watch the Azure Updates blog for announcements about Azure services that support resource-specific mode.

Categories and schemas

All resource logs share a common top-level schema. Each service defines unique properties for its own logs. See Common and service-specific schemas for Azure resource logs for the common schema and the schemas for each service. See Supported Resource log categories for Azure Monitor for the different categories supported by each service and links to the schemas for each category.
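The following Python sketch shows how a consumer might treat the split between common and service-specific fields. It separates a record's top-level fields from its service-specific `properties` object. The list of required top-level fields (`time`, `resourceId`, `operationName`, `category`) is an assumption based on our reading of the common schema and should be checked against the schema reference. The function name is hypothetical.

```python
# Top-level fields assumed to be mandatory in the common schema; confirm
# against "Common and service-specific schemas for Azure resource logs".
REQUIRED_TOP_LEVEL = ("time", "resourceId", "operationName", "category")


def split_record(record: dict) -> tuple[dict, dict]:
    """Separate a resource log record into common top-level fields and
    service-specific properties.

    Raises ValueError if any assumed-mandatory top-level field is missing.
    """
    missing = [f for f in REQUIRED_TOP_LEVEL if f not in record]
    if missing:
        raise ValueError(f"missing top-level fields: {missing}")
    # Everything except "properties" belongs to the shared top-level schema.
    common = {k: v for k, v in record.items() if k != "properties"}
    # Each service defines its own fields inside "properties".
    service_specific = record.get("properties", {})
    return common, service_specific
```

Because the top-level fields are shared, code that depends only on `common` works for any service. Only code that reads `service_specific` needs that service's schema.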