Display call transcription state on the client

Note

Call transcription state is only available from Teams meetings. Currently there's no support for call transcription state for Azure Communication Services to Azure Communication Services calls.

When you use call transcription, you may want to let your users know that a call is being transcribed. Here's how.

Prerequisites

- An Azure account with an active subscription. Create an account for free.

- A deployed Communication Services resource. Create a Communication Services resource.

- A user access token to enable the calling client. For more information, see Create and manage access tokens.

- Optional: Complete the quickstart to add voice calling to your application.

Install the SDK

Locate your project-level build.gradle file and add mavenCentral() to the list of repositories under buildscript and allprojects:

buildscript {
    repositories {
        ...
        mavenCentral()
        ...
    }
}

allprojects {
    repositories {
        ...
        mavenCentral()
        ...
    }
}

Then, in your module-level build.gradle file, add the following lines to the dependencies section:

dependencies {
    ...
    implementation 'com.azure.android:azure-communication-calling:1.0.0'
    ...
}

Initialize the required objects

To create a CallAgent instance, call the createCallAgent method on a CallClient instance. This method asynchronously returns a CallAgent object.

The createCallAgent method takes an Android Context and a CommunicationTokenCredential as arguments; the credential encapsulates an access token.

To access DeviceManager, you must create a CallAgent instance first. Then you can use the CallClient.getDeviceManager method to get DeviceManager.

String userToken = "<user token>";
CallClient callClient = new CallClient();
CommunicationTokenCredential tokenCredential = new CommunicationTokenCredential(userToken);
android.content.Context appContext = this.getApplicationContext(); // From within an activity, for instance
CallAgent callAgent = callClient.createCallAgent(appContext, tokenCredential).get();
DeviceManager deviceManager = callClient.getDeviceManager(appContext).get();

To set a display name for the caller, use this alternative method:

String userToken = "<user token>";
CallClient callClient = new CallClient();
CommunicationTokenCredential tokenCredential = new CommunicationTokenCredential(userToken);
android.content.Context appContext = this.getApplicationContext(); // From within an activity, for instance
CallAgentOptions callAgentOptions = new CallAgentOptions();
callAgentOptions.setDisplayName("Alice Bob");
// Create the CallAgent first; DeviceManager is only available after that
CallAgent callAgent = callClient.createCallAgent(appContext, tokenCredential, callAgentOptions).get();
DeviceManager deviceManager = callClient.getDeviceManager(appContext).get();
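The snippets above call get(), which blocks the calling thread until the CallAgent is ready; on Android, doing that on the main thread can stall the UI. If the future returned by createCallAgent supports CompletableFuture-style chaining (an assumption to confirm in the SDK reference), you can continue asynchronously instead. The following is a minimal sketch on a plain JVM using java.util.concurrent.CompletableFuture; createCallAgentAsync and initialize are hypothetical names, and the "agent" is only a placeholder string, not a real CallAgent.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;

public class CallAgentInitSketch {

    // Hypothetical stand-in for callClient.createCallAgent(appContext, tokenCredential).
    // It completes on a background thread with a placeholder string, not a real CallAgent.
    static CompletableFuture<String> createCallAgentAsync(String userToken) {
        return CompletableFuture.supplyAsync(() -> {
            if (userToken == null || userToken.isEmpty()) {
                throw new IllegalArgumentException("A user access token is required");
            }
            return "CallAgent(for token of length " + userToken.length() + ")";
        });
    }

    // Chains follow-up work onto the future instead of blocking with get().
    static CompletableFuture<String> initialize(String userToken) {
        return createCallAgentAsync(userToken)
                .thenApply(agent -> "ready: " + agent)
                .exceptionally(error -> {
                    // Failures thrown inside supplyAsync arrive wrapped in a CompletionException.
                    Throwable cause = (error instanceof CompletionException && error.getCause() != null)
                            ? error.getCause()
                            : error;
                    return "failed: " + cause.getMessage();
                });
    }

    public static void main(String[] args) {
        // join() is acceptable here only because main is not a UI thread.
        // In an activity, react in a callback such as thenAccept and post
        // any view updates to the UI thread.
        System.out.println(initialize("<user token>").join());
        System.out.println(initialize("").join());
    }
}
```

Chaining keeps error handling next to the success path, so a missing or invalid token surfaces as a value you can show to the user rather than an exception thrown from get().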

Warning

Up to version 1.1.0 and beta release version 1.1.0-beta.1 of the Azure Communication Services Calling Android SDK, isTranscriptionActive and addOnIsTranscriptionActiveChangedListener are part of the Call object. In newer beta releases, those APIs have moved to an extended feature of Call, as described below.

Call transcription is an extended feature of the core Call object. You first need to obtain the transcription feature object:

TranscriptionCallFeature callTranscriptionFeature = call.feature(Features.TRANSCRIPTION);

Then, to check whether the call is being transcribed, inspect the isTranscriptionActive property of callTranscriptionFeature. It returns a boolean.

boolean isTranscriptionActive = callTranscriptionFeature.isTranscriptionActive();
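To let users know that a call is being transcribed, you typically map this boolean to something visible in your UI, such as a banner. The following minimal sketch shows one way to do that on a plain JVM; TranscriptionBanner, messageFor, and the message text are hypothetical names chosen for illustration, not part of the SDK.

```java
import java.util.Optional;

public final class TranscriptionBanner {

    // Hypothetical user-facing text; adjust it to your app's wording and localization.
    static final String ACTIVE_MESSAGE = "This call is being transcribed.";

    private TranscriptionBanner() {
    }

    // Returns the banner text to show, or an empty Optional when the banner should be hidden.
    static Optional<String> messageFor(boolean isTranscriptionActive) {
        return isTranscriptionActive ? Optional.of(ACTIVE_MESSAGE) : Optional.empty();
    }

    public static void main(String[] args) {
        // In the app, pass callTranscriptionFeature.isTranscriptionActive() instead of a literal.
        System.out.println(messageFor(true).orElse("(no banner)"));
        System.out.println(messageFor(false).orElse("(no banner)"));
    }
}
```

Keeping the mapping in a pure function makes it easy to reuse from both the initial check and the change listener.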

You can also subscribe to changes in transcription:

private void handleCallOnIsTranscriptionChanged(PropertyChangedEvent args) {
    boolean isTranscriptionActive = callTranscriptionFeature.isTranscriptionActive();
    // Show or hide your transcription indicator here
}

// Register the handler by using a method reference
callTranscriptionFeature.addOnIsTranscriptionActiveChangedListener(this::handleCallOnIsTranscriptionChanged);
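The listener pattern above can be exercised without a live call. The following minimal sketch runs on a plain JVM and uses hypothetical stand-ins (StateChangedListener, FakeTranscriptionFeature, BannerView) that only mimic the shape of addOnIsTranscriptionActiveChangedListener; they are not SDK types. In the real SDK the service changes the transcription state, whereas here setTranscriptionActive simulates it.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

public class TranscriptionListenerSketch {

    // Hypothetical stand-in for the SDK's property-changed callback.
    interface StateChangedListener {
        void onStateChanged();
    }

    // Hypothetical stand-in for TranscriptionCallFeature.
    static class FakeTranscriptionFeature {
        private final List<StateChangedListener> listeners = new CopyOnWriteArrayList<>();
        private volatile boolean transcriptionActive;

        boolean isTranscriptionActive() {
            return transcriptionActive;
        }

        void addListener(StateChangedListener listener) {
            listeners.add(listener);
        }

        void removeListener(StateChangedListener listener) {
            listeners.remove(listener);
        }

        // Simulates a service-side change and notifies listeners only when the value changes.
        void setTranscriptionActive(boolean active) {
            if (transcriptionActive == active) {
                return;
            }
            transcriptionActive = active;
            for (StateChangedListener listener : listeners) {
                listener.onStateChanged();
            }
        }
    }

    // Records what a UI would display, so the behavior is easy to inspect.
    static class BannerView {
        final List<String> shown = new CopyOnWriteArrayList<>();
    }

    // Like the handler above, read the current state inside the callback.
    static StateChangedListener bindBanner(FakeTranscriptionFeature feature, BannerView view) {
        return () -> view.shown.add(feature.isTranscriptionActive() ? "Transcribing" : "");
    }

    public static void main(String[] args) {
        FakeTranscriptionFeature feature = new FakeTranscriptionFeature();
        BannerView view = new BannerView();
        StateChangedListener listener = bindBanner(feature, view);
        feature.addListener(listener);

        feature.setTranscriptionActive(true);  // state changes: listener runs
        feature.setTranscriptionActive(true);  // no change: listener is not called
        feature.setTranscriptionActive(false); // state changes: listener runs

        feature.removeListener(listener);
        feature.setTranscriptionActive(true);  // listener removed: not called

        System.out.println(view.shown);
    }
}
```

In a real app, SDK callbacks may not arrive on the main thread, so post view updates to the UI thread (for example with Activity.runOnUiThread), and unregister the listener when the screen goes away; check the SDK reference for the matching remove method.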

Set up your system

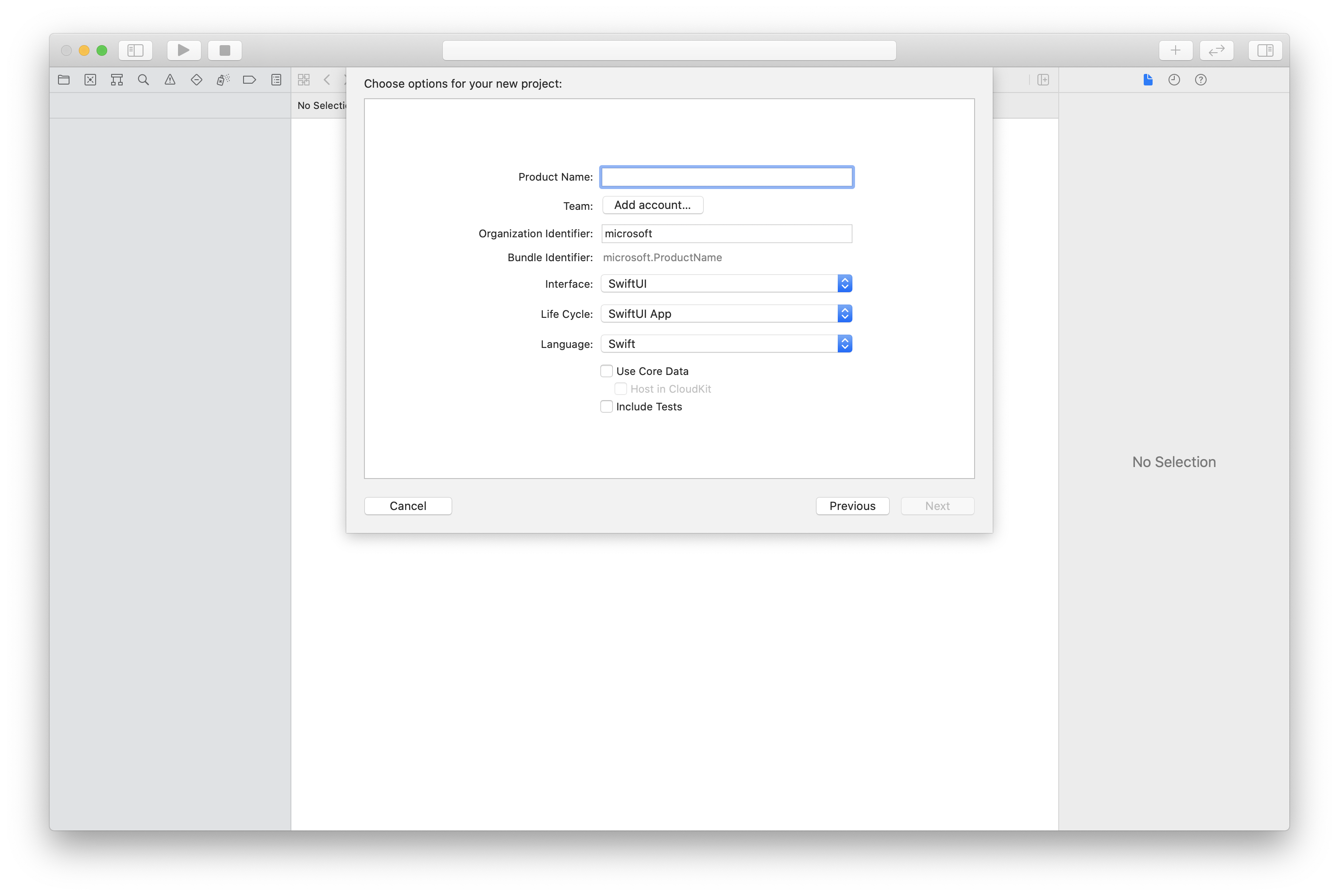

Create the Xcode project

In Xcode, create a new iOS project and select the Single View App template. This quickstart uses the SwiftUI framework, so you should set Language to Swift and set Interface to SwiftUI.

You're not going to create tests during this quickstart. Feel free to clear the Include Tests checkbox.

Install the package and dependencies by using CocoaPods

Create a Podfile for your application, like this example:

platform :ios, '13.0'
use_frameworks!

target 'AzureCommunicationCallingSample' do
  pod 'AzureCommunicationCalling', '~> 1.0.0'
end

Run pod install.

Open .xcworkspace by using Xcode.

Request access to the microphone

To access the device's microphone, you need to update your app's information property list by using NSMicrophoneUsageDescription. You set the associated value to a string that will be included in the dialog that the system uses to request access from the user.

Right-click the Info.plist entry of the project tree, and then select Open As > Source Code. Add the following lines in the top-level <dict> section, and then save the file.

<key>NSMicrophoneUsageDescription</key>
<string>Need microphone access for VOIP calling.</string>

Set up the app framework

Open your project's ContentView.swift file. Add an import declaration to the top of the file to import the AzureCommunicationCalling library. In addition, import AVFoundation. You'll need it for audio permission requests in the code.

import AzureCommunicationCalling
import AVFoundation

Initialize CallAgent

To create a CallAgent instance from CallClient, you have to use a callClient.createCallAgent method that asynchronously returns a CallAgent object after it's initialized.

To create a call agent, you need a CommunicationTokenCredential object. Create one like this:

import AzureCommunication

let tokenString = "token_string"
var userCredential: CommunicationTokenCredential?
do {
    let options = CommunicationTokenRefreshOptions(initialToken: tokenString, refreshProactively: true, tokenRefresher: self.fetchTokenSync)
    userCredential = try CommunicationTokenCredential(withOptions: options)
} catch {
    print("Couldn't create the credential object: \(error)")
    return
}

// tokenProvider is your own object (Contoso's, in this example) that fetches a new token
public func fetchTokenSync(then onCompletion: TokenRefreshOnCompletion) {
    let newToken = self.tokenProvider!.fetchNewToken()
    onCompletion(newToken, nil)
}

Pass the CommunicationTokenCredential object that you created to the createCallAgent method of CallClient, and set the display name:

self.callClient = CallClient()
let callAgentOptions = CallAgentOptions()
callAgentOptions.displayName = "iOS Azure Communication Services User"
self.callClient!.createCallAgent(userCredential: userCredential!,
                                 options: callAgentOptions) { (callAgent, error) in
    if error == nil {
        print("Create agent succeeded")
        self.callAgent = callAgent
    } else {
        print("Create agent failed")
    }
}

Warning

Up to version 1.1.0 and beta release version 1.1.0-beta.1 of the Azure Communication Services Calling iOS SDK, isTranscriptionActive is part of the Call object and didChangeTranscriptionState is part of the CallDelegate delegate. In newer beta releases, those APIs have moved to an extended feature of Call, as described below.

Call transcription is an extended feature of the core Call object. You first need to obtain the transcription feature object:

let callTranscriptionFeature = call.feature(Features.transcription)

Then, to check whether the call is being transcribed, inspect the isTranscriptionActive property of callTranscriptionFeature. It returns Bool.

let isTranscriptionActive = callTranscriptionFeature.isTranscriptionActive

You can also subscribe to transcription changes by implementing the TranscriptionCallFeatureDelegate delegate in your class and handling the didChangeTranscriptionState event:

callTranscriptionFeature.delegate = self

// didChangeTranscriptionState is a member of TranscriptionCallFeatureDelegate
public func transcriptionCallFeature(_ transcriptionCallFeature: TranscriptionCallFeature, didChangeTranscriptionState args: PropertyChangedEventArgs) {
    let isTranscriptionActive = transcriptionCallFeature.isTranscriptionActive
}

Set up your system

Create the Visual Studio project

For a UWP app, in Visual Studio 2022, create a new Blank App (Universal Windows) project. After you enter the project name, feel free to choose any Windows SDK later than 10.0.17763.0.

For a WinUI 3 app, create a new project with the Blank App, Packaged (WinUI 3 in Desktop) template to set up a single-page WinUI 3 app. Windows App SDK version 1.3 or later is required.

Install the package and dependencies by using NuGet Package Manager

The Calling SDK APIs and libraries are publicly available via a NuGet package.

The following steps show how to find, download, and install the Calling SDK NuGet package:

- Open NuGet Package Manager by selecting Tools > NuGet Package Manager > Manage NuGet Packages for Solution.
- Select Browse, and then enter Azure.Communication.Calling.WindowsClient in the search box.
- Make sure that the Include prerelease check box is selected.
- Select the Azure.Communication.Calling.WindowsClient package, and then select Azure.Communication.Calling.WindowsClient 1.4.0-beta.1 or a newer version.
- Select the checkbox that corresponds to the Communication Services project on the right-side tab.
- Select the Install button.

Call transcription is an extended feature of the core Call object. You first need to obtain the transcription feature object:

TranscriptionCallFeature transcriptionFeature = call.Features.Transcription;

Then, to check whether the call is being transcribed, inspect the IsTranscriptionActive property of transcriptionFeature. It returns a bool.

bool isTranscriptionActive = transcriptionFeature.IsTranscriptionActive;

You can also subscribe to changes in transcription:

private void Call__OnIsTranscriptionActiveChanged(object sender, PropertyChangedEventArgs args)
{
    bool isTranscriptionActive = transcriptionFeature.IsTranscriptionActive;
}

transcriptionFeature.IsTranscriptionActiveChanged += Call__OnIsTranscriptionActiveChanged;
