Work with registered models in Azure Machine Learning

APPLIES TO:

Azure CLI ml extension v2 (current)

Python SDK azure-ai-ml v2 (current)

In this article, you learn to register and work with models in Azure Machine Learning by using:

- The Azure Machine Learning studio UI.

- The Azure Machine Learning V2 CLI.

- The Python Azure Machine Learning V2 SDK.

You learn how to:

- Create registered models in the model registry from local files, datastores, or job outputs.

- Work with different types of models, such as custom, MLflow, and Triton.

- Use models as inputs or outputs in training jobs.

- Manage the lifecycle of model assets.

Model registration

Model registration lets you store and version your models in your workspace in the Azure cloud. The model registry helps you organize and keep track of your trained models. You can register models as assets in Azure Machine Learning by using the Azure CLI, the Python SDK, or the Machine Learning studio UI.

Supported paths

To register a model, you need to specify a path that points to the data or job location. The following table shows the various data locations Azure Machine Learning supports, and the syntax for the path parameter:

| Location | Syntax |
|---|---|
| Local computer | <model-folder>/<model-filename> |
| Azure Machine Learning datastore | azureml://datastores/<datastore-name>/paths/<path_on_datastore> |
| Azure Machine Learning job | azureml://jobs/<job-name>/outputs/<output-name>/paths/<path-to-model-relative-to-the-named-output-location> |
| MLflow job | runs:/<run-id>/<path-to-model-relative-to-the-root-of-the-artifact-location> |
| Model asset in a Machine Learning workspace | azureml:<model-name>:<version> |
| Model asset in a Machine Learning registry | azureml://registries/<registry-name>/models/<model-name>/versions/<version> |
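To make the syntax column concrete, the following Python sketch classifies a model path string into one of the locations in the table. It uses only the standard library. The function name classify_model_path and its category labels are hypothetical. The regular expressions are a simplified reading of the table, not the validation that Azure Machine Learning itself performs.

```python
import re

# Hypothetical labels; each pattern is a simplified reading of one row of the table.
# The job pattern also accepts a bare named output (no /paths/ suffix).
_PATTERNS = [
    ("registry_asset", re.compile(r"^azureml://registries/[^/]+/models/[^/]+/versions/[^/]+$")),
    ("job_output", re.compile(r"^azureml://jobs/[^/]+/outputs/[^/]+(/paths/.+)?$")),
    ("datastore", re.compile(r"^azureml://datastores/[^/]+/paths/.+$")),
    ("mlflow_run", re.compile(r"^runs:/[^/]+/.+$")),
    ("workspace_asset", re.compile(r"^azureml:[^:/]+:[^:/]+$")),
]


def classify_model_path(path: str) -> str:
    """Return a label for the kind of location a model path refers to."""
    for label, pattern in _PATTERNS:
        if pattern.match(path):
            return label
    if "://" in path or path.startswith(("azureml:", "runs:")):
        raise ValueError(f"Unrecognized model URI: {path!r}")
    # No recognized scheme: treat it as a path on the local computer.
    return "local"


print(classify_model_path("runs:/my_run_0000000000/model/"))  # mlflow_run
```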

Supported modes

When you use models for inputs or outputs, you can specify one of the following modes. For example, you can specify whether the model should be read-only mounted or downloaded to the compute target.

- ro_mount: Mount the data to the compute target as read-only.

- rw_mount: Mount the data to the compute target as read-write.

- download: Download the data to the compute target.

- upload: Upload the data from the compute target.

- direct: Pass in the URI as a string.

The following table shows the available mode options for different model type inputs and outputs.

| Type | upload | download | ro_mount | rw_mount | direct |
|---|---|---|---|---|---|
| custom file input | | | | | |
| custom folder input | | ✓ | ✓ | | ✓ |
| mlflow input | | ✓ | ✓ | | |
| custom file output | ✓ | | | ✓ | ✓ |
| custom folder output | ✓ | | | ✓ | ✓ |
| mlflow output | ✓ | | | ✓ | ✓ |
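The following sketch encodes the table as a Python dictionary, so you can check a mode before you write it into a job specification. The row labels (custom_file, custom_folder, mlflow) and the check_mode helper are hypothetical names chosen for this illustration. Azure Machine Learning performs its own validation when you submit a job.

```python
# Allowed modes per model type and direction, transcribed from the table above.
# The row labels are hypothetical names, not schema values.
ALL_MODES = ("upload", "download", "ro_mount", "rw_mount", "direct")

SUPPORTED_MODES = {
    ("custom_file", "input"): frozenset(),
    ("custom_folder", "input"): frozenset({"download", "ro_mount", "direct"}),
    ("mlflow", "input"): frozenset({"download", "ro_mount"}),
    ("custom_file", "output"): frozenset({"upload", "rw_mount", "direct"}),
    ("custom_folder", "output"): frozenset({"upload", "rw_mount", "direct"}),
    ("mlflow", "output"): frozenset({"upload", "rw_mount", "direct"}),
}


def check_mode(kind: str, direction: str, mode: str) -> None:
    """Raise ValueError if the mode isn't listed for this row of the table."""
    if mode not in ALL_MODES:
        raise ValueError(f"Unknown mode: {mode!r}")
    allowed = SUPPORTED_MODES[(kind, direction)]
    if mode not in allowed:
        raise ValueError(
            f"{mode!r} isn't supported for {kind} {direction}; allowed: {sorted(allowed) or 'none'}"
        )


check_mode("mlflow", "input", "ro_mount")  # passes silently
```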

Prerequisites

- An Azure subscription with a free or paid version of Azure Machine Learning. If you don't have an Azure subscription, create a free account before you begin.

- An Azure Machine Learning workspace.

To run the code samples in this article and work with the Azure Machine Learning V2 CLI or Python Azure Machine Learning V2 SDK, you also need:

- Azure CLI version 2.38.0 or later installed.

- Version 2 of the ml extension installed. To install it, run the following command. For more information, see Install, set up, and use the CLI (v2).

az extension add -n ml

Note

V2 provides full backward compatibility. You can still use model assets from the v1 SDK or CLI. All models registered with the v1 CLI or SDK are assigned the type custom.

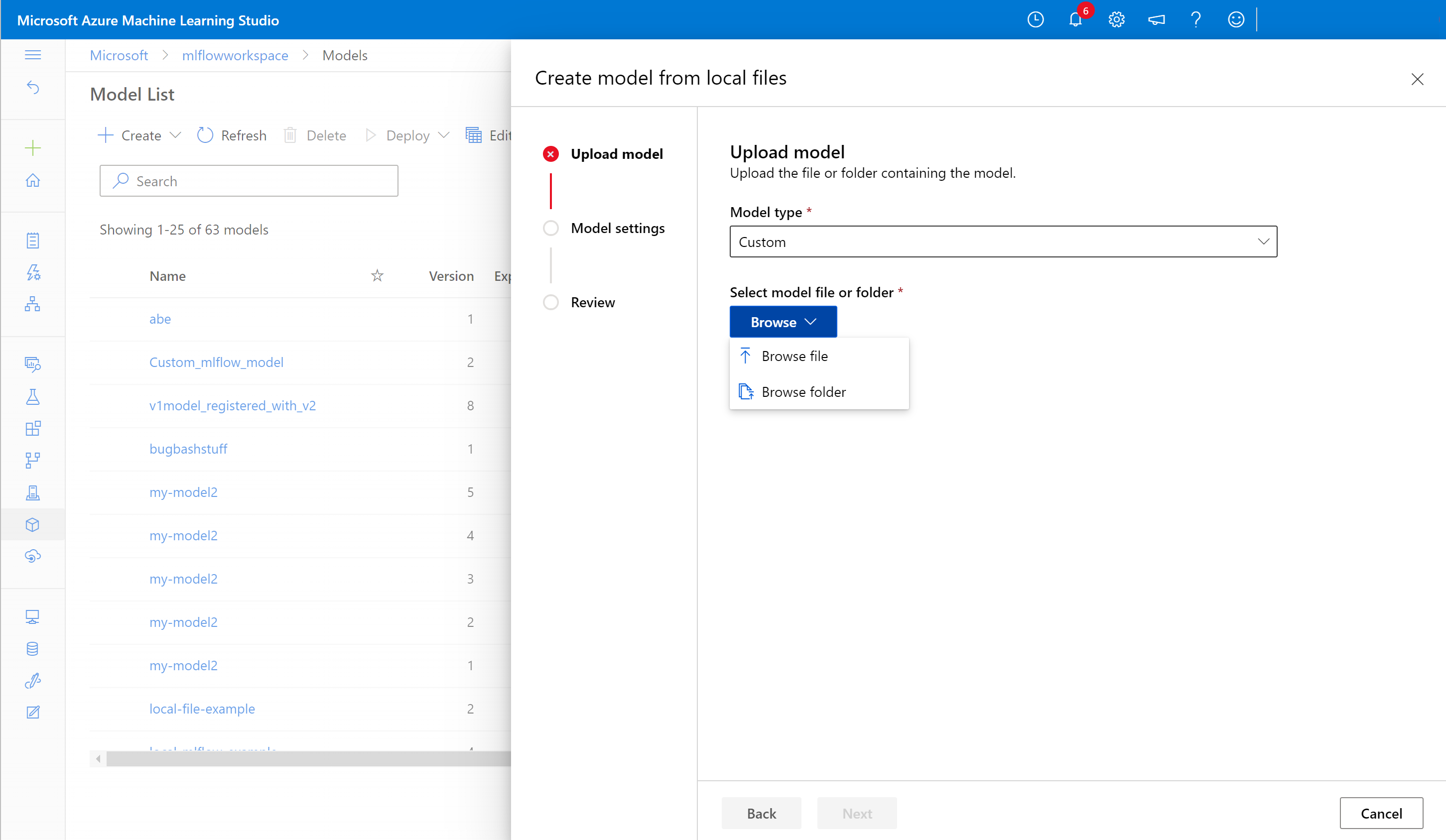

Register a model by using the studio UI

To register a model by using the Azure Machine Learning studio UI:

In your workspace in the studio, select Models from the left navigation.

On the Model List page, select Register, and select one of the following locations from the dropdown list:

- From local files

- From a job output

- From datastore

- From local files (based on framework)

On the first Register model screen:

- Navigate to the local file, datastore, or job output for your model.

- Select the input model type: MLflow, Triton, or Unspecified type.

On the Model settings screen, provide a name and other optional settings for your registered model, and select Next.

On the Review screen, review the configuration and then select Register.

Register a model by using the Azure CLI or Python SDK

The following code snippets cover how to register a model as an asset in Azure Machine Learning by using the Azure CLI or Python SDK. These snippets use custom and mlflow model types.

- The custom type refers to a model file or folder trained with a custom standard that Azure Machine Learning doesn't currently support.

- The mlflow type refers to a model trained with MLflow. MLflow-trained models are in a folder that contains the MLmodel file, the model file, the conda dependencies file, and the requirements.txt file.
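An MLflow model folder is identified by its MLmodel file. Based on that, you can sketch a simple heuristic that suggests a type value for a local model path. The guess_model_type helper is hypothetical. It only checks that the MLmodel file exists and doesn't validate its contents. It returns the mlflow_model and custom_model type names that this article's job YAML examples use.

```python
from pathlib import Path


def guess_model_type(model_path: str) -> str:
    """Hypothetical heuristic: suggest a model type value for a local path.

    A folder that contains an MLmodel file is treated as an MLflow model;
    anything else (a single file or another folder) as a custom model.
    """
    path = Path(model_path)
    if path.is_dir() and (path / "MLmodel").is_file():
        return "mlflow_model"
    return "custom_model"
```

A real MLflow model folder also holds the model file and its dependency files, so treat this check as a quick hint rather than a guarantee.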

Tip

You can follow along with the Python versions of the following samples by running the model.ipynb notebook in the azureml-examples repository.

Connect to your workspace

The workspace is the top-level resource for Azure Machine Learning, providing a centralized place to work with all the artifacts you create when you use Azure Machine Learning. In this section, you connect to your Azure Machine Learning workspace to create the registered model.

Sign in to Azure by running az login and following the prompts.

In the following commands, replace the <subscription-id>, <workspace-name>, <resource-group>, and <location> placeholders with the values for your environment.

az account set --subscription <subscription-id>
az configure --defaults workspace=<workspace-name> group=<resource-group> location=<location>

Create the registered model

You can create a registered model from a model that's:

- Located on your local computer.

- Located on an Azure Machine Learning datastore.

- Output from an Azure Machine Learning job.

Local file or folder

Create a YAML file <file-name>.yml. In the file, provide a name for your registered model, a path to the local model file, and a description. For example:

```yaml
$schema: https://azuremlschemas.azureedge.net/latest/model.schema.json
name: local-file-example
path: mlflow-model/model.pkl
description: Model created from local file.
```

Run the following command, using the name of your YAML file:

az ml model create -f <file-name>.yml

For a complete example, see the model YAML.
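If you generate model specifications from a script, a minimal sketch like the following writes a YAML file with the same four fields as the example above. The write_model_spec helper is hypothetical. It writes values as plain YAML scalars without quoting, so a value that contains a colon followed by a space, or that starts with #, would need quoting.

```python
from pathlib import Path

SCHEMA_URL = "https://azuremlschemas.azureedge.net/latest/model.schema.json"


def write_model_spec(spec_file: str, name: str, path: str, description: str) -> str:
    """Write a minimal model YAML spec and return its text (hypothetical helper).

    Values are written as plain YAML scalars without quoting or escaping.
    """
    text = (
        f"$schema: {SCHEMA_URL}\n"
        f"name: {name}\n"
        f"path: {path}\n"
        f"description: {description}\n"
    )
    Path(spec_file).write_text(text, encoding="utf-8")
    return text
```

For example, write_model_spec("local-file-example.yml", "local-file-example", "mlflow-model/model.pkl", "Model created from local file.") produces the YAML shown earlier. You then register the model with az ml model create -f local-file-example.yml.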

Datastore

You can create a model from a cloud path by using any of the supported URI formats.

The following example uses the shorthand azureml scheme for pointing to a path on the datastore by using the syntax azureml://datastores/<datastore-name>/paths/<path_on_datastore>.

az ml model create --name my-model --version 1 --path azureml://datastores/myblobstore/paths/models/cifar10/cifar.pt

For a complete example, see the CLI reference.
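The following sketch splits a datastore URI into its datastore name and path. It also assembles the same az ml model create invocation as an argument list, which you could pass to subprocess.run without a shell. Both helper names are hypothetical, and the code only builds the command; it doesn't run it.

```python
import re
import shlex
from typing import List, Tuple

# Pattern for azureml://datastores/<datastore-name>/paths/<path_on_datastore>.
_DATASTORE_URI = re.compile(r"^azureml://datastores/(?P<datastore>[^/]+)/paths/(?P<path>.+)$")


def parse_datastore_uri(uri: str) -> Tuple[str, str]:
    """Split a datastore URI into (datastore name, path on the datastore)."""
    match = _DATASTORE_URI.match(uri)
    if match is None:
        raise ValueError(f"Not an azureml://datastores URI: {uri!r}")
    return match.group("datastore"), match.group("path")


def model_create_args(name: str, version: int, path: str) -> List[str]:
    """Build (but don't run) the az ml model create argument list."""
    parse_datastore_uri(path)  # fail early on a malformed datastore URI
    return ["az", "ml", "model", "create", "--name", name, "--version", str(version), "--path", path]


args = model_create_args("my-model", 1, "azureml://datastores/myblobstore/paths/models/cifar10/cifar.pt")
print(shlex.join(args))
# az ml model create --name my-model --version 1 --path azureml://datastores/myblobstore/paths/models/cifar10/cifar.pt
```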

Job output

If your model data comes from a job output, you have two options for specifying the model path. You can use the MLflow runs: URI format or the azureml://jobs URI format.

Note

The artifacts reserved keyword represents output from the default artifact location.

MLflow runs: URI format

This option is optimized for MLflow users, who are probably already familiar with the MLflow runs: URI format. This option creates a model from artifacts in the default artifact location, where all MLflow-logged models and artifacts are located. This option also establishes a lineage between a registered model and the run the model came from.

Format:

runs:/<run-id>/<path-to-model-relative-to-the-root-of-the-artifact-location>

Example:

az ml model create --name my-registered-model --version 1 --path runs:/my_run_0000000000/model/ --type mlflow_model
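The default artifact location of a job corresponds to the MLflow runs: URI, as described for the azureml://jobs format. Because of that, you can translate one form into the other. The following sketch does that string conversion only. The function name is hypothetical, and it doesn't check that the run or the path exists.

```python
def runs_uri_to_job_uri(runs_uri: str) -> str:
    """Convert runs:/<run-id>/<path> to the equivalent azureml://jobs URI
    for the default artifact location (string manipulation only)."""
    prefix = "runs:/"
    if not runs_uri.startswith(prefix):
        raise ValueError(f"Not an MLflow runs: URI: {runs_uri!r}")
    run_id, sep, model_path = runs_uri[len(prefix):].partition("/")
    if not run_id or not sep or not model_path:
        raise ValueError(f"Expected runs:/<run-id>/<path>, got {runs_uri!r}")
    return f"azureml://jobs/{run_id}/outputs/artifacts/paths/{model_path}"


print(runs_uri_to_job_uri("runs:/my_run_0000000000/model/"))
# azureml://jobs/my_run_0000000000/outputs/artifacts/paths/model/
```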

azureml://jobs URI format

The azureml://jobs reference URI option lets you register a model from artifacts in any of the job's output paths. This format aligns with the azureml://datastores reference URI format, and also supports referencing artifacts from named outputs other than the default artifact location.

If you didn't directly register your model within the training script by using MLflow, you can use this option to establish a lineage between a registered model and the job it was trained from.

Format:

azureml://jobs/<run-id>/outputs/<output-name>/paths/<path-to-model>

- Default artifact location: azureml://jobs/<run-id>/outputs/artifacts/paths/<path-to-model>/. This location is equivalent to MLflow runs:/<run-id>/<model>.

- Named output folder: azureml://jobs/<run-id>/outputs/<named-output-folder>

- Specific file within the named output folder: azureml://jobs/<run-id>/outputs/<named-output-folder>/paths/<model-filename>

- Specific folder path within the named output folder: azureml://jobs/<run-id>/outputs/<named-output-folder>/paths/<model-folder-name>

Example:

Save a model from the default artifact location (the artifacts output):

az ml model create --name run-model-example --version 1 --path azureml://jobs/my_run_0000000000/outputs/artifacts/paths/model/

For a complete example, see the CLI reference.
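The azureml://jobs variants listed for this format differ only in the output name and the optional /paths/ suffix. The following hypothetical helper builds each of them. It uses artifacts as the default output name, following the description of the default artifact location.

```python
from typing import Optional


def job_output_uri(run_id: str, output: str = "artifacts", path: Optional[str] = None) -> str:
    """Build an azureml://jobs URI (hypothetical helper).

    output defaults to "artifacts", the default artifact location; pass a named
    output to target that output, and path to point at a file or folder inside it.
    """
    uri = f"azureml://jobs/{run_id}/outputs/{output}"
    if path:
        uri += f"/paths/{path}"
    return uri


print(job_output_uri("my_run_0000000000", path="model/"))
# azureml://jobs/my_run_0000000000/outputs/artifacts/paths/model/
```

With a named output, for example, job_output_uri("<run-id>", "<named-output-folder>") targets the whole folder. Adding a path argument targets a file or subfolder within it.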

Use models for training

The v2 Azure CLI and Python SDK also let you use models as inputs or outputs in training jobs.

Use a model as input in a training job

Create a job specification YAML file, <file-name>.yml. In the inputs section of the job, specify:

- The model type, which can be mlflow_model, custom_model, or triton_model.

- The path where your model is located, which can be any of the paths listed in the comment of the following example.

```yaml
$schema: https://azuremlschemas.azureedge.net/latest/commandJob.schema.json

# Possible Paths for models:
# AzureML Datastore: azureml://datastores/<datastore-name>/paths/<path_on_datastore>
# MLflow run: runs:/<run-id>/<path-to-model-relative-to-the-root-of-the-artifact-location>
# Job: azureml://jobs/<job-name>/outputs/<output-name>/paths/<path-to-model-relative-to-the-named-output-location>
# Model Asset: azureml:<my_model>:<version>

command: |
  ls ${{inputs.my_model}}
inputs:
  my_model:
    type: mlflow_model # List of all model types here: https://learn.microsoft.com/azure/machine-learning/reference-yaml-model#yaml-syntax
    path: ../../assets/model/mlflow-model
environment: azureml://registries/azureml/environments/sklearn-1.5/labels/latest
```

Run the following command, substituting your YAML filename.

az ml job create -f <file-name>.yml

For a complete example, see the model GitHub repo.
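At run time, Azure Machine Learning replaces expressions such as ${{inputs.my_model}} in the command with the location where the input is made available on the compute target, or with the URI itself in direct mode. The following sketch imitates only that string substitution step, so you can see what the command becomes. The render_command helper and the /mnt/inputs/my_model path are hypothetical. The real location depends on the mode and the compute target.

```python
import re
from typing import Mapping

# Matches ${{inputs.<name>}} and ${{outputs.<name>}}.
_BINDING = re.compile(r"\$\{\{\s*(inputs|outputs)\.([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def render_command(command: str, inputs: Mapping[str, str], outputs: Mapping[str, str]) -> str:
    """Replace input/output expressions with resolved locations (illustration only)."""
    def resolve(match: re.Match) -> str:
        table = inputs if match.group(1) == "inputs" else outputs
        return table[match.group(2)]  # KeyError if the job doesn't define the name

    return _BINDING.sub(resolve, command)


# Hypothetical resolved location; the real one is chosen by Azure Machine Learning.
print(render_command("ls ${{inputs.my_model}}", {"my_model": "/mnt/inputs/my_model"}, {}))
# ls /mnt/inputs/my_model
```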

Write a model as output for a job

Your job can write a model to your cloud-based storage by using outputs.

Create a job specification YAML file <file-name>.yml. Populate the outputs section with the output model type and path.

```yaml
$schema: https://azuremlschemas.azureedge.net/latest/commandJob.schema.json

# Possible Paths for Model:
# Local path: mlflow-model/model.pkl
# AzureML Datastore: azureml://datastores/<datastore-name>/paths/<path_on_datastore>
# MLflow run: runs:/<run-id>/<path-to-model-relative-to-the-root-of-the-artifact-location>
# Job: azureml://jobs/<job-name>/outputs/<output-name>/paths/<path-to-model-relative-to-the-named-output-location>
# Model Asset: azureml:<my_model>:<version>

code: src
command: >-
  python hello-model-as-output.py
  --input_model ${{inputs.input_model}}
  --custom_model_output ${{outputs.output_folder}}
inputs:
  input_model:
    type: mlflow_model # mlflow_model,custom_model, triton_model
    path: ../../assets/model/mlflow-model
outputs:
  output_folder:
    type: custom_model # mlflow_model,custom_model, triton_model
environment: azureml://registries/azureml/environments/sklearn-1.5/labels/latest
```

Create a job by using the CLI:

az ml job create --file <file-name>.yml

For a complete example, see the model GitHub repo.
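The job above passes the input model folder and the output folder to hello-model-as-output.py as command-line arguments. The following is a hypothetical stand-in for such a script, not the script from the examples repository. It parses the two arguments and copies the input model into the output folder, which is where a real script would save its trained or modified model.

```python
import argparse
import shutil
from pathlib import Path
from typing import List, Optional


def main(argv: Optional[List[str]] = None) -> Path:
    """Hypothetical stand-in for hello-model-as-output.py."""
    parser = argparse.ArgumentParser(description="Write a model to a job output (sketch).")
    parser.add_argument("--input_model", required=True,
                        help="Folder where the job makes the input model available.")
    parser.add_argument("--custom_model_output", required=True,
                        help="Folder bound to outputs.output_folder.")
    args = parser.parse_args(argv)

    src = Path(args.input_model)
    dst = Path(args.custom_model_output)
    # A real script would train or modify the model here; this sketch copies it
    # unchanged, so the files under dst form the output_folder model.
    shutil.copytree(src, dst, dirs_exist_ok=True)
    return dst
```

In an actual script, you would call main() under an if __name__ == "__main__": guard so that the job's command line reaches argparse.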

Manage models

The Azure CLI and Python SDK also allow you to manage the lifecycle of your Azure Machine Learning model assets.

List

List all the models in your workspace:

az ml model list

List all the model versions under a given name:

az ml model list --name run-model-example

Show

Get the details of a specific model:

az ml model show --name run-model-example --version 1
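When you list the versions under a name, you might want to pick the highest version programmatically. The following sketch assumes two things about the output of az ml model list --name <name>: that you captured it as JSON, and that each entry has a version field containing an integer string. That output shape is an assumption. The sketch compares versions numerically so that 10 sorts after 2. The sample data is hypothetical.

```python
import json


def latest_version(list_json: str) -> str:
    """Return the highest version in the JSON output of az ml model list --name <name>.

    Assumes a JSON array whose entries have a "version" field holding an integer
    string; non-integer versions raise ValueError.
    """
    entries = json.loads(list_json)
    versions = [str(entry["version"]) for entry in entries]
    if not versions:
        raise ValueError("No model versions in the output")
    # Compare numerically: as strings, "2" would sort after "10".
    return max(versions, key=int)


sample = '[{"name": "run-model-example", "version": "2"}, {"name": "run-model-example", "version": "10"}]'
print(latest_version(sample))  # 10
```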

Update

Update mutable properties of a specific model:

Important

For models, only description and tags can be updated. All other properties are immutable, and if you need to change them, you should create a new version of the model.

az ml model update --name run-model-example --version 1 --set description="This is an updated description." --set tags.stage="Prod"
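To guard against trying to change an immutable property, the following hypothetical sketch builds the az ml model update argument list from a dictionary of changes. It rejects any field other than description and tags. If you pass the list to subprocess.run without a shell, the description doesn't need the quotes used in the command above.

```python
from typing import Any, Dict, List


def build_update_args(name: str, version: int, changes: Dict[str, Any]) -> List[str]:
    """Build az ml model update arguments, allowing only description and tags."""
    args = ["az", "ml", "model", "update", "--name", name, "--version", str(version)]
    for field, value in changes.items():
        if field == "description":
            args += ["--set", f"description={value}"]
        elif field == "tags":
            for key, tag_value in value.items():
                args += ["--set", f"tags.{key}={tag_value}"]
        else:
            # Every other property is immutable: register a new version instead.
            raise ValueError(f"{field!r} can't be updated; create a new model version instead")
    return args


print(build_update_args("run-model-example", 1,
                        {"description": "This is an updated description.", "tags": {"stage": "Prod"}}))
```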

Archive

Archiving a model hides it from list queries like az ml model list by default. You can continue to reference and use an archived model in your workflows.

You can archive all versions or only specific versions of a model. If you don't specify a version, all versions of the model are archived. If you create a new model version under an archived model container, the new version is also automatically set as archived.

Archive all versions of a model:

az ml model archive --name run-model-example

Archive a specific model version:

az ml model archive --name run-model-example --version 1
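The following toy, in-memory registry illustrates the archive rules described in this section:

- Archived versions drop out of default listings but can still be referenced.
- Archiving without a version archives every version.
- New versions created under an archived model are archived too.

The ToyRegistry class is hypothetical and doesn't call Azure Machine Learning.

```python
from typing import Dict, List, Optional, Set


class ToyRegistry:
    """In-memory illustration of archive semantics; not the Azure Machine Learning service."""

    def __init__(self) -> None:
        self._versions: Dict[str, Dict[str, bool]] = {}  # name -> {version: archived}
        self._archived_containers: Set[str] = set()

    def create(self, name: str, version: str) -> None:
        # A new version under an archived model container starts out archived.
        self._versions.setdefault(name, {})[version] = name in self._archived_containers

    def archive(self, name: str, version: Optional[str] = None) -> None:
        versions = self._versions[name]
        if version is None:
            # No version given: archive the container and every version in it.
            self._archived_containers.add(name)
            for v in versions:
                versions[v] = True
        elif version in versions:
            versions[version] = True
        else:
            raise KeyError(f"{name}:{version}")

    def list_versions(self, name: str, include_archived: bool = False) -> List[str]:
        # Archived versions are hidden from listings by default.
        return [v for v, archived in self._versions.get(name, {}).items()
                if include_archived or not archived]

    def reference(self, name: str, version: str) -> str:
        # Archived versions can still be referenced by name and version.
        if version not in self._versions.get(name, {}):
            raise KeyError(f"{name}:{version}")
        return f"azureml:{name}:{version}"
```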