APPLIES TO:  Python SDK azure-ai-ml v2 (current)


In this article, learn how to run your Keras training scripts using the Azure Machine Learning Python SDK v2.

The example code in this article uses Azure Machine Learning to train, register, and deploy a Keras model built using the TensorFlow backend. The model, a deep neural network (DNN) built with the Keras Python library running on top of TensorFlow, classifies handwritten digits from the popular MNIST dataset.

Keras is a high-level neural network API capable of running on top of other popular DNN frameworks to simplify development. With Azure Machine Learning, you can rapidly scale out training jobs using elastic cloud compute resources. You can also track your training runs, version models, deploy models, and much more.

Whether you're developing a Keras model from the ground-up or you're bringing an existing model into the cloud, Azure Machine Learning can help you build production-ready models.

Note

If you are using the Keras API tf.keras built into TensorFlow and not the standalone Keras package, refer instead to Train TensorFlow models.

Prerequisites

To benefit from this article, you'll need to:

- Access an Azure subscription. If you don't have one already, create a free account.

- Run the code in this article using either an Azure Machine Learning compute instance or your own Jupyter notebook.

  - Azure Machine Learning compute instance (no downloads or installation necessary):

    - Complete Create resources to get started to create a dedicated notebook server pre-loaded with the SDK and the sample repository.

    - In the samples deep learning folder on the notebook server, find a completed and expanded notebook by navigating to this directory: v2 > sdk > python > jobs > single-step > tensorflow > train-hyperparameter-tune-deploy-with-keras.

  - Your Jupyter notebook server:

    - Download the training scripts keras_mnist.py and utils.py.

You can also find a completed Jupyter Notebook version of this guide on the GitHub samples page.

Before you can run the code in this article to create a GPU cluster, you'll need to request a quota increase for your workspace.

Set up the job

This section sets up the job for training by loading the required Python packages, connecting to a workspace, creating a compute resource to run a command job, and creating an environment to run the job.

Connect to the workspace

First, you'll need to connect to your Azure Machine Learning workspace. The Azure Machine Learning workspace is the top-level resource for the service. It provides you with a centralized place to work with all the artifacts you create when you use Azure Machine Learning.

We're using DefaultAzureCredential to get access to the workspace. This credential should be capable of handling most Azure SDK authentication scenarios.

If DefaultAzureCredential doesn't work for you, see azure-identity reference documentation or Set up authentication for more available credentials.

# Handle to the workspace
from azure.ai.ml import MLClient

# Authentication package
from azure.identity import DefaultAzureCredential

credential = DefaultAzureCredential()

If you prefer to use a browser to sign in and authenticate, you should uncomment the following code and use it instead.

# Handle to the workspace
# from azure.ai.ml import MLClient

# Authentication package
# from azure.identity import InteractiveBrowserCredential
# credential = InteractiveBrowserCredential()

Next, get a handle to the workspace by providing your Subscription ID, Resource Group name, and workspace name. To find these parameters:

- Look for your workspace name in the upper-right corner of the Azure Machine Learning studio toolbar.

- Select your workspace name to show your Resource Group and Subscription ID.

- Copy the values for Resource Group and Subscription ID into the code.

# Get a handle to the workspace
ml_client = MLClient(
    credential=credential,
    subscription_id="<SUBSCRIPTION_ID>",
    resource_group_name="<RESOURCE_GROUP>",
    workspace_name="<AML_WORKSPACE_NAME>",
)

The result of running this script is a workspace handle that you'll use to manage other resources and jobs.

Note

Creating MLClient will not connect the client to the workspace. The client initialization is lazy and will wait for the first time it needs to make a call. In this article, this will happen during compute creation.

Create a compute resource to run the job

Azure Machine Learning needs a compute resource to run a job. This resource can be single or multi-node machines with Linux or Windows OS, or a specific compute fabric like Spark.

In the following example script, we provision a Linux compute cluster. You can see the Azure Machine Learning pricing page for the full list of VM sizes and prices. Since we need a GPU cluster for this example, let's pick the STANDARD_NC4AS_T4_V3 VM size and create an Azure Machine Learning compute.

from azure.ai.ml.entities import AmlCompute

gpu_compute_target = "gpu-cluster"

try:
    # Check whether the compute target already exists
    gpu_cluster = ml_client.compute.get(gpu_compute_target)
    print(
        f"You already have a cluster named {gpu_compute_target}, we'll reuse it as is."
    )

except Exception:
    print("Creating a new gpu compute target...")

    # Create the Azure ML compute object with the intended parameters
    gpu_cluster = AmlCompute(
        # Name assigned to the compute cluster
        name="gpu-cluster",
        # Azure ML Compute is the on-demand VM service
        type="amlcompute",
        # VM size
        size="STANDARD_NC4AS_T4_V3",
        # Minimum number of nodes kept running when no job is running
        min_instances=0,
        # Maximum number of nodes in the cluster
        max_instances=4,
        # Seconds a node stays idle after a job finishes before it is scaled down
        idle_time_before_scale_down=180,
        # Dedicated or LowPriority. The latter is cheaper but there is a chance of job termination
        tier="Dedicated",
    )

    # Pass the object to MLClient's begin_create_or_update method and wait for the result
    gpu_cluster = ml_client.begin_create_or_update(gpu_cluster).result()

print(
    f"AMLCompute with name {gpu_cluster.name} is created, the compute size is {gpu_cluster.size}"
)

Create a job environment

To run an Azure Machine Learning job, you'll need an environment. An Azure Machine Learning environment encapsulates the dependencies (such as software runtime and libraries) needed to run your machine learning training script on your compute resource. This environment is similar to a Python environment on your local machine.

Azure Machine Learning allows you to either use a curated (or ready-made) environment or create a custom environment using a Docker image or a Conda configuration. In this article, you'll create a custom Conda environment for your jobs, using a Conda YAML file.

Create a custom environment

To create your custom environment, you'll define your Conda dependencies in a YAML file. First, create a directory for storing the file. In this example, we've named the directory dependencies.

import os

dependencies_dir = "./dependencies"
os.makedirs(dependencies_dir, exist_ok=True)

Then, create the file in the dependencies directory. In this example, we've named the file conda.yaml.

%%writefile {dependencies_dir}/conda.yaml
name: keras-env
channels:
  - conda-forge
dependencies:
  - python=3.8
  - pip=21.2.4
  - pip:
    - protobuf~=3.20
    - numpy==1.22
    - tensorflow-gpu==2.2.0
    - keras<=2.3.1
    - matplotlib
    - azureml-mlflow==1.42.0

The specification contains some usual packages (such as numpy and pip) that you'll use in your job.

Next, use the YAML file to create and register this custom environment in your workspace. The environment will be packaged into a Docker container at runtime.

from azure.ai.ml.entities import Environment

custom_env_name = "keras-env"

job_env = Environment(
    name=custom_env_name,
    description="Custom environment for keras image classification",
    conda_file=os.path.join(dependencies_dir, "conda.yaml"),
    image="mcr.microsoft.com/azureml/openmpi4.1.0-ubuntu20.04:latest",
)
job_env = ml_client.environments.create_or_update(job_env)

print(
    f"Environment with name {job_env.name} is registered to workspace, the environment version is {job_env.version}"
)

For more information on creating and using environments, see Create and use software environments in Azure Machine Learning.

Configure and submit your training job

In this section, we'll begin by introducing the data for training. We'll then cover how to run a training job, using a training script that we've provided. You'll learn to build the training job by configuring the command for running the training script. Then, you'll submit the training job to run in Azure Machine Learning.

Obtain the training data

You'll use data from the Modified National Institute of Standards and Technology (MNIST) database of handwritten digits. This data is sourced from Yann LeCun's website and stored in an Azure storage account.

web_path = "wasbs://datasets@azuremlexamples.blob.core.windows.net/mnist/"

For more information about the MNIST dataset, visit Yann LeCun's website.
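The MNIST files used later in this article (for example, t10k-images-idx3-ubyte.gz) are gzip-compressed IDX files. The following is a minimal sketch of how such a file can be parsed with only the standard library. The helper names parse_idx and read_idx_gz are hypothetical and are not taken from the utils.py script that accompanies this article; the sketch assumes unsigned-byte data, which is what the MNIST image and label files contain.

```python
import gzip
import io
import struct


def parse_idx(raw):
    """Parse an uncompressed IDX buffer and return (shape, payload bytes)."""
    # Header: two zero bytes, one data-type code, one byte with the number of dimensions.
    zero, dtype_code, ndim = struct.unpack(">HBB", raw[:4])
    if zero != 0 or dtype_code != 0x08:
        raise ValueError("expected an unsigned-byte IDX buffer")
    # Each dimension size is a big-endian 32-bit unsigned integer.
    header_end = 4 + 4 * ndim
    shape = struct.unpack(">%dI" % ndim, raw[4:header_end])
    payload = raw[header_end:]
    expected = 1
    for dim in shape:
        expected *= dim
    if len(payload) != expected:
        raise ValueError("payload size does not match the header")
    return shape, payload


def read_idx_gz(path_or_file):
    """Read a gzip-compressed IDX file from a path or a file-like object."""
    with gzip.open(path_or_file, "rb") as handle:
        return parse_idx(handle.read())


# Build a tiny in-memory stand-in for an image file: two 2x2 "images".
header = struct.pack(">HBB", 0, 0x08, 3) + struct.pack(">3I", 2, 2, 2)
pixels = bytes(range(8))
buffer = io.BytesIO(gzip.compress(header + pixels))

shape, payload = read_idx_gz(buffer)
print(shape, list(payload))
```

For the real test image file, the shape would have three dimensions (number of images, rows, columns), and the label file would have one.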

Prepare the training script

In this article, we've provided the training script keras_mnist.py. In practice, you should be able to take any custom training script as is and run it with Azure Machine Learning without having to modify your code.

The provided training script does the following:

- handles the data preprocessing, splitting the data into test and train data;

- trains a model, using the data; and

- returns the output model.

During the job run, you'll use MLflow to log the parameters and metrics. To learn how to enable MLflow tracking, see Track ML experiments and models with MLflow.

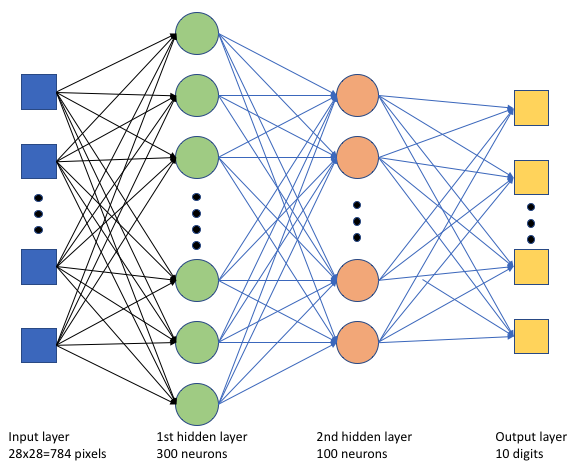

In the training script keras_mnist.py, we create a simple deep neural network (DNN). This DNN has:

- An input layer with 28 * 28 = 784 neurons. Each neuron represents an image pixel.

- Two hidden layers. The first hidden layer has 300 neurons and the second hidden layer has 100 neurons.

- An output layer with 10 neurons. Each neuron represents a targeted label from 0 to 9.
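To get a feel for the size of this network, the layer sizes listed above can be turned into a trainable-parameter count. This is a back-of-the-envelope sketch that assumes every layer is fully connected and has one bias per neuron; the training script itself is not reproduced here, so treat the result as an estimate under that assumption.

```python
# Layer sizes from the description above: input, two hidden layers, output.
layer_sizes = [28 * 28, 300, 100, 10]


def dense_param_count(sizes):
    """Count weights and biases for a stack of fully connected layers."""
    total = 0
    for fan_in, fan_out in zip(sizes, sizes[1:]):
        # fan_in * fan_out weights plus one bias per output neuron
        total += fan_in * fan_out + fan_out
    return total


total_params = dense_param_count(layer_sizes)
print(total_params)
```

Most of the parameters sit in the first hidden layer, because it connects all 784 input pixels to 300 neurons.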

Build the training job

Now that you have all the assets required to run your job, it's time to build it using the Azure Machine Learning Python SDK v2. For this example, we'll be creating a command.

An Azure Machine Learning command is a resource that specifies all the details needed to execute your training code in the cloud. These details include the inputs and outputs, type of hardware to use, software to install, and how to run your code. The command contains information to execute a single command.

Configure the command

You'll use the general purpose command to run the training script and perform your desired tasks. Create a Command object to specify the configuration details of your training job.

from azure.ai.ml import command
from azure.ai.ml import UserIdentityConfiguration
from azure.ai.ml import Input

web_path = "wasbs://datasets@azuremlexamples.blob.core.windows.net/mnist/"

job = command(
    inputs=dict(
        data_folder=Input(type="uri_folder", path=web_path),
        batch_size=50,
        first_layer_neurons=300,
        second_layer_neurons=100,
        learning_rate=0.001,
    ),
    compute=gpu_compute_target,
    environment=f"{job_env.name}:{job_env.version}",
    code="./src/",
    command="python keras_mnist.py --data-folder ${{inputs.data_folder}} --batch-size ${{inputs.batch_size}} --first-layer-neurons ${{inputs.first_layer_neurons}} --second-layer-neurons ${{inputs.second_layer_neurons}} --learning-rate ${{inputs.learning_rate}}",
    experiment_name="keras-dnn-image-classify",
    display_name="keras-classify-mnist-digit-images-with-dnn",
)

The inputs for this command include the data location, batch size, number of neurons in the first and second layer, and learning rate. Notice that we've passed in the web path directly as an input.

For the parameter values:

- provide the compute cluster gpu_compute_target = "gpu-cluster" that you created for running this command;

- provide the custom environment keras-env that you created for running the Azure Machine Learning job;

- configure the command line action itself. In this case, the command is python keras_mnist.py. You can access the inputs and outputs in the command via the ${{ ... }} notation; and

- configure metadata such as the display name and experiment name, where an experiment is a container for all the iterations one does on a certain project. All the jobs submitted under the same experiment name would be listed next to each other in Azure Machine Learning studio.

In this example, you'll use the UserIdentity to run the command. Using a user identity means that the command will use your identity to run the job and access the data from the blob.
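The ${{inputs.<name>}} placeholders in the command string are filled in by the service when the job runs. The following sketch only illustrates the idea of that substitution for scalar inputs; render_command is a hypothetical helper written for this example and is not how Azure Machine Learning implements it. For a data input such as data_folder, the service substitutes a path on the compute rather than the original URI.

```python
import re

# Matches placeholders such as ${{inputs.batch_size}}
PLACEHOLDER = re.compile(r"\$\{\{\s*inputs\.(\w+)\s*\}\}")


def render_command(template, inputs):
    """Replace each ${{inputs.<name>}} placeholder with the matching input value."""

    def substitute(match):
        name = match.group(1)
        if name not in inputs:
            raise KeyError("no input named %r" % name)
        return str(inputs[name])

    return PLACEHOLDER.sub(substitute, template)


template = (
    "python keras_mnist.py --batch-size ${{inputs.batch_size}} "
    "--learning-rate ${{inputs.learning_rate}}"
)
rendered = render_command(template, {"batch_size": 50, "learning_rate": 0.001})
print(rendered)
```

Raising an error for an unknown input name mirrors a useful habit: a typo in a placeholder is easier to catch before submission than in a failed job log.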

Submit the job

It's now time to submit the job to run in Azure Machine Learning. This time, you'll use create_or_update on ml_client.jobs.

ml_client.jobs.create_or_update(job)

Once completed, the job will register a model in your workspace (as a result of training) and output a link for viewing the job in Azure Machine Learning studio.

Warning

Azure Machine Learning runs training scripts by copying the entire source directory. If you have sensitive data that you don't want to upload, exclude it with a .amlignore or .gitignore file, or don't include it in the source directory.

What happens during job execution

As the job is executed, it goes through the following stages:

Preparing: A Docker image is created according to the environment defined. The image is uploaded to the workspace's container registry and cached for later runs. Logs are also streamed to the job history and can be viewed to monitor progress. If a curated environment is specified, the cached image backing that curated environment will be used.

Scaling: The cluster attempts to scale up if it requires more nodes to execute the run than are currently available.

Running: All scripts in the script folder src are uploaded to the compute target, data stores are mounted or copied, and the script is executed. Outputs from stdout and the ./logs folder are streamed to the job history and can be used to monitor the job.

Tune model hyperparameters

You've trained the model with one set of parameters. Now see whether you can further improve the accuracy of your model. You can tune and optimize your model's hyperparameters using Azure Machine Learning's sweep capabilities.

To tune the model's hyperparameters, define the parameter space in which to search during training. You'll do this by replacing some of the parameters (batch_size, first_layer_neurons, second_layer_neurons, and learning_rate) passed to the training job with special inputs from the azure.ai.ml.sweep package.

from azure.ai.ml.sweep import Choice, LogUniform

# Reuse the command job created earlier. Calling it as a function lets us override its inputs.
# The 'data_folder' input is not passed again, so the value defined earlier is kept.
job_for_sweep = job(
    batch_size=Choice(values=[25, 50, 100]),
    first_layer_neurons=Choice(values=[10, 50, 200, 300, 500]),
    second_layer_neurons=Choice(values=[10, 50, 200, 500]),
    learning_rate=LogUniform(min_value=-6, max_value=-1),
)

Then, you'll configure sweep on the command job, using some sweep-specific parameters, such as the primary metric to watch and the sampling algorithm to use.
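To make the search space concrete, the following standard-library sketch draws a few random configurations from the same ranges. It assumes that a LogUniform(min_value, max_value) input yields exp(u) for u drawn uniformly between the two bounds, so the bounds -6 and -1 correspond to learning rates between roughly 0.0025 and 0.37. The sample_trial function is a hypothetical stand-in for the service's random sampling, not its actual implementation.

```python
import math
import random

# Discrete choices, copied from the sweep inputs above.
search_space = {
    "batch_size": [25, 50, 100],
    "first_layer_neurons": [10, 50, 200, 300, 500],
    "second_layer_neurons": [10, 50, 200, 500],
}
# Bounds of the exponent for the log-uniform learning rate.
log_lr_bounds = (-6, -1)


def sample_trial(rng):
    """Draw one configuration: uniform choice per discrete input, log-uniform learning rate."""
    trial = {name: rng.choice(values) for name, values in search_space.items()}
    trial["learning_rate"] = math.exp(rng.uniform(*log_lr_bounds))
    return trial


rng = random.Random(0)  # fixed seed so the sketch is repeatable
trials = [sample_trial(rng) for _ in range(5)]

discrete_combinations = math.prod(len(values) for values in search_space.values())
lr_range = (math.exp(log_lr_bounds[0]), math.exp(log_lr_bounds[1]))

for trial in trials:
    print(trial)
print("discrete combinations:", discrete_combinations)
print("learning rate range:", lr_range)
```

The discrete inputs alone allow 60 combinations, and the learning rate is continuous, so a random sweep covers only a small sample of the space.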

In the following code, we use random sampling to try different configuration sets of hyperparameters in an attempt to maximize our primary metric, Accuracy.

We also define an early termination policy, the BanditPolicy. This policy checks the job every two iterations. If a trial's primary metric falls outside the allowed slack (10 percent, set by slack_factor=0.1) of the best-performing trial, Azure Machine Learning terminates that trial. This keeps the sweep from spending compute on hyperparameter values that show no promise of reaching the target metric.

from azure.ai.ml.sweep import BanditPolicy

sweep_job = job_for_sweep.sweep(
    compute=gpu_compute_target,
    sampling_algorithm="random",
    primary_metric="Accuracy",
    goal="Maximize",
    max_total_trials=20,
    max_concurrent_trials=4,
    early_termination_policy=BanditPolicy(slack_factor=0.1, evaluation_interval=2),
)

Now, you can submit this job as before. This time, you'll be running a sweep job that sweeps over your train job.

returned_sweep_job = ml_client.create_or_update(sweep_job)

# stream the output and wait until the job is finished
ml_client.jobs.stream(returned_sweep_job.name)

# refresh the latest status of the job after streaming
returned_sweep_job = ml_client.jobs.get(name=returned_sweep_job.name)

You can monitor the job by using the studio user interface link that is presented during the job run.
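The effect of the Bandit policy's slack factor can be worked through with plain arithmetic. For a metric that is maximized, a trial is kept at an evaluation point only if its metric is at least best / (1 + slack_factor). The sketch below is a simplified model of that rule for a single evaluation point; the trial names and metric values are made up for illustration, and surviving_trials is a hypothetical helper, not part of the SDK.

```python
def surviving_trials(metrics, slack_factor):
    """Return the trials within the slack of the best trial, plus the cutoff used.

    Assumes the primary metric is being maximized.
    """
    best = max(metrics.values())
    cutoff = best / (1 + slack_factor)
    kept = {name: value for name, value in metrics.items() if value >= cutoff}
    return kept, cutoff


# Hypothetical accuracy values reported by three trials at the same interval.
reported = {"trial_a": 0.97, "trial_b": 0.90, "trial_c": 0.85}
kept, cutoff = surviving_trials(reported, slack_factor=0.1)

print("cutoff:", round(cutoff, 4))
print("kept:", sorted(kept))
```

With a slack factor of 0.1 and a best accuracy of 0.97, the cutoff is about 0.88, so the trial at 0.85 would be stopped while the other two continue.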

Find and register the best model

Once all the runs complete, you can find the run that produced the model with the highest accuracy.

from azure.ai.ml.entities import Model

if returned_sweep_job.status == "Completed":

    # First, get the run that gave the best result
    best_run = returned_sweep_job.properties["best_child_run_id"]

    # Get the model from this run
    model = Model(
        # the script stores the model as "keras_dnn_mnist_model"
        path="azureml://jobs/{}/outputs/artifacts/paths/keras_dnn_mnist_model/".format(
            best_run
        ),
        name="run-model-example",
        description="Model created from run.",
        type="mlflow_model",
    )

else:
    print(
        "Sweep job status: {}. Please wait until it completes".format(
            returned_sweep_job.status
        )
    )

You can then register this model.

registered_model = ml_client.models.create_or_update(model=model)

Deploy the model as an online endpoint

After you've registered your model, you can deploy it as an online endpoint—that is, as a web service in the Azure cloud.

To deploy a machine learning service, you'll typically need:

- The model assets that you want to deploy. These assets include the model's file and metadata that you already registered in your training job.

- Some code to run as a service. The code executes the model on a given input request (an entry script). This entry script receives data submitted to a deployed web service and passes it to the model. After the model processes the data, the script returns the model's response to the client. The script is specific to your model and must understand the data that the model expects and returns. When you use an MLflow model, Azure Machine Learning automatically creates this script for you.

For more information about deployment, see Deploy and score a machine learning model with managed online endpoint using Python SDK v2.

Create a new online endpoint

As a first step to deploying your model, you need to create your online endpoint. The endpoint name must be unique in the entire Azure region. For this article, you'll create a unique name using a universally unique identifier (UUID).

import uuid

# Creating a unique name for the endpoint
online_endpoint_name = "keras-dnn-endpoint-" + str(uuid.uuid4())[:8]

from azure.ai.ml.entities import (
    ManagedOnlineEndpoint,
    ManagedOnlineDeployment,
    Model,
    Environment,
)

# create an online endpoint
endpoint = ManagedOnlineEndpoint(
    name=online_endpoint_name,
    description="Classify handwritten digits using a deep neural network (DNN) using Keras",
    auth_mode="key",
)

endpoint = ml_client.begin_create_or_update(endpoint).result()

print(f"Endpoint {endpoint.name} provisioning state: {endpoint.provisioning_state}")

Once you've created the endpoint, you can retrieve it as follows:

endpoint = ml_client.online_endpoints.get(name=online_endpoint_name)

print(
    f'Endpoint "{endpoint.name}" with provisioning state "{endpoint.provisioning_state}" is retrieved'
)

Deploy the model to the endpoint

After you've created the endpoint, you can deploy the model with the entry script. An endpoint can have multiple deployments. Using rules, the endpoint can then direct traffic to these deployments.
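As an illustration of how traffic can be divided between deployments, the following sketch validates a mapping of deployment names to traffic percentages before it would be applied to an endpoint. The validate_traffic helper and the keras-green-deployment name are hypothetical, and the sketch assumes that the percentages should add up to 100.

```python
def validate_traffic(traffic):
    """Check that each share is between 0 and 100 and that the shares total 100."""
    for name, percent in traffic.items():
        if not 0 <= percent <= 100:
            raise ValueError("traffic for %r must be between 0 and 100" % name)
    total = sum(traffic.values())
    if total != 100:
        raise ValueError("traffic percentages add up to %d, expected 100" % total)
    return traffic


# A single deployment that receives all requests, as in this article.
single = validate_traffic({"keras-blue-deployment": 100})

# A hypothetical split in which a second deployment receives a small share.
split = validate_traffic({"keras-blue-deployment": 90, "keras-green-deployment": 10})

print(single)
print(split)
```

A gradual split like the second mapping is a common way to try a new model version on a fraction of requests before moving all traffic to it.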

In the following code, you'll create a single deployment that handles 100% of the incoming traffic. We've specified an arbitrary color name (keras-blue-deployment) for the deployment. You could also use any other name such as keras-green-deployment or keras-red-deployment for the deployment. The code to deploy the model to the endpoint does the following:

- deploys the best version of the model that you registered earlier;

- scores the model, using the score.py file; and

- uses the custom environment (that you created earlier) to perform inferencing.

from azure.ai.ml.entities import ManagedOnlineDeployment, CodeConfiguration

model = registered_model

# create an online deployment.
blue_deployment = ManagedOnlineDeployment(
    name="keras-blue-deployment",
    endpoint_name=online_endpoint_name,
    model=model,
    # code_configuration=CodeConfiguration(code="./src", scoring_script="score.py"),
    instance_type="Standard_DS3_v2",
    instance_count=1,
)

blue_deployment = ml_client.begin_create_or_update(blue_deployment).result()

Note

Expect this deployment to take a bit of time to finish.

Test the deployed model

Now that you've deployed the model to the endpoint, you can predict the output of the deployed model, using the invoke method on the endpoint.

To test the endpoint you need some test data. Let us locally download the test data which we used in our training script.

import urllib.request

data_folder = os.path.join(os.getcwd(), "data")
os.makedirs(data_folder, exist_ok=True)

urllib.request.urlretrieve(
    "https://azureopendatastorage.blob.core.windows.net/mnist/t10k-images-idx3-ubyte.gz",
    filename=os.path.join(data_folder, "t10k-images-idx3-ubyte.gz"),
)
urllib.request.urlretrieve(
    "https://azureopendatastorage.blob.core.windows.net/mnist/t10k-labels-idx1-ubyte.gz",
    filename=os.path.join(data_folder, "t10k-labels-idx1-ubyte.gz"),
)

Load these into a test dataset.

from src.utils import load_data

X_test = load_data(os.path.join(data_folder, "t10k-images-idx3-ubyte.gz"), False)
y_test = load_data(
    os.path.join(data_folder, "t10k-labels-idx1-ubyte.gz"), True
).reshape(-1)

Pick 30 random samples from the test set and write them to a JSON file.

import json
import numpy as np

# find 30 random samples from test set
n = 30
sample_indices = np.random.permutation(X_test.shape[0])[0:n]

test_samples = json.dumps({"input_data": X_test[sample_indices].tolist()})
# test_samples = bytes(test_samples, encoding='utf8')

with open("request.json", "w") as outfile:
    outfile.write(test_samples)

You can then invoke the endpoint, print the returned predictions, and plot them along with the input images. Use red font color and inverted image (white on black) to highlight the misclassified samples.

import matplotlib.pyplot as plt

# predict using the deployed model
result = ml_client.online_endpoints.invoke(
    endpoint_name=online_endpoint_name,
    request_file="./request.json",
    deployment_name="keras-blue-deployment",
)

# compare actual value vs. the predicted values:
i = 0
plt.figure(figsize=(20, 1))

for s in sample_indices:
    plt.subplot(1, n, i + 1)
    plt.axhline("")
    plt.axvline("")

    # use different color for misclassified sample
    font_color = "red" if y_test[s] != result[i] else "black"
    clr_map = plt.cm.gray if y_test[s] != result[i] else plt.cm.Greys

    plt.text(x=10, y=-10, s=result[i], fontsize=18, color=font_color)
    plt.imshow(X_test[s].reshape(28, 28), cmap=clr_map)

    i = i + 1
plt.show()

Note

Because the model accuracy is high, you might have to run the cell a few times before seeing a misclassified sample.
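When comparing predictions with labels, it can help to decode the endpoint response explicitly. The following sketch builds a request body in the same {"input_data": ...} shape used above and turns a response into predicted digits. It assumes, without confirmation from this article, that the endpoint returns JSON text containing one list of per-class scores per input image; the helper names and the fake response are hypothetical, and only the standard library is used.

```python
import json


def build_request(images):
    """Wrap a list of images (nested lists of numbers) in the request body format."""
    return json.dumps({"input_data": images})


def predicted_labels(response_text):
    """Pick the index of the highest score in each row of the response."""
    scores = json.loads(response_text)
    return [max(range(len(row)), key=row.__getitem__) for row in scores]


def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    matches = sum(1 for p, y in zip(predictions, labels) if p == y)
    return matches / len(labels)


# Two tiny stand-in "images" and a fake response with three classes per image.
request_body = build_request([[0, 255, 0], [255, 0, 0]])
fake_response = json.dumps([[0.1, 0.7, 0.2], [0.6, 0.3, 0.1]])

predictions = predicted_labels(fake_response)
print(predictions, accuracy(predictions, [1, 2]))
```

If the deployed model returns class labels directly instead of scores, the argmax step is unnecessary and json.loads on the response is enough.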

Clean up resources

If you won't be using the endpoint, delete it to stop using the resource. Make sure no other deployments are using the endpoint before you delete it.

ml_client.online_endpoints.begin_delete(name=online_endpoint_name)

Note

Expect this cleanup to take a bit of time to finish.

Next steps

In this article, you trained and registered a Keras model. You also deployed the model to an online endpoint. See these other articles to learn more about Azure Machine Learning.