Troubleshoot guidance

This article addresses frequent questions about prompt flow usage.

"Package tool isn't found" error occurs when you update the flow for a code-first experience

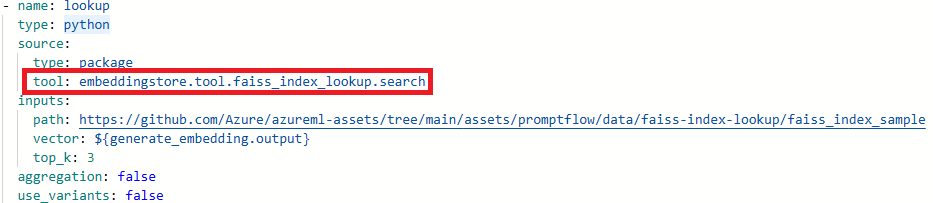

When you update flows for a code-first experience, if the flow utilized the Faiss Index Lookup, Vector Index Lookup, Vector DB Lookup, or Content Safety (Text) tools, you might encounter the following error message:

Package tool 'embeddingstore.tool.faiss_index_lookup.search' is not found in the current environment.

To resolve the issue, you have two options:

Option 1

Update your runtime to the latest version.

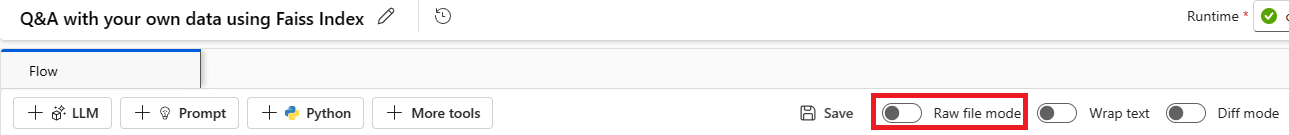

Select Raw file mode to switch to the raw code view. Then open the flow.dag.yaml file.

Update the tool names:

| Tool | New tool name |
| --- | --- |
| Faiss Index Lookup | promptflow_vectordb.tool.faiss_index_lookup.FaissIndexLookup.search |
| Vector Index Lookup | promptflow_vectordb.tool.vector_index_lookup.VectorIndexLookup.search |
| Vector DB Lookup | promptflow_vectordb.tool.vector_db_lookup.VectorDBLookup.search |
| Content Safety (Text) | content_safety_text.tools.content_safety_text_tool.analyze_text |

Save the flow.dag.yaml file.
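If you prefer to script the rename instead of editing flow.dag.yaml by hand, the following minimal Python sketch does a plain string replacement of old package tool names with new ones. Only the Faiss Index Lookup old name (`embeddingstore.tool.faiss_index_lookup.search`) appears in the error message in this article. The `OLD_TO_NEW` mapping and the `rename_tools` and `update_flow_file` helpers are hypothetical, so add the other old names exactly as they appear in your own error messages.

```python
from pathlib import Path

# Hypothetical mapping: old package tool name -> new tool name.
# Only the Faiss entry's old name comes from the error message in this article;
# add other old names as they appear in your own errors.
OLD_TO_NEW = {
    "embeddingstore.tool.faiss_index_lookup.search":
        "promptflow_vectordb.tool.faiss_index_lookup.FaissIndexLookup.search",
}


def rename_tools(yaml_text: str, mapping: dict[str, str]) -> str:
    """Return yaml_text with every old tool name replaced by its new name."""
    for old, new in mapping.items():
        yaml_text = yaml_text.replace(old, new)
    return yaml_text


def update_flow_file(path: str = "flow.dag.yaml") -> None:
    """Rewrite the flow file in place. Keep a backup copy before running this."""
    flow_file = Path(path)
    flow_file.write_text(rename_tools(flow_file.read_text(), OLD_TO_NEW))
```

Because none of the new names contains an old name, running the rename twice leaves the file unchanged.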

Option 2

- Update your runtime to the latest version.

- Remove the old tool and re-create a new tool.

"No such file or directory" error

Prompt flow relies on a file share storage to store a snapshot of the flow. If the file share storage has an issue, you might encounter this error. Try the following workarounds:

If you're using a private storage account, see Network isolation in prompt flow to make sure your workspace can access your storage account.

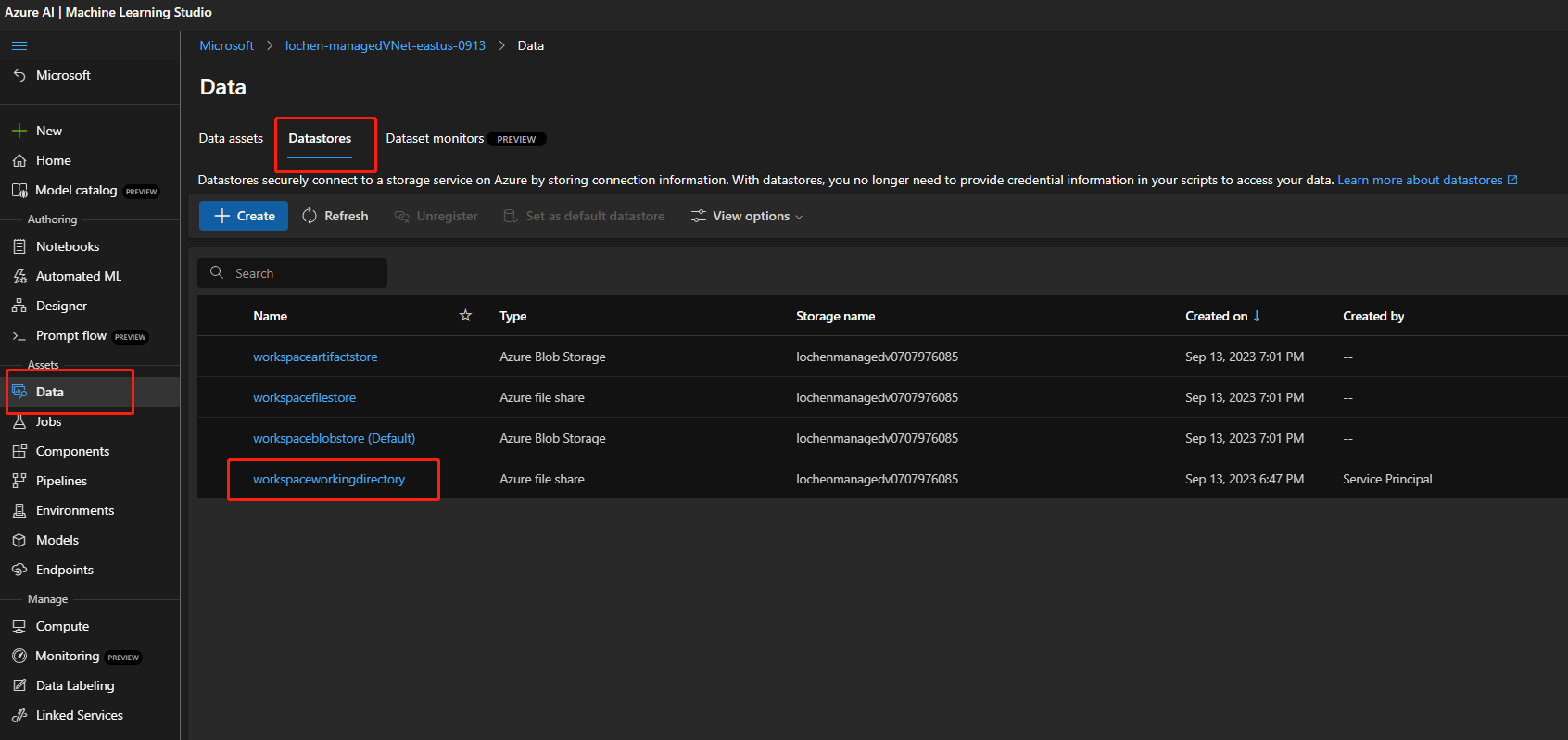

If the storage account is enabled for public access, check whether there's a datastore named workspaceworkingdirectory in your workspace. It should be a file share type.

- If you don't have this datastore, add it to your workspace:
  - Create a file share with the name code-391ff5ac-6576-460f-ba4d-7e03433c68b6.
  - Create a datastore with the name workspaceworkingdirectory. See Create datastores.
- If you have a workspaceworkingdirectory datastore but its type is blob instead of fileshare, create a new workspace. Use storage that doesn't enable hierarchical namespaces for Azure Data Lake Storage Gen2 as a workspace default storage account. For more information, see Create workspace.
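The checks above form a small decision tree. The following Python sketch encodes it, assuming you have already collected the workspace's datastores as a name-to-type mapping (for example, by reading them off the Datastores page). The type labels `"fileshare"` and `"blob"` and the helper `diagnose_working_directory` are illustrative assumptions, not values returned by any specific Azure API.

```python
from typing import Mapping

# Datastore that prompt flow uses for flow snapshots (from this article).
WORKING_DIR_DATASTORE = "workspaceworkingdirectory"


def diagnose_working_directory(datastore_types: Mapping[str, str]) -> str:
    """Map the workspace's datastores (name -> type label) to the fix in this article.

    The type labels "fileshare" and "blob" are illustrative; translate whatever
    your tooling reports into them before calling this function.
    """
    ds_type = datastore_types.get(WORKING_DIR_DATASTORE)
    if ds_type is None:
        return ("Add it: create the file share, then create the "
                f"{WORKING_DIR_DATASTORE} datastore.")
    if ds_type.lower() == "fileshare":
        return "The datastore exists and has the expected file share type."
    if ds_type.lower() == "blob":
        return ("Wrong type: create a new workspace whose default storage account "
                "doesn't enable hierarchical namespaces.")
    return f"Unexpected type {ds_type!r}: inspect the datastore in your workspace."
```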

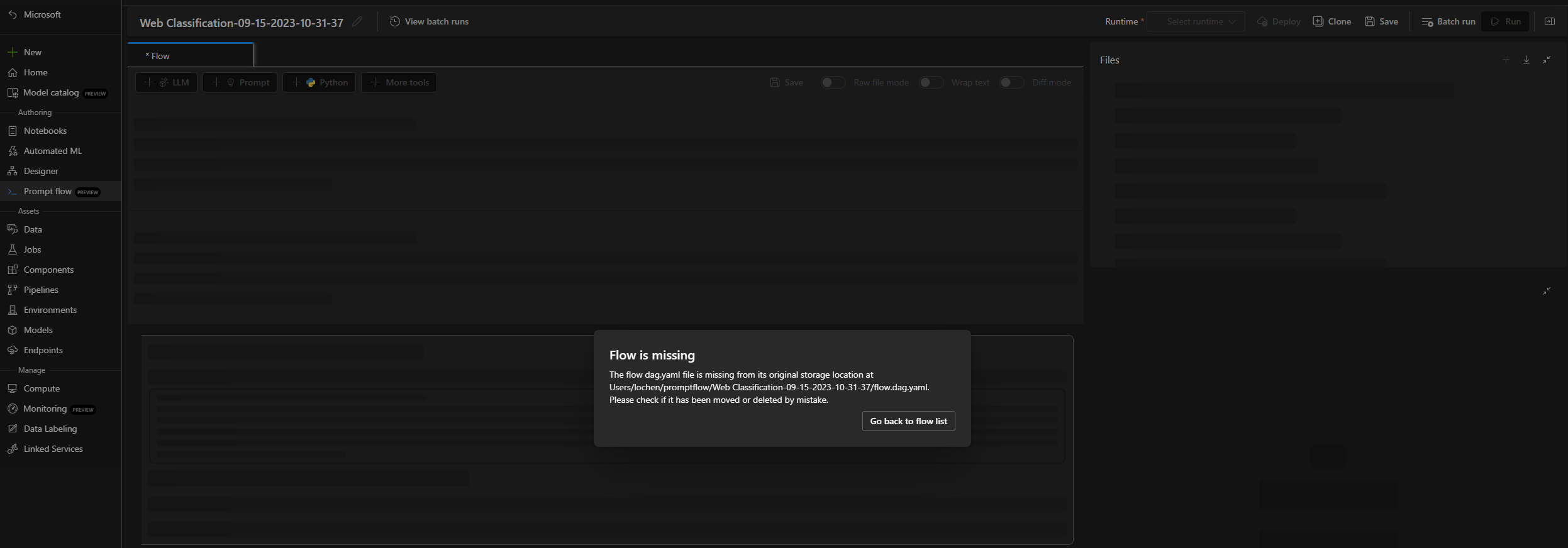

Flow is missing

There are several possible reasons for this issue:

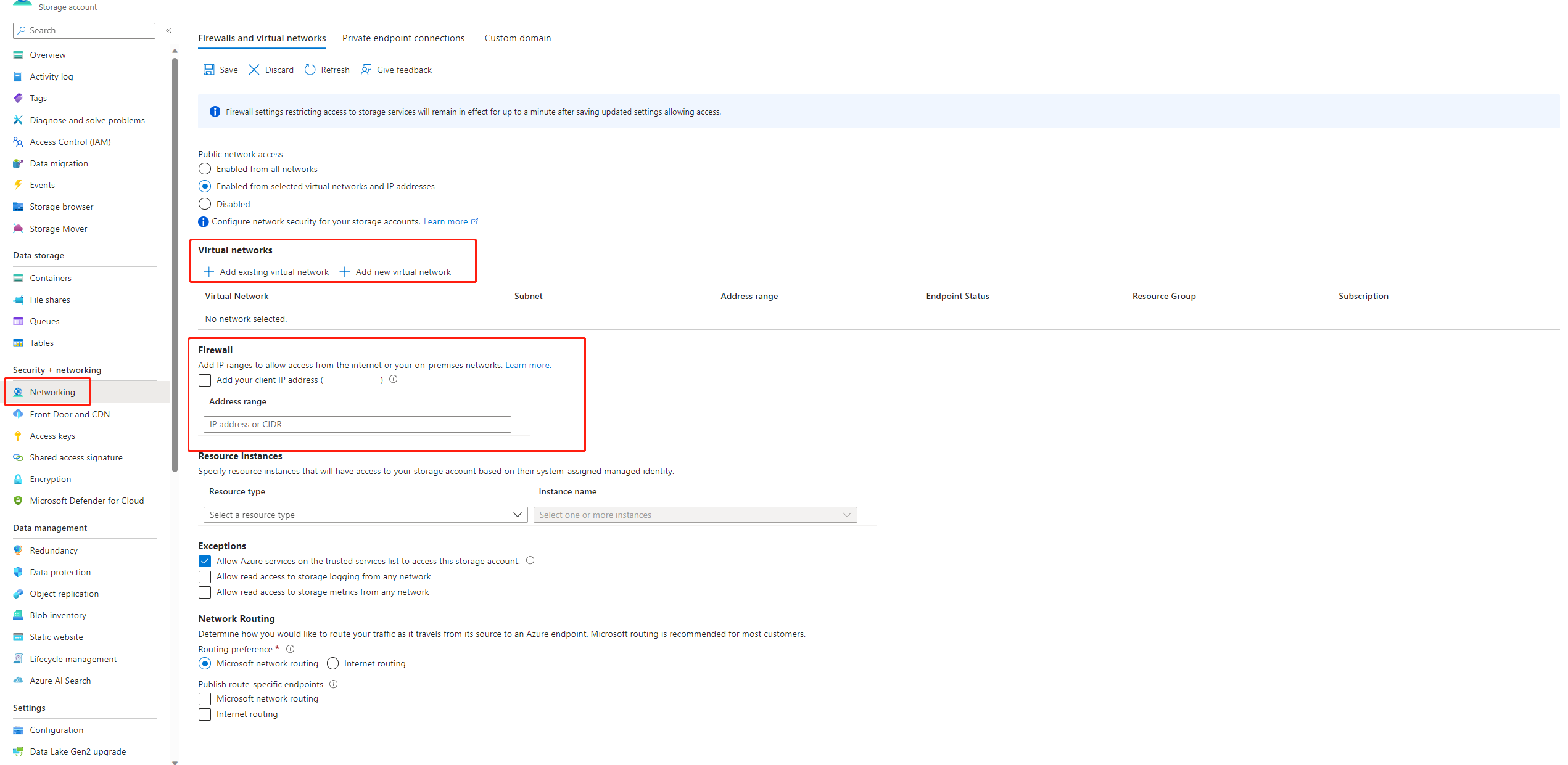

If public access to your storage account is disabled, you must ensure access by either adding your IP to the storage firewall or enabling access through a virtual network that has a private endpoint connected to the storage account.

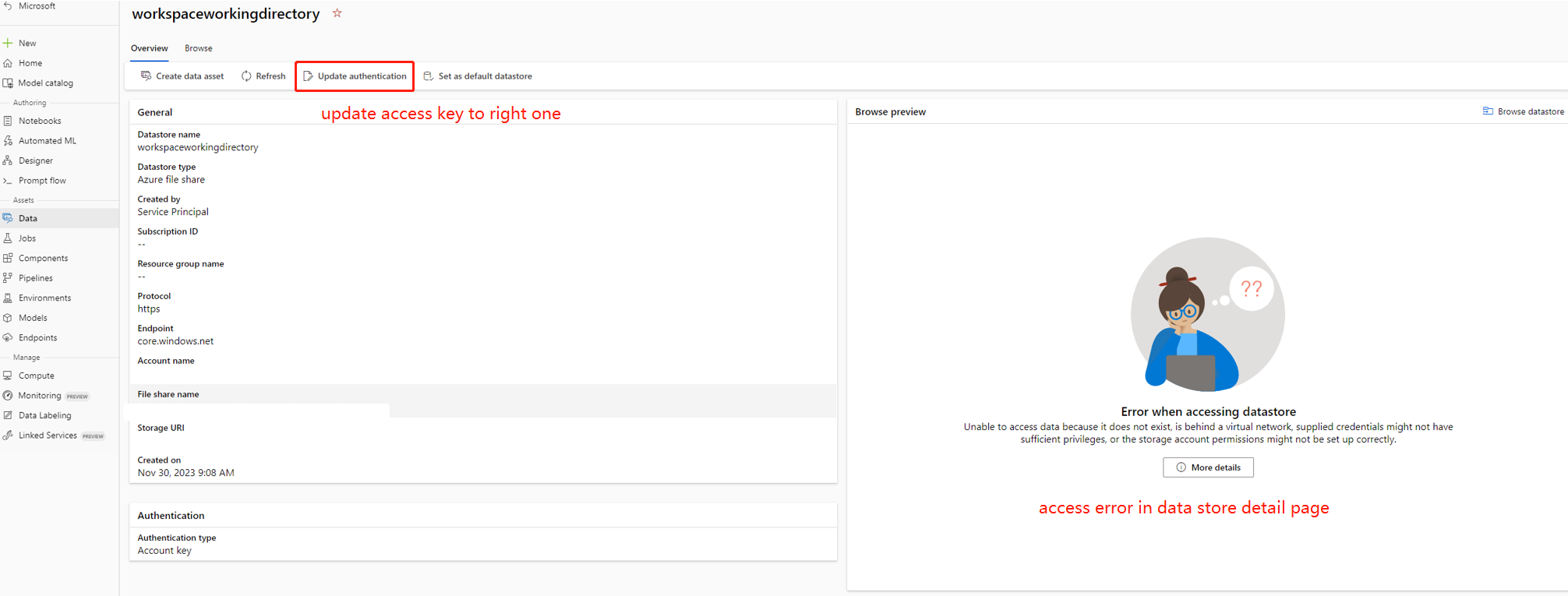

In some cases, the account key in the datastore is out of sync with the storage account. To fix this, update the account key on the datastore detail page.

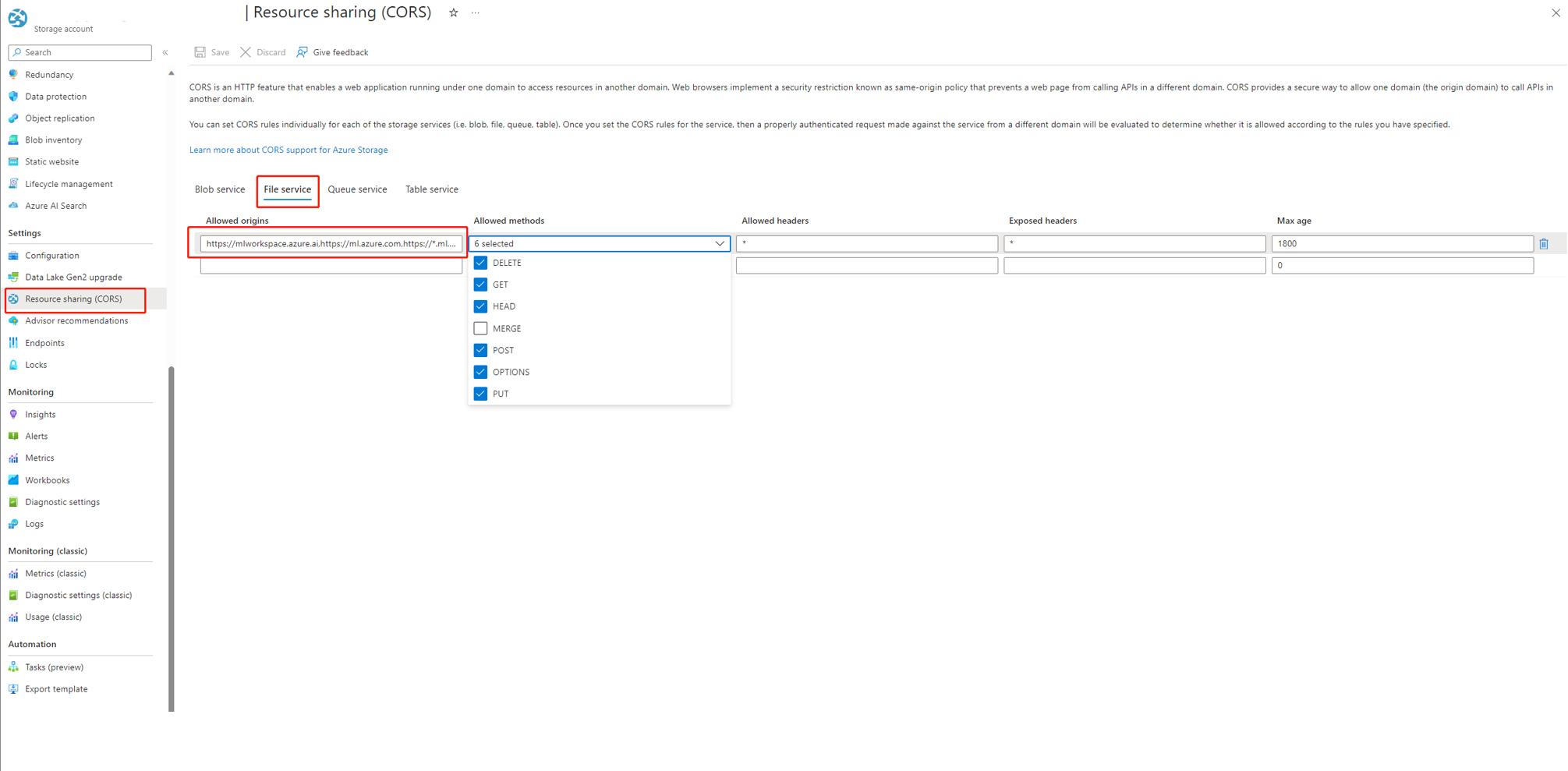

If you're using AI Studio, the storage account must have CORS configured to allow AI Studio to access it; otherwise, the flow appears to be missing. To fix this issue, add the following CORS settings to the storage account:

- Go to the storage account page, select Resource sharing (CORS) under Settings, and then select the File service tab.
- Allowed origins: https://mlworkspace.azure.ai,https://ml.azure.com,https://*.ml.azure.com,https://ai.azure.com,https://*.ai.azure.com,https://mlworkspacecanary.azure.ai,https://mlworkspace.azureml-test.net
- Allowed methods: DELETE, GET, HEAD, POST, OPTIONS, PUT
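The same CORS rule can be applied from code instead of the portal. The following sketch assumes the `azure-storage-file-share` Python package and a storage account connection string; `apply_file_service_cors` is a hypothetical helper name. Setting `cors` on the service properties is expected to replace the File service's existing CORS rules, so merge in any rules you already rely on. Allowed headers, exposed headers, and max age are left at the SDK defaults because this article doesn't specify them.

```python
# Values taken from the CORS settings listed in this article.
ALLOWED_ORIGINS = [
    "https://mlworkspace.azure.ai",
    "https://ml.azure.com",
    "https://*.ml.azure.com",
    "https://ai.azure.com",
    "https://*.ai.azure.com",
    "https://mlworkspacecanary.azure.ai",
    "https://mlworkspace.azureml-test.net",
]
ALLOWED_METHODS = ["DELETE", "GET", "HEAD", "POST", "OPTIONS", "PUT"]


def apply_file_service_cors(connection_string: str) -> None:
    """Set a single CORS rule on the storage account's File service.

    Assumes azure-storage-file-share is installed. This overwrites the
    File service's current CORS rule list with the one rule below.
    """
    # Imported lazily so the constants above can be inspected without the SDK.
    from azure.storage.fileshare import CorsRule, ShareServiceClient

    service = ShareServiceClient.from_connection_string(connection_string)
    rule = CorsRule(ALLOWED_ORIGINS, ALLOWED_METHODS)
    service.set_service_properties(cors=[rule])
```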

Runtime-related issues

You might experience runtime issues.

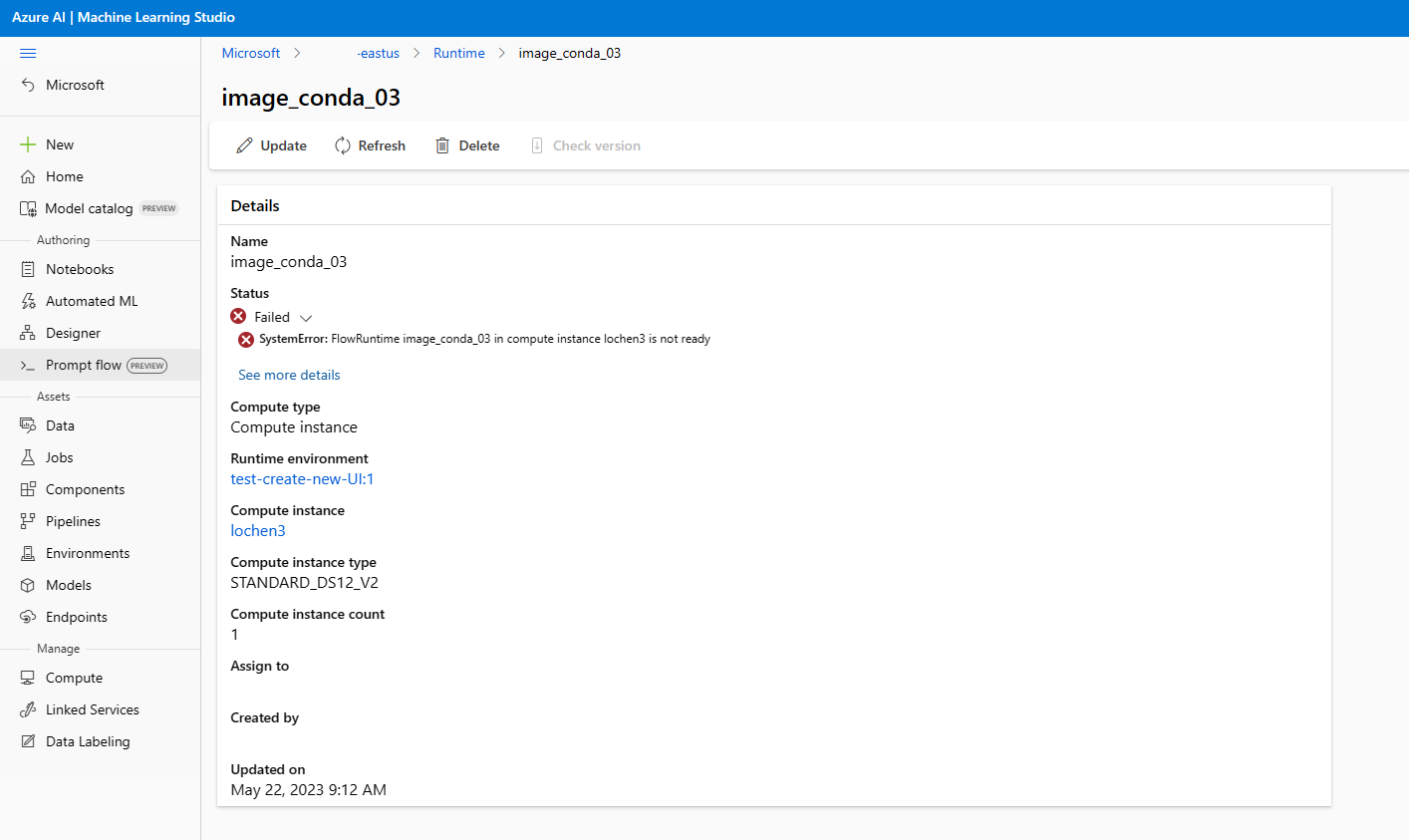

Runtime failed with "system error runtime not ready" when you used a custom environment

First, go to the compute instance terminal and run docker ps to find the root cause.

Use docker images to check whether the image was pulled successfully. If it was, check whether the Docker container is running. If the container is already running, locate this runtime and restart it; this attempts to restart both the runtime and the compute instance.
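To make the docker ps output quicker to scan, you can ask Docker for only container names and statuses with `docker ps -a --format "{{.Names}}\t{{.Status}}"` and filter out the running ones. A minimal Python sketch follows; `list_containers` and `find_stopped_containers` are hypothetical helpers, and the sketch assumes that running containers report a status starting with `Up`, as `docker ps` prints them (a paused container also starts with `Up` and is therefore not flagged).

```python
import subprocess


def list_containers() -> str:
    """Return one 'name<TAB>status' line per container, running or not."""
    result = subprocess.run(
        ["docker", "ps", "-a", "--format", "{{.Names}}\t{{.Status}}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def find_stopped_containers(ps_output: str) -> list[str]:
    """Return names of containers whose status doesn't start with 'Up'."""
    stopped = []
    for line in ps_output.splitlines():
        if not line.strip():
            continue
        name, _, status = line.partition("\t")
        if not status.startswith("Up"):
            stopped.append(name)
    return stopped
```

On the compute instance, `print(find_stopped_containers(list_containers()))` lists containers that exited or are restarting, which are good candidates for a closer look with docker logs.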

If you're using a compute instance runtime in AI Studio, this scenario isn't currently supported. Use automatic runtime instead; see Switch compute instance runtime to automatic runtime.

Run failed because of "No module named XXX"

This type of error occurs when the runtime lacks required packages. If you're using a default environment, make sure the image of your runtime is using the latest version. For more information, see Runtime update. If you're using a custom image with a conda environment, make sure you installed all the required packages in your conda environment. For more information, see Customize a prompt flow environment.
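Before rerunning, you can check which imports your flow's code needs that the runtime can't find. The following sketch uses the standard `importlib.util.find_spec` and could run in a Python node, like the package-listing example in this article; `find_missing_modules` is a hypothetical helper. Note that import names can differ from pip package names (for example, PyYAML installs `yaml`), and checking a dotted name imports its parent package.

```python
import importlib.util


def find_missing_modules(module_names: list[str]) -> list[str]:
    """Return the names from module_names that can't be found in this environment."""
    missing = []
    for name in module_names:
        try:
            found = importlib.util.find_spec(name) is not None
        except (ModuleNotFoundError, ValueError):
            # Raised for dotted names whose parent package is missing,
            # or for already-imported modules without a usable spec.
            found = False
        if not found:
            missing.append(name)
    return missing
```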

Where to find the serverless instance used by automatic runtime?

Automatic runtime runs on a serverless instance. You can find the serverless instance on the compute quota page; see View your usage and quotas in the Azure portal. Serverless instances have names like sessionxxxxyyyy.

Request timeout issue

You might experience timeout issues.

Request timeout error shown in the UI

Compute instance runtime request timeout error:

The error in the example says "UserError: Invoking runtime gega-ci timeout, error message: The request was canceled due to the configured HttpClient. Timeout of 100 seconds elapsing."

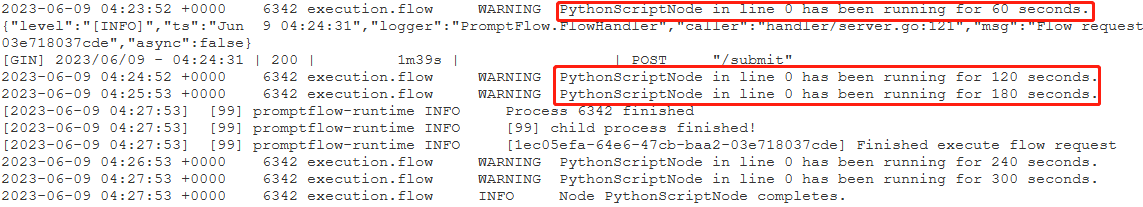

Identify which node consumes the most time

Check the runtime logs.

Look for warning messages in the following format:

{node_name} has been running for {duration} seconds.

For example:

Case 1: Python script node runs for a long time.

In this case, you can find that

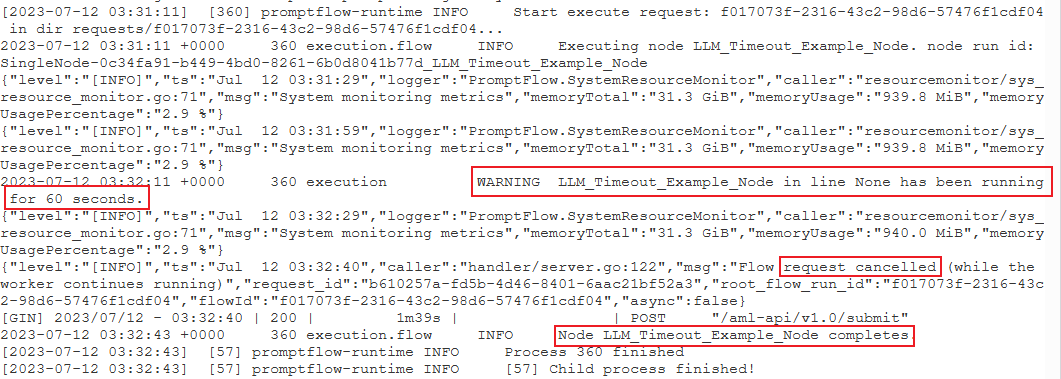

PythonScriptNodewas running for a long time (almost 300 seconds). Then you can check the node details to see what's the problem.Case 2: LLM node runs for a long time.

In this case, if you find the message request canceled in the logs, it might be because the OpenAI API call is taking too long and exceeding the runtime limit. An OpenAI API timeout could be caused by a network issue or a complex request that requires more processing time. For more information, see OpenAI API timeout.

Wait a few seconds and retry your request. This action usually resolves any network issues.

If retrying doesn't work, check whether you're using a long context model, such as gpt-4-32k, and have set a large value for max_tokens. If so, the behavior is expected because your prompt might generate a long response that takes longer than the interactive mode's upper threshold. In this situation, we recommend trying Bulk test because this mode doesn't have a timeout setting.
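The LLM tool makes the API call for you, so the "wait and retry" advice applies mainly when a Python node calls the OpenAI API itself. A generic retry with exponential backoff and jitter might look like the following sketch. `call_with_retry` is a hypothetical helper; pass whichever timeout exception your client raises as `retry_on` (for example, `openai.APITimeoutError` in the openai v1 Python package).

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    func: Callable[[], T],
    max_attempts: int = 3,
    base_delay: float = 2.0,
    retry_on: tuple[type[BaseException], ...] = (TimeoutError,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call func, retrying with exponential backoff plus jitter on retry_on errors."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            # Wait base_delay * 2**(attempt - 1) seconds plus up to 1 s of jitter.
            sleep(base_delay * 2 ** (attempt - 1) + random.uniform(0, 1))
    raise RuntimeError("unreachable")
```

Keep the total retry time well under the interactive timeout; otherwise the retries themselves trigger the request timeout described above.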

If you can't find anything in the runtime logs to indicate a specific node issue:

- Contact the prompt flow team (promptflow-eng) with the runtime logs. We'll try to identify the root cause.
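When runtime logs are long, a short script can pull out every "{node_name} has been running for {duration} seconds." warning and rank nodes by the longest duration reported. The following sketch assumes node names contain no spaces and that the duration is a plain number; `slowest_nodes` is a hypothetical helper.

```python
import re

# Matches the warning format described in this article:
# "{node_name} has been running for {duration} seconds."
WARNING_PATTERN = re.compile(
    r"(?P<node>\S+) has been running for (?P<seconds>\d+(?:\.\d+)?) seconds"
)


def slowest_nodes(log_text: str) -> dict[str, float]:
    """Return the longest reported running time per node, slowest node first."""
    longest: dict[str, float] = {}
    for match in WARNING_PATTERN.finditer(log_text):
        node = match.group("node")
        seconds = float(match.group("seconds"))
        longest[node] = max(seconds, longest.get(node, 0.0))
    return dict(sorted(longest.items(), key=lambda item: item[1], reverse=True))
```

If the result is empty, the logs contain no such warnings, which points away from a single slow node.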

Find the compute instance runtime log for further investigation

Go to the compute instance terminal and run docker logs <runtime_container_name>.

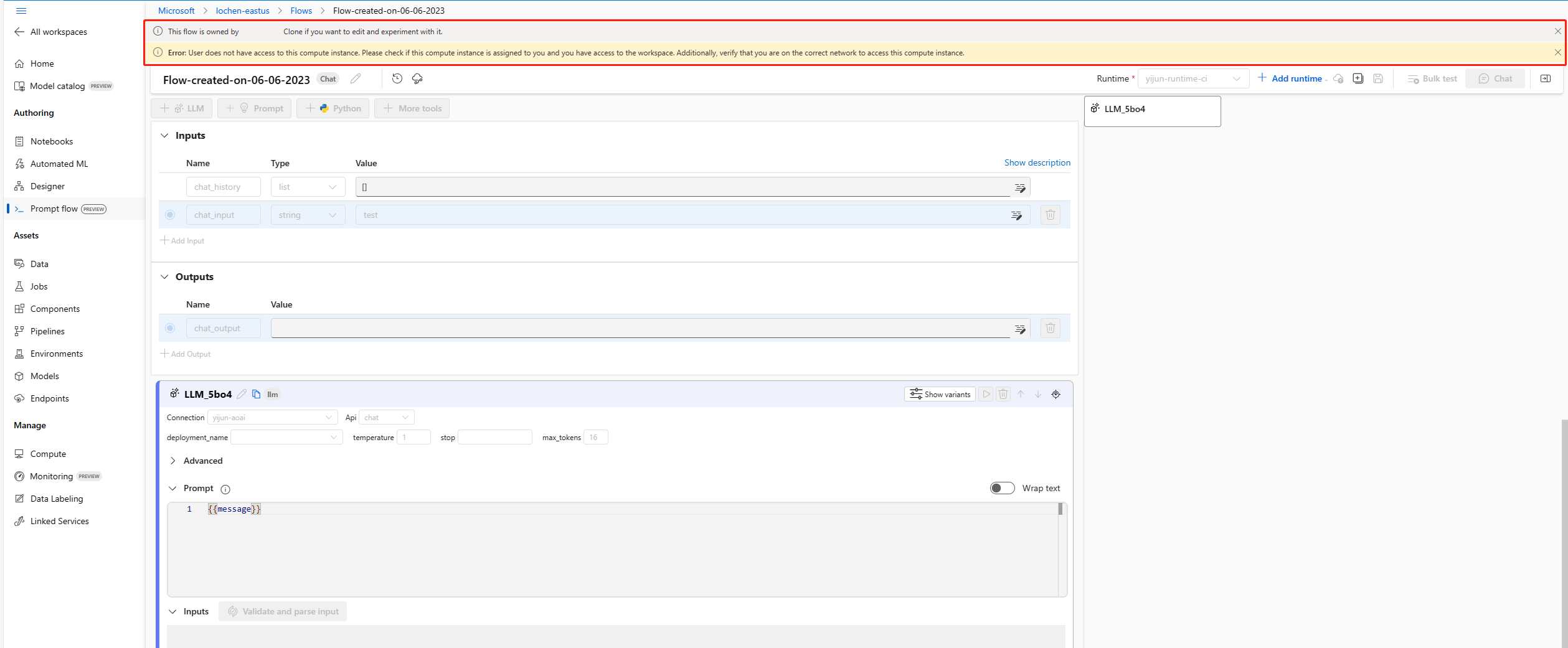

You don't have access to this compute instance

Check if this compute instance is assigned to you and you have access to the workspace. Also, verify that you're on the correct network to access this compute instance.

This error occurs when you clone a flow from someone else that uses a compute instance as the runtime. Because compute instance runtimes are user-isolated, you need to create your own compute instance runtime or select a managed online deployment/endpoint runtime, which can be shared with others.

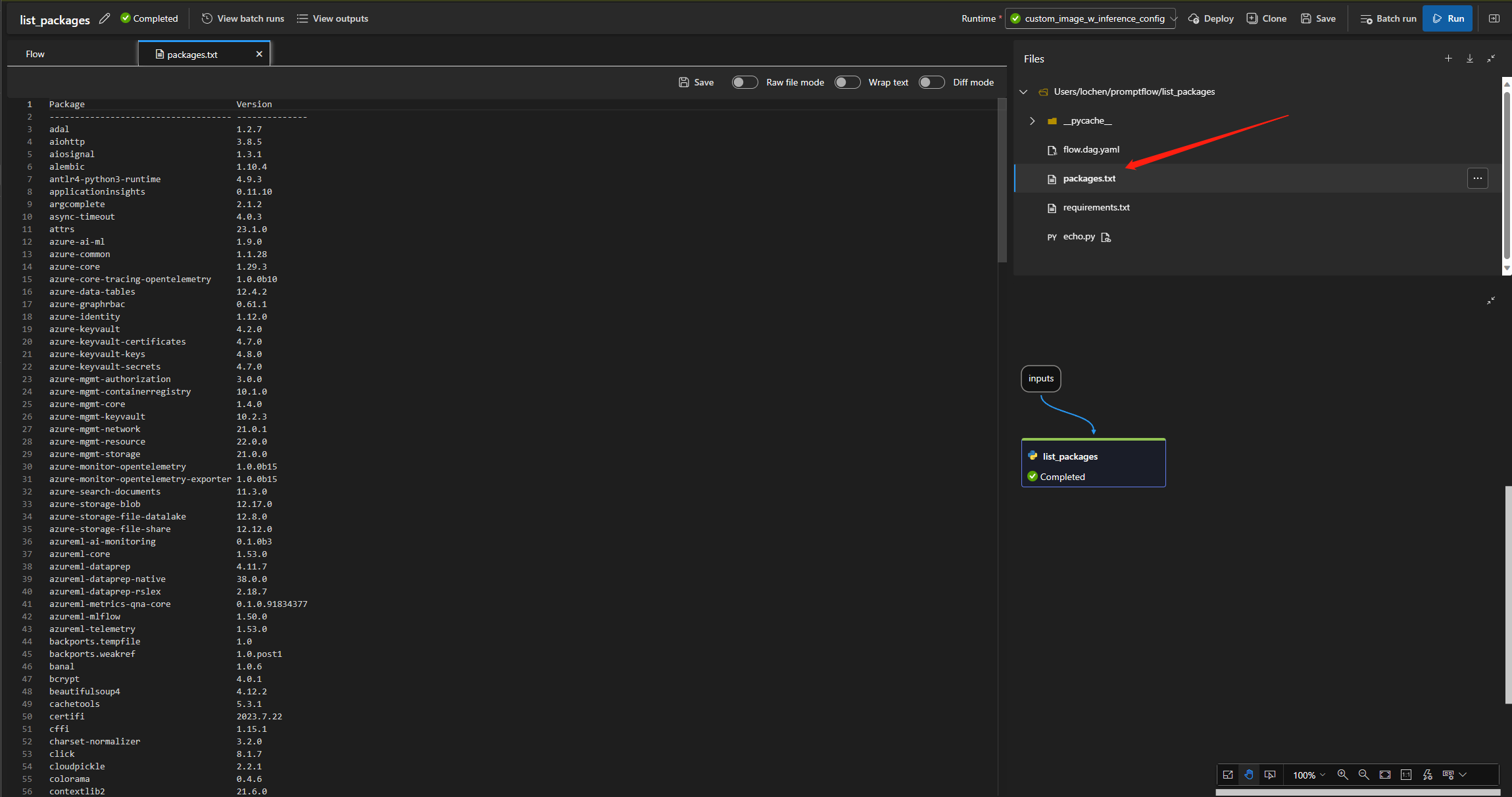

Find Python packages installed in compute instance runtime

Follow these steps to find Python packages installed in compute instance runtime:

Add a Python node in your flow.

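Put the following code in the code section:

```python
import subprocess
import sys

from promptflow import tool


@tool
def list_packages(input: str) -> str:
    # Run pip for the current interpreter and capture its output as text
    result = subprocess.run(
        [sys.executable, "-m", "pip", "list"],
        capture_output=True, text=True, check=True,
    )
    # Save the output to packages.txt in the flow folder
    with open("packages.txt", "w") as f:
        f.write(result.stdout)
    # Also return it so the list appears in the node output
    return result.stdout
```

Run the flow. Then you can find packages.txt in the flow folder.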

Runtime start failures using custom environment

CI (Compute instance) runtime start failure using custom environment

To use prompt flow as a runtime on a CI, you need to use the base image provided by prompt flow. If you want to add extra packages to the base image, follow Customize environment with Docker context for runtime to create a new environment, and then use it to create the CI runtime.

If you get UserError: FlowRuntime on compute instance is not ready, sign in to the terminal of the CI and run journalctl -u c3-progenitor.service to check the logs.

Automatic runtime start failure with requirements.txt or custom base image

Automatic runtime supports using requirements.txt or a custom base image in flow.dag.yaml to customize the image. We recommend requirements.txt for common cases; the runtime installs the packages with pip install -r requirements.txt. If you have dependencies beyond Python packages, follow Customize environment with Docker context for runtime to build a new image on top of the prompt flow base image, and then use it in flow.dag.yaml. Learn more about Customize environment with Docker context for runtime.

- You can't use an arbitrary base image to create a runtime; you need to use the base image provided by prompt flow.

- Don't pin the versions of promptflow and promptflow-tools in requirements.txt, because they're already included in the runtime base image. Using old versions of promptflow and promptflow-tools might cause unexpected behavior.
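To catch the pinning mistake before the runtime builds, you could scan requirements.txt for version specifiers on promptflow or promptflow-tools. The following sketch is a simple line-based check, not a full PEP 508 parser: it skips comments and pip options, normalizes names the way pip does (case-insensitive, with `-`, `_`, and `.` treated as equivalent), and ignores environment markers. `find_pinned_base_packages` is a hypothetical helper.

```python
import re

# Packages already included in the prompt flow runtime base image (per this article).
BASE_IMAGE_PACKAGES = {"promptflow", "promptflow-tools"}

# Leading project name of a requirement line.
_NAME = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def _normalize(name: str) -> str:
    # PEP 503 normalization: lowercase, runs of -, _ and . become a single -.
    return re.sub(r"[-_.]+", "-", name).lower()


def find_pinned_base_packages(requirements_text: str) -> list[str]:
    """Return requirement lines that constrain promptflow or promptflow-tools versions."""
    flagged = []
    for line in requirements_text.splitlines():
        stripped = line.split("#", 1)[0].strip()
        if not stripped or stripped.startswith("-"):
            continue  # skip blanks, comments, and pip options such as -r or -i
        match = _NAME.match(stripped)
        if not match or _normalize(match.group(1)) not in BASE_IMAGE_PACKAGES:
            continue
        # Ignore environment markers after ';' and look for a version operator.
        rest = stripped[match.end():].split(";", 1)[0]
        if re.search(r"[=<>!~]", rest):
            flagged.append(stripped)
    return flagged
```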

Flow run related issues

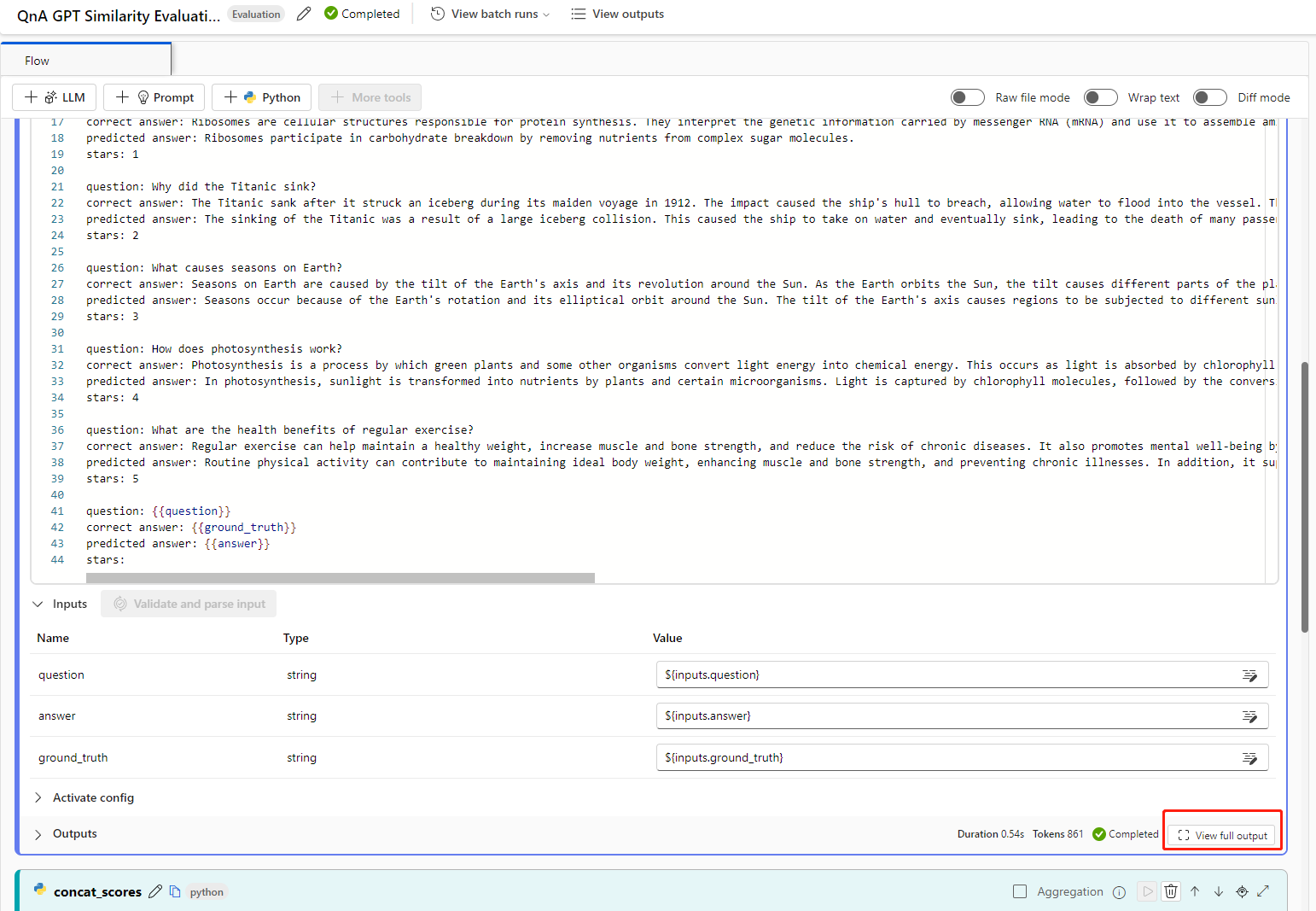

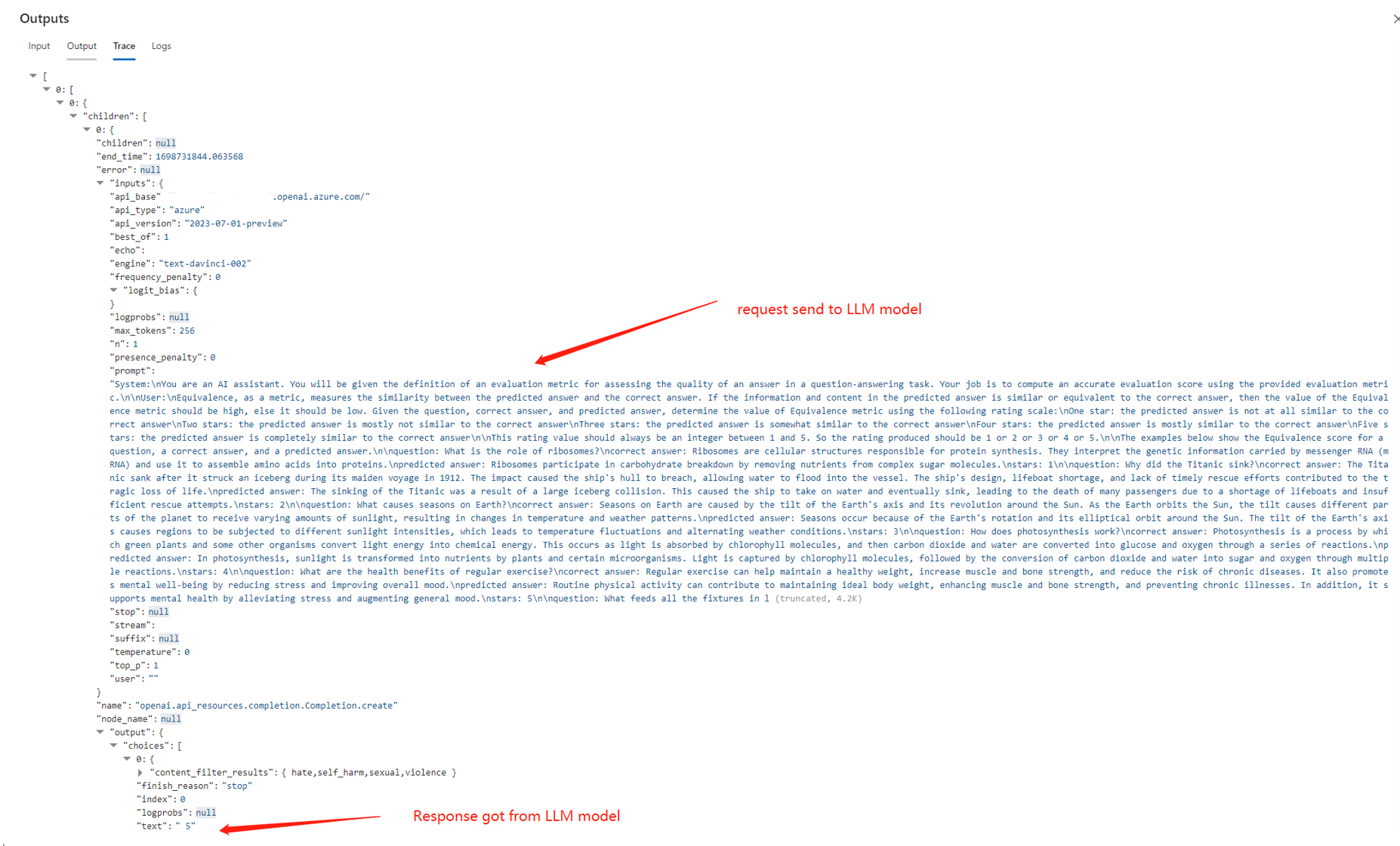

How do I find the raw inputs and outputs of the LLM tool for further investigation?

In prompt flow, on the flow page after a successful run and on the run detail page, you can find the raw inputs and outputs of the LLM tool in the output section. Select view full output to see the complete output.

The Trace section includes each request to and response from the LLM tool. You can check the raw message sent to the LLM and the raw response it returned.

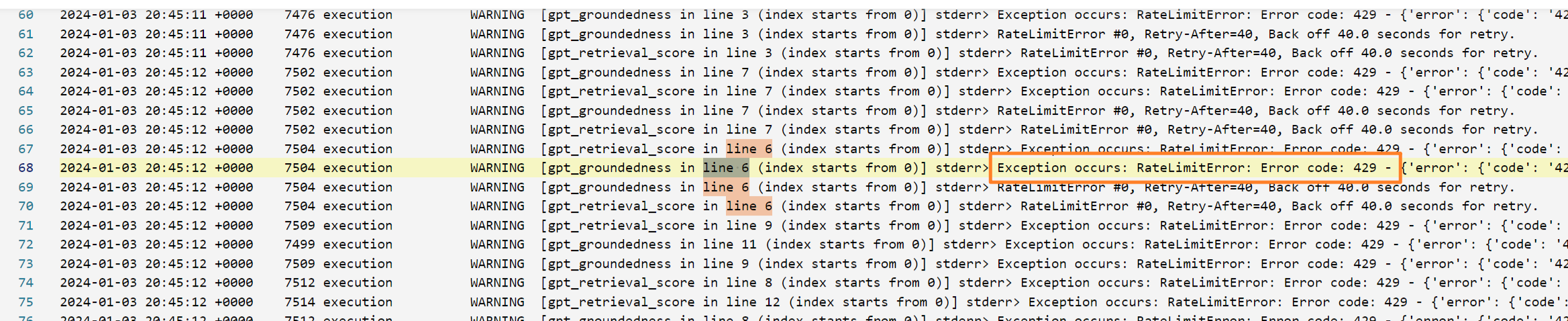

How do I fix a 429 error from Azure OpenAI?

You might encounter a 429 error from Azure OpenAI, which means you've reached the Azure OpenAI rate limit. You can check the error message in the output section of the LLM node. Learn more about Azure OpenAI rate limits.
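When a Python node calls Azure OpenAI directly and hits the rate limit, waiting before retrying helps. If the error response includes the standard HTTP Retry-After header, the following sketch turns it into a wait time, assuming you can read the response headers as a mapping. `retry_after_seconds` and its 10-second fallback are hypothetical choices, not prompt flow or Azure OpenAI behavior.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Mapping, Optional


def retry_after_seconds(
    headers: Mapping[str, str],
    default: float = 10.0,
    now: Optional[datetime] = None,
) -> float:
    """Return how long to wait before retrying, based on a Retry-After header.

    Retry-After holds either a number of seconds or an HTTP date.
    Falls back to `default` when the header is missing or unparseable.
    """
    # Header names are case-insensitive, so normalize them first.
    lowered = {key.lower(): value for key, value in headers.items()}
    value = lowered.get("retry-after")
    if value is None:
        return default
    value = value.strip()
    if value.isdigit():
        return float(value)
    try:
        retry_at = parsedate_to_datetime(value)
    except (TypeError, ValueError):
        return default
    if retry_at.tzinfo is None:
        # Treat dates without a zone as UTC so the subtraction below works.
        retry_at = retry_at.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return max(0.0, (retry_at - now).total_seconds())
```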

Authentication and identity related issues

How do I use a credential-less datastore in prompt flow?

To make your datastore credential-less, follow Identity-based data authentication.

To use a credential-less datastore in prompt flow, you need to grant sufficient permissions to the user identity or managed identity so it can access the datastore.

- If you're using user identity (the default option in prompt flow), make sure the user identity has the following roles on the storage account:
  - Storage Blob Data Contributor, with at least read/write permission (preferably also delete).
  - Storage File Data Privileged Contributor, with at least read/write permission (preferably also delete).
- If you're using a user-assigned managed identity, make sure the managed identity has the following roles on the storage account:
  - Storage Blob Data Contributor, with at least read/write permission (preferably also delete).
  - Storage File Data Privileged Contributor, with at least read/write permission (preferably also delete).
  - In addition, if you want to use prompt flow to author and test flows, assign the user identity the Storage Blob Data Reader role on the storage account.
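The role requirements above amount to a checklist. The following sketch compares role names you have copied (for example, from the storage account's Access control (IAM) page) against that checklist. `missing_roles` is a hypothetical helper; it compares exact role names and, as an assumption beyond this article, accepts Storage Blob Data Contributor or Storage Blob Data Owner in place of the Reader role because both include read access. Broader roles such as Owner aren't considered.

```python
from typing import Iterable

# Roles the identity that accesses the storage account needs (from this article).
REQUIRED_STORAGE_ROLES = frozenset({
    "Storage Blob Data Contributor",
    "Storage File Data Privileged Contributor",
})
# Role the signed-in user needs to author and test flows with a managed identity.
AUTHORING_USER_ROLE = "Storage Blob Data Reader"
# Built-in blob data roles that include read access (assumption: any of them suffices).
BLOB_READ_ROLES = frozenset({
    AUTHORING_USER_ROLE, "Storage Blob Data Contributor", "Storage Blob Data Owner",
})


def missing_roles(
    identity_type: str,
    identity_roles: Iterable[str],
    user_roles: Iterable[str] = (),
    authoring: bool = True,
) -> dict[str, set[str]]:
    """Return missing role names per principal: "identity" and, if relevant, "user".

    identity_type is "user" (default user identity) or "managed"
    (user-assigned managed identity).
    """
    if identity_type not in {"user", "managed"}:
        raise ValueError(f"unknown identity_type: {identity_type!r}")
    missing = {"identity": set(REQUIRED_STORAGE_ROLES) - set(identity_roles)}
    if identity_type == "managed" and authoring:
        has_read = bool(BLOB_READ_ROLES & set(user_roles))
        missing["user"] = set() if has_read else {AUTHORING_USER_ROLE}
    return missing
```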
