
This tutorial helps you accelerate the evaluation process for Data Factory in Microsoft Fabric by providing the steps for a full data integration scenario within one hour. By the end of this tutorial, you'll understand the value and key capabilities of Data Factory and know how to complete a common end-to-end data integration scenario.

The scenario is divided into an introduction and three modules:

- Introduction to the tutorial and why you should use Data Factory in Microsoft Fabric.

- Module 1: Create a pipeline with Data Factory to ingest raw data from Azure Blob Storage to a bronze data layer table in a lakehouse.

- Module 2: Transform data with a dataflow in Data Factory to process the raw data from your bronze table and move it to a gold data layer table in the lakehouse.

- Module 3: Complete your first data integration journey by sending an email notification once all the jobs are complete, and then set up the entire flow to run on a schedule.

Why Data Factory in Microsoft Fabric?

Microsoft Fabric provides a single platform for all the analytical needs of an enterprise. It covers the full spectrum of analytics, including data movement, data lakes, data engineering, data integration, data science, real-time analytics, and business intelligence. With Fabric, there's no need to stitch together services from multiple vendors. Instead, your users get a comprehensive product that is easy to understand, create, onboard, and operate.

Data Factory in Fabric combines the ease of use of Power Query with the scale and power of Azure Data Factory, bringing the best of both products together in a single experience. The goal is to give both citizen and professional data developers the right data integration tools. Data Factory provides low-code, AI-enabled data preparation and transformation experiences, petabyte-scale transformation, and hundreds of connectors with hybrid and multicloud connectivity.

Three key features of Data Factory

- Data ingestion: The Copy activity in pipelines (or the standalone Copy job) lets you move petabyte-scale data from hundreds of data sources into your lakehouse for further processing.

- Data transformation and preparation: Dataflow Gen2 provides a low-code interface for transforming your data using 300+ data transformations, with the ability to load the transformed results into multiple destinations like Azure SQL databases, Lakehouse, and more.

- End-to-end automation: Pipelines orchestrate Copy, Dataflow, Notebook, and other activities. Activities in a pipeline can be chained together to run sequentially, or they can run independently in parallel. Your entire data integration flow runs automatically and can be monitored in one place.

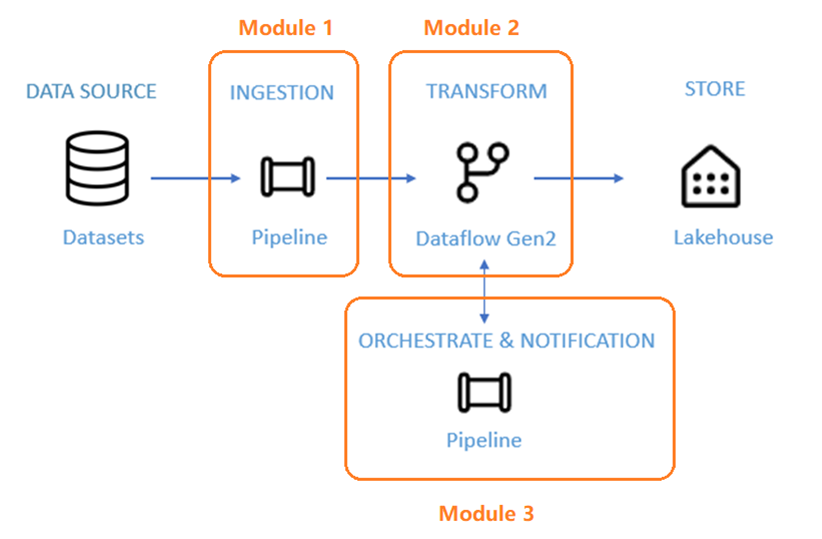
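To make the orchestration idea concrete, here's a minimal sketch in plain Python of how a dependency-driven runner can chain some activities sequentially while running independent ones in parallel. It's a conceptual analogy only, not Fabric's API and not how the Fabric pipeline engine is implemented: the `Activity` class, the `run_pipeline` function, and all activity names are hypothetical, and the sketch models only "run after the previous activity succeeds" dependencies.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class Activity:
    """Hypothetical stand-in for a pipeline activity (not a Fabric API)."""
    name: str
    run: Callable[[], None]
    # Names of activities that must succeed before this one starts.
    depends_on: List[str] = field(default_factory=list)


def run_pipeline(activities: List[Activity]) -> List[List[str]]:
    """Run activities in dependency order. Every activity whose dependencies
    have all succeeded runs concurrently in the same "wave"; the waves are
    returned in execution order."""
    pending: Dict[str, Activity] = {a.name: a for a in activities}
    done: Set[str] = set()
    waves: List[List[str]] = []
    with ThreadPoolExecutor() as pool:
        while pending:
            ready = [a for a in pending.values()
                     if all(dep in done for dep in a.depends_on)]
            if not ready:
                raise ValueError("cycle or unknown dependency among: "
                                 + ", ".join(sorted(pending)))
            # Start all ready activities in parallel, then wait for each one.
            # result() re-raises any exception an activity threw, which stops
            # the run (only success-based chaining is modeled here).
            futures = [pool.submit(a.run) for a in ready]
            for future in futures:
                future.result()
            wave = sorted(a.name for a in ready)
            waves.append(wave)
            done.update(wave)
            for name in wave:
                del pending[name]
    return waves


# Usage: the tutorial's flow (copy -> transform -> notify) chained
# sequentially, plus one hypothetical independent copy that runs in parallel.
log: List[str] = []
pipeline = [
    Activity("copy_to_bronze", lambda: log.append("copy")),
    Activity("transform_to_gold", lambda: log.append("transform"),
             ["copy_to_bronze"]),
    Activity("send_email", lambda: log.append("email"), ["transform_to_gold"]),
    Activity("copy_reference_data", lambda: log.append("reference")),
]
print(run_pipeline(pipeline))
# → [['copy_reference_data', 'copy_to_bronze'], ['transform_to_gold'], ['send_email']]
```

Grouping ready activities into waves keeps the sketch short; a real scheduler would typically start each activity as soon as its own dependencies finish instead of waiting for the whole wave.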

Tutorial architecture

In the next 50 minutes, you'll work through all three key features of Data Factory as you complete an end-to-end data integration scenario.

You use the NYC-Taxi sample dataset as the data source for this tutorial. When you finish, you'll be able to use Data Factory in Microsoft Fabric to gain insights into daily discounts on taxi fares over a specific period of time.

Next step

Continue to the next section to create your pipeline.