Quickstart: Get started generating text using the legacy completions API

Use this article to get started making your first calls to Azure OpenAI.

Prerequisites

- An Azure subscription - Create one for free.

- An Azure OpenAI resource with a model deployed. For more information about model deployment, see the resource deployment guide.

Go to the Azure AI Foundry

Navigate to Azure AI Foundry and sign in with credentials that have access to your Azure OpenAI resource. During or after the sign-in workflow, select the appropriate directory, Azure subscription, and Azure OpenAI resource.

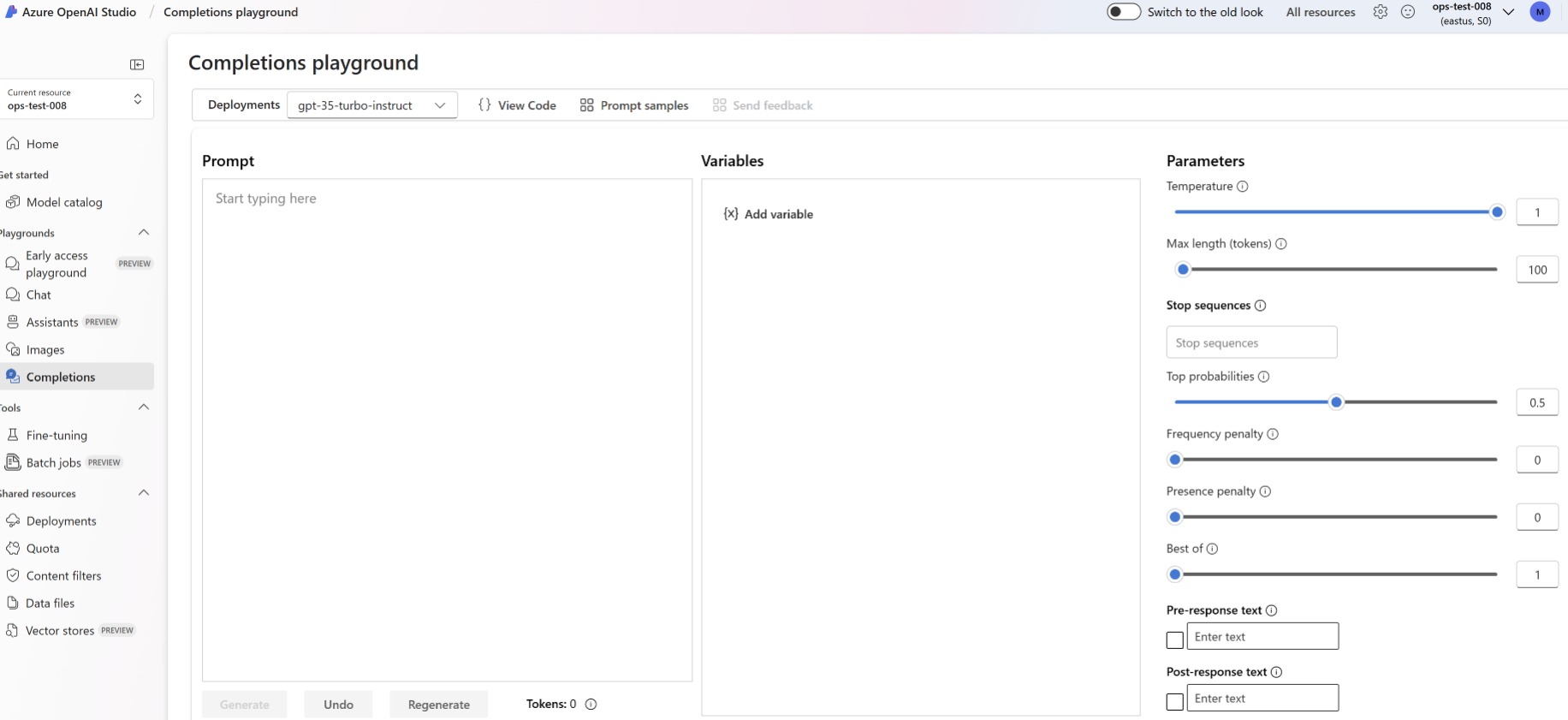

Playground

Start exploring Azure OpenAI capabilities with a no-code approach through the completions playground. It's simply a text box where you can submit a prompt to generate a completion. From this page, you can quickly iterate and experiment with the capabilities.

You can select a deployment and choose from a few pre-loaded examples to get started. If your resource doesn't have a deployment, select Create a deployment and follow the instructions provided by the wizard. For more information about model deployment, see the resource deployment guide.

You can experiment with the configuration settings such as temperature and pre-response text to improve the performance of your task. You can read more about each parameter in the REST API.

- Selecting the Generate button will send the entered text to the completions API and stream the results back to the text box.

- Select the Undo button to undo the prior generation call.

- Select the Regenerate button to complete an undo and generation call together.

Azure OpenAI also performs content moderation on the prompt inputs and generated outputs. The prompts or responses may be filtered if harmful content is detected. For more information, see the content filter article.

In the Completions playground you can also view Python and curl code samples pre-filled according to your selected settings. Just select View code next to the examples dropdown. You can write an application to complete the same task with the OpenAI Python SDK, curl, or other REST API client.
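As a rough illustration of the "other REST API client" option, the following Python sketch builds (but doesn't send) a completions request by using only the standard library. The URL shape and the `api-key` header follow the Azure OpenAI REST API. The `build_completions_request` helper, the `2024-02-01` API version, and the parameter defaults are assumptions chosen for this example, so check them against the code that View code generates for your own settings.

```python
import json
import urllib.request


def build_completions_request(endpoint, deployment, api_key, prompt,
                              api_version="2024-02-01", max_tokens=100,
                              temperature=0.7):
    """Build (but do not send) a POST request for the completions REST API."""
    # The deployment name, not the model name, goes into the URL path.
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
        f"/completions?api-version={api_version}"
    )
    body = json.dumps(
        {"prompt": prompt, "max_tokens": max_tokens, "temperature": temperature}
    ).encode("utf-8")
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Placeholder values; replace them with your own resource details.
request = build_completions_request(
    endpoint="https://docs-test-001.openai.azure.com/",
    deployment="gpt-35-turbo-instruct",
    api_key="REPLACE_WITH_YOUR_KEY_VALUE_HERE",
    prompt="When was Microsoft founded?",
)
print(request.full_url)
```

To send the request, pass it to `urllib.request.urlopen` and parse the JSON body of the response.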

Try text summarization

To use Azure OpenAI for text summarization in the Completions playground, follow these steps:

1. Sign in to Azure AI Foundry.

2. Select the subscription and OpenAI resource to work with.

3. Select Completions playground on the landing page.

4. Select your deployment from the Deployments dropdown. If your resource doesn't have a deployment, select Create a deployment and then revisit this step.

5. Enter a prompt for the model.

6. Select Generate. Azure OpenAI will attempt to capture the context of text and rephrase it succinctly. You should get a result that resembles the following text:

The accuracy of the response can vary per model. The gpt-35-turbo-instruct based model in this example is well-suited to this type of summarization. In general, though, we recommend using the chat completions API instead, unless your use case is specifically suited to the completions API.
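If you later move from the playground to code, one way to phrase a summarization prompt is to append a short cue to the text you want summarized. The sketch below is only an illustration: the `build_summarization_prompt` helper and the `Tl;dr` cue are assumptions for this example, not something the playground requires.

```python
def build_summarization_prompt(text, cue="Tl;dr"):
    """Append a summarization cue so the model continues with a summary."""
    return f"{text.strip()}\n\n{cue}:"


# Sample passage taken from this article.
passage = (
    "Azure OpenAI also performs content moderation on the prompt inputs and "
    "generated outputs. The prompts or responses may be filtered if harmful "
    "content is detected."
)
prompt = build_summarization_prompt(passage)
print(prompt)
```

The resulting string can be pasted into the playground text box or passed as the prompt in any of the SDK samples.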

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

Next steps

- Learn more about how to generate the best completion in our How-to guide on completions.

- For more examples check out the Azure OpenAI Samples GitHub repository.

Source code | Package (NuGet) | Samples

Prerequisites

- An Azure subscription - Create one for free

- The current version of .NET Core

- An Azure OpenAI Service resource with the gpt-35-turbo-instruct model deployed. For more information about model deployment, see the resource deployment guide.

Set up

Create a new .NET Core application

In a console window (such as cmd, PowerShell, or Bash), use the dotnet new command to create a new console app with the name azure-openai-quickstart. This command creates a simple "Hello World" project with a single C# source file: Program.cs.

dotnet new console -n azure-openai-quickstart

Change your directory to the newly created app folder. You can build the application with:

dotnet build

The build output should contain no warnings or errors.

...

Build succeeded.

0 Warning(s)

0 Error(s)

...

Install the OpenAI .NET client library with:

dotnet add package Azure.AI.OpenAI --version 1.0.0-beta.17

Note

The completions API is only available in version 1.0.0-beta.17 and earlier of the Azure.AI.OpenAI client library. For the latest 2.0.0 and higher version of Azure.AI.OpenAI, the recommended approach to generate completions is to use the chat completions API.

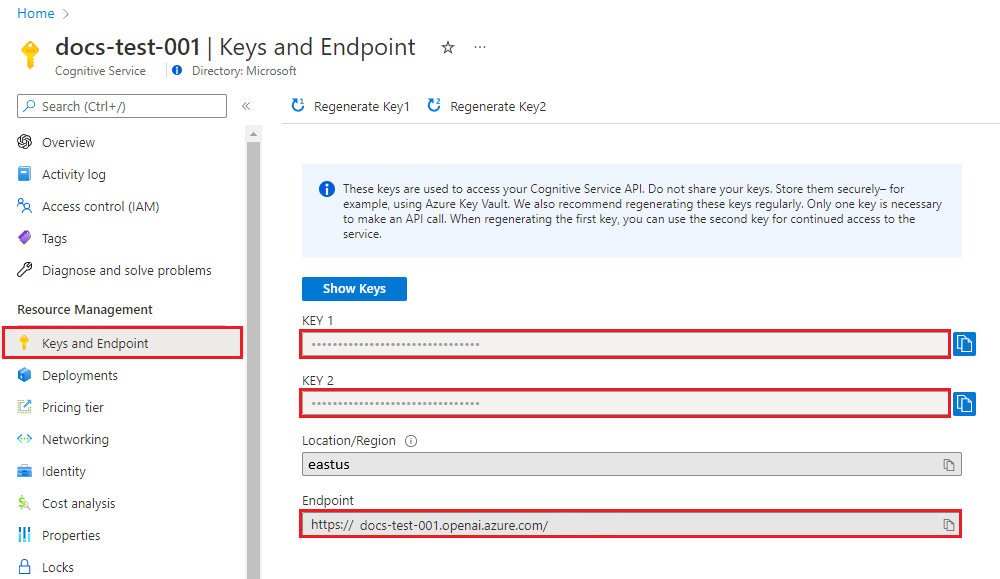

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | The service endpoint can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the endpoint via the Deployments page in Azure AI Foundry portal. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

Important

We recommend Microsoft Entra ID authentication with managed identities for Azure resources to avoid storing credentials with your applications that run in the cloud.

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If using API keys, store them securely in Azure Key Vault, rotate the keys regularly, and restrict access to Azure Key Vault using role based access control and network access restrictions. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"
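Variables created with setx are visible only to console windows opened afterwards, so a missing value is a common first stumbling block. As a hedged illustration of checking for that early, this Python sketch reads both variables and fails with a clear message if either is absent. The `load_azure_openai_settings` helper is hypothetical and isn't part of any SDK.

```python
import os


def load_azure_openai_settings(env=None):
    """Return (endpoint, key), or raise if either variable is missing or empty."""
    env = os.environ if env is None else env
    required = ("AZURE_OPENAI_ENDPOINT", "AZURE_OPENAI_API_KEY")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return env["AZURE_OPENAI_ENDPOINT"], env["AZURE_OPENAI_API_KEY"]


# Demonstration with an in-memory mapping instead of the real environment.
sample_env = {
    "AZURE_OPENAI_ENDPOINT": "https://docs-test-001.openai.azure.com/",
    "AZURE_OPENAI_API_KEY": "REPLACE_WITH_YOUR_KEY_VALUE_HERE",
}
endpoint, key = load_azure_openai_settings(sample_env)
print(endpoint)
```

Calling `load_azure_openai_settings()` without arguments reads the real process environment.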

Create a sample application

From the project directory, open the Program.cs file and replace its contents with the following code:

using Azure;
using Azure.AI.OpenAI;
using static System.Environment;

// Read the endpoint and key from the environment variables set earlier.
string endpoint = GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT");
string key = GetEnvironmentVariable("AZURE_OPENAI_API_KEY");

var client = new OpenAIClient(
    new Uri(endpoint),
    new AzureKeyCredential(key));

// DeploymentName is the name you gave the deployment, not necessarily the model name.
CompletionsOptions completionsOptions = new()
{
    DeploymentName = "gpt-35-turbo-instruct",
    Prompts = { "When was Microsoft founded?" },
};

Response<Completions> completionsResponse = client.GetCompletions(completionsOptions);

// Print the text of the first returned choice.
string completion = completionsResponse.Value.Choices[0].Text;
Console.WriteLine($"Chatbot: {completion}");

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

dotnet run program.cs

Output

Chatbot:

Microsoft was founded on April 4, 1975.
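In the output above, the answer appears on the line after "Chatbot:", which suggests that the completion text begins with line breaks. The following Python sketch shows one way to read the first choice from a completions response and trim that whitespace. The response body here is a hypothetical example shaped like a completions result, and `first_completion_text` is an illustrative helper.

```python
import json

# Hypothetical response body, shaped like a completions API result.
raw_response = json.dumps({
    "choices": [
        {"index": 0, "text": "\n\nMicrosoft was founded on April 4, 1975."}
    ]
})


def first_completion_text(response_json):
    """Return the first choice's text with surrounding whitespace removed."""
    choices = json.loads(response_json).get("choices", [])
    if not choices:
        raise ValueError("The response contains no choices")
    return choices[0]["text"].strip()


print(f"Chatbot: {first_completion_text(raw_response)}")
```

Checking for an empty choices list first avoids an index error when nothing is returned.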

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource you must first delete any deployed models.

Next steps

- For more examples check out the Azure OpenAI Samples GitHub repository

Source code | Package (Go) | Samples

Prerequisites

- An Azure subscription - Create one for free

- Go 1.21.0 or higher installed locally.

- An Azure OpenAI Service resource with the gpt-35-turbo-instruct model deployed. For more information about model deployment, see the resource deployment guide.

Set up

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | The service endpoint can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the endpoint via the Deployments page in Azure AI Foundry portal. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

Important

We recommend Microsoft Entra ID authentication with managed identities for Azure resources to avoid storing credentials with your applications that run in the cloud.

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If using API keys, store them securely in Azure Key Vault, rotate the keys regularly, and restrict access to Azure Key Vault using role based access control and network access restrictions. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"

Create a sample application

Create a new file named completions.go. Copy the following code into the completions.go file.

package main

import (
    "context"
    "fmt"
    "log"
    "os"

    "github.com/Azure/azure-sdk-for-go/sdk/ai/azopenai"
    "github.com/Azure/azure-sdk-for-go/sdk/azcore"
    "github.com/Azure/azure-sdk-for-go/sdk/azcore/to"
)

func main() {
    azureOpenAIKey := os.Getenv("AZURE_OPENAI_API_KEY")
    modelDeploymentID := "gpt-35-turbo-instruct"
    azureOpenAIEndpoint := os.Getenv("AZURE_OPENAI_ENDPOINT")

    if azureOpenAIKey == "" || modelDeploymentID == "" || azureOpenAIEndpoint == "" {
        fmt.Fprintf(os.Stderr, "Skipping example, environment variables missing\n")
        return
    }

    keyCredential := azcore.NewKeyCredential(azureOpenAIKey)

    client, err := azopenai.NewClientWithKeyCredential(azureOpenAIEndpoint, keyCredential, nil)
    if err != nil {
        // Stop here: the client can't be used if it failed to initialize.
        log.Fatalf("ERROR: %s", err)
    }

    resp, err := client.GetCompletions(context.TODO(), azopenai.CompletionsOptions{
        Prompt:         []string{"What is Azure OpenAI, in 20 words or less"},
        MaxTokens:      to.Ptr(int32(2048)),
        Temperature:    to.Ptr(float32(0.0)),
        DeploymentName: &modelDeploymentID,
    }, nil)
    if err != nil {
        log.Fatalf("ERROR: %s", err)
    }

    // Print every returned choice.
    for _, choice := range resp.Choices {
        fmt.Fprintf(os.Stderr, "Result: %s\n", *choice.Text)
    }
}

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Now open a command prompt and run:

go mod init completions.go

Next run:

go mod tidy

go run completions.go

Output

== Get completions Sample ==

Microsoft was founded on April 4, 1975.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- For more examples, check out the Azure OpenAI Samples GitHub repository

Source code | Artifact (Maven) | Samples

Prerequisites

- An Azure subscription - Create one for free

- The current version of the Java Development Kit (JDK)

- The Gradle build tool, or another dependency manager.

- An Azure OpenAI Service resource with the gpt-35-turbo-instruct model deployed. For more information about model deployment, see the resource deployment guide.

Set up

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | The service endpoint can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the endpoint via the Deployments page in Azure AI Foundry portal. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

Important

We recommend Microsoft Entra ID authentication with managed identities for Azure resources to avoid storing credentials with your applications that run in the cloud.

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If using API keys, store them securely in Azure Key Vault, rotate the keys regularly, and restrict access to Azure Key Vault using role based access control and network access restrictions. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"

Create a new Java application

Create a new Gradle project.

In a console window (such as cmd, PowerShell, or Bash), create a new directory for your app, and navigate to it.

mkdir myapp && cd myapp

Run the gradle init command from your working directory. This command will create essential build files for Gradle, including build.gradle.kts, which is used at build time to create and configure your application.

gradle init --type basic

When prompted to choose a DSL, select Kotlin.

Install the Java SDK

This quickstart uses the Gradle dependency manager. You can find the client library and information for other dependency managers on the Maven Central Repository.

Locate build.gradle.kts and open it with your preferred IDE or text editor. Then copy in the following build configuration. This configuration defines the project as a Java application whose entry point is the class GetCompletionsSample. It imports the Azure OpenAI client library (azure-ai-openai).

plugins {
    java
    application
}

application {
    mainClass.set("GetCompletionsSample")
}

repositories {
    mavenCentral()
}

dependencies {
    implementation(group = "com.azure", name = "azure-ai-openai", version = "1.0.0-beta.3")
    implementation("org.slf4j:slf4j-simple:1.7.9")
}

Create a sample application

Create a Java file.

From your working directory, run the following command to create a project source folder:

mkdir -p src/main/java

Navigate to the new folder and create a file called GetCompletionsSample.java.

Open GetCompletionsSample.java in your preferred editor or IDE and paste in the following code.

// No package declaration: build.gradle.kts sets mainClass to the unqualified
// name GetCompletionsSample, and the file lives directly in src/main/java.
import com.azure.ai.openai.OpenAIClient;
import com.azure.ai.openai.OpenAIClientBuilder;
import com.azure.ai.openai.models.Choice;
import com.azure.ai.openai.models.Completions;
import com.azure.ai.openai.models.CompletionsOptions;
import com.azure.ai.openai.models.CompletionsUsage;
import com.azure.core.credential.AzureKeyCredential;

import java.util.ArrayList;
import java.util.List;

public class GetCompletionsSample {

    public static void main(String[] args) {
        String azureOpenaiKey = System.getenv("AZURE_OPENAI_API_KEY");
        String endpoint = System.getenv("AZURE_OPENAI_ENDPOINT");
        String deploymentOrModelId = "gpt-35-turbo-instruct";

        OpenAIClient client = new OpenAIClientBuilder()
            .endpoint(endpoint)
            .credential(new AzureKeyCredential(azureOpenaiKey))
            .buildClient();

        List<String> prompt = new ArrayList<>();
        prompt.add("When was Microsoft founded?");

        Completions completions = client.getCompletions(deploymentOrModelId, new CompletionsOptions(prompt));

        System.out.printf("Model ID=%s is created at %s.%n", completions.getId(), completions.getCreatedAt());
        for (Choice choice : completions.getChoices()) {
            System.out.printf("Index: %d, Text: %s.%n", choice.getIndex(), choice.getText());
        }

        // Token counts for the request and the response.
        CompletionsUsage usage = completions.getUsage();
        System.out.printf("Usage: number of prompt token is %d, "
                + "number of completion token is %d, and number of total tokens in request and response is %d.%n",
            usage.getPromptTokens(), usage.getCompletionTokens(), usage.getTotalTokens());
    }
}

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Navigate back to the project root folder, and build the app with:

gradle build

Then, run it with the gradle run command:

gradle run

Output

Model ID=cmpl-7JZRbWuEuHX8ozzG3BXC2v37q90mL is created at 1684898835.

Index: 0, Text:

Microsoft was founded on April 4, 1975..

Usage: number of prompt token is 5, number of completion token is 11, and number of total tokens in request and response is 16.
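The usage line in this output reports three counts, and the total is the sum of the other two (5 + 11 = 16). As a small illustration, this Python sketch models that relationship. The `Usage` class is a hypothetical stand-in for this example, not the SDK's `CompletionsUsage` type.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    """Token counts as reported in a completions response."""
    prompt_tokens: int
    completion_tokens: int

    @property
    def total_tokens(self):
        # Total tokens are the prompt tokens plus the generated tokens.
        return self.prompt_tokens + self.completion_tokens


# Values taken from the sample output above.
usage = Usage(prompt_tokens=5, completion_tokens=11)
print(usage.total_tokens)  # 16
```

Tracking these counts per call is a simple way to see how prompt length and the maximum token setting affect consumption.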

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- For more examples, check out the Azure OpenAI Samples GitHub repository

Source code | Artifacts (Maven) | Sample

Prerequisites

- An Azure subscription - Create one for free

- The current version of the Java Development Kit (JDK)

- The Spring Boot CLI tool

- An Azure OpenAI Service resource with the gpt-35-turbo model deployed. For more information about model deployment, see the resource deployment guide.

Set up

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | The service endpoint can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the endpoint via the Deployments page in Azure AI Foundry portal. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

Important

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If you use an API key, store it securely in Azure Key Vault. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

Note

Spring AI defaults the model name to gpt-35-turbo. It's only necessary to provide the SPRING_AI_AZURE_OPENAI_MODEL value if you've deployed a model with a different name.

export SPRING_AI_AZURE_OPENAI_API_KEY="REPLACE_WITH_YOUR_KEY_VALUE_HERE"

export SPRING_AI_AZURE_OPENAI_ENDPOINT="REPLACE_WITH_YOUR_ENDPOINT_HERE"

export SPRING_AI_AZURE_OPENAI_MODEL="REPLACE_WITH_YOUR_MODEL_NAME_HERE"
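The note above says the model name falls back to gpt-35-turbo when SPRING_AI_AZURE_OPENAI_MODEL isn't provided. The following Python sketch only illustrates that fallback rule so you can reason about which deployment name ends up being used. It isn't how Spring AI implements it, and `resolve_model_name` is a hypothetical helper.

```python
import os

# Default model name stated in the note above.
DEFAULT_MODEL = "gpt-35-turbo"


def resolve_model_name(env=None):
    """Return the configured model name, or the default when it isn't set."""
    env = os.environ if env is None else env
    return env.get("SPRING_AI_AZURE_OPENAI_MODEL") or DEFAULT_MODEL


print(resolve_model_name({}))
print(resolve_model_name({"SPRING_AI_AZURE_OPENAI_MODEL": "my-deployment"}))
```

In this sketch, an empty value is treated the same as an unset variable.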

Create a new Spring application

Create a new Spring project.

In a Bash window, create a new directory for your app, and navigate to it.

mkdir ai-completion-demo && cd ai-completion-demo

Run the spring init command from your working directory. This command creates a standard directory structure for your Spring project including the main Java class source file and the pom.xml file used for managing Maven based projects.

spring init -a ai-completion-demo -n AICompletion --force --build maven -x

The generated files and folders resemble the following structure:

ai-completion-demo/
|-- pom.xml
|-- mvnw
|-- mvnw.cmd
|-- HELP.md
|-- src/
    |-- main/
    |   |-- resources/
    |   |   |-- application.properties
    |   |-- java/
    |       |-- com/
    |           |-- example/
    |               |-- aicompletiondemo/
    |                   |-- AiCompletionApplication.java
    |-- test/
        |-- java/
            |-- com/
                |-- example/
                    |-- aicompletiondemo/
                        |-- AiCompletionApplicationTests.java

Edit the Spring application

Edit the pom.xml file.

From the root of the project directory, open the pom.xml file in your preferred editor or IDE and overwrite the file with the following content:

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.2.0</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <groupId>com.example</groupId>
    <artifactId>ai-completion-demo</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>AICompletion</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>17</java.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.experimental.ai</groupId>
            <artifactId>spring-ai-azure-openai-spring-boot-starter</artifactId>
            <version>0.7.0-SNAPSHOT</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
    <repositories>
        <repository>
            <id>spring-snapshots</id>
            <name>Spring Snapshots</name>
            <url>https://repo.spring.io/snapshot</url>
            <releases>
                <enabled>false</enabled>
            </releases>
        </repository>
    </repositories>
</project>

From the src/main/java/com/example/aicompletiondemo folder, open AiCompletionApplication.java in your preferred editor or IDE and paste in the following code:

package com.example.aicompletiondemo;

import java.util.Collections;
import java.util.List;

import org.springframework.ai.client.AiClient;
import org.springframework.ai.prompt.Prompt;
import org.springframework.ai.prompt.messages.Message;
import org.springframework.ai.prompt.messages.MessageType;
import org.springframework.ai.prompt.messages.UserMessage;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.CommandLineRunner;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication
public class AiCompletionApplication implements CommandLineRunner {

    private static final String ROLE_INFO_KEY = "role";

    @Autowired
    private AiClient aiClient;

    public static void main(String[] args) {
        SpringApplication.run(AiCompletionApplication.class, args);
    }

    @Override
    public void run(String... args) throws Exception {
        System.out.println(String.format("Sending completion prompt to AI service. One moment please...\r\n"));

        final List<Message> msgs =
            Collections.singletonList(new UserMessage("When was Microsoft founded?"));

        final var resps = aiClient.generate(new Prompt(msgs));

        System.out.println(String.format("Prompt created %d generated response(s).", resps.getGenerations().size()));

        resps.getGenerations().stream()
            .forEach(gen -> {
                final var role = gen.getInfo().getOrDefault(ROLE_INFO_KEY, MessageType.ASSISTANT.getValue());

                System.out.println(String.format("Generated respose from \"%s\": %s", role, gen.getText()));
            });
    }
}

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Navigate back to the project root folder, and run the app by using the following command:

./mvnw spring-boot:run

Output

  .   ____          _            __ _ _
 /\\ / ___'_ __ _ _(_)_ __  __ _ \ \ \ \
( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \
 \\/  ___)| |_)| | | | | || (_| |  ) ) ) )
  '  |____| .__|_| |_|_| |_\__, | / / / /
 =========|_|==============|___/=/_/_/_/
 :: Spring Boot ::                (v3.1.5)

2023-11-07T12:47:46.126-06:00 INFO 98687 --- [ main] c.e.a.AiCompletionApplication : No active profile set, falling back to 1 default profile: "default"

2023-11-07T12:47:46.823-06:00 INFO 98687 --- [ main] c.e.a.AiCompletionApplication : Started AiCompletionApplication in 0.925 seconds (process running for 1.238)

Sending completion prompt to AI service. One moment please...

Prompt created 1 generated response(s).

Generated respose from "assistant": Microsoft was founded on April 4, 1975.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- For more examples, check out the Azure OpenAI Samples GitHub repository

Source code | Package (npm) | Samples

Note

This guide uses the latest OpenAI npm package which now fully supports Azure OpenAI. If you're looking for code examples for the legacy Azure OpenAI JavaScript SDK, they're currently still available in this repo.

Prerequisites

- An Azure subscription - Create one for free

- LTS versions of Node.js

- Azure CLI, used for passwordless authentication in a local development environment. Create the necessary context by signing in with the Azure CLI.

- An Azure OpenAI Service resource with the gpt-35-turbo-instruct model deployed. For more information about model deployment, see the resource deployment guide.

Retrieve resource information

You need to retrieve the following information to authenticate your application with your Azure OpenAI resource:

| Variable name | Value |
|---|---|
| AZURE_OPENAI_ENDPOINT | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. |
| AZURE_OPENAI_DEPLOYMENT_NAME | This value will correspond to the custom name you chose for your deployment when you deployed a model. This value can be found under Resource Management > Model Deployments in the Azure portal. |
| OPENAI_API_VERSION | Learn more about API Versions. |

Learn more about keyless authentication and setting environment variables.

Caution

To use the recommended keyless authentication with the SDK, make sure that the AZURE_OPENAI_API_KEY environment variable isn't set.

Install the client library

In a console window (such as cmd, PowerShell, or Bash), create a new directory for your app, and navigate to it.

Install the required packages for JavaScript with npm from within the context of your new directory:

npm install openai @azure/identity

Your app's package.json file is updated with the dependencies.

Create a sample application

Open a command prompt where you created the new project, and create a new file named Completion.js. Copy the following code into the Completion.js file.

const { AzureOpenAI } = require("openai");
const {
  DefaultAzureCredential,
  getBearerTokenProvider
} = require("@azure/identity");

// You will need to set these environment variables or edit the following values
const endpoint = process.env["AZURE_OPENAI_ENDPOINT"] || "<endpoint>";
const apiVersion = "2024-04-01-preview";
const deployment = "gpt-35-turbo-instruct"; // The deployment name for your completions API model. The instruct model is the only new model that supports the legacy API.

// keyless authentication
const credential = new DefaultAzureCredential();
const scope = "https://cognitiveservices.azure.com/.default";
const azureADTokenProvider = getBearerTokenProvider(credential, scope);

const prompt = ["When was Microsoft founded?"];

async function main() {
  console.log("== Get completions Sample ==");

  const client = new AzureOpenAI({ endpoint, azureADTokenProvider, apiVersion, deployment });

  const result = await client.completions.create({ prompt, model: deployment, max_tokens: 128 });

  for (const choice of result.choices) {
    console.log(choice.text);
  }
}

main().catch((err) => {
  console.error("Error occurred:", err);
});

module.exports = { main };

Run the script with the following command:

node Completion.js

Output

== Get completions Sample ==

Microsoft was founded on April 4, 1975.

Note

If you receive the error: Error occurred: OpenAIError: The apiKey and azureADTokenProvider arguments are mutually exclusive; only one can be passed at a time. You might need to remove a preexisting environment variable for the API key from your system. Even though the Microsoft Entra ID code sample isn't explicitly referencing the API key environment variable, if one is present on the system executing this sample, this error is still generated.
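One way to catch this situation before running the sample is a small pre-flight check for the conflicting variable. The Python sketch below is a hedged illustration of that idea: `keyless_auth_conflict` is a hypothetical helper, and it only inspects the AZURE_OPENAI_API_KEY variable named in the caution earlier in this section.

```python
import os


def keyless_auth_conflict(env=None):
    """Return a warning if an API key variable would clash with keyless auth."""
    env = os.environ if env is None else env
    if env.get("AZURE_OPENAI_API_KEY"):
        return (
            "AZURE_OPENAI_API_KEY is set. Remove it before using keyless "
            "authentication, or the client reports mutually exclusive arguments."
        )
    return None


# Demonstration with in-memory mappings instead of the real environment.
print(keyless_auth_conflict({"AZURE_OPENAI_API_KEY": "REPLACE_WITH_YOUR_KEY_VALUE_HERE"}))
print(keyless_auth_conflict({}))
```

Calling `keyless_auth_conflict()` without arguments checks the real process environment.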

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- Azure OpenAI Overview

- For more examples, check out the Azure OpenAI Samples GitHub repository

Source code | Package (npm) | Samples

Note

This guide uses the latest OpenAI npm package which now fully supports Azure OpenAI. If you're looking for code examples for the legacy Azure OpenAI JavaScript SDK, they're currently still available in this repo.

Prerequisites

- An Azure subscription - Create one for free

- LTS versions of Node.js

- TypeScript

- Azure CLI, used for passwordless authentication in a local development environment. Create the necessary context by signing in with the Azure CLI.

- An Azure OpenAI Service resource with the gpt-35-turbo-instruct model deployed. For more information about model deployment, see the resource deployment guide.

Retrieve resource information

You need to retrieve the following information to authenticate your application with your Azure OpenAI resource:

| Variable name | Value |
|---|---|
| AZURE_OPENAI_ENDPOINT | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. |
| AZURE_OPENAI_DEPLOYMENT_NAME | This value will correspond to the custom name you chose for your deployment when you deployed a model. This value can be found under Resource Management > Model Deployments in the Azure portal. |
| OPENAI_API_VERSION | Learn more about API Versions. |

Learn more about keyless authentication and setting environment variables.

Caution

To use the recommended keyless authentication with the SDK, make sure that the AZURE_OPENAI_API_KEY environment variable isn't set.

Install the client library

In a console window (such as cmd, PowerShell, or Bash), create a new directory for your app, and navigate to it.

Install the required packages for JavaScript with npm from within the context of your new directory:

npm install openai @azure/identity

Your app's package.json file is updated with the dependencies.

Create a sample application

Open a command prompt where you created the new project, and create a new file named Completion.ts. Copy the following code into the Completion.ts file.

import {
  DefaultAzureCredential,
  getBearerTokenProvider
} from "@azure/identity";
import { AzureOpenAI } from "openai";
import { type Completion } from "openai/resources/index";

// You will need to set these environment variables or edit the following values
const endpoint = process.env["AZURE_OPENAI_ENDPOINT"] || "<endpoint>";

// Required Azure OpenAI deployment name and API version
const apiVersion = "2024-08-01-preview";
const deploymentName = "gpt-35-turbo-instruct";

// keyless authentication
const credential = new DefaultAzureCredential();
const scope = "https://cognitiveservices.azure.com/.default";
const azureADTokenProvider = getBearerTokenProvider(credential, scope);

// Completion prompt and max tokens
const prompt = ["When was Microsoft founded?"];
const maxTokens = 128;

function getClient(): AzureOpenAI {
  return new AzureOpenAI({
    endpoint,
    azureADTokenProvider,
    apiVersion,
    deployment: deploymentName,
  });
}

async function getCompletion(
  client: AzureOpenAI,
  prompt: string[],
  max_tokens: number
): Promise<Completion> {
  return client.completions.create({
    prompt,
    // Left empty because the deployment is already set on the client
    model: "",
    max_tokens,
  });
}

async function printChoices(completion: Completion): Promise<void> {
  for (const choice of completion.choices) {
    console.log(choice.text);
  }
}

export async function main() {
  console.log("== Get completions Sample ==");

  const client = getClient();
  const completion = await getCompletion(client, prompt, maxTokens);
  await printChoices(completion);
}

main().catch((err) => {
  console.error("Error occurred:", err);
});

Build the script with the following command:

tsc

Run the script with the following command:

node.exe Completion.js

Output

== Get completions Sample ==

Microsoft was founded on April 4, 1975.

Note

If you receive the error "Error occurred: OpenAIError: The apiKey and azureADTokenProvider arguments are mutually exclusive; only one can be passed at a time.", you might need to remove a preexisting environment variable for the API key from your system. Even though the Microsoft Entra ID code sample doesn't explicitly reference the API key environment variable, the error is still generated if that variable is present on the system that runs the sample.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- Azure OpenAI Overview

- For more examples, check out the Azure OpenAI Samples GitHub repository

Library source code | Package (PyPI)

Prerequisites

- An Azure subscription - Create one for free

- Python 3.8 or later version

- The following Python libraries: os, requests, json

- An Azure OpenAI Service resource with a gpt-35-turbo-instruct model deployed. For more information about model deployment, see the resource deployment guide.

Set up

Install the OpenAI Python client library with:

pip install openai

Note

This library is maintained by OpenAI. Refer to the release history to track the latest updates to the library.

Retrieve key and endpoint

To successfully make a call against the Azure OpenAI Service, you'll need the following:

| Variable name | Value |
|---|---|
| ENDPOINT | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. You can also find the endpoint via the Deployments page in Azure AI Foundry portal. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |
| DEPLOYMENT-NAME | This value will correspond to the custom name you chose for your deployment when you deployed a model. This value can be found under Resource Management > Model Deployments in the Azure portal or via the Deployments page in Azure AI Foundry portal. |

Go to your resource in the Azure portal. The Keys and Endpoint can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

Important

We recommend Microsoft Entra ID authentication with managed identities for Azure resources to avoid storing credentials with your applications that run in the cloud.

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If using API keys, store them securely in Azure Key Vault, rotate the keys regularly, and restrict access to Azure Key Vault using role based access control and network access restrictions. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"
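Variables created with setx are only visible to console windows opened afterward, so it can help to confirm that the Python process actually sees both values before you create the client. The following is a minimal sketch that uses only the standard library; the helper name `read_azure_openai_settings` is hypothetical and isn't part of the OpenAI SDK.

```python
import os

# The two variables this quickstart asks you to create.
REQUIRED_VARS = ("AZURE_OPENAI_API_KEY", "AZURE_OPENAI_ENDPOINT")


def read_azure_openai_settings(environ=os.environ):
    """Return the required settings, or raise if any variable is missing or empty."""
    missing = [name for name in REQUIRED_VARS if not environ.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: environ[name] for name in REQUIRED_VARS}
```

Calling `read_azure_openai_settings()` at the top of a script fails early with the names of the missing variables instead of surfacing later as an authentication error.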

Create a new Python application

Create a new Python file called quickstart.py. Then open it up in your preferred editor or IDE.

Replace the contents of quickstart.py with the following code. Modify the code to add your key, endpoint, and deployment name:

import os
from openai import AzureOpenAI

client = AzureOpenAI(
    api_key=os.getenv("AZURE_OPENAI_API_KEY"),
    api_version="2024-02-01",
    azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT")
)

# This will correspond to the custom name you chose for your deployment when you deployed a model.
# Use a gpt-35-turbo-instruct deployment.
deployment_name = 'REPLACE_WITH_YOUR_DEPLOYMENT_NAME'

# Send a completion call to generate an answer
print('Sending a test completion job')
start_phrase = 'Write a tagline for an ice cream shop. '
response = client.completions.create(model=deployment_name, prompt=start_phrase, max_tokens=10)
print(start_phrase + response.choices[0].text)

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Run the application with the python command on your quickstart file:

python quickstart.py

Output

The output will include response text following the Write a tagline for an ice cream shop. prompt. Azure OpenAI returned The coldest ice cream in town! in this example.

Sending a test completion job

Write a tagline for an ice cream shop. The coldest ice cream in town!

Run the code a few more times to see what other types of responses you get as the response won't always be the same.

Understanding your results

Since our example of Write a tagline for an ice cream shop. provides little context, it's normal for the model to not always return expected results. You can adjust the maximum number of tokens if the response seems unexpected or truncated.
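One way to tell whether a response was cut short is to look at the `finish_reason` field of each choice: a value of "length" means generation stopped because it reached the token limit. The sketch below assumes a plain dictionary shaped like a choice in the completions JSON response; the helper name `was_truncated` is hypothetical. With the Python SDK, the same field is available as `response.choices[0].finish_reason`.

```python
def was_truncated(choice):
    """True when generation stopped because it hit the max_tokens limit."""
    return choice.get("finish_reason") == "length"


# Illustrative choice, not real service output.
choice = {"text": " The coldest ice cream", "finish_reason": "length"}

if was_truncated(choice):
    print("Response was cut off; consider raising max_tokens.")
```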

Azure OpenAI also performs content moderation on the prompt inputs and generated outputs. The prompts or responses might be filtered if harmful content is detected. For more information, see the content filter article.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

Next steps

- Learn more about how to generate the best completion in our How-to guide on completions.

- For more examples check out the Azure OpenAI Samples GitHub repository.

Prerequisites

- An Azure subscription - Create one for free

- Python 3.8 or later version

- The following Python libraries: os, requests, json

- An Azure OpenAI resource with a model deployed. For more information about model deployment, see the resource deployment guide.

Set up

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you'll need the following:

| Variable name | Value |
|---|---|
| ENDPOINT | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can also find the endpoint via the Deployments page in Azure AI Foundry portal. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |
| DEPLOYMENT-NAME | This value will correspond to the custom name you chose for your deployment when you deployed a model. This value can be found under Resource Management > Deployments in the Azure portal or via the Deployments page in Azure AI Foundry portal. |

Go to your resource in the Azure portal. The Endpoint and Keys can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

Important

We recommend Microsoft Entra ID authentication with managed identities for Azure resources to avoid storing credentials with your applications that run in the cloud.

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If using API keys, store them securely in Azure Key Vault, rotate the keys regularly, and restrict access to Azure Key Vault using role based access control and network access restrictions. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"

REST API

In a bash shell, run the following command. You will need to replace gpt-35-turbo-instruct with the deployment name you chose when you deployed the gpt-35-turbo-instruct model. Entering the model name will result in an error unless you chose a deployment name that is identical to the underlying model name.

curl $AZURE_OPENAI_ENDPOINT/openai/deployments/gpt-35-turbo-instruct/completions?api-version=2024-02-01 \
  -H "Content-Type: application/json" \
  -H "api-key: $AZURE_OPENAI_API_KEY" \
  -d "{\"prompt\": \"Once upon a time\"}"

With an example endpoint, the first line of the command would appear as follows: curl https://docs-test-001.openai.azure.com/openai/deployments/{YOUR-DEPLOYMENT_NAME_HERE}/completions?api-version=2024-02-01 \. If you encounter an error, double-check that you don't have a doubled / where your endpoint joins /openai/deployments.

If you want to run this command in a normal Windows command prompt, you need to remove the \ characters and the line breaks.
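The doubled slash usually appears when the endpoint value already ends with / and is joined directly to /openai/deployments. As an illustration, here is a minimal Python sketch that builds the same request with the standard library and trims the trailing slash first. The function name `build_completions_request` and the placeholder key are hypothetical; the URL pattern, headers, and body mirror the curl command.

```python
import json
import urllib.request


def build_completions_request(endpoint, deployment_name, api_key, prompt,
                              api_version="2024-02-01"):
    """Build (but do not send) a POST request for the completions endpoint."""
    # Trim a trailing "/" so the joined URL never contains "//openai".
    base = endpoint.rstrip("/")
    url = (
        f"{base}/openai/deployments/{deployment_name}"
        f"/completions?api-version={api_version}"
    )
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


request = build_completions_request(
    "https://docs-test-001.openai.azure.com/",  # example endpoint with a trailing slash
    "gpt-35-turbo-instruct",
    "PLACEHOLDER_KEY",
    "Once upon a time",
)
print(request.full_url)
```

To send the request, you could pass it to `urllib.request.urlopen(request)` and read the JSON body from the result.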

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Output

The output from the completions API will look as follows.

{
  "id": "ID of your call",
  "object": "text_completion",
  "created": 1675444965,
  "model": "gpt-35-turbo-instruct",
  "choices": [
    {
      "text": " there lived in a little village a woman who was known as the meanest",
      "index": 0,
      "finish_reason": "length",
      "logprobs": null
    }
  ],
  "usage": {
    "completion_tokens": 16,
    "prompt_tokens": 3,
    "total_tokens": 19
  }
}
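If you process this response in a script, the generated text is in `choices[0].text` and the token counts are under `usage`. The following is a minimal Python sketch that parses an abbreviated copy of the response; the helper name `summarize_completion` is hypothetical.

```python
import json

# Abbreviated response, shaped like the JSON returned by the completions API.
raw_response = """
{
  "object": "text_completion",
  "choices": [
    {"text": " there lived in a little village", "index": 0,
     "finish_reason": "length", "logprobs": null}
  ],
  "usage": {"completion_tokens": 16, "prompt_tokens": 3, "total_tokens": 19}
}
"""


def summarize_completion(payload):
    """Pull the generated text and total token count out of a parsed response."""
    first_choice = payload["choices"][0]
    return {
        "text": first_choice["text"],
        "total_tokens": payload["usage"]["total_tokens"],
    }


summary = summarize_completion(json.loads(raw_response))
print(summary["text"].strip(), "| tokens used:", summary["total_tokens"])
```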

The Azure OpenAI Service also performs content moderation on the prompt inputs and generated outputs. The prompts or responses may be filtered if harmful content is detected. For more information, see the content filter article.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

Next steps

- Learn more about how to generate the best completion in our How-to guide on completions.

- For more examples check out the Azure OpenAI Samples GitHub repository.

Prerequisites

- An Azure subscription - Create one for free

- You can use either the latest version, PowerShell 7, or Windows PowerShell 5.1.

- An Azure OpenAI Service resource with a model deployed. For more information about model deployment, see the resource deployment guide.

Retrieve key and endpoint

To successfully make a call against the Azure OpenAI service, you'll need the following:

| Variable name | Value |
|---|---|
| ENDPOINT | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can also find the endpoint via the Deployments page in Azure AI Foundry portal. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |
| DEPLOYMENT-NAME | This value will correspond to the custom name you chose for your deployment when you deployed a model. This value can be found under Resource Management > Deployments in the Azure portal or via the Deployments page in Azure AI Foundry portal. |

Go to your resource in the Azure portal. The Endpoint and Keys can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

Important

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If you use an API key, store it securely in Azure Key Vault. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

$Env:AZURE_OPENAI_API_KEY = 'YOUR_KEY_VALUE'

$Env:AZURE_OPENAI_ENDPOINT = 'YOUR_ENDPOINT'

Create a new PowerShell script

Create a new PowerShell file called quickstart.ps1. Then open it up in your preferred editor or IDE.

Replace the contents of quickstart.ps1 with the following code. Modify the code to add your key, endpoint, and deployment name:

# Azure OpenAI metadata variables
$openai = @{
    api_key     = $Env:AZURE_OPENAI_API_KEY
    api_base    = $Env:AZURE_OPENAI_ENDPOINT # your endpoint should look like the following https://YOUR_RESOURCE_NAME.openai.azure.com/
    api_version = '2024-02-01' # this may change in the future
    name        = 'YOUR-DEPLOYMENT-NAME-HERE' # This will correspond to the custom name you chose for your deployment when you deployed a model.
}

# Completion text
$prompt = 'Once upon a time...'

# Header for authentication
$headers = [ordered]@{
    'api-key' = $openai.api_key
}

# Adjust these values to fine-tune completions
$body = [ordered]@{
    prompt      = $prompt
    max_tokens  = 10
    temperature = 2
    top_p       = 0.5
} | ConvertTo-Json

# Send a completion call to generate an answer
$url = "$($openai.api_base)/openai/deployments/$($openai.name)/completions?api-version=$($openai.api_version)"

$response = Invoke-RestMethod -Uri $url -Headers $headers -Body $body -Method Post -ContentType 'application/json'
return "$prompt`n$($response.choices[0].text)"

Important

For production, use a secure way of storing and accessing your credentials like PowerShell Secret Management with Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Run the script using PowerShell:

./quickstart.ps1

Output

The output will include response text following the Once upon a time prompt. Azure OpenAI returned There was a world beyond the mist...where a in this example.

Once upon a time...

There was a world beyond the mist...where a

Run the code a few more times to see what other types of responses you get as the response won't always be the same.

Understanding your results

Since our example of Once upon a time... provides little context, it's normal for the model to not always return expected results. You can adjust the maximum number of tokens if the response seems unexpected or truncated.

Azure OpenAI also performs content moderation on the prompt inputs and generated outputs. The prompts or responses may be filtered if harmful content is detected. For more information, see the content filter article.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

Next steps

- Learn more about how to generate the best completion in our How-to guide on completions.

- For more examples check out the Azure OpenAI Samples GitHub repository.