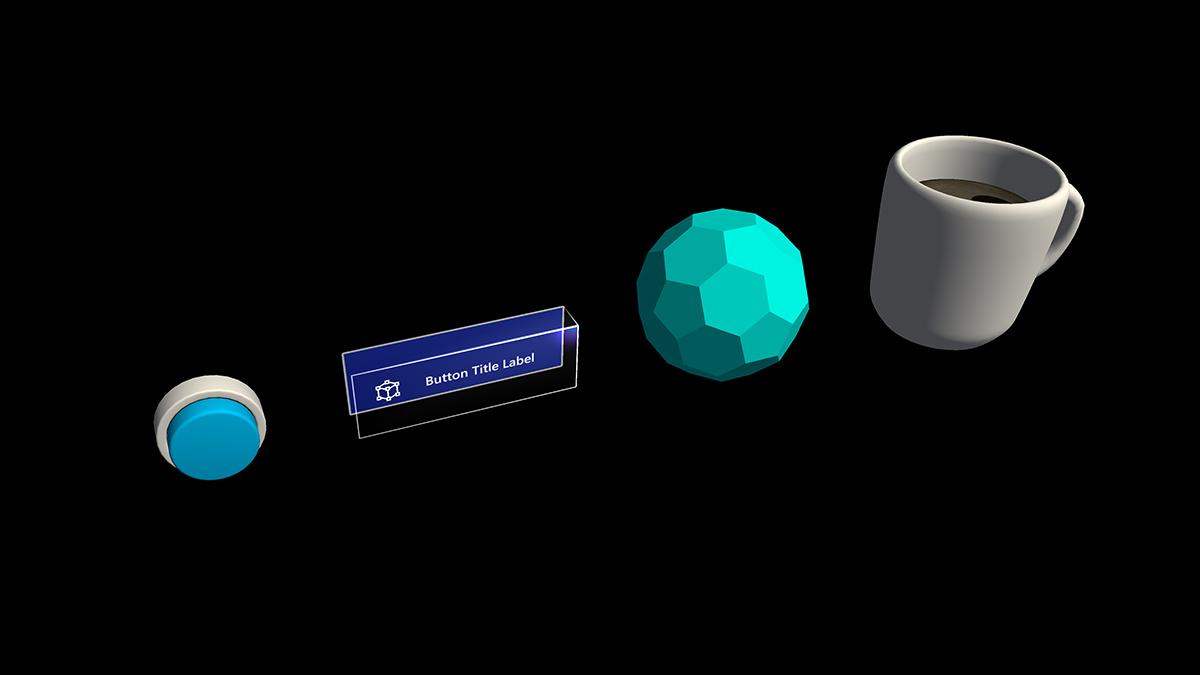

Interactable object

A button has long been a metaphor for triggering an event in the abstract 2D world. In the three-dimensional world of mixed reality, we're no longer confined to this abstraction. Anything can be an interactable object that triggers an event, from a coffee cup on a table to a balloon in midair. We still use traditional buttons in certain situations, such as in dialog UI. The visual representation of the button depends on the context.

Important properties of the interactable object

Visual cues

Visual cues are sensory cues from light, received by the eye, and processed by the visual system during visual perception. Since the visual system is dominant in many species, especially humans, visual cues are a large source of information in how the world is perceived.

Because holographic objects are blended with the real-world environment in mixed reality, it can be difficult to tell which objects you can interact with. For any interactable object in your experience, it's important to provide a differentiated visual cue for each input state. This helps users understand which parts of your experience are interactable and builds their confidence through a consistent interaction method.

Far interactions

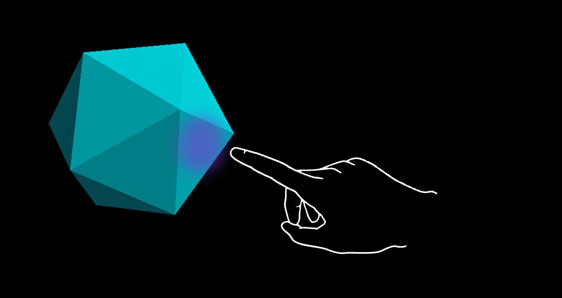

For any object that users can interact with by gaze, hand ray, or a motion controller's ray, we recommend a different visual cue for each of these three input states:

Default (Observation) state

Default idle state of the object.

The cursor isn't on the object. Hand isn't detected.

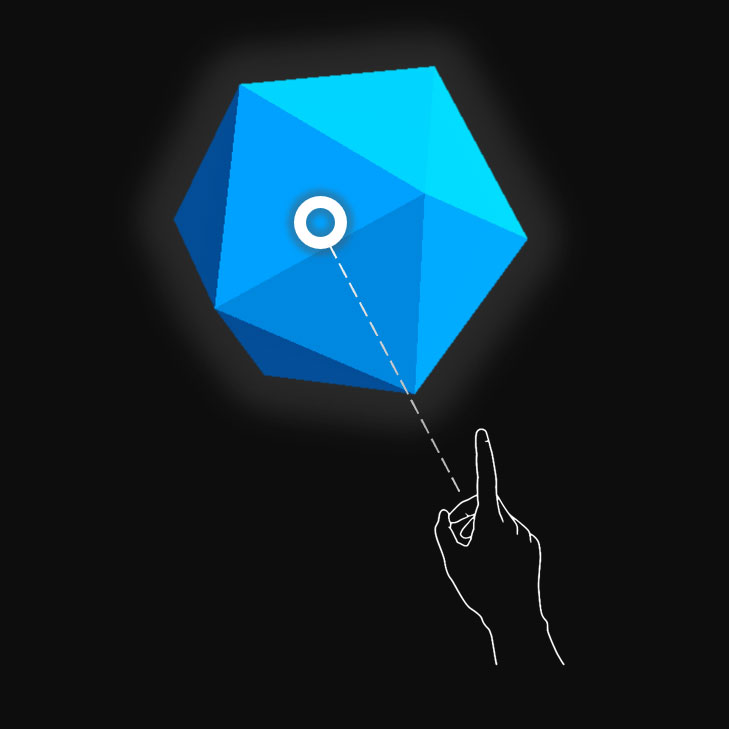

Targeted (Hover) state

When the object is targeted with gaze cursor, finger proximity or motion controller's pointer.

The cursor is on the object. Hand is detected, ready.

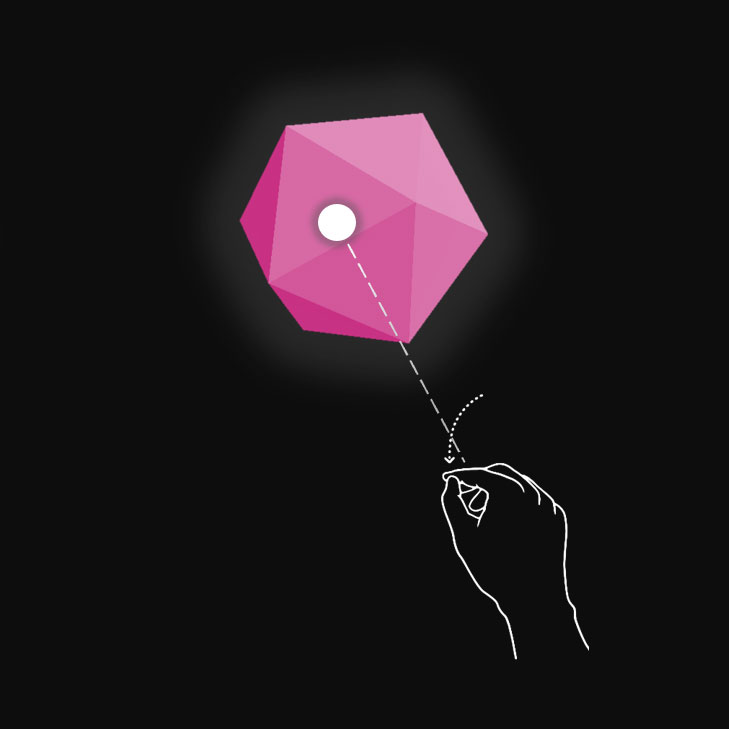

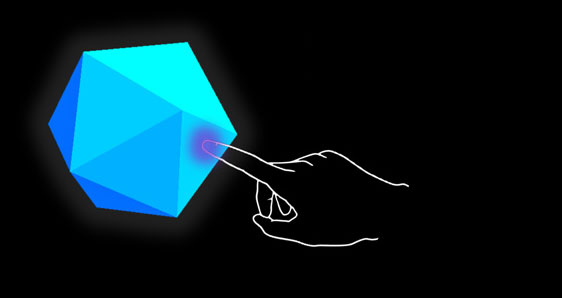

Pressed state

When the object is pressed with an air tap gesture, finger press or motion controller's select button.

The cursor is on the object. Hand is detected, air tapped.

You can use techniques such as highlighting or scaling to provide visual cues for the user’s input state. In mixed reality, you can find examples of visualizing different input states on the Start menu and with app bar buttons.

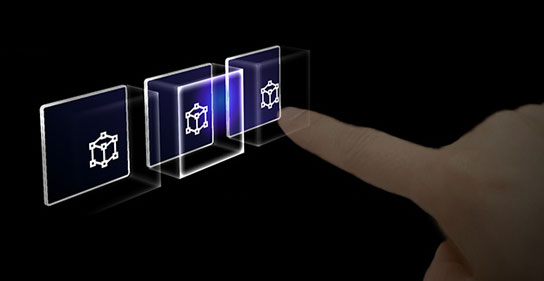

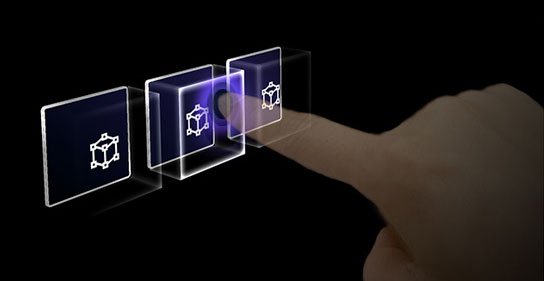

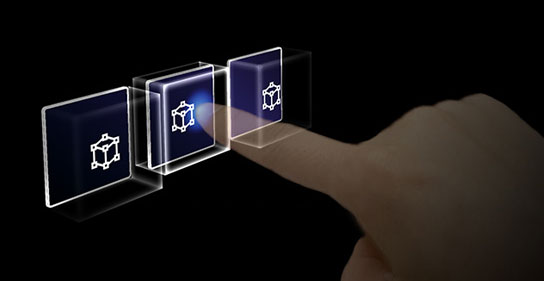

Here's what these states look like on a holographic button:

Default (Observation) state

Targeted (Hover) state

Pressed state
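The three far-interaction states can be sketched as a small state resolver. This is a minimal, engine-agnostic illustration in Python, not MRTK code: the names `InputState`, `STATE_VISUALS`, and `resolve_state`, and the highlight and scale values, are assumptions chosen for the example.

```python
from enum import Enum


class InputState(Enum):
    DEFAULT = "default"    # cursor or ray is not on the object
    TARGETED = "targeted"  # cursor or ray is on the object
    PRESSED = "pressed"    # object is targeted and select / air tap is held


# Hypothetical visual properties per state; the numbers are illustrative only.
STATE_VISUALS = {
    InputState.DEFAULT: {"highlight": 0.0, "scale": 1.00},
    InputState.TARGETED: {"highlight": 0.5, "scale": 1.05},
    InputState.PRESSED: {"highlight": 1.0, "scale": 0.95},
}


def resolve_state(is_targeted: bool, is_select_down: bool) -> InputState:
    """Pick the input state from two flags reported by the input system."""
    if is_targeted and is_select_down:
        return InputState.PRESSED
    if is_targeted:
        return InputState.TARGETED
    # A press that happens while the object is not targeted is ignored.
    return InputState.DEFAULT


def visuals_for(is_targeted: bool, is_select_down: bool) -> dict:
    """Look up the visual properties to apply for the current input flags."""
    return STATE_VISUALS[resolve_state(is_targeted, is_select_down)]
```

Keeping the state decision separate from the visual lookup means the same three states can drive highlighting, scaling, or any other cue without changing the input logic.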

Near interactions (direct)

HoloLens 2 supports articulated hand tracking input, which lets you interact with objects directly. Without haptic feedback and perfect depth perception, it can be hard to tell how far your hand is from an object or whether you're touching it. It's important to provide enough visual cues to communicate the state of the object, and in particular the state of your hands relative to that object.

Use visual feedback to communicate the following states:

- Default (Observation): Default idle state of the object.

- Hover: When a hand is near a hologram, change visuals to communicate that the hand is targeting the hologram.

- Distance and point of interaction: As the hand approaches a hologram, design feedback to communicate the projected point of interaction and how far the finger is from the object.

- Contact begins: Change visuals (light, color) to communicate that a touch has occurred

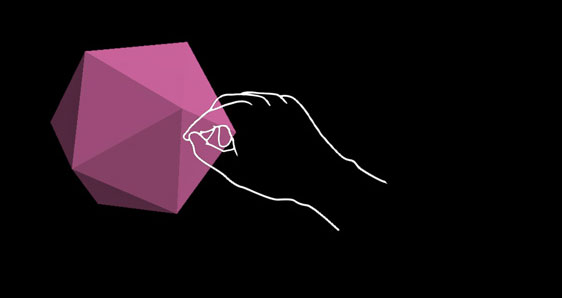

- Grasped: Change visuals (light, color) when the object is grasped

- Contact ends: Change visuals (light, color) when touch has ended

Hover (Far)

Highlighting based on the proximity of the hand.

Hover (Near)

Highlight size changes based on the distance to the hand.

Touch / press

Visual plus audio feedback.

Grasp

Visual plus audio feedback.
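As a rough illustration of the near-interaction states listed above, the sketch below classifies the state from the fingertip's distance to the object and derives a proximity-based highlight intensity. The thresholds (`hover_far_m`, `hover_near_m`), the state names, and the linear falloff are all assumptions made for this example; they are not values from HoloLens 2 or MRTK.

```python
def classify_near_state(distance_m, is_grasping=False,
                        hover_far_m=0.30, hover_near_m=0.10):
    """Classify the near-interaction state from fingertip distance in meters.

    The threshold defaults are hypothetical and would be tuned per experience.
    """
    if is_grasping:
        return "grasped"
    if distance_m <= 0.0:
        return "contact"
    if distance_m <= hover_near_m:
        return "hover_near"
    if distance_m <= hover_far_m:
        return "hover_far"
    return "default"


def hover_highlight_intensity(distance_m, hover_far_m=0.30):
    """Return 0.0 at the edge of the hover range, rising linearly to 1.0 at contact."""
    if distance_m >= hover_far_m:
        return 0.0
    return 1.0 - max(distance_m, 0.0) / hover_far_m
```

A renderer could call `classify_near_state` once per frame to choose which cue to show, and feed `hover_highlight_intensity` into the brightness of the highlight.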

A button on HoloLens 2 is an example of how the different input interaction states are visualized:

Default

Hover

Reveal a proximity-based lighting effect.

Touch

Show ripple effect.

Press

Move the front plate.

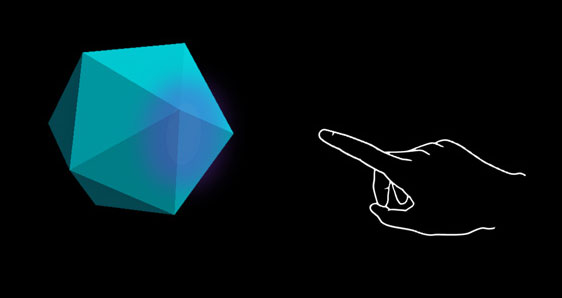

The "ring" visual cue on HoloLens 2

On HoloLens 2, there's an extra visual cue, which can help the user's perception of depth. A ring near their fingertip shows up and scales down as the fingertip gets closer to the object. The ring eventually converges into a dot when the pressed state is reached. This visual affordance helps the user understand how far they are from the object.
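The ring behavior described above can be approximated with a simple distance-to-size mapping. This is only a sketch under stated assumptions: the function name `ring_diameter_m`, the distance at which the ring appears, the diameters, and the linear interpolation are all hypothetical and do not reflect how HoloLens 2 actually implements the cue.

```python
def ring_diameter_m(distance_m, start_distance_m=0.10,
                    max_diameter_m=0.04, dot_diameter_m=0.004):
    """Return the ring diameter in meters, or None while the ring is hidden.

    The ring appears once the fingertip is within start_distance_m of the
    object and shrinks linearly to a dot as the distance reaches zero.
    All default values are illustrative assumptions.
    """
    if distance_m > start_distance_m:
        return None  # fingertip is too far away; no ring is shown
    t = max(distance_m, 0.0) / start_distance_m  # 1.0 far edge, 0.0 at the surface
    return dot_diameter_m + t * (max_diameter_m - dot_diameter_m)
```

Returning `None` for the hidden case keeps "no ring" distinct from "very small ring", which a caller would otherwise have to infer from the size.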

Video loop: Example of visual feedback based on proximity to a bounding box

Audio cues

For direct hand interactions, proper audio feedback can dramatically improve the user experience. Use audio feedback to communicate the following cues:

- Contact begins: Play sound when touch begins

- Contact ends: Play sound on touch end

- Grab begins: Play sound when grab starts

- Grab ends: Play sound when grab ends
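One way to drive these four cues is to compare the touch and grab flags between consecutive frames and emit an event on each change. The sketch below assumes a hypothetical `AUDIO_CUES` table and placeholder clip names; it shows the edge-detection idea only and does not play any sound.

```python
# Hypothetical mapping from cue to audio clip; the file names are placeholders.
AUDIO_CUES = {
    "contact_begins": "touch_begin.wav",
    "contact_ends": "touch_end.wav",
    "grab_begins": "grab_begin.wav",
    "grab_ends": "grab_end.wav",
}


def audio_events(prev_touching, touching, prev_grabbing, grabbing):
    """Return the cues triggered by the change from the previous frame to this one."""
    events = []
    if touching and not prev_touching:
        events.append("contact_begins")
    if prev_touching and not touching:
        events.append("contact_ends")
    if grabbing and not prev_grabbing:
        events.append("grab_begins")
    if prev_grabbing and not grabbing:
        events.append("grab_ends")
    return events


def clips_to_play(prev_touching, touching, prev_grabbing, grabbing):
    """Resolve the triggered cues to the clips an audio system would play."""
    return [AUDIO_CUES[e]
            for e in audio_events(prev_touching, touching, prev_grabbing, grabbing)]
```

Because sounds fire only on transitions, a finger that stays in contact with the object does not retrigger the clip every frame.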

Voice commanding

For any interactable objects, it's important to support alternative interaction options. By default, we recommend that voice commanding be supported for any objects that are interactable. To improve discoverability, you can also provide a tooltip during the hover state.

Image: Tooltip for the voice command

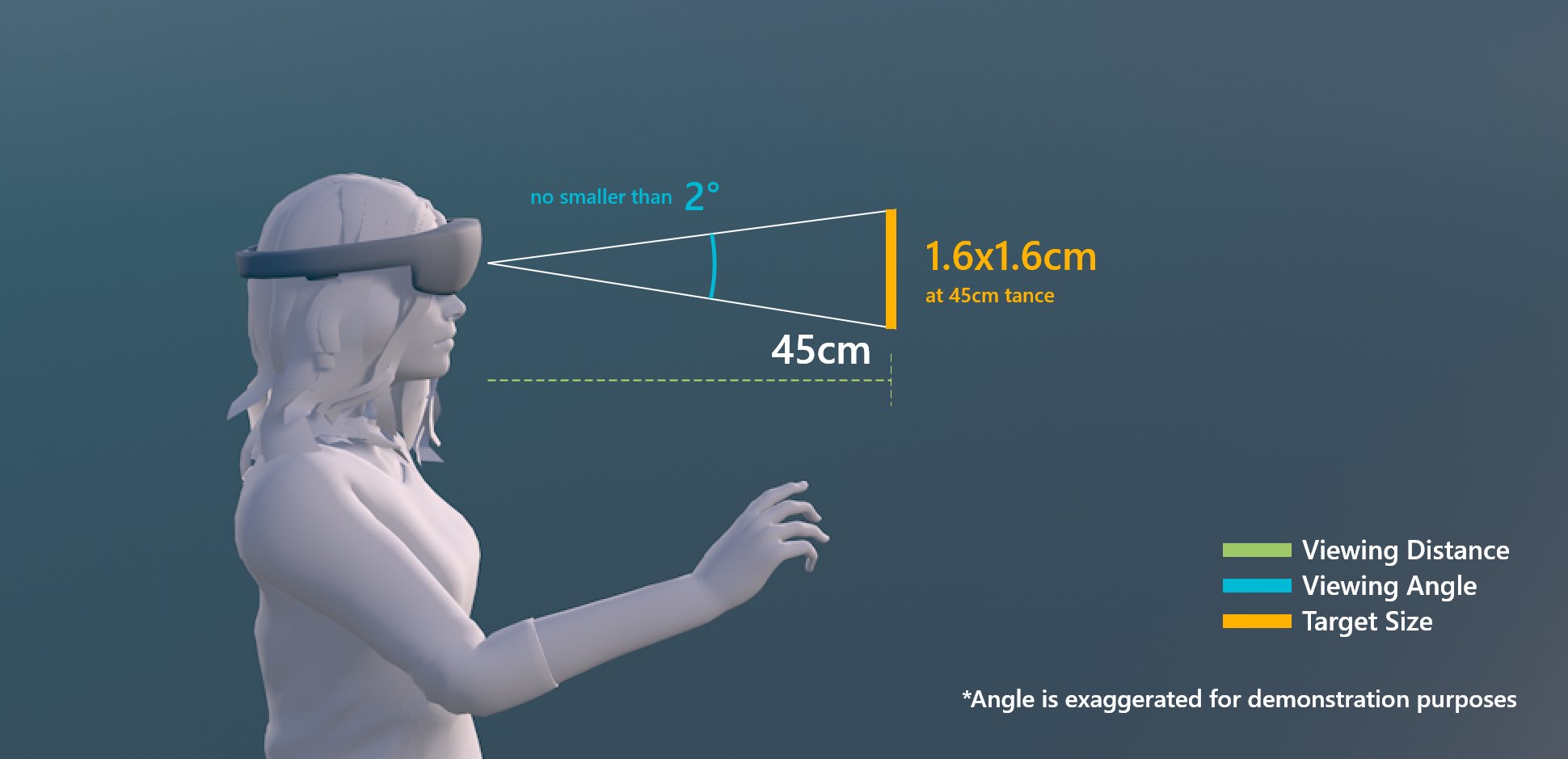

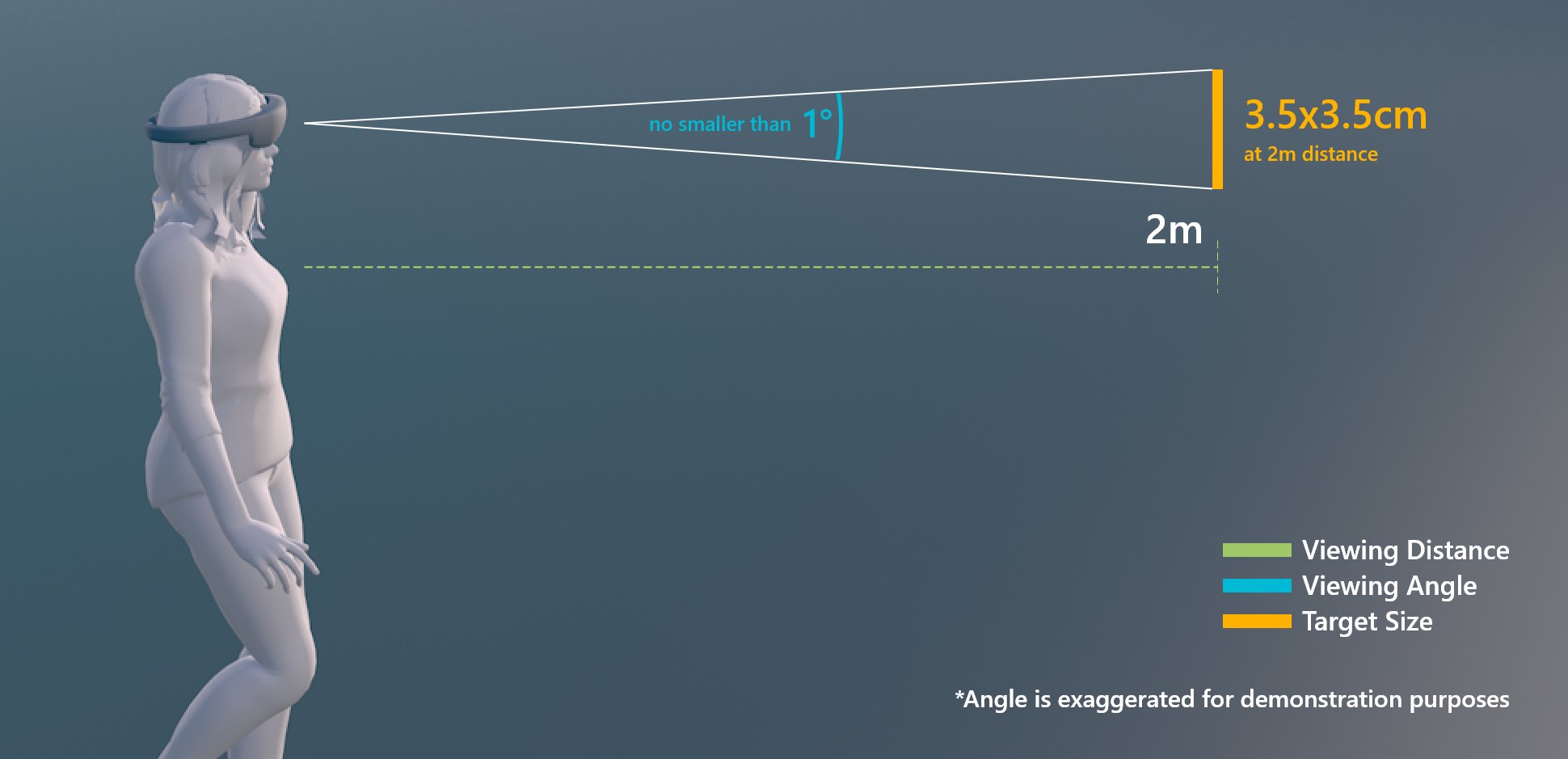

Sizing recommendations

To ensure all interactable objects can easily be touched, we recommend making sure the interactable meets a minimum size based on the distance it's placed from the user. This minimum is expressed as a visual angle, measured in degrees of visual arc. The visual angle depends on the distance between the user's eyes and the object. To keep the visual angle constant, the physical size of the target must change as the distance from the user changes. To determine the necessary physical size of an object at a given distance from the user, try using a visual angle calculator.

Below are the recommendations for minimum sizes of interactable content.

Target size for direct hand interaction

| Distance | Viewing angle | Size |
|---|---|---|
| 45 cm | no smaller than 2° | 1.6 x 1.6 cm |


Target size for hand ray or gaze interaction

| Distance | Viewing angle | Size |
|---|---|---|
| 2 m | no smaller than 1° | 3.5 x 3.5 cm |
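The recommended sizes follow from the standard visual angle relationship, size = 2 × distance × tan(angle / 2). The short sketch below applies that formula to the two recommendations; the function name `min_target_size_cm` is an assumption for illustration.

```python
import math


def min_target_size_cm(distance_cm, visual_angle_deg):
    """Physical size that subtends visual_angle_deg when viewed from distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(visual_angle_deg) / 2.0)


# Direct hand interaction: 2 degrees at 45 cm.
direct_cm = min_target_size_cm(45, 2)   # about 1.57 cm, rounds to 1.6 cm

# Hand ray or gaze interaction: 1 degree at 2 m (200 cm).
far_cm = min_target_size_cm(200, 1)     # about 3.49 cm, rounds to 3.5 cm
```

The same function can be used to scale a target for any other placement distance while keeping the visual angle at or above the recommended minimum.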


Interactable object in MRTK (Mixed Reality Toolkit) for Unity

In MRTK, you can use the Interactable script to make objects respond to various types of input interaction states. It supports various types of themes that allow you to define visual states by controlling object properties such as color, size, material, and shader.

MixedRealityToolkit's Standard shader provides various options, such as proximity light, that help you create visual and audio cues.