Secure an Azure Machine Learning inferencing environment with virtual networks (v1)

APPLIES TO:  Python SDK azureml v1

APPLIES TO:  Azure CLI ml extension v1

In this article, you learn how to secure inferencing environments with a virtual network in Azure Machine Learning. This article is specific to the SDK/CLI v1 deployment workflow of deploying a model as a web service.

Tip

This article is part of a series on securing an Azure Machine Learning workflow. See the other articles in this series:

- Virtual network overview

- Secure the workspace resources

- Secure the training environment

- Enable studio functionality

- Use custom DNS

- Use a firewall

For a tutorial on creating a secure workspace, see Tutorial: Create a secure workspace, Bicep template, or Terraform template.

In this article you learn how to secure the following inferencing resources in a virtual network:

- Default Azure Kubernetes Service (AKS) cluster

- Private AKS cluster

- AKS cluster with private link

Prerequisites

Read the Network security overview article to understand common virtual network scenarios and overall virtual network architecture.

An existing virtual network and subnet to use with your compute resources.

To deploy resources into a virtual network or subnet, your user account must have permissions to the following actions in Azure role-based access control (Azure RBAC):

- "Microsoft.Network/*/read" on the virtual network resource. This permission isn't needed for Azure Resource Manager (ARM) template deployments.

- "Microsoft.Network/virtualNetworks/join/action" on the virtual network resource.

- "Microsoft.Network/virtualNetworks/subnets/join/action" on the subnet resource.

For more information on Azure RBAC with networking, see the Networking built-in roles.

Important

Some of the Azure CLI commands in this article use the azure-cli-ml, or v1, extension for Azure Machine Learning. Support for the v1 extension will end on September 30, 2025. You're able to install and use the v1 extension until that date.

We recommend that you transition to the ml, or v2, extension before September 30, 2025. For more information on the v2 extension, see Azure Machine Learning CLI extension and Python SDK v2.

Limitations

Azure Container Instances

When your Azure Machine Learning workspace is configured with a private endpoint, deploying to Azure Container Instances in a VNet is not supported. Instead, consider using a Managed online endpoint with network isolation.

Azure Kubernetes Service

- If your AKS cluster is behind a VNet, your workspace and its associated resources (storage, key vault, Azure Container Registry) must have private endpoints or service endpoints in the same VNet as the AKS cluster. To add those private endpoints or service endpoints to your VNet, see Tutorial: Create a secure workspace.

- If your workspace has a private endpoint, the Azure Kubernetes Service cluster must be in the same Azure region as the workspace.

- Using a public fully qualified domain name (FQDN) with a private AKS cluster is not supported with Azure Machine Learning.

Azure Kubernetes Service

Important

To use an AKS cluster in a virtual network, first follow the prerequisites in Configure advanced networking in Azure Kubernetes Service (AKS).

To add AKS in a virtual network to your workspace, use the following steps:

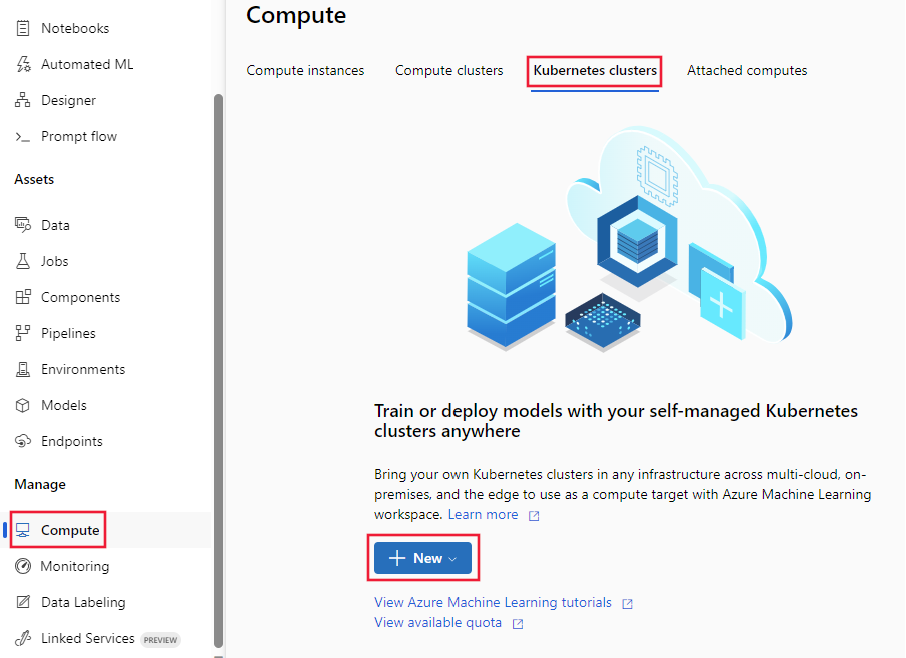

Sign in to Azure Machine Learning studio, and then select your subscription and workspace.

Select Compute on the left, Inference clusters from the center, and then select + New. Finally, select AksCompute.

From the Create AksCompute dialog, select Create new, the Location and the VM size to use for the cluster. Finally, select Next.

From the Configure Settings section, enter a Compute name, select the Cluster Purpose, Number of nodes, and then select Advanced to display the network settings. In the Configure virtual network area, set the following values:

Set the Virtual network to use.

Tip

If your workspace uses a private endpoint to connect to the virtual network, the Virtual network selection field is greyed out.

Set the Subnet to create the cluster in.

In the Kubernetes Service address range field, enter the Kubernetes service address range. This address range uses a Classless Inter-Domain Routing (CIDR) notation IP range to define the IP addresses that are available for the cluster. It must not overlap with any subnet IP ranges (for example, 10.0.0.0/16).

In the Kubernetes DNS service IP address field, enter the Kubernetes DNS service IP address. This IP address is assigned to the Kubernetes DNS service. It must be within the Kubernetes service address range (for example, 10.0.0.10).

In the Docker bridge address field, enter the Docker bridge address. This IP address is assigned to Docker Bridge. It must not be in any subnet IP ranges, or the Kubernetes service address range (for example, 172.18.0.1/16).
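The address constraints above can be checked before you create the cluster. The following is a minimal sketch that uses Python's standard ipaddress module. The function name check_aks_network_settings and the subnet value 10.1.0.0/24 are hypothetical and are not part of the Azure Machine Learning SDK.

```python
import ipaddress


def check_aks_network_settings(subnet_cidrs, service_cidr, dns_service_ip, docker_bridge_cidr):
    """Return a list of problems; an empty list means the values are consistent.

    Hypothetical helper for illustration only. It checks the three rules
    described in the text and nothing else.
    """
    problems = []
    subnets = [ipaddress.ip_network(cidr) for cidr in subnet_cidrs]
    service_net = ipaddress.ip_network(service_cidr)
    dns_ip = ipaddress.ip_address(dns_service_ip)
    # The Docker bridge value is an address plus a prefix (for example,
    # 172.18.0.1/16), so parse it as an interface and use its network.
    bridge_net = ipaddress.ip_interface(docker_bridge_cidr).network

    for subnet in subnets:
        # Rule 1: the Kubernetes service range must not overlap any subnet.
        if service_net.overlaps(subnet):
            problems.append(f"service range {service_net} overlaps subnet {subnet}")
        # Rule 3a: the Docker bridge range must not be in any subnet range.
        if bridge_net.overlaps(subnet):
            problems.append(f"Docker bridge {bridge_net} overlaps subnet {subnet}")

    # Rule 2: the DNS service IP must be inside the Kubernetes service range.
    if dns_ip not in service_net:
        problems.append(f"DNS service IP {dns_ip} is outside {service_net}")

    # Rule 3b: the Docker bridge range must not be in the service range.
    if bridge_net.overlaps(service_net):
        problems.append(f"Docker bridge {bridge_net} overlaps service range {service_net}")

    return problems
```

For example, check_aks_network_settings(["10.1.0.0/24"], "10.0.0.0/16", "10.0.0.10", "172.18.0.1/16") returns an empty list. The ipaddress module raises ValueError if a range is written with host bits set, such as 10.0.0.5/16 for the service range.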

When you deploy a model as a web service to AKS, a scoring endpoint is created to handle inferencing requests. Make sure that the network security group (NSG) that controls the virtual network has an inbound security rule enabled for the IP address of the scoring endpoint if you want to call it from outside the virtual network.

To find the IP address of the scoring endpoint, look at the scoring URI for the deployed service. For information on viewing the scoring URI, see Consume a model deployed as a web service.
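As a rough illustration, the IP address can be pulled out of a scoring URI with the Python standard library. This sketch assumes the scoring URI uses an IP address as its host. The helper name scoring_endpoint_ip and the example URI are hypothetical; in the v1 SDK the URI is typically read from the scoring_uri property of the deployed web service.

```python
import ipaddress
from urllib.parse import urlparse


def scoring_endpoint_ip(scoring_uri):
    """Return the IP address found in the host part of a scoring URI.

    Hypothetical helper for illustration. Raises ValueError if the URI has
    no host or if the host is a DNS name rather than an IP address.
    """
    host = urlparse(scoring_uri).hostname
    if host is None:
        raise ValueError(f"no host found in scoring URI: {scoring_uri!r}")
    return ipaddress.ip_address(host)


# Example with a made-up URI (203.0.113.0/24 is a documentation-only range).
example_uri = "http://203.0.113.10:80/api/v1/service/myservice/score"
print(scoring_endpoint_ip(example_uri))
```

The returned address is the one to allow in the inbound NSG rule when you call the endpoint from outside the virtual network.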

Important

Keep the default outbound rules for the NSG. For more information, see the default security rules in Security groups.

Important

The IP address shown in the image for the scoring endpoint will be different for your deployments. While the same IP is shared by all deployments to one AKS cluster, each AKS cluster will have a different IP address.

You can also use the Azure Machine Learning SDK to add Azure Kubernetes Service in a virtual network. If you already have an AKS cluster in a virtual network, attach it to the workspace as described in How to deploy to AKS. The following code creates a new AKS instance in the default subnet of a virtual network named mynetwork:

APPLIES TO:  Python SDK azureml v1

    from azureml.core import Workspace
    from azureml.core.compute import ComputeTarget, AksCompute

    # Load the workspace (assumes a config.json file is available locally)
    ws = Workspace.from_config()

    # Create the compute configuration and set virtual network information
    config = AksCompute.provisioning_configuration(location="eastus2")
    config.vnet_resourcegroup_name = "mygroup"
    config.vnet_name = "mynetwork"
    config.subnet_name = "default"
    config.service_cidr = "10.0.0.0/16"
    config.dns_service_ip = "10.0.0.10"
    config.docker_bridge_cidr = "172.17.0.1/16"

    # Create the compute target
    aks_target = ComputeTarget.create(workspace=ws,
                                      name="myaks",
                                      provisioning_configuration=config)

When the creation process is completed, you can run inference, or model scoring, on an AKS cluster behind a virtual network. For more information, see How to deploy to AKS.

For more information on using Role-Based Access Control with Kubernetes, see Use Azure RBAC for Kubernetes authorization.

Network contributor role

Important

If you create or attach an AKS cluster by providing a virtual network you previously created, you must grant the service principal (SP) or managed identity for your AKS cluster the Network Contributor role to the resource group that contains the virtual network.

To add the identity as network contributor, use the following steps:

To find the service principal or managed identity ID for AKS, use the following Azure CLI command. Replace <aks-cluster-name> with the name of the cluster. Replace <resource-group-name> with the name of the resource group that contains the AKS cluster:

    az aks show -n <aks-cluster-name> --resource-group <resource-group-name> --query servicePrincipalProfile.clientId

If this command returns a value of msi, use the following command to identify the principal ID for the managed identity:

    az aks show -n <aks-cluster-name> --resource-group <resource-group-name> --query identity.principalId

To find the ID of the resource group that contains your virtual network, use the following command. Replace <resource-group-name> with the name of the resource group that contains the virtual network:

    az group show -n <resource-group-name> --query id

To add the service principal or managed identity as a network contributor, use the following command. Replace <SP-or-managed-identity> with the ID returned for the service principal or managed identity. Replace <resource-group-id> with the ID returned for the resource group that contains the virtual network:

    az role assignment create --assignee <SP-or-managed-identity> --role 'Network Contributor' --scope <resource-group-id>

For more information on using the internal load balancer with AKS, see Use internal load balancer with Azure Kubernetes Service.
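The decision in the Network Contributor steps (use the client ID unless it is msi, in which case use the managed identity's principal ID) can be sketched in Python. The function names pick_assignee and role_assignment_command are hypothetical, and the sketch only builds the argument list for the az role assignment create command described earlier. It does not run the command.

```python
def pick_assignee(client_id, principal_id=None):
    """Choose which identity receives the Network Contributor role.

    client_id is the value of servicePrincipalProfile.clientId and
    principal_id is the value of identity.principalId, both as returned by
    the az aks show queries. Hypothetical helper for illustration.
    """
    if client_id == "msi":
        # The cluster uses a managed identity, so its principal ID is needed.
        if not principal_id:
            raise ValueError("clientId is 'msi' but no principal ID was supplied")
        return principal_id
    return client_id


def role_assignment_command(assignee, resource_group_id):
    """Build the argument list for the role assignment command."""
    return [
        "az", "role", "assignment", "create",
        "--assignee", assignee,
        "--role", "Network Contributor",
        "--scope", resource_group_id,
    ]
```

The returned list can be passed to subprocess.run if you want to script the assignment. The scope is the ID of the resource group that contains the virtual network, not the resource group of the AKS cluster.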

Secure VNet traffic

There are two approaches to isolate traffic to and from the AKS cluster to the virtual network:

- Private AKS cluster: This approach uses Azure Private Link to secure communications with the cluster for deployment/management operations.

- Internal AKS load balancer: This approach configures the endpoint for your deployments to AKS to use a private IP within the virtual network.

Private AKS cluster

By default, AKS clusters have a control plane, or API server, with public IP addresses. You can configure AKS to use a private control plane by creating a private AKS cluster. For more information, see Create a private Azure Kubernetes Service cluster.

After you create the private AKS cluster, attach the cluster to the virtual network to use with Azure Machine Learning.

Internal AKS load balancer

By default, AKS deployments use a public load balancer. In this section, you learn how to configure AKS to use an internal load balancer. An internal (or private) load balancer is used where only private IPs are allowed as the frontend. Internal load balancers are used to load balance traffic inside a virtual network.

A private load balancer is enabled by configuring AKS to use an internal load balancer.

Enable private load balancer

Important

You cannot enable private IP when creating the Azure Kubernetes Service cluster in Azure Machine Learning studio. You can create one with an internal load balancer when using the Python SDK or Azure CLI extension for machine learning.

The following example demonstrates how to create a new AKS cluster with a private IP/internal load balancer using the SDK:

APPLIES TO:  Python SDK azureml v1

    from azureml.core import Workspace
    from azureml.core.compute import AksCompute, ComputeTarget
    from azureml.core.compute_target import ComputeTargetException

    # Load the workspace (assumes a config.json file is available locally)
    ws = Workspace.from_config()
    aks_cluster_name = "myaks"

    # Verify that cluster does not exist already
    try:
        aks_target = AksCompute(workspace=ws, name=aks_cluster_name)
        print("Found existing aks cluster")
    except ComputeTargetException:
        print("Creating new aks cluster")

        # Subnet to use for AKS
        subnet_name = "default"

        # Create AKS configuration
        prov_config = AksCompute.provisioning_configuration(load_balancer_type="InternalLoadBalancer")

        # Set info for existing virtual network to create the cluster in
        prov_config.vnet_resourcegroup_name = "myvnetresourcegroup"
        prov_config.vnet_name = "myvnetname"
        prov_config.service_cidr = "10.0.0.0/16"
        prov_config.dns_service_ip = "10.0.0.10"
        prov_config.subnet_name = subnet_name
        prov_config.load_balancer_subnet = subnet_name
        prov_config.docker_bridge_cidr = "172.17.0.1/16"

        # Create compute target
        aks_target = ComputeTarget.create(workspace=ws, name=aks_cluster_name, provisioning_configuration=prov_config)

        # Wait for the operation to complete
        aks_target.wait_for_completion(show_output=True)

When attaching an existing cluster to your workspace, use the load_balancer_type and load_balancer_subnet parameters of AksCompute.attach_configuration() to configure the load balancer.

For information on attaching a cluster, see Attach an existing AKS cluster.
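As a sketch of the attach scenario, the following code passes the load_balancer_type and load_balancer_subnet parameters to AksCompute.attach_configuration(). The helper names, the resource group, the cluster name, and the compute name are hypothetical, and the code assumes the azureml-core package is installed and that the existing cluster already sits in the target virtual network.

```python
def internal_lb_attach_kwargs(resource_group, cluster_name, subnet_name):
    """Collect the attach_configuration() arguments for an internal load balancer.

    Hypothetical helper for illustration only.
    """
    return {
        "resource_group": resource_group,
        "cluster_name": cluster_name,
        "load_balancer_type": "InternalLoadBalancer",
        "load_balancer_subnet": subnet_name,
    }


def attach_with_internal_load_balancer(ws, compute_name, resource_group,
                                       cluster_name, subnet_name="default"):
    """Attach an existing AKS cluster to the workspace ws with an internal load balancer."""
    # Imported here so the helper above can be used without the v1 SDK installed.
    from azureml.core.compute import AksCompute, ComputeTarget

    attach_config = AksCompute.attach_configuration(
        **internal_lb_attach_kwargs(resource_group, cluster_name, subnet_name)
    )
    aks_target = ComputeTarget.attach(ws, compute_name, attach_config)
    # Wait for the attach operation to complete
    aks_target.wait_for_completion(show_output=True)
    return aks_target
```

A call might look like attach_with_internal_load_balancer(ws, "myaks", "myresourcegroup", "myexistingcluster"), where ws is a Workspace object and every name is a placeholder for your own values.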

Limit outbound connectivity from the virtual network

If you don't want to use the default outbound rules and you do want to limit the outbound access of your virtual network, you must allow access to Azure Container Registry. For example, make sure that your network security group (NSG) contains a rule that allows access to the AzureContainerRegistry.RegionName service tag, where {RegionName} is the name of an Azure region.

Next steps

This article is part of a series on securing an Azure Machine Learning workflow. See the other articles in this series: