Start, monitor, and track run history

APPLIES TO: Python SDK azureml v1

APPLIES TO: Azure CLI ml extension v1

The Azure Machine Learning SDK for Python v1 and the Azure Machine Learning CLI provide various methods to monitor, organize, and track your runs for training and experimentation. Your ML run history is an important part of an explainable and repeatable ML development process.

Tip

For information on using studio, see Track, monitor, and analyze runs with studio.

If you are using Azure Machine Learning SDK v2, see the following articles:

This article shows how to do the following tasks:

- Monitor run performance.

- Tag and find runs.

- Run search over your run history.

- Cancel or fail runs.

- Create child runs.

- Monitor the run status by email notification.

Tip

If you're looking for information on monitoring the Azure Machine Learning service and associated Azure services, see How to monitor Azure Machine Learning. If you're looking for information on monitoring models deployed as web services, see Collect model data and Monitor with Application Insights.

Prerequisites

You'll need the following items:

An Azure subscription. If you don't have an Azure subscription, create a free account before you begin. Try the free or paid version of Azure Machine Learning today.

The Azure Machine Learning SDK for Python (version 1.0.21 or later). To install or update to the latest version of the SDK, see Install or update the SDK.

To check your version of the Azure Machine Learning SDK, use the following code:

import azureml.core
print(azureml.core.VERSION)

The Azure CLI and CLI extension for Azure Machine Learning.

Important

Some of the Azure CLI commands in this article use the azure-cli-ml, or v1, extension for Azure Machine Learning. Support for the v1 extension will end on September 30, 2025. You will be able to install and use the v1 extension until that date.

We recommend that you transition to the ml, or v2, extension before September 30, 2025. For more information on the v2 extension, see Azure ML CLI extension and Python SDK v2.

Monitor run performance

Start a run and its logging process

APPLIES TO: Python SDK azureml v1

Set up your experiment by importing the Workspace, Experiment, Run, and ScriptRunConfig classes from the azureml.core package.

import azureml.core
from azureml.core import Workspace, Experiment, Run
from azureml.core import ScriptRunConfig

ws = Workspace.from_config()
exp = Experiment(workspace=ws, name="explore-runs")

Start a run and its logging process with the start_logging() method.

notebook_run = exp.start_logging()
notebook_run.log(name="message", value="Hello from run!")

Monitor the status of a run

APPLIES TO: Python SDK azureml v1

Get the status of a run with the get_status() method.

print(notebook_run.get_status())

To get the run ID, execution time, and other details about the run, use the get_details() method.

print(notebook_run.get_details())

When your run finishes successfully, use the complete() method to mark it as completed.

notebook_run.complete()
print(notebook_run.get_status())

If you use Python's with...as design pattern, the run automatically marks itself as completed when it goes out of scope. You don't need to manually mark the run as completed.

with exp.start_logging() as notebook_run:
    notebook_run.log(name="message", value="Hello from run!")
    print(notebook_run.get_status())

print(notebook_run.get_status())
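The automatic completion comes from Python's context-manager protocol (__enter__ and __exit__). The following is a minimal sketch of that mechanism, not the SDK's implementation. SketchRun is a hypothetical stand-in that marks itself completed when the with block exits normally. This sketch also marks the run as failed when an exception escapes the block. That behavior is an assumption made for illustration and doesn't describe what the real Run class does in that case.

```python
class SketchRun:
    """Hypothetical stand-in for a run; not the azureml Run class."""

    def __init__(self):
        self.status = "Running"
        self.metrics = {}

    def log(self, name, value):
        self.metrics[name] = value

    def get_status(self):
        return self.status

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc, tb):
        # Illustrative assumption: complete on a clean exit, fail otherwise.
        self.status = "Completed" if exc_type is None else "Failed"
        return False  # never swallow exceptions raised inside the block


with SketchRun() as run:
    run.log(name="message", value="Hello from run!")
    print(run.get_status())  # Running

print(run.get_status())  # Completed
```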

Tag and find runs

In Azure Machine Learning, you can use properties and tags to help organize and query your runs for important information.

Add properties and tags

APPLIES TO: Python SDK azureml v1

The examples in this section use local_run, a run object such as the one returned by exp.submit(src) in the Cancel or fail runs section.

To add searchable metadata to your runs, use the add_properties() method. For example, the following code adds the "author" property to the run:

local_run.add_properties({"author": "azureml-user"})
print(local_run.get_properties())

Properties are immutable, so they create a permanent record for auditing purposes. The following code example results in an error, because we already added "azureml-user" as the "author" property value in the preceding code:

try:
    local_run.add_properties({"author": "different-user"})
except Exception as e:
    print(e)

Unlike properties, tags are mutable. To add searchable and meaningful information for consumers of your experiment, use the tag() method.

local_run.tag("quality", "great run")
print(local_run.get_tags())

local_run.tag("quality", "fantastic run")
print(local_run.get_tags())

You can also add simple string tags. When these tags appear in the tag dictionary as keys, they have a value of None.

local_run.tag("worth another look")
print(local_run.get_tags())

Query properties and tags

You can query runs within an experiment to return a list of runs that match specific properties and tags.

APPLIES TO: Python SDK azureml v1

list(exp.get_runs(properties={"author": "azureml-user"}, tags={"quality": "fantastic run"}))
list(exp.get_runs(properties={"author": "azureml-user"}, tags="worth another look"))
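To make these rules concrete, here is a minimal in-memory sketch of the behavior this section describes. Properties can't be overwritten, tags can, a simple string tag is stored with the value None, and a query matches only when every requested property and tag agrees. The RunRecord class and matches() function are hypothetical illustrations, not part of the SDK. The sketch assumes that passing a plain string as tags matches any run that has a tag with that name.

```python
class RunRecord:
    """Hypothetical in-memory record of one run's metadata (not an SDK class)."""

    def __init__(self, run_id):
        self.id = run_id
        self.properties = {}
        self.tags = {}

    def add_properties(self, props):
        # Properties are write-once: reject any key that is already set.
        for key in props:
            if key in self.properties:
                raise ValueError(f"Property {key!r} is immutable")
        self.properties.update(props)

    def tag(self, key, value=None):
        # Tags are mutable; a bare string tag is stored with the value None.
        self.tags[key] = value


def matches(run, properties=None, tags=None):
    """Return True if the run has every requested property and tag."""
    for key, value in (properties or {}).items():
        if key not in run.properties or run.properties[key] != value:
            return False
    if isinstance(tags, str):
        # Assumption: a plain string only checks that a tag with that name exists.
        return tags in run.tags
    for key, value in (tags or {}).items():
        if key not in run.tags or run.tags[key] != value:
            return False
    return True


runs = [RunRecord("run-1"), RunRecord("run-2")]
runs[0].add_properties({"author": "azureml-user"})
runs[0].tag("quality", "great run")
runs[0].tag("quality", "fantastic run")  # overwrites the earlier value
runs[0].tag("worth another look")        # stored with the value None
runs[1].add_properties({"author": "someone-else"})

hits = [r.id for r in runs
        if matches(r, {"author": "azureml-user"}, {"quality": "fantastic run"})]
print(hits)  # ['run-1']
```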

Cancel or fail runs

If you notice a mistake or if your run is taking too long to finish, you can cancel the run.

APPLIES TO: Python SDK azureml v1

To cancel a run using the SDK, use the cancel() method:

src = ScriptRunConfig(source_directory='.', script='hello_with_delay.py')
local_run = exp.submit(src)
print(local_run.get_status())

local_run.cancel()
print(local_run.get_status())

If your run finishes, but it contains an error (for example, the incorrect training script was used), you can use the fail() method to mark it as failed.

local_run = exp.submit(src)
local_run.fail()
print(local_run.get_status())
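You decide when a run is taking too long. The following sketch shows one way to automate the cancel decision. cancel_if_overdue() is a hypothetical helper that relies only on the get_status() and cancel() methods used above, so it accepts either an SDK run or the FakeRun stand-in defined here for illustration. The set of terminal status names ("Completed", "Failed", "Canceled") and the time budget are assumptions. Check the status values your runs actually report.

```python
import time

# Assumed terminal statuses; verify against the values get_status() returns.
TERMINAL_STATUSES = {"Completed", "Failed", "Canceled"}


def cancel_if_overdue(run, started_at, budget_seconds, now=None):
    """Cancel `run` if it is still active after `budget_seconds`.

    `run` only needs get_status() and cancel() methods.
    Returns True if cancel() was called.
    """
    now = time.monotonic() if now is None else now
    if run.get_status() in TERMINAL_STATUSES:
        return False  # already finished; nothing to cancel
    if now - started_at <= budget_seconds:
        return False  # still within the time budget
    run.cancel()
    return True


class FakeRun:
    """Hypothetical stand-in used only to exercise the helper."""

    def __init__(self, status):
        self.status = status

    def get_status(self):
        return self.status

    def cancel(self):
        self.status = "Canceled"


slow_run = FakeRun("Running")
print(cancel_if_overdue(slow_run, started_at=0.0, budget_seconds=600, now=900.0))  # True
print(slow_run.get_status())  # Canceled
```

With a real run, you would record started_at = time.monotonic() right after exp.submit(src) and call the helper periodically.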

Create child runs

APPLIES TO: Python SDK azureml v1

Create child runs to group together related runs, such as for different hyperparameter-tuning iterations.

Note

Child runs can only be created using the SDK.

This code example uses the hello_with_children.py script to create a batch of five child runs from within a submitted run by using the child_run() method:

!more hello_with_children.py

src = ScriptRunConfig(source_directory='.', script='hello_with_children.py')
local_run = exp.submit(src)
local_run.wait_for_completion(show_output=True)
print(local_run.get_status())

You can also create child runs directly from an interactive parent run started with start_logging():

with exp.start_logging() as parent_run:
    for c in range(5):
        with parent_run.child_run() as child:
            child.log(name="Hello from child run", value=c)

Note

As they move out of scope, child runs are automatically marked as completed.

To create many child runs efficiently, use the create_children() method. Because each creation results in a network call, creating a batch of runs is more efficient than creating them one by one.

Submit child runs

Child runs can also be submitted from a parent run. This allows you to create hierarchies of parent and child runs. You can't create a parentless child run: even if the parent run does nothing but launch child runs, it's still necessary to create the hierarchy. The statuses of all runs are independent: a parent can be in the "Completed" successful state even if one or more child runs were canceled or failed.
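Because a parent's status says nothing about its children, you need to check the children yourself if you want one verdict for the whole hierarchy. The following is a minimal sketch. It assumes you have already collected each child's status string, for example with [child.get_status() for child in parent_run.get_children()]. summarize_statuses() is a hypothetical helper, not an SDK method.

```python
from collections import Counter


def summarize_statuses(statuses):
    """Count child statuses and report whether every child completed."""
    statuses = list(statuses)
    counts = Counter(statuses)
    all_completed = len(statuses) > 0 and counts["Completed"] == len(statuses)
    return counts, all_completed


# Hypothetical statuses for three children of a "Completed" parent.
counts, ok = summarize_statuses(["Completed", "Failed", "Completed"])
print(counts)  # Counter({'Completed': 2, 'Failed': 1})
print(ok)      # False
```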

You may wish your child runs to use a different run configuration than the parent run. For instance, you might use a less-powerful, CPU-based configuration for the parent, while using GPU-based configurations for your children. Another common wish is to pass each child different arguments and data. To customize a child run, create a ScriptRunConfig object for the child run.

Important

To submit a child run from a parent run on a remote compute, you must sign in to the workspace in the parent run code first. By default, the run context object in a remote run does not have credentials to submit child runs. Use a service principal or managed identity credentials to sign in. For more information on authenticating, see set up authentication.

The following code:

- Retrieves a compute resource named "gpu-cluster" from the workspace ws

- Iterates over different argument values to be passed to the children ScriptRunConfig objects

- Creates and submits a new child run, using the custom compute resource and argument

- Blocks until all of the child runs complete

# parent.py
# This script controls the launching of child scripts
from azureml.core import Run, ScriptRunConfig

run = Run.get_context()

# Assumes ws is a Workspace object that this script has already signed in to
# (see the preceding Important note about authentication)
compute_target = ws.compute_targets["gpu-cluster"]

child_args = ['Apple', 'Banana', 'Orange']
for arg in child_args:
    run.log('Status', f'Launching {arg}')
    child_config = ScriptRunConfig(source_directory=".", script='child.py',
                                   arguments=['--fruit', arg],
                                   compute_target=compute_target)
    # Starts the run asynchronously
    run.submit_child(child_config)

# Experiment will "complete" successfully at this point.
# Instead of returning immediately, block until child runs complete
for child in run.get_children():
    child.wait_for_completion()
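The parent script passes each child a --fruit argument, but child.py itself isn't shown. The following is a hypothetical minimal child.py that reads that argument with argparse. In a real child run, you would also call Run.get_context() and log to it. That part is left as a comment so the sketch runs without the SDK installed. The "unknown" default and the choice to ignore extra arguments are assumptions of this sketch.

```python
# child.py (hypothetical sketch)
import argparse


def parse_args(argv=None):
    parser = argparse.ArgumentParser(description="Child run for one fruit")
    parser.add_argument("--fruit", default="unknown",
                        help="Value passed by parent.py")
    # parse_known_args ignores any extra arguments instead of exiting
    args, _unknown = parser.parse_known_args(argv)
    return args


def main(argv=None):
    args = parse_args(argv)
    # In an actual child run you would typically also do:
    #   from azureml.core import Run
    #   run = Run.get_context()
    #   run.log("fruit", args.fruit)
    message = f"Processing {args.fruit}"
    print(message)
    return message


if __name__ == "__main__":
    main()
```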

To create many child runs with identical configurations, arguments, and inputs efficiently, use the create_children() method. Because each creation results in a network call, creating a batch of runs is more efficient than creating them one by one.

Within a child run, you can view the parent run ID:

# In child run script
from azureml.core import Run

child_run = Run.get_context()
print(child_run.parent.id)

Query child runs

To query the child runs of a specific parent, use the get_children() method. The recursive=True argument allows you to query a nested tree of children and grandchildren.

print(list(parent_run.get_children()))
print(list(parent_run.get_children(recursive=True)))
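To show what recursive=True adds, the following sketch walks a hypothetical tree of runs depth-first. It yields the direct children and, when recursive is set, the grandchildren and deeper descendants. The Node class and walk_children() function are illustrations only. The order in which the SDK returns runs isn't specified here and may differ.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical stand-in for a run that may have child runs."""
    id: str
    children: list = field(default_factory=list)


def walk_children(node, recursive=False):
    """Yield direct children, plus all deeper descendants if recursive."""
    for child in node.children:
        yield child
        if recursive:
            yield from walk_children(child, recursive=True)


root = Node("parent", [Node("child-0", [Node("grandchild-0")]), Node("child-1")])
print([n.id for n in walk_children(root)])
# ['child-0', 'child-1']
print([n.id for n in walk_children(root, recursive=True)])
# ['child-0', 'grandchild-0', 'child-1']
```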

Log to parent or root run

You can use the Run.parent field to access the run that launched the current child run. A common use case for Run.parent is to combine log results in a single place. Child runs execute asynchronously, and there's no guarantee of ordering or synchronization beyond the ability of the parent to wait for its child runs to complete.

# In child (or even grandchild) run
from azureml.core import Run

def root_run(self: Run) -> Run:
    if self.parent is None:
        return self
    return root_run(self.parent)

current_child_run = Run.get_context()
root_run(current_child_run).log("MyMetric", f"Data from child run {current_child_run.id}")
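The recursive root_run() above works well for typical hierarchies. An equivalent iterative version follows the same parent links in a loop, so it isn't bounded by Python's recursion limit if a tree is unusually deep. To keep the sketch runnable without the SDK, it uses a hypothetical StubRun class with only id and parent attributes. With the SDK, you would pass the object returned by Run.get_context() instead.

```python
class StubRun:
    """Hypothetical stand-in exposing only the attributes used here."""

    def __init__(self, run_id, parent=None):
        self.id = run_id
        self.parent = parent


def find_root(run):
    """Follow `parent` links until reaching a run that has no parent."""
    while run.parent is not None:
        run = run.parent
    return run


root = StubRun("root")
child = StubRun("child", parent=root)
grandchild = StubRun("grandchild", parent=child)
print(find_root(grandchild).id)  # root
```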

Monitor the run status by email notification

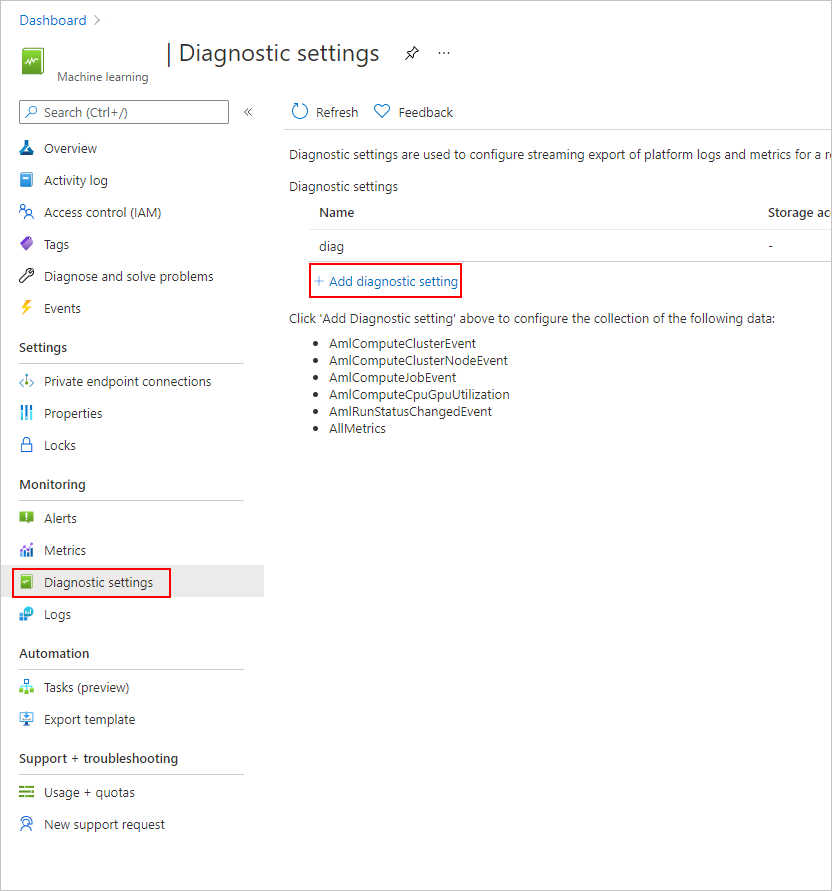

In the Azure portal, in the left navigation bar, select the Monitor tab.

Select Diagnostic settings and then select + Add diagnostic setting.

In the Diagnostic setting:

- Under Category details, select AmlRunStatusChangedEvent.

- Under Destination details, select Send to Log Analytics workspace and specify the Subscription and Log Analytics workspace.

Note

The Azure Log Analytics workspace is a different type of Azure resource than the Azure Machine Learning service workspace. If there are no options in that list, you can create a Log Analytics workspace.

In the Logs tab, add a New alert rule.

See how to create and manage log alerts using Azure Monitor.

Example notebooks

The following notebooks demonstrate the concepts in this article:

To learn more about the logging APIs, see the logging API notebook.

For more information about managing runs with the Azure Machine Learning SDK, see the manage runs notebook.

Next steps

- To learn how to log metrics for your experiments, see Log metrics during training runs.

- To learn how to monitor resources and logs from Azure Machine Learning, see Monitoring Azure Machine Learning.