Applies to: SQL Server on Linux

Starting with SQL Server 2017 (14.x), SQL Server is supported on both Linux and Windows. Like Windows-based SQL Server deployments, SQL Server databases and instances need to be highly available on Linux. This article covers the basics of Pacemaker with Corosync, and how to plan and deploy it for SQL Server configurations.

HA add-on/extension basics

All currently supported distributions ship a high availability add-on/extension based on the Pacemaker clustering stack. This stack incorporates two key components: Pacemaker and Corosync. The components of the stack are:

- Pacemaker. The core clustering component, which coordinates activity across the clustered machines.

- Corosync. A framework and set of APIs that provides things like quorum, the ability to restart failed processes, and so on.

- libQB. Provides things like logging.

- Resource agent. Specific functionality provided so that an application can integrate with Pacemaker.

- Fence agent. Scripts and functionality that help isolate nodes and deal with them if they're having issues.

Note

In the Linux world, the cluster stack is commonly referred to as Pacemaker.

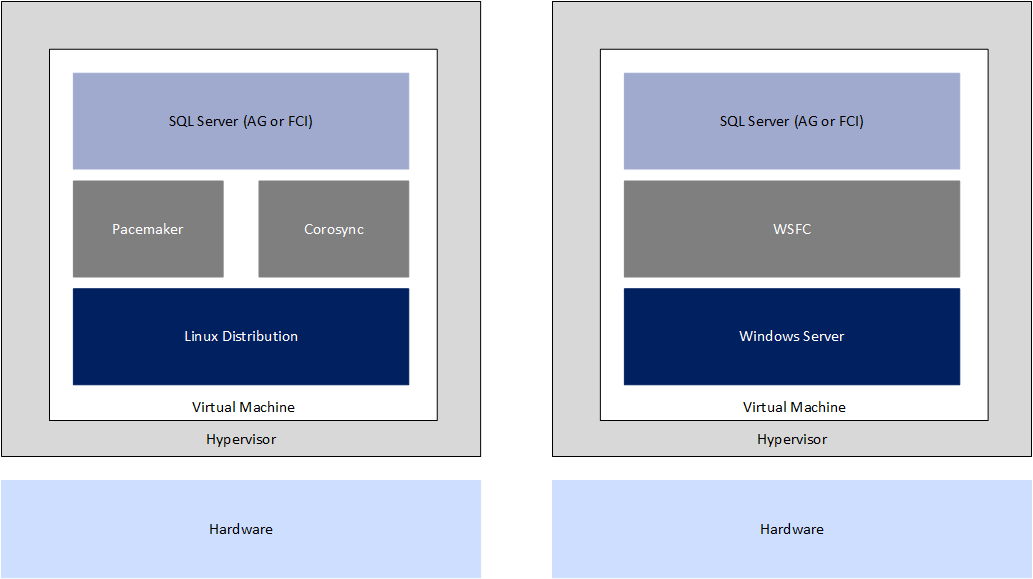

This solution is similar in some ways to deploying clustered configurations on Windows, but differs in many others. In Windows, the availability form of clustering, called a Windows Server failover cluster (WSFC), is built into the operating system, and the feature that enables the creation of a WSFC, failover clustering, is disabled by default. In Windows, availability groups (AGs) and failover cluster instances (FCIs) are built on top of a WSFC and share tight integration through the specific resource DLL that SQL Server provides. This tightly coupled solution is possible largely because everything comes from one vendor.

On Linux, every supported distribution has Pacemaker available, but each distribution can customize it and can have slightly different implementations and versions. Some of these differences are reflected in the instructions in this article. The clustering layer is open source, so even though it ships with the distributions, it isn't tightly integrated in the way a WSFC is on Windows. This is why Microsoft provides mssql-server-ha, so that SQL Server and the Pacemaker stack can provide an experience for AGs and FCIs that is close to, but not exactly the same as, the one on Windows.

For full Pacemaker documentation, including a more in-depth explanation of each component and complete reference information, see the RHEL and SLES documentation.

Note

Starting with SQL Server 2025 (17.x), SUSE Linux Enterprise Server (SLES) isn't supported.

For more information about the whole stack, also see the official Pacemaker documentation page on the ClusterLabs site.

Pacemaker concepts and terminology

This section describes the common concepts and terminology for a Pacemaker implementation.

Node

A node is a server participating in the cluster. A Pacemaker cluster natively supports up to 16 nodes. This number can be exceeded if Corosync isn't running on the additional nodes, but Corosync is required for SQL Server. Therefore, the maximum number of nodes a cluster can have for any SQL Server-based configuration is 16. This is the Pacemaker limit, and has nothing to do with the maximum limits for AGs or FCIs imposed by SQL Server.

Resource

Both a WSFC and a Pacemaker cluster have the concept of a resource. A resource is specific functionality that runs in the context of the cluster, such as a disk or an IP address. For example, under Pacemaker both FCI and AG resources can be created. This is similar to a WSFC, where you see a SQL Server resource for an FCI or an AG resource when configuring an AG, but it isn't exactly the same because of the underlying differences in how SQL Server integrates with Pacemaker.

Pacemaker has standard and clone resources. Clone resources are ones that run simultaneously on all nodes. An example is an IP address that runs on multiple nodes for load balancing purposes. Any resource created for an FCI uses a standard resource, because only one node can host an FCI at any given time.

Note

This article contains references to the term slave, a term Microsoft no longer uses. When the term is removed from the software, we'll remove it from this article.

When an AG is created, it requires a specialized form of clone resource called a multi-state resource. While an AG has only one primary replica, the AG itself runs across all nodes that it's configured to work on, and can potentially allow things such as read-only access. Because this is a "live" use of the node, the resources have the concept of two states: Promoted (formerly Master) and Unpromoted (formerly Slave). For more information, see Multi-state resources: Resources that have multiple modes.

Resource groups/sets

Similar to roles in a WSFC, a Pacemaker cluster has the concept of a resource group. A resource group (called a set in SLES) is a collection of resources that function together and can fail over from one node to another as a single unit. Resource groups can't contain resources that are configured as Promoted or Unpromoted, so they can't be used for AGs. A resource group can be used for FCIs, but it's generally not a recommended configuration.

Constraints

WSFCs have various parameters for resources, as well as things like dependencies, which tell the WSFC about a parent/child relationship between two different resources. A dependency is just a rule that tells the WSFC which resource needs to be online first.

A Pacemaker cluster doesn't have the concept of dependencies, but it does have constraints. There are three kinds of constraints: colocation, location, and ordering.

- A colocation constraint enforces whether or not two resources should run on the same node.

- A location constraint tells the Pacemaker cluster where a resource can (or can't) run.

- An ordering constraint tells the Pacemaker cluster the order in which the resources should start.

Note

Colocation constraints aren't required for resources in a resource group, because all of those resources are seen as a single unit.
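To make the idea of an ordering constraint more concrete, the following Python sketch derives one valid start order from a list of "start first, then second" pairs. It only illustrates the concept and is not how Pacemaker is implemented or configured. The resource names (`shared_disk`, `virtual_ip`, `sql_fci`) and the `start_order` helper are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical resources. Each pair reads "start the first, then the second".
ordering_constraints = [
    ("shared_disk", "sql_fci"),
    ("virtual_ip", "sql_fci"),
]


def start_order(constraints):
    """Return one start order that satisfies every (first, then) pair."""
    sorter = TopologicalSorter()
    for first, then in constraints:
        # 'then' may only start after 'first', so 'first' is its predecessor.
        sorter.add(then, first)
    return list(sorter.static_order())


order = start_order(ordering_constraints)
print(order)  # the disk and the IP address appear before sql_fci
```

In a real cluster, these rules are declared with the distribution's cluster management tooling, and Pacemaker works out the start order itself.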

Quorum, fence agents, and STONITH

Quorum under Pacemaker is conceptually similar to quorum in a WSFC. The whole purpose of a cluster's quorum mechanism is to ensure that the cluster stays up and running. Both a WSFC and the HA add-ons for the Linux distributions have the concept of voting, where each node counts toward quorum. You want a majority of the votes to be up. Otherwise, in a worst-case scenario, the cluster is shut down.

Unlike a WSFC, there's no witness resource to work with quorum. As with a WSFC, the goal is to keep the number of voters odd. Quorum configuration has different considerations for AGs than for FCIs.
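The arithmetic behind the "keep the number of voters odd" guidance can be sketched in a few lines of Python. This is a simplified model that assumes one vote per node and a strict majority rule. The function names are hypothetical, and a real cluster's quorum behavior depends on its Corosync configuration.

```python
def has_quorum(total_votes: int, votes_online: int) -> bool:
    """True when a strict majority of all configured votes is online."""
    return votes_online > total_votes // 2


def tolerated_failures(total_votes: int) -> int:
    """Largest number of voters that can be lost while keeping a majority."""
    return (total_votes - 1) // 2


for voters in (2, 3, 4, 5):
    print(voters, "voters tolerate", tolerated_failures(voters), "failure(s)")
```

Under these assumptions, a fourth voter tolerates no more failures than three voters do, which is why an even count adds cost without adding resilience.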

WSFCs monitor the status of the participating nodes and handle them when a problem occurs. Later versions of WSFC offer features such as quarantining a node that is misbehaving or unavailable (the node isn't on, network communication is down, and so on). On the Linux side, this type of functionality is provided by a fence agent. The concept is sometimes referred to as fencing. However, fence agents are generally specific to the deployment, and are often provided by hardware vendors and by some software vendors, such as those who provide hypervisors. For example, VMware provides a fence agent that can be used for Linux VMs virtualized with vSphere.

Quorum and fencing tie into another concept called STONITH, or Shoot the Other Node in the Head. STONITH is required for a supported Pacemaker cluster on all Linux distributions. For more information, see Fencing in a Red Hat High Availability Cluster (RHEL).

corosync.conf

The corosync.conf file contains the configuration of the cluster. It's located in /etc/corosync. In the course of normal day-to-day operations, this file should never need to be edited if the cluster is set up properly.

Cluster log location

Log locations for Pacemaker clusters differ depending on the distribution.

- RHEL and SLES: /var/log/cluster/corosync.log

- Ubuntu: /var/log/corosync/corosync.log

To change the default logging location, modify corosync.conf.
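As a rough illustration of how the default paths and an override in corosync.conf relate, the following Python sketch returns the log path for a distribution. The defaults come from the list above. The assumption that an override appears as a `logfile:` entry inside the `logging` section should be checked against the corosync.conf man page for your distribution. The sample configuration text, the `log_path` helper, and the custom path are hypothetical.

```python
import re

# Default log paths as listed in this article, keyed by distribution.
DEFAULT_LOG_PATHS = {
    "RHEL": "/var/log/cluster/corosync.log",
    "SLES": "/var/log/cluster/corosync.log",
    "Ubuntu": "/var/log/corosync/corosync.log",
}


def log_path(distribution: str, corosync_conf_text: str = "") -> str:
    """Prefer a 'logfile:' entry in the config text, else the default path."""
    # Assumption: the override is written as "logfile: <path>" on its own line.
    match = re.search(r"^\s*logfile:\s*(\S+)", corosync_conf_text, re.MULTILINE)
    if match:
        return match.group(1)
    return DEFAULT_LOG_PATHS[distribution]


# Hypothetical configuration fragment used only for this example.
sample_conf = """
logging {
    to_logfile: yes
    logfile: /var/log/custom/corosync.log
}
"""

print(log_path("Ubuntu"))
print(log_path("RHEL", sample_conf))
```

A script like this could help a support runbook find the right file on mixed estates, but it reads configuration text only and never edits corosync.conf.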

Plan Pacemaker clusters for SQL Server

This section discusses the important planning points for a Pacemaker cluster.

Virtualize Linux-based Pacemaker clusters for SQL Server

Using virtual machines to deploy Linux-based SQL Server AGs and FCIs is covered by the same rules as for their Windows-based counterparts. Microsoft provides a base set of rules for the supportability of virtualized SQL Server deployments in Support policy for Microsoft SQL Server products that are running in a hardware virtualization environment. Different hypervisors, such as Microsoft's Hyper-V and VMware's ESXi, might have their own variations on top of that because of differences in the platforms themselves.

When it comes to AGs and FCIs under virtualization, ensure that anti-affinity is set for the nodes of a given Pacemaker cluster. When configured for high availability in an AG or FCI configuration, the VMs hosting SQL Server should never run on the same hypervisor host. For example, if a two-node FCI is deployed, there need to be at least three hypervisor hosts so that one of the VMs hosting a node has somewhere to go if a host fails, especially if you use features like Live Migration or vMotion.
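The anti-affinity rule and the "at least three hosts for two nodes" example can be expressed as two simple checks. The following Python sketch assumes you can obtain a mapping of cluster node VMs to hypervisor hosts from your own inventory. The VM names, host names, and function names are all hypothetical, and the sketch doesn't call any hypervisor API.

```python
from collections import Counter


def anti_affinity_violations(placement: dict[str, str]) -> list[str]:
    """Return hypervisor hosts that run more than one cluster node VM."""
    counts = Counter(placement.values())
    return sorted(host for host, count in counts.items() if count > 1)


def has_spare_host(placement: dict[str, str], all_hosts: list[str]) -> bool:
    """True if at least one hypervisor host runs no cluster node VM."""
    return len(set(all_hosts) - set(placement.values())) >= 1


# Hypothetical two-node FCI spread over three hypervisor hosts.
hosts = ["hv1", "hv2", "hv3"]
placement = {"sqlnode1": "hv1", "sqlnode2": "hv2"}

print(anti_affinity_violations(placement))  # no host is shared
print(has_spare_host(placement, hosts))     # hv3 is free for a failed-over VM
```

In practice, the hypervisor's own anti-affinity rules should enforce the placement. A check like this is only useful as an audit of the current state.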

For Hyper-V documentation, see Using Guest Clustering for High Availability.

Networking

Unlike a WSFC, Pacemaker doesn't require a dedicated name or at least one dedicated IP address for the Pacemaker cluster itself. AGs and FCIs require IP addresses (see the documentation for each for more information), but not names, because there's no network name resource. SLES does allow the configuration of an IP address for administration purposes, but it isn't required, as shown in Create the Pacemaker cluster.

Like a WSFC, Pacemaker prefers redundant networking, meaning distinct network cards (NICs, or pNICs for physical ones) with individual IP addresses. In terms of the cluster configuration, each IP address has what is known as its own ring. However, as with WSFCs today, many implementations are virtualized or run in the public cloud, where only a single virtualized NIC (vNIC) is presented to the server. If all pNICs and vNICs are connected to the same physical or virtual switch, there's no true redundancy at the network layer, so configuring multiple NICs gives the virtual machine only the appearance of redundancy. Network redundancy is usually built into the hypervisor for virtualized deployments, and is definitely built into the public cloud.

One difference between Pacemaker and a WSFC when using multiple NICs is that Pacemaker allows multiple IP addresses on the same subnet, whereas a WSFC doesn't. For more information on multiple subnets and Linux clusters, see the article Configure multiple-subnet Always On availability groups and failover cluster instances.

Quorum and STONITH

Quorum configuration and requirements are specific to AG or FCI deployments of SQL Server.

STONITH is required for a supported Pacemaker cluster. Use the documentation from the distribution to configure STONITH. One example is Storage-based Fencing for SLES. There's also a STONITH agent for VMware vCenter for ESXi-based solutions. For more information, see Stonith Plugin Agent for VMware VM VCenter SOAP Fencing (Unofficial).

Interoperability

This section describes how a Linux-based cluster can interact with a WSFC or with other distributions of Linux.

WSFC

Currently, there's no direct way for a WSFC and a Pacemaker cluster to work together. This means that there's no way to create an AG or FCI that works across a WSFC and Pacemaker. However, there are two interoperability solutions, both of which are designed for AGs. The only way an FCI can participate in a cross-platform configuration is as an instance in one of these two scenarios:

- An AG with a cluster type of None. For more information, see the Windows availability groups documentation.

- A distributed AG, which is a special type of availability group that allows two different AGs to be configured as their own availability group. For more information on distributed AGs, see the documentation Distributed availability groups.

Other Linux distributions

On Linux, all nodes of a Pacemaker cluster must be on the same distribution. For example, a RHEL node can't be part of a Pacemaker cluster that has a SLES node. The main reason was stated previously: the distributions might have different versions and functionality, so things might not work properly. Mixing distributions has the same story as mixing WSFCs and Linux: use a cluster type of None or distributed AGs.
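Two of the planning rules in this article, the 16-node limit and the single-distribution requirement, lend themselves to a quick pre-deployment check. The following Python sketch assumes a hand-maintained inventory that maps node names to distribution names. The node names and the `validate_cluster` helper are hypothetical, and the check doesn't query a live cluster.

```python
MAX_NODES = 16  # Pacemaker node limit stated in the Node section


def validate_cluster(nodes: dict[str, str]) -> list[str]:
    """Return a list of planning problems; an empty list means none found.

    `nodes` maps a node name to its distribution name (both hypothetical).
    """
    problems = []
    if len(nodes) > MAX_NODES:
        problems.append(f"{len(nodes)} nodes exceeds the limit of {MAX_NODES}")
    distributions = sorted(set(nodes.values()))
    if len(distributions) > 1:
        problems.append("mixed distributions: " + ", ".join(distributions))
    return problems


good = {"node1": "RHEL", "node2": "RHEL", "node3": "RHEL"}
mixed = {"node1": "RHEL", "node2": "SLES"}

print(validate_cluster(good))
print(validate_cluster(mixed))
```

A fuller check would also compare Pacemaker and Corosync package versions across nodes, which this sketch leaves out.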